Recurrent Neural Network with Sequential Weight Sharing

Explore how a recurrent neural network utilizes sequential weight sharing to process foveated input images, select actions, and achieve better generalization. The network aims to reduce parameters, input space, and computational resources while maintaining biological compatibility. Various methods, including LSTM usage and cross-entropy training, are implemented. Challenges such as unclear experiment topology and training epochs are addressed, highlighting the network's potential in image processing tasks.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

J. M. Allred and K. Roy, "Convolving over time via recurrent connections for sequential weight sharing in neural networks," 2017 International Joint Conference on Neural Networks (IJCNN), Anchorage, AK, 2017, pp. 4444-4450. doi: 10.1109/IJCNN.2017.7966419

J. M. Allred and K. Roy, "Convolving over time via recurrent connections for sequential weight sharing in neural networks," 2017 International Joint Conference on Neural Networks (IJCNN), Anchorage, AK, 2017, pp. 4444-4450. doi: 10.1109/IJCNN.2017.7966419

Broad strokes A recurrent network recieves a foveated input image It processes and stores information in its hidden states A new location for the centre of the 'glimpse' is output An action is selected This repeats until the end of the task

Motivation Reduce the number of network parameters Reduce the input space fast compute, less resource Biological compatability Cropping an image can reduce task difficulty Network complexity not dependent on input size

Methods Location is drawn from distribution, mean from network and fixed variance - discrete failed Action is task dependent BP and RL to train the network Core network uses LSTMs Rest is fully connected linear rectifier

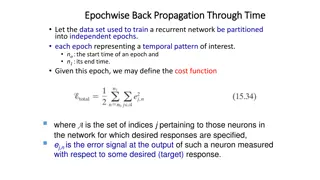

Maths POMDP Cross-entropy was used to train the network when performing classification

Comments Talk about better generalisation compared to other but no comparison Unclear topology for each experiment Unknown number of epochs to train/converge

Feedback What can be improved? More or less detail? Any particular snacks? Length? Topic? More discussion, less presenting? Journal club name?

![textbook$ What Your Heart Needs for the Hard Days 52 Encouraging Truths to Hold On To [R.A.R]](/thumb/9838/textbook-what-your-heart-needs-for-the-hard-days-52-encouraging-truths-to-hold-on-to-r-a-r.jpg)