OpenMP Barriers and Locks in Parallel Programming

Exploring the concepts of OpenMP barriers and locks in parallel programming, this discussion covers the importance of synchronization through barriers, the use of lock variables for finer control over synchronization, and examples like the Dining Philosophers problem. Learn how these primitives facilitate parallel decomposition and enhance the efficiency of parallel algorithms.

Uploaded on Oct 09, 2024 | 4 Views

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

Parallel Programming Barriers, Locks, and Continued Discussion of Parallel Decomposition David Monismith Jan. 27, 2015 Based upon notes from the LLNL OpenMP Tutorial and from Introduction to Parallel Computing by Grama, Karypis, Kumar, and Gupta

Last Time Parallel Decomposition Review of OpenMP Directives parallel for shared private reduction

This Time OpenMP Barriers OpenMP Locks An example with the Dining Philosophers problem More decompositions Exploratory Decomposition Speculative Decomposition Tasks and Interactions Load balancing Handling overhead Parallel Algorithm Models

OpenMP Barriers A barrier is a parallel programming primitive that forces all threads to wait until every thread in the process has reached the barrier. Barriers are used to enforce synchronization within programs. OpenMP Barriers are implemented with the following syntax: #pragma omp barrier Important barriers cannot be used in a #pragma omp parallel for, they may only be used within a #pragma omp parallel block

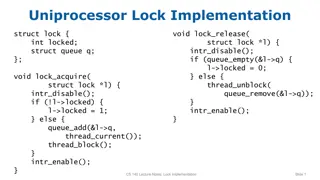

OpenMP Lock Variables Lock variables allow for finer granularity of control over synchronization In OpenMP the lock variable type is omp_lock_t omp_lock_t myLock; Locks must be initialized using the omp_init_lock function. omp_init_lock(&myLock); After initializing a lock, the lock may be acquired using the omp_set_lock function and a lock may be released using the omp_unset_lock function. omp_set_lock(&myLock); omp_unset_lock(&myLock); Locks must be destroyed using the omp_destroy_lock function. omp_destroy_lock(&myLock);

Example: Dining Philosophers Five students eating at Joy Wok with chopsticks Only five chopsticks available. A student needs two chopsticks to eat. Chopsticks are situated between students. Students can't move from their positions at the table. Students alternate between thinking and eating.

Example: Dining Philosophers (Contd..) \ (S1) | (S2) / (S3) Rice (S4) / (S5) \ A possible solution to deadlock: Students always reach right first. (S1)-Req->[R2]-Ac->(S2)-Req->[R3]-Ac->(S3)-Req->[R4]-Ac->(S4)-Req-+ ^ | | | +--------------Requests----(S5)<----Acquired---[R5]<--------------+

Philosopher Pseudocode 1. Check if the index value of the left chopstick is less than the index value of the right chopstick. If true, do 2 then 3. Otherwise, do 3 then 2. 2. Attempt to acquire the left chopstick 3. Attempt to acquire the right chopstick . 4. Eat for specified time period 5. Release chopsticks 6. Think for a specified time period

Review of OpenMP Dining Philosophers See the OpenMP Dining Philosophers Example on the course website. Be sure to make note of the following items OpenMP Parallel Section OpenMP Barriers OpenMP Locks Deadlock Prevention by disallowing a cycle in the resource allocation graph

Exploratory Decomposition Exploratory Decomposition used to decompose problems corresponding to a solution space that must be searched. Partition search space into smaller parts. Search each part concurrently until solutions are found. Examples 15 puzzle Chess AI (Tree search) Checkers We will return to this later when we investigate parallel depth-first search.

Speculative Decomposition Speculative decomposition used when a program may take one of many computationally significant branches based upon the computation that preceded it. Discrete Event Simulations are an example of such types of computations and will also be covered in following lectures.

Task Properties Four properties play a major role in mapping tasks Task Generation Task Size Prior knowledge of sizes Amount of data associated with each task

Task Creation Tasks (i.e. threads) may be created dynamically or statically Static creation all tasks are known before beginning an algorithm Example matrix multiplication and LU factorization Dynamic generation tasks and a dependency graph may not be available before starting an algorithm Examples include the 15-puzzle, chess, and other exploratory decomposition algorithms

Task Sizes Uniform time complexity (and often data complexity) is similar across tasks. Example matrix multiplication Non-uniform significant variation in time and space complexity across tasks. Example performing a parameter sweep Prior knowledge of the sizes of tasks can drastically affect how tasks are handled and load balanced. Similarly, prior knowledge of the amount of data to be processed by each task can affect such handling.

Task Interaction Static vs. Dynamic Static - Interaction pattern for each task occurs at predetermined times/intervals Dynamic time of execution of tasks cannot be determined prior to execution of the algorithm Regular vs. Irregular Regular structure can be exploited for efficient computation (e.g. matrix multiplication) Irregular no such pattern exists (e.g. sparse matrix-vector multiplication) Read-only vs. Read-write One-way vs. two-way One-way one task provides work other tasks without being interrupted (e.g. read-only data) Two-way - data/work needed by tasks is provided by another task and access is coordinated by the pair/group of tasks (e.g. producer-consumer)

Load Balancing Overhead task idle time, context switching time, and time spent initiating interactions with other tasks Want to reduce the amount of time processes and threads spend interacting with each other Want to reduce idle time Static Mapping distribute tasks among processes or threads prior to running the algorithm E.g. Array_length/num_threads Dynamic Mapping distribute work during algorithm execution Task sizes may be unknown and may cause load imbalances Data may need to be moved between processes to balance the load

Data Partitioning for Static Mapping Array Distribution Assume tasks are associated with data such that distributing data is equivalent to distributing tasks Block Distribution Assign uniform portions of an array to different processes Example: assign n/p rows of an n by n matrix to each of p processes Example: assign n/p blocks of size n/sqrt(p) by n/sqrt(p) of an n by n matrix to each of p processes Useful to ensure data reuse in cache Cyclic and Block Cyclic Distribution Partition an array into many more blocks than available processes Assign partitions and associated tasks to processes in a round robin fashion so each process gets several non-adjacent blocks Used to reduce idling in operations such as LU factorization to ensure each process gets an equal sampling of a data structure

Other Mapping and Partitioning Schemes Randomized Block Distributions Graph Partitioning Task Partitioning Hierarchical Mapping All are somewhat more complex and will be discussed at a later date

Dynamic Load Balancing Centralized All executable tasks are maintained in a common data structure. Process designated to manage pool of available tasks is called the master. Worker processes are called slaves. Processes that have no work to do take work from the master. Newly generated tasks are added to the data structure. Example: processes could process small chunks of data and request more to process once they become idle Distributed Executable tasks are divided between processes Allow processes to send and receive work from any other process Important to take care in how much work is transferred, how often, how processes are paired, and how work transfer is initiated (e.g. by sender or receiver)

Controlling Overhead Maximize data locality Promote and maximize use of local data or recently fetched memory (i.e. cache lines) Use row-major operations in C and column-major in Fortran Minimize data exchange volume Maximize temporal data locality Make as many consecutive references to the same data as possible Example: Use block operations to perform matrix multiplication Store intermediate results in local data, and perform shared data access only to store the final result Minimize frequency of interactions Restructure your algorithm so that shared data are accessed and used in large chunks Minimize contention Avoid having multiple processes access the same resources at the same time Example: sending multiple messages to the same process at the same time, outputting data from multiple processes to one file at the same time, etc. Overlap computation and interaction Perform computations while waiting for shared data or messages to arrive

Controlling Overhead Replicate data or computations Replicate copies of commonly used data structures on each process as memory permits. This avoids communication overhead between processes, especially if the data structures are read-only. Additionally, performing redundant computations may cost less time than performing message-passing. Use carefully as appropriate Use optimized collective interaction operations Broadcast, All-to-All, and other shared data operations have been implemented in a highly optimized fashion in the MPI library. We will make use of such functions later in the course.

Parallel Algorithm Models Data Parallelism Task Graph Model Work Pool Model Master-Slave (i.e. Client-Server) Producer-Consumer