Modeling CPU-GPU Systems with Queueing Theory

An examination of how effective GPGPUs are in CPU-GPU systems: their architectural limitations, the parallelism they expose, and approaches to modeling them mathematically, covering an OpenCL model, a Kuck diagram, and M/M/1/K queueing equations.

Presentation Transcript

Queueing Theory Modeling of a CPU-GPU System
September 15, 2010
Lindsay B. H. May, Ph.D., Systems Engineer

Problem/Goals

GPGPUs have been touted as the solution to the loss of the AltiVec engine in PowerPCs in the embedded space. We set out to answer a few questions:
- Are GPGPUs all that they are purported to be?
- What are some of the defining architectural limitations?
- We know they're good for graphics, but how well do they map into our problem space?
- Is there a discernible task-level/data-level parallelism line defined by the architecture?
- Is there a reasonable mathematical modeling approach to this problem?

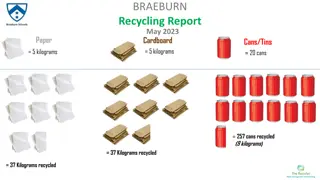

OpenCL Model, Kuck Diagram, and Queueing Model

[Figure, three panels. OpenCL model: a CPU connected over PCIe to a compute device with global/constant memory and a data cache; compute units 1 to N, each with local memory and work items 1 to M that have private memory. Kuck diagram: GPU global memory and GPU shared memory, shared memories SM1, SM2, SM3 linked by networks SM net1, SM net2, SM net3, memories M0 and processors P01 to P0M; annotated "depends on shader clock rate". Queueing model: Station 1, Station 2, Station 3.]
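The queueing panel reduces the CPU-GPU path to three stations in series. As a first-cut illustration (not the deck's model, which uses finite queues with blocking), the sketch below treats the stations as an open tandem Jackson network: Poisson arrivals, exponential service, and infinite buffers, so each station behaves as an independent M/M/1 queue. The class and function names and all service rates are hypothetical; the transcript gives no numbers. In the figure, a station whose rate "depends on shader clock rate" would be modeled by scaling its `service_rate`.

```python
from dataclasses import dataclass


@dataclass
class Station:
    name: str
    service_rate: float  # jobs per second (hypothetical value)


def tandem_metrics(arrival_rate, stations):
    """Per-station utilization, total mean jobs in system, and mean response time.

    Assumes Poisson arrivals, exponential service and infinite buffers at every
    station (Jackson's theorem), so each station is an independent M/M/1 queue.
    """
    utilizations = {}
    total_jobs = 0.0
    for s in stations:
        rho = arrival_rate / s.service_rate
        if rho >= 1.0:
            raise ValueError(f"{s.name} is saturated (rho = {rho:.2f})")
        utilizations[s.name] = rho
        total_jobs += rho / (1.0 - rho)  # mean number at an M/M/1 station
    response_time = total_jobs / arrival_rate  # Little's law
    return utilizations, total_jobs, response_time


def bottleneck(stations):
    """The slowest station caps the sustainable throughput of the whole line."""
    return min(stations, key=lambda s: s.service_rate)


if __name__ == "__main__":
    line = [Station("station1", 100.0), Station("station2", 250.0), Station("station3", 400.0)]
    print(tandem_metrics(50.0, line))
    print(bottleneck(line).name)
```

With infinite buffers the stations never block one another; once a buffer is finite, an upstream station can stall while the next one is full, which is exactly the effect the starred rates in the next slide are meant to capture.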

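Queueing Equations (M/M/1/K)

Arrival rate $\lambda$, service rate $\mu$, queue size $K_q$, and system capacity $K = K_q + 1$ (the waiting positions plus the job in service).

$$\rho = \frac{\lambda}{\mu}, \qquad P_0 = \frac{1-\rho}{1-\rho^{K+1}}, \qquad P_K = \frac{(1-\rho)\,\rho^{K}}{1-\rho^{K+1}}$$

$$N = \frac{\rho^*}{1-\rho^*} - \frac{(K+1)\,(\rho^*)^{K+1}}{1-(\rho^*)^{K+1}}, \qquad \rho^* = \frac{\lambda^*}{\mu^*} \neq 1; \qquad N = \frac{K}{2} \text{ when } \rho^* = 1$$

$\lambda^*$ and $\mu^*$ include the effects of blocking.

GPGPUs for HPEC: Hype or Hope?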
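The M/M/1/K formulas can be checked numerically. Below is a minimal Python sketch that assumes a single, isolated M/M/1/K station; the function name `mm1k_metrics` is ours. It evaluates $P_0$, $P_K$, and $N$ from $\lambda$, $\mu$, and $K$, and uses the $\rho = 1$ limit $N = K/2$. The effective arrival rate $\lambda(1 - P_K)$ and the Little's-law response time are standard single-queue results added here for illustration. They are not the deck's $\lambda^*$ and $\mu^*$, which also account for blocking between stations and are not defined in the transcript. If those starred rates are known, they can be passed in place of $\lambda$ and $\mu$.

```python
def mm1k_metrics(lam, mu, k):
    """Steady-state metrics of an M/M/1/K queue.

    k is the system capacity K = Kq + 1 (waiting room plus the job in service).
    """
    if lam <= 0 or mu <= 0 or k < 1:
        raise ValueError("need lam > 0, mu > 0 and k >= 1")
    rho = lam / mu
    if abs(rho - 1.0) < 1e-12:
        # Limit rho -> 1: all K + 1 states are equally likely.
        p0 = 1.0 / (k + 1)
        n = k / 2.0
    else:
        p0 = (1.0 - rho) / (1.0 - rho ** (k + 1))
        n = rho / (1.0 - rho) - (k + 1) * rho ** (k + 1) / (1.0 - rho ** (k + 1))
    pk = p0 * rho ** k          # probability an arrival finds the system full (blocked)
    lam_eff = lam * (1.0 - pk)  # rate of jobs actually admitted
    t = n / lam_eff             # mean time in system, by Little's law
    return {"rho": rho, "P0": p0, "PK": pk, "N": n, "lambda_eff": lam_eff, "T": t}


if __name__ == "__main__":
    # Kq = 1 waiting slot, so K = 2; lambda = 1, mu = 2.
    print(mm1k_metrics(1.0, 2.0, 2))
```

For $\lambda = 1$, $\mu = 2$, $K = 2$ the state probabilities are $4/7, 2/7, 1/7$, so $P_K = 1/7$ and $N = 4/7$, matching the closed form.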