Managing Large Graphs on Multi-Cores with Graph Awareness

This research discusses the challenges in managing large graphs on multi-core systems and introduces Grace, an in-memory graph management and processing system with optimizations for graph-specific and multi-core-specific operations. The system keeps the entire graph in memory in smaller parts and provides a C-style API for graph workloads. Graph-aware partitioning techniques are used to optimize graph placement and achieve better performance.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

Managing Large Graphs on Multi-Cores With Graph Awareness Vijayan, Ming, Xuetian, Frank, Lidong, Maya Microsoft Research

Motivation Tremendous increase in graph data and applications New class of graph applications that require real-time responses Even batch-processed workloads have strict time-constraints Multi-core revolution Default standards on most machines Large-scale multi-cores with terabytes of main memory Run workloads that are traditionally run on distributed systems Existing graph-processing systems lack support for both

A High-level Description of Grace Outline Grace is an in-memory graph management and processing system Overview Implements several optimizations Graph-specific Multi-core-specific Details of optimizations Details on transactions Supports snapshots and transactional updates on graphs Evaluation shows that optimizations help Grace run several times faster than other alternatives Subset of results

An Overview of Grace Keeps an entire graph in memory in smaller parts. v = GetVertex(Id) for (i=0; i<v.degree;i++) neigh=v.GetNeighbor(i) Iterative Programs (e.g., PageRank) Grace API Net Exposes C-style API for writing graph workloads, iterative workloads, and updates. RPC Graph and Multi-core Optimizations B A C E Design driven by two trends - Graph-specific locality - Partitionable and parallelizable workloads D Core 0 Core 1

Data Structures Vertex Log A B C Edge Log Edges of A Edges of B Edges of C A 0 B 1 C 2 Edge Pointer Array 0 1 1 1 Vertex Index Vertex Allocation Map Data Structures in a Partition

Graph-Aware Partitioning & Placement Partitioning and placement are they useful on a single machine? Yes, to take advantage of multi-cores and memory hierarchies Solve them using graph partitioning algorithms Divide a graph into sub-graphs, minimizing edge-cuts Grace provides an extensible library Graph-aware: heuristic-based, spectral partitioning, Metis Graph-agnostic: hash partitioning Achieve better layout by recursive graph partitioning Recursively run graph partition until a sub-graph can fit in a cache line Recompose all the sub-graphs to get the vertex layout

Platform for Parallel Iterative Computations Iterative computation platform implements bulk synchronous parallel model. Parallel computations Iteration 1 Propagate updates Barrier Iteration 2

Load Balancing and Updates Batching Problem1: overloaded partitions can affect performance Solution1: Load balancing is implemented by sharing a portion of vertices Problem2: Updates in arbitrary order can increase cache misses Solution2: Updates batching is implemented by - grouping updates by their destination part - Issuing updates in a round-robin fashion Barrier Cache line B D A C Part1 Core1 Part2 Core2 Part0 Core0

Transactions on Graphs Grace supports structural changes to a graph BeginTransaction() AddVertex(X) AddEdge(X, Y) EndTransaction() Transactions use snapshot isolation - Instantaneous snapshots using CoW techniques - CoW can affect careful memory layout!

Evaluation Graphs: - Web (v:88M, e:275M), sparse - Orkut (v:3M, e:223M), dense Workloads: - N-hop-neighbor queries, BFS, DFS, PageRank, Weakly- Connected Components, Shortest Path Architecture: - Intel Xeon-12 cores, 2 chips with 6 cores each - AMD Opteron-48 cores, 4 chips with 12 cores each Questions: - How well partitioning and placement work? - How useful are load balancing and updates batching? - How does Grace compare to other systems?

Partitioning and Placement Performance On Intel 12 30 PageRank Speedup 10 25 8 20 6 15 4 10 2 5 0 0 3 6 12 3 6 12 Orkut graph partitions Web graph partitions Hash Heuristic Metis Hash + Placement Heuristic + Placement Metis + Placement 1 2 3 Observation: Placing neighboring vertices close together improves Observation: Careful vertex arrangement works better when graph Observation: For smaller number of partitions, partition algorithm didn t make a big difference performance significantly partitioning is used for sparse graphs Reason: L1, L2, and L3 cache and Data-TLB misses are reduced Reason: graph partitioning puts neighbors under same part Reason: All the partitions fit within cores of single chip minimizing communication cost helping better placement

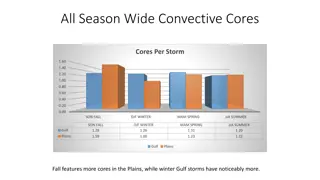

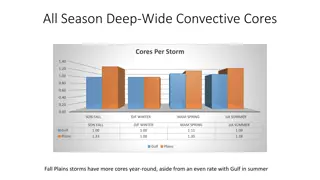

Load Balancing and Updates Batching On Intel PageRank Speedup 2 2 1 1 0 0 3 6 12 3 6 12 Orkut graph partitions Web graph partitions Load Balancing Load Balancing + Update Batching 1 2 Observation: Load balancing and updates batching didn t improve performance for web graph improvement for Orkut graph Observation: Batching updates gives better performance Reason: Sparse graphs can be partitioned better and there are fewer updates to send 1500 Reason: Updates batching reduces remote cache accesses Retired Load Balancing Load 1000 500 Load Balancing + Updates Batching 0 Sibling Core Remote Chip

Comparing Grace, BDB, and Neo4j 10000 1000 Running Time (s) 100 BDB 10 Neo4j 1 Grace 0.1

Conclusion Grace explores graph-specific and multi-core specific optimizations What worked and what didn t (in our setup; your mileage might differ) Careful vertex placement in memory gave good improvements Partitioning and updates batching worked in most cases, but not always Load balancing wasn t as useful