Linear Transformations and Matrices in Mathematics

Linear transformations play a crucial role in the study of vector spaces and matrices. They involve mapping vectors from one space to another while maintaining certain properties. This summary covers the introduction to linear transformations, the kernel and range of a transformation, matrices for linear transformations, and applications of linear transformations. Additionally, it explores the concept of preimage, image, and range in linear transformations with practical examples and graphical representations.


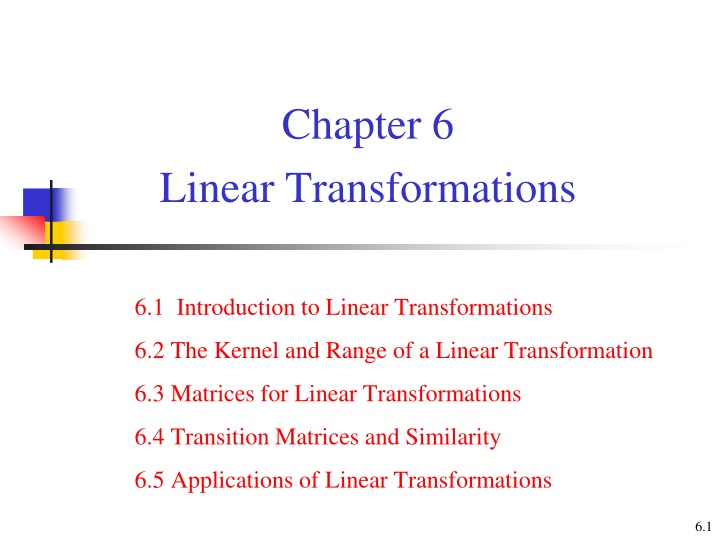

Chapter 6 Linear Transformations

6.1 Introduction to Linear Transformations
6.2 The Kernel and Range of a Linear Transformation
6.3 Matrices for Linear Transformations
6.4 Transition Matrices and Similarity
6.5 Applications of Linear Transformations

6.1 Introduction to Linear Transformations

A function $T$ that maps a vector space $V$ into a vector space $W$:

$$T: V \to W, \qquad V, W: \text{vector spaces}$$

$V$: the domain of $T$

$W$: the codomain of $T$

Image of $\mathbf{v}$ under $T$: If $\mathbf{v}$ is a vector in $V$ and $\mathbf{w}$ is a vector in $W$ such that $T(\mathbf{v}) = \mathbf{w}$, then $\mathbf{w}$ is called the image of $\mathbf{v}$ under $T$. (For each $\mathbf{v}$, there is only one $\mathbf{w}$.)

The range of $T$: the set of all images of vectors in $V$ (see the figure on the next slide).

The preimage of $\mathbf{w}$: the set of all $\mathbf{v}$ in $V$ such that $T(\mathbf{v}) = \mathbf{w}$. (For each $\mathbf{w}$, $\mathbf{v}$ may not be unique.)

(Figure: graphical representations of the domain, codomain, and range)

For example, $V$ is $R^3$, $W$ is $R^3$, and $T$ is the orthogonal projection of any vector $(x, y, z)$ onto the xy-plane, i.e. $T(x, y, z) = (x, y, 0)$. (We will use this example many times to explain abstract notions.)

Then the domain is $R^3$, the codomain is $R^3$, and the range is the xy-plane (a subspace of the codomain $R^3$).

$(2, 1, 0)$ is the image of $(2, 1, 3)$.

The preimage of $(2, 1, 0)$ is the set of all vectors $(2, 1, s)$, where $s$ is any real number.

Ex 1: A function from $R^2$ into $R^2$

$$T: R^2 \to R^2, \qquad \mathbf{v} = (v_1, v_2) \in R^2, \qquad T(v_1, v_2) = (v_1 - v_2,\; v_1 + 2v_2)$$

(a) Find the image of $\mathbf{v} = (-1, 2)$

(b) Find the preimage of $\mathbf{w} = (-1, 11)$

Sol:

(a) $\mathbf{v} = (-1, 2)$

$$T(\mathbf{v}) = T(-1, 2) = (-1 - 2,\; -1 + 2(2)) = (-3, 3)$$

(b) $T(\mathbf{v}) = \mathbf{w} = (-1, 11)$

$$T(v_1, v_2) = (v_1 - v_2,\; v_1 + 2v_2) = (-1, 11)$$

$$\Rightarrow\; v_1 - v_2 = -1, \qquad v_1 + 2v_2 = 11$$

$$\Rightarrow\; v_1 = 3, \quad v_2 = 4$$

Thus $\{(3, 4)\}$ is the preimage of $\mathbf{w} = (-1, 11)$.
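The image and preimage computations in Ex 1 can be checked with a short Python sketch. The helper name `preimage` and the use of Cramer's rule are illustrative choices, not part of the slides; the rule applies here only because the coefficient matrix of the system has nonzero determinant.

```python
from fractions import Fraction

def T(v):
    """The map from Ex 1: T(v1, v2) = (v1 - v2, v1 + 2*v2)."""
    v1, v2 = v
    return (v1 - v2, v1 + 2 * v2)

def preimage(w):
    """Solve v1 - v2 = w1, v1 + 2*v2 = w2 by Cramer's rule (hypothetical helper)."""
    w1, w2 = w
    det = 1 * 2 - (-1) * 1                    # determinant of [[1, -1], [1, 2]]
    v1 = Fraction(w1 * 2 - (-1) * w2, det)    # replace column 1 by w
    v2 = Fraction(1 * w2 - w1 * 1, det)       # replace column 2 by w
    return (v1, v2)

image = T((-1, 2))        # part (a)
pre = preimage((-1, 11))  # part (b)
print(image)
print(pre)
```

Because the determinant is nonzero, the preimage of every $\mathbf{w}$ under this particular $T$ is a single vector, which agrees with the one-element set found in part (b).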

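Linear transformation:

Let $V$ and $W$ be vector spaces. $T: V \to W$ is a linear transformation of $V$ into $W$ if the following two properties are true:

$$(1)\quad T(\mathbf{u} + \mathbf{v}) = T(\mathbf{u}) + T(\mathbf{v}), \quad \forall\, \mathbf{u}, \mathbf{v} \in V$$

$$(2)\quad T(c\mathbf{u}) = cT(\mathbf{u}), \quad \forall\, c \in R$$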

Notes:

(1) A linear transformation is said to be operation preserving (because the same result occurs whether the operations of addition and scalar multiplication are performed before or after the linear transformation is applied).

$$T(\mathbf{u} + \mathbf{v}) = T(\mathbf{u}) + T(\mathbf{v})$$

The addition on the left is addition in $V$; the addition on the right is addition in $W$.

$$T(c\mathbf{u}) = cT(\mathbf{u})$$

The scalar multiplication on the left is in $V$; the scalar multiplication on the right is in $W$.

(2) A linear transformation $T: V \to V$ from a vector space into itself is called a linear operator.

Ex 2: Verifying a linear transformation $T$ from $R^2$ into $R^2$

$$T(v_1, v_2) = (v_1 - v_2,\; v_1 + 2v_2)$$

Pf:

$\mathbf{u} = (u_1, u_2)$, $\mathbf{v} = (v_1, v_2)$: vectors in $R^2$; $c$: any real number

(1) Vector addition:

$$\mathbf{u} + \mathbf{v} = (u_1, u_2) + (v_1, v_2) = (u_1 + v_1,\; u_2 + v_2)$$

$$\begin{aligned}
T(\mathbf{u} + \mathbf{v}) &= T(u_1 + v_1,\; u_2 + v_2) \\
&= \big((u_1 + v_1) - (u_2 + v_2),\; (u_1 + v_1) + 2(u_2 + v_2)\big) \\
&= \big((u_1 - u_2) + (v_1 - v_2),\; (u_1 + 2u_2) + (v_1 + 2v_2)\big) \\
&= (u_1 - u_2,\; u_1 + 2u_2) + (v_1 - v_2,\; v_1 + 2v_2) \\
&= T(\mathbf{u}) + T(\mathbf{v})
\end{aligned}$$

(2) Scalar multiplication:

$$c\mathbf{u} = c(u_1, u_2) = (cu_1, cu_2)$$

$$\begin{aligned}
T(c\mathbf{u}) &= T(cu_1, cu_2) = (cu_1 - cu_2,\; cu_1 + 2cu_2) \\
&= c(u_1 - u_2,\; u_1 + 2u_2) \\
&= cT(\mathbf{u})
\end{aligned}$$

Therefore, $T$ is a linear transformation.

Ex 3: Functions that are not linear transformations

(a) $f(x) = \sin x$

$$\sin(x_1 + x_2) \ne \sin(x_1) + \sin(x_2)$$

$$\sin\!\left(\tfrac{\pi}{2} + \tfrac{\pi}{3}\right) \ne \sin\!\left(\tfrac{\pi}{2}\right) + \sin\!\left(\tfrac{\pi}{3}\right)$$

($f(x) = \sin x$ is not a linear transformation)

(b) $f(x) = x^2$

$$(x_1 + x_2)^2 \ne x_1^2 + x_2^2$$

$$(1 + 2)^2 \ne 1^2 + 2^2$$

($f(x) = x^2$ is not a linear transformation)

(c) $f(x) = x + 1$

$$f(x_1 + x_2) = x_1 + x_2 + 1$$

$$f(x_1) + f(x_2) = (x_1 + 1) + (x_2 + 1) = x_1 + x_2 + 2$$

$$f(x_1 + x_2) \ne f(x_1) + f(x_2)$$

($f(x) = x + 1$ is not a linear transformation, although it is a linear function)

In fact, $f(cx) \ne cf(x)$.
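The counterexamples in Ex 3 can also be found numerically. The sketch below tests the two defining properties on a handful of sample points; the function name `looks_linear`, the sample values, and the tolerance are all assumptions made for illustration. A failed check disproves linearity, while a passed check on finitely many samples is only evidence, not a proof.

```python
import math

def looks_linear(f, samples, scalars, tol=1e-9):
    """Return False as soon as additivity or homogeneity fails on the samples."""
    for x1 in samples:
        for x2 in samples:
            # Additivity: f(x1 + x2) should equal f(x1) + f(x2).
            if abs(f(x1 + x2) - (f(x1) + f(x2))) > tol:
                return False
        for c in scalars:
            # Homogeneity: f(c * x1) should equal c * f(x1).
            if abs(f(c * x1) - c * f(x1)) > tol:
                return False
    return True

samples = [-2.0, 0.5, 1.0, 3.0]   # arbitrary test points
scalars = [-1.0, 0.0, 2.5]        # arbitrary test scalars

results = {
    "sin x": looks_linear(math.sin, samples, scalars),
    "x^2": looks_linear(lambda x: x * x, samples, scalars),
    "x + 1": looks_linear(lambda x: x + 1, samples, scalars),
    "3x": looks_linear(lambda x: 3 * x, samples, scalars),
}
print(results)
```

The map $x \mapsto 3x$ is included only as a contrast case that does satisfy both properties.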

Notes: Two uses of the term linear.

(1) $f(x) = x + 1$ is called a linear function because its graph is a line.

(2) $f(x) = x + 1$ is not a linear transformation from a vector space $R$ into $R$ because it preserves neither vector addition nor scalar multiplication.

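Zero transformation:

$$T: V \to W, \qquad T(\mathbf{v}) = \mathbf{0}, \quad \forall\, \mathbf{v} \in V$$

Identity transformation:

$$T: V \to V, \qquad T(\mathbf{v}) = \mathbf{v}, \quad \forall\, \mathbf{v} \in V$$

Theorem 6.1: Properties of linear transformations

Let $T: V \to W$ be a linear transformation and $\mathbf{u}, \mathbf{v} \in V$.

(1) $T(\mathbf{0}) = \mathbf{0}$ ($T(c\mathbf{v}) = cT(\mathbf{v})$ for $c = 0$)

(2) $T(-\mathbf{v}) = -T(\mathbf{v})$ ($T(c\mathbf{v}) = cT(\mathbf{v})$ for $c = -1$)

(3) $T(\mathbf{u} - \mathbf{v}) = T(\mathbf{u}) - T(\mathbf{v})$ ($T(\mathbf{u} + (-\mathbf{v})) = T(\mathbf{u}) + T(-\mathbf{v})$ and property (2))

(4) If $\mathbf{v} = c_1\mathbf{v}_1 + c_2\mathbf{v}_2 + \cdots + c_n\mathbf{v}_n$, then

$$T(\mathbf{v}) = T(c_1\mathbf{v}_1 + c_2\mathbf{v}_2 + \cdots + c_n\mathbf{v}_n) = c_1T(\mathbf{v}_1) + c_2T(\mathbf{v}_2) + \cdots + c_nT(\mathbf{v}_n)$$

(Iteratively using $T(\mathbf{u} + \mathbf{v}) = T(\mathbf{u}) + T(\mathbf{v})$ and $T(c\mathbf{v}) = cT(\mathbf{v})$)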

Ex 4: Linear transformations and bases

Let $T: R^3 \to R^3$ be a linear transformation such that

$$T(1, 0, 0) = (2, -1, 4), \qquad T(0, 1, 0) = (1, 5, -2), \qquad T(0, 0, 1) = (0, 3, 1)$$

Find $T(2, 3, -2)$.

Sol:

$$(2, 3, -2) = 2(1, 0, 0) + 3(0, 1, 0) - 2(0, 0, 1)$$

According to the fourth property of Theorem 6.1, $T(c_1\mathbf{v}_1 + c_2\mathbf{v}_2 + \cdots + c_n\mathbf{v}_n) = c_1T(\mathbf{v}_1) + c_2T(\mathbf{v}_2) + \cdots + c_nT(\mathbf{v}_n)$, so

$$\begin{aligned}
T(2, 3, -2) &= 2T(1, 0, 0) + 3T(0, 1, 0) - 2T(0, 0, 1) \\
&= 2(2, -1, 4) + 3(1, 5, -2) - 2(0, 3, 1) \\
&= (7, 7, 0)
\end{aligned}$$
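Ex 4 relies on the fact that a linear transformation is determined by the images of a basis. A minimal Python sketch of that computation follows; the function name `apply_from_basis_images` is hypothetical, and it assumes the basis is the standard basis so that the coordinates of $\mathbf{v}$ are its own entries.

```python
def apply_from_basis_images(images, v):
    """Compute T(v) = sum_i v[i] * T(e_i), using property (4) of Theorem 6.1."""
    m = len(images[0])
    result = [0] * m
    for coeff, image in zip(v, images):
        for k in range(m):
            result[k] += coeff * image[k]
    return tuple(result)

# Images of the standard basis vectors e1, e2, e3, as given in Ex 4.
images = [(2, -1, 4), (1, 5, -2), (0, 3, 1)]

value = apply_from_basis_images(images, (2, 3, -2))
print(value)
```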

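Ex 5: A linear transformation defined by a matrix

The function $T: R^2 \to R^3$ is defined as

$$T(\mathbf{v}) = A\mathbf{v} = \begin{bmatrix} 3 & 0 \\ 2 & 1 \\ -1 & -2 \end{bmatrix} \begin{bmatrix} v_1 \\ v_2 \end{bmatrix}$$

(a) Find $T(\mathbf{v})$, where $\mathbf{v} = (2, -1)$

(b) Show that $T$ is a linear transformation from $R^2$ into $R^3$

Sol:

(a) $\mathbf{v} = (2, -1)$ is a vector in $R^2$, and its image is a vector in $R^3$:

$$T(\mathbf{v}) = A\mathbf{v} = \begin{bmatrix} 3 & 0 \\ 2 & 1 \\ -1 & -2 \end{bmatrix} \begin{bmatrix} 2 \\ -1 \end{bmatrix} = \begin{bmatrix} 6 \\ 3 \\ 0 \end{bmatrix}$$

$$\therefore\; T(2, -1) = (6, 3, 0)$$

(b) $T(\mathbf{u} + \mathbf{v}) = A(\mathbf{u} + \mathbf{v}) = A\mathbf{u} + A\mathbf{v} = T(\mathbf{u}) + T(\mathbf{v})$ (vector addition)

$T(c\mathbf{u}) = A(c\mathbf{u}) = c(A\mathbf{u}) = cT(\mathbf{u})$ (scalar multiplication)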
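Ex 5 can be reproduced with a small matrix-vector product. In this sketch the helper `matvec`, the second vector `u`, and the scalar `c` are arbitrary choices made for illustration; checking one pair of vectors illustrates part (b) but does not replace the algebraic proof.

```python
def matvec(A, v):
    """Multiply a matrix (list of rows) by a vector (tuple)."""
    return tuple(sum(a * x for a, x in zip(row, v)) for row in A)

A = [[3, 0],
     [2, 1],
     [-1, -2]]
v = (2, -1)
u = (1, 4)   # arbitrary second vector, not from the slides
c = 5        # arbitrary scalar, not from the slides

image = matvec(A, v)   # part (a)

# Part (b), checked on one example: T(u + v) = T(u) + T(v) and T(cu) = cT(u).
u_plus_v = tuple(a + b for a, b in zip(u, v))
additive = matvec(A, u_plus_v) == tuple(
    a + b for a, b in zip(matvec(A, u), matvec(A, v)))
homogeneous = matvec(A, tuple(c * x for x in u)) == tuple(
    c * y for y in matvec(A, u))
print(image, additive, homogeneous)
```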

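Theorem 6.2: The linear transformation defined by a matrix

Let $A$ be an $m \times n$ matrix. The function $T$ defined by

$$T(\mathbf{v}) = A\mathbf{v}$$

is a linear transformation from $R^n$ into $R^m$.

Note: $A$ maps a vector in $R^n$ to a vector in $R^m$:

$$A\mathbf{v} = \begin{bmatrix} a_{11} & a_{12} & \cdots & a_{1n} \\ a_{21} & a_{22} & \cdots & a_{2n} \\ \vdots & \vdots & & \vdots \\ a_{m1} & a_{m2} & \cdots & a_{mn} \end{bmatrix} \begin{bmatrix} v_1 \\ v_2 \\ \vdots \\ v_n \end{bmatrix} = \begin{bmatrix} a_{11}v_1 + a_{12}v_2 + \cdots + a_{1n}v_n \\ a_{21}v_1 + a_{22}v_2 + \cdots + a_{2n}v_n \\ \vdots \\ a_{m1}v_1 + a_{m2}v_2 + \cdots + a_{mn}v_n \end{bmatrix}$$

$$T(\mathbf{v}) = A\mathbf{v}, \qquad T: R^n \to R^m$$

If $T(\mathbf{v})$ can be represented by $A\mathbf{v}$, then $T$ is a linear transformation.

If the size of $A$ is $m \times n$, then the domain of $T$ is $R^n$ and the codomain of $T$ is $R^m$.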

Ex 7: Rotation in the plane

Show that the L.T. $T: R^2 \to R^2$ given by the matrix

$$A = \begin{bmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{bmatrix}$$

has the property that it rotates every vector in $R^2$ counterclockwise about the origin through the angle $\theta$.

Sol: (Polar coordinates: every point on the xy-plane can be represented by a pair $(r, \alpha)$)

$$\mathbf{v} = (x, y) = (r\cos\alpha,\; r\sin\alpha)$$

$r$: the length of $\mathbf{v}$ ($= \sqrt{x^2 + y^2}$)

$\alpha$: the angle from the positive x-axis counterclockwise to the vector $\mathbf{v}$

(Figure: the vectors $\mathbf{v}$ and $T(\mathbf{v})$ in the xy-plane)

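$$\begin{aligned}
T(\mathbf{v}) = A\mathbf{v} &= \begin{bmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{bmatrix} \begin{bmatrix} x \\ y \end{bmatrix} = \begin{bmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{bmatrix} \begin{bmatrix} r\cos\alpha \\ r\sin\alpha \end{bmatrix} \\
&= \begin{bmatrix} r\cos\theta\cos\alpha - r\sin\theta\sin\alpha \\ r\sin\theta\cos\alpha + r\cos\theta\sin\alpha \end{bmatrix} \\
&= \begin{bmatrix} r\cos(\theta + \alpha) \\ r\sin(\theta + \alpha) \end{bmatrix}
\end{aligned}$$

The last step uses the addition formulas for sine and cosine.

$r$: remains the same, which means the length of $T(\mathbf{v})$ equals the length of $\mathbf{v}$

$\theta + \alpha$: the angle from the positive x-axis counterclockwise to the vector $T(\mathbf{v})$

Thus, $T(\mathbf{v})$ is the vector that results from rotating the vector $\mathbf{v}$ counterclockwise through the angle $\theta$.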
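The two conclusions of the rotation example (the length is preserved, and the angle increases by $\theta$) can be checked numerically. This is a sketch with an arbitrarily chosen vector and angle; both are picked so that the rotated angle stays below $\pi$ and `math.atan2` does not wrap around.

```python
import math

def rotate(v, theta):
    """Apply the rotation matrix [[cos t, -sin t], [sin t, cos t]] to v."""
    x, y = v
    return (math.cos(theta) * x - math.sin(theta) * y,
            math.sin(theta) * x + math.cos(theta) * y)

v = (3.0, 4.0)          # arbitrary vector with length 5
theta = math.pi / 6     # arbitrary rotation angle (30 degrees)
w = rotate(v, theta)

length_v = math.hypot(v[0], v[1])
length_w = math.hypot(w[0], w[1])
angle_v = math.atan2(v[1], v[0])   # the angle called alpha in the slides
angle_w = math.atan2(w[1], w[0])   # should be theta + alpha

print(length_v, length_w)
print(angle_w - angle_v, theta)
```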

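Ex 8: A projection in $R^3$

The linear transformation $T: R^3 \to R^3$ given by

$$A = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 0 \end{bmatrix}$$

is called a projection in $R^3$.

If $\mathbf{v}$ is $(x, y, z)$, then

$$A\mathbf{v} = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 0 \end{bmatrix} \begin{bmatrix} x \\ y \\ z \end{bmatrix} = \begin{bmatrix} x \\ y \\ 0 \end{bmatrix}$$

In other words, $T$ maps every vector in $R^3$ to its orthogonal projection in the xy-plane, as shown in the figure.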

Ex 9: The transpose function is a linear transformation from $M_{m \times n}$ into $M_{n \times m}$

$$T(A) = A^T \qquad (T: M_{m \times n} \to M_{n \times m})$$

Show that $T$ is a linear transformation.

Sol: $A, B \in M_{m \times n}$

$$T(A + B) = (A + B)^T = A^T + B^T = T(A) + T(B)$$

$$T(cA) = (cA)^T = cA^T = cT(A)$$

Therefore, $T$ (the transpose function) is a linear transformation from $M_{m \times n}$ into $M_{n \times m}$.
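The two identities used in Ex 9 can be spot-checked on concrete matrices. The sketch below represents matrices as lists of rows; the helper names and the sample matrices `A`, `B` and scalar `c` are assumptions made for illustration only.

```python
def transpose(A):
    """Return the transpose of a matrix given as a list of rows."""
    return [list(col) for col in zip(*A)]

def add(A, B):
    """Entrywise sum of two matrices of the same size."""
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]

def scale(c, A):
    """Multiply every entry of A by the scalar c."""
    return [[c * a for a in row] for row in A]

A = [[1, 2, 3], [4, 5, 6]]      # arbitrary 2 x 3 matrix
B = [[0, -1, 2], [7, 0, -3]]    # arbitrary 2 x 3 matrix
c = 4                           # arbitrary scalar

additive = transpose(add(A, B)) == add(transpose(A), transpose(B))
homogeneous = transpose(scale(c, A)) == scale(c, transpose(A))
print(transpose(A), additive, homogeneous)
```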

Keywords in Section 6.1:

function
domain
codomain
image of $\mathbf{v}$ under $T$
range of $T$
preimage of $\mathbf{w}$
linear transformation
linear operator
zero transformation
identity transformation

6.2 The Kernel and Range of a Linear Transformation

Kernel of a linear transformation $T$:

Let $T: V \to W$ be a linear transformation. Then the set of all vectors $\mathbf{v}$ in $V$ that satisfy $T(\mathbf{v}) = \mathbf{0}$ is called the kernel of $T$ and is denoted by $\ker(T)$.

$$\ker(T) = \{\mathbf{v} \mid T(\mathbf{v}) = \mathbf{0},\; \mathbf{v} \in V\}$$

For example, $V$ is $R^3$, $W$ is $R^3$, and $T$ is the orthogonal projection of any vector $(x, y, z)$ onto the xy-plane, i.e. $T(x, y, z) = (x, y, 0)$.

Then the kernel of $T$ is the set consisting of $(0, 0, s)$, where $s$ is a real number, i.e.

$$\ker(T) = \{(0, 0, s) \mid s \text{ is a real number}\}$$

Ex 1: Finding the kernel of a linear transformation

$$T(A) = A^T \qquad (T: M_{3 \times 2} \to M_{2 \times 3})$$

Sol:

$$\ker(T) = \left\{ \begin{bmatrix} 0 & 0 \\ 0 & 0 \\ 0 & 0 \end{bmatrix} \right\}$$

Ex 2: The kernel of the zero and identity transformations

(a) If $T(\mathbf{v}) = \mathbf{0}$ (the zero transformation $T: V \to W$), then $\ker(T) = V$

(b) If $T(\mathbf{v}) = \mathbf{v}$ (the identity transformation $T: V \to V$), then $\ker(T) = \{\mathbf{0}\}$

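Ex 5: Finding the kernel of a linear transformation

$$T(\mathbf{x}) = A\mathbf{x} = \begin{bmatrix} 1 & -1 & -2 \\ -1 & 2 & 3 \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix} \qquad (T: R^3 \to R^2)$$

$$\ker(T) = \,?$$

Sol:

$$\ker(T) = \{(x_1, x_2, x_3) \mid T(x_1, x_2, x_3) = (0, 0), \text{ and } (x_1, x_2, x_3) \in R^3\}$$

$$T(x_1, x_2, x_3) = (0, 0) \;\Rightarrow\; \begin{bmatrix} 1 & -1 & -2 \\ -1 & 2 & 3 \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix} = \begin{bmatrix} 0 \\ 0 \end{bmatrix}$$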

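$$\left[\begin{array}{ccc|c} 1 & -1 & -2 & 0 \\ -1 & 2 & 3 & 0 \end{array}\right] \;\xrightarrow{\text{G.-J. E.}}\; \left[\begin{array}{ccc|c} 1 & 0 & -1 & 0 \\ 0 & 1 & 1 & 0 \end{array}\right]$$

$$\Rightarrow\; \begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix} = \begin{bmatrix} t \\ -t \\ t \end{bmatrix} = t \begin{bmatrix} 1 \\ -1 \\ 1 \end{bmatrix}$$

$$\Rightarrow\; \ker(T) = \{t(1, -1, 1) \mid t \text{ is a real number}\} = \text{span}\{(1, -1, 1)\}$$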

Theorem 6.3: The kernel is a subspace of $V$

The kernel of a linear transformation $T: V \to W$ is a subspace of the domain $V$.

Pf:

$T(\mathbf{0}) = \mathbf{0}$ (by Theorem 6.1), so $\ker(T)$ is a nonempty subset of $V$.

Let $\mathbf{u}$ and $\mathbf{v}$ be vectors in the kernel of $T$. Then, because $T$ is a linear transformation,

$$T(\mathbf{u} + \mathbf{v}) = T(\mathbf{u}) + T(\mathbf{v}) = \mathbf{0} + \mathbf{0} = \mathbf{0} \qquad (\mathbf{u} \in \ker(T),\; \mathbf{v} \in \ker(T) \Rightarrow \mathbf{u} + \mathbf{v} \in \ker(T))$$

$$T(c\mathbf{u}) = cT(\mathbf{u}) = c\mathbf{0} = \mathbf{0} \qquad (\mathbf{u} \in \ker(T) \Rightarrow c\mathbf{u} \in \ker(T))$$

Thus, $\ker(T)$ is a subspace of $V$ (according to Theorem 4.5, a nonempty subset of $V$ is a subspace of $V$ if it is closed under vector addition and scalar multiplication).

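Ex 6: Finding a basis for the kernel

Let $T: R^5 \to R^4$ be defined by $T(\mathbf{x}) = A\mathbf{x}$, where $\mathbf{x}$ is in $R^5$ and

$$A = \begin{bmatrix} 1 & 2 & 0 & 1 & -1 \\ 2 & 1 & 3 & 1 & 0 \\ -1 & 0 & -2 & 0 & 1 \\ 0 & 0 & 0 & 2 & 8 \end{bmatrix}$$

Find a basis for $\ker(T)$ as a subspace of $R^5$.

Sol:

To find $\ker(T)$ means to find all $\mathbf{x}$ satisfying $T(\mathbf{x}) = A\mathbf{x} = \mathbf{0}$. Thus we need to form the augmented matrix $[\,A \mid \mathbf{0}\,]$ first.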

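$$[\,A \mid \mathbf{0}\,] = \left[\begin{array}{ccccc|c} 1 & 2 & 0 & 1 & -1 & 0 \\ 2 & 1 & 3 & 1 & 0 & 0 \\ -1 & 0 & -2 & 0 & 1 & 0 \\ 0 & 0 & 0 & 2 & 8 & 0 \end{array}\right] \;\xrightarrow{\text{G.-J. E.}}\; \left[\begin{array}{ccccc|c} 1 & 0 & 2 & 0 & -1 & 0 \\ 0 & 1 & -1 & 0 & -2 & 0 \\ 0 & 0 & 0 & 1 & 4 & 0 \\ 0 & 0 & 0 & 0 & 0 & 0 \end{array}\right]$$

With the free variables $x_3 = s$ and $x_5 = t$:

$$\mathbf{x} = \begin{bmatrix} x_1 \\ x_2 \\ x_3 \\ x_4 \\ x_5 \end{bmatrix} = \begin{bmatrix} -2s + t \\ s + 2t \\ s \\ -4t \\ t \end{bmatrix} = s \begin{bmatrix} -2 \\ 1 \\ 1 \\ 0 \\ 0 \end{bmatrix} + t \begin{bmatrix} 1 \\ 2 \\ 0 \\ -4 \\ 1 \end{bmatrix}$$

$B = \{(-2, 1, 1, 0, 0),\; (1, 2, 0, -4, 1)\}$: one basis for the kernel of $T$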
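The Gauss-Jordan procedure used to find this kernel basis can be sketched in Python. The functions `rref` and `kernel_basis` are hypothetical helpers written for this illustration; they use exact `Fraction` arithmetic so that no rounding occurs, and they build one basis vector per free column of the reduced matrix.

```python
from fractions import Fraction

def rref(matrix):
    """Gauss-Jordan elimination; returns (reduced rows, pivot column indices)."""
    rows = [[Fraction(x) for x in row] for row in matrix]
    pivots = []
    r = 0
    for c in range(len(rows[0])):
        # Find a row at or below r with a nonzero entry in column c.
        p = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if p is None:
            continue
        rows[r], rows[p] = rows[p], rows[r]
        pivot = rows[r][c]
        rows[r] = [x / pivot for x in rows[r]]
        # Clear column c in every other row.
        for i in range(len(rows)):
            if i != r and rows[i][c] != 0:
                factor = rows[i][c]
                rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        pivots.append(c)
        r += 1
        if r == len(rows):
            break
    return rows, pivots

def kernel_basis(matrix):
    """One basis vector of the solution space of Ax = 0 per free column."""
    rows, pivots = rref(matrix)
    n = len(matrix[0])
    basis = []
    for free in (c for c in range(n) if c not in pivots):
        vec = [Fraction(0)] * n
        vec[free] = Fraction(1)              # set this free variable to 1
        for r, p in enumerate(pivots):
            vec[p] = -rows[r][free]          # solve for each pivot variable
        basis.append(tuple(vec))
    return basis

A = [[1, 2, 0, 1, -1],
     [2, 1, 3, 1, 0],
     [-1, 0, -2, 0, 1],
     [0, 0, 0, 2, 8]]
basis = kernel_basis(A)
print(basis)
```

Setting each free variable to 1 in turn (and the others to 0) corresponds to the choices $s = 1, t = 0$ and $s = 0, t = 1$ in the hand computation.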

Corollary to Theorem 6.3:

Let $T: R^n \to R^m$ be the linear transformation given by $T(\mathbf{x}) = A\mathbf{x}$. Then the kernel of $T$ is equal to the solution space of $A\mathbf{x} = \mathbf{0}$.

$$T(\mathbf{x}) = A\mathbf{x} \quad (\text{a linear transformation } T: R^n \to R^m)$$

$$\Rightarrow\; \ker(T) = NS(A) = \{\mathbf{x} \mid A\mathbf{x} = \mathbf{0},\; \mathbf{x} \in R^n\} \quad (\text{subspace of } R^n)$$

The kernel of $T$ equals the nullspace of $A$ (which is defined in Theorem 4.16 on p.239), and these two are both subspaces of $R^n$.

So, the kernel of $T$ is sometimes called the nullspace of $T$.

Range of a linear transformation $T$:

Let $T: V \to W$ be a linear transformation. Then the set of all vectors $\mathbf{w}$ in $W$ that are images of vectors in $V$ is called the range of $T$ and is denoted by $\text{range}(T)$.

$$\text{range}(T) = \{T(\mathbf{v}) \mid \mathbf{v} \in V\}$$

For the orthogonal projection of any vector $(x, y, z)$ onto the xy-plane, i.e. $T(x, y, z) = (x, y, 0)$:

The domain is $V = R^3$, the codomain is $W = R^3$, and the range is the xy-plane (a subspace of the codomain $R^3$).

Since $T(0, 0, s) = (0, 0, 0) = \mathbf{0}$, the kernel of $T$ is the set consisting of $(0, 0, s)$, where $s$ is a real number.

Theorem 6.4: The range of $T$ is a subspace of $W$

The range of a linear transformation $T: V \to W$ is a subspace of $W$.

Pf:

$T(\mathbf{0}) = \mathbf{0}$ (Theorem 6.1), so $\text{range}(T)$ is a nonempty subset of $W$.

Let $T(\mathbf{u})$ and $T(\mathbf{v})$ be vectors in $\text{range}(T)$, with $\mathbf{u}, \mathbf{v} \in V$. Then

$$T(\mathbf{u}) + T(\mathbf{v}) = T(\mathbf{u} + \mathbf{v}) \in \text{range}(T)$$

because $T$ is a linear transformation and $\mathbf{u} + \mathbf{v} \in V$. So the range of $T$ is closed under vector addition, because $T(\mathbf{u}), T(\mathbf{v}), T(\mathbf{u} + \mathbf{v}) \in \text{range}(T)$.

$$cT(\mathbf{u}) = T(c\mathbf{u}) \in \text{range}(T)$$

because $c\mathbf{u} \in V$. So the range of $T$ is closed under scalar multiplication, because $T(\mathbf{u})$ and $T(c\mathbf{u}) \in \text{range}(T)$.

Thus, $\text{range}(T)$ is a subspace of $W$ (according to Theorem 4.5, a nonempty subset of $W$ is a subspace of $W$ if it is closed under vector addition and scalar multiplication).

Notes: $T: V \to W$ is a linear transformation

(1) $\ker(T)$ is a subspace of $V$ (Theorem 6.3)

(2) $\text{range}(T)$ is a subspace of $W$ (Theorem 6.4)

Corollary to Theorem 6.4:

Let $T: R^n \to R^m$ be the linear transformation given by $T(\mathbf{x}) = A\mathbf{x}$. The range of $T$ is equal to the column space of $A$, i.e. $\text{range}(T) = CS(A)$.

(1) According to the definition of the range of $T(\mathbf{x}) = A\mathbf{x}$, the range of $T$ consists of all vectors $\mathbf{b}$ satisfying $A\mathbf{x} = \mathbf{b}$, which is equivalent to finding all vectors $\mathbf{b}$ such that the system $A\mathbf{x} = \mathbf{b}$ is consistent.

(2) $A\mathbf{x} = \mathbf{b}$ can be rewritten as

$$A\mathbf{x} = x_1 \begin{bmatrix} a_{11} \\ a_{21} \\ \vdots \\ a_{m1} \end{bmatrix} + x_2 \begin{bmatrix} a_{12} \\ a_{22} \\ \vdots \\ a_{m2} \end{bmatrix} + \cdots + x_n \begin{bmatrix} a_{1n} \\ a_{2n} \\ \vdots \\ a_{mn} \end{bmatrix} = \mathbf{b}$$

Therefore, the system $A\mathbf{x} = \mathbf{b}$ is consistent iff we can find $(x_1, x_2, \ldots, x_n)$ such that $\mathbf{b}$ is a linear combination of the column vectors of $A$, i.e. $\mathbf{b} \in CS(A)$.

Thus, the range consists of all vectors $\mathbf{b}$ that are linear combinations of the column vectors of $A$, that is, all $\mathbf{b} \in CS(A)$. So the column space of the matrix $A$ is the same as the range of $T$, i.e. $\text{range}(T) = CS(A)$.

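Use our example to illustrate the corollary to Theorem 6.4:

For the orthogonal projection of any vector $(x, y, z)$ onto the xy-plane, i.e. $T(x, y, z) = (x, y, 0)$:

According to the above analysis, we already know that the range of $T$ is the xy-plane, i.e. $\text{range}(T) = \{(x, y, 0) \mid x \text{ and } y \text{ are real numbers}\}$.

$T$ can be defined by a matrix $A$ as follows:

$$A = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 0 \end{bmatrix}, \text{ such that } \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 0 \end{bmatrix} \begin{bmatrix} x \\ y \\ z \end{bmatrix} = \begin{bmatrix} x \\ y \\ 0 \end{bmatrix}$$

The column space of $A$ is as follows, which is just the xy-plane:

$$CS(A) = \left\{ x_1 \begin{bmatrix} 1 \\ 0 \\ 0 \end{bmatrix} + x_2 \begin{bmatrix} 0 \\ 1 \\ 0 \end{bmatrix} + x_3 \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix} = \begin{bmatrix} x_1 \\ x_2 \\ 0 \end{bmatrix}, \text{ where } x_1, x_2 \in R \right\}$$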

Ex 7: Finding a basis for the range of a linear transformation

Let $T: R^5 \to R^4$ be defined by $T(\mathbf{x}) = A\mathbf{x}$, where $\mathbf{x}$ is in $R^5$ and

$$A = \begin{bmatrix} 1 & 2 & 0 & 1 & -1 \\ 2 & 1 & 3 & 1 & 0 \\ -1 & 0 & -2 & 0 & 1 \\ 0 & 0 & 0 & 2 & 8 \end{bmatrix}$$

Find a basis for the range of $T$.

Sol:

Since $\text{range}(T) = CS(A)$, finding a basis for the range of $T$ is equivalent to finding a basis for the column space of $A$.

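$$A = \begin{bmatrix} 1 & 2 & 0 & 1 & -1 \\ 2 & 1 & 3 & 1 & 0 \\ -1 & 0 & -2 & 0 & 1 \\ 0 & 0 & 0 & 2 & 8 \end{bmatrix} \;\xrightarrow{\text{G.-J. E.}}\; \begin{bmatrix} 1 & 0 & 2 & 0 & -1 \\ 0 & 1 & -1 & 0 & -2 \\ 0 & 0 & 0 & 1 & 4 \\ 0 & 0 & 0 & 0 & 0 \end{bmatrix} = B$$

Label the columns of $A$ as $\mathbf{c}_1, \mathbf{c}_2, \mathbf{c}_3, \mathbf{c}_4, \mathbf{c}_5$ and the columns of $B$ as $\mathbf{w}_1, \mathbf{w}_2, \mathbf{w}_3, \mathbf{w}_4, \mathbf{w}_5$.

$\mathbf{w}_1$, $\mathbf{w}_2$, and $\mathbf{w}_4$ are independent, so $\{\mathbf{w}_1, \mathbf{w}_2, \mathbf{w}_4\}$ can form a basis for $CS(B)$.

Row operations will not affect the dependency among columns.

$\Rightarrow$ $\mathbf{c}_1$, $\mathbf{c}_2$, and $\mathbf{c}_4$ are independent, and thus $\{\mathbf{c}_1, \mathbf{c}_2, \mathbf{c}_4\}$ is a basis for $CS(A)$.

That is, $\{(1, 2, -1, 0),\; (2, 1, 0, 0),\; (1, 1, 0, 2)\}$ is a basis for the range of $T$.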
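The pivot-column argument above can be sketched in Python. The helpers `pivot_columns` and `range_basis` are hypothetical names; the sketch uses forward elimination only (which finds the same pivot positions as full Gauss-Jordan elimination) and exact `Fraction` arithmetic. Note that the basis vectors are taken from the original matrix, not from the reduced one.

```python
from fractions import Fraction

def pivot_columns(matrix):
    """Forward elimination; returns the indices of columns that hold a pivot."""
    rows = [[Fraction(x) for x in row] for row in matrix]
    pivots, r = [], 0
    for c in range(len(rows[0])):
        # Find a row at or below r with a nonzero entry in column c.
        p = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if p is None:
            continue
        rows[r], rows[p] = rows[p], rows[r]
        # Clear column c below the pivot row.
        for i in range(r + 1, len(rows)):
            factor = rows[i][c] / rows[r][c]
            rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        pivots.append(c)
        r += 1
        if r == len(rows):
            break
    return pivots

def range_basis(matrix):
    """Columns of the ORIGINAL matrix at the pivot positions."""
    return [tuple(row[c] for row in matrix) for c in pivot_columns(matrix)]

A = [[1, 2, 0, 1, -1],
     [2, 1, 3, 1, 0],
     [-1, 0, -2, 0, 1],
     [0, 0, 0, 2, 8]]
pivots = pivot_columns(A)
basis = range_basis(A)
print(pivots)
print(basis)
```

The pivot indices are zero-based, so columns 0, 1, and 3 correspond to $\mathbf{c}_1$, $\mathbf{c}_2$, and $\mathbf{c}_4$.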

Rank of a linear transformation $T: V \to W$:

$$\text{rank}(T) = \text{the dimension of the range of } T = \dim(\text{range}(T))$$

According to the corollary to Thm. 6.4, $\text{range}(T) = CS(A)$, so $\dim(\text{range}(T)) = \dim(CS(A))$.

Nullity of a linear transformation $T: V \to W$:

$$\text{nullity}(T) = \text{the dimension of the kernel of } T = \dim(\ker(T))$$

According to the corollary to Thm. 6.3, $\ker(T) = NS(A)$, so $\dim(\ker(T)) = \dim(NS(A))$.

Note: If $T: R^n \to R^m$ is a linear transformation given by $T(\mathbf{x}) = A\mathbf{x}$, then

$$\text{rank}(T) = \dim(\text{range}(T)) = \dim(CS(A)) = \text{rank}(A)$$

$$\text{nullity}(T) = \dim(\ker(T)) = \dim(NS(A)) = \text{nullity}(A)$$

The dimension of the row (or column) space of a matrix $A$ is called the rank of $A$.

The dimension of the nullspace of $A$ ($NS(A) = \{\mathbf{x} \mid A\mathbf{x} = \mathbf{0}\}$) is called the nullity of $A$.

Theorem 6.5: Sum of rank and nullity

Let $T: V \to W$ be a linear transformation from an $n$-dimensional vector space $V$ (i.e. the dim(domain of $T$) is $n$) into a vector space $W$. Then

$$\text{rank}(T) + \text{nullity}(T) = n$$

(i.e. dim(range of $T$) + dim(kernel of $T$) = dim(domain of $T$))

You can imagine that the dim(domain of $T$) should equal the dim(range of $T$) originally.

But some dimensions of the domain of $T$ are absorbed by the zero vector in $W$.

So the dim(range of $T$) is smaller than the dim(domain of $T$) by the number of dimensions of the domain of $T$ that are absorbed by the zero vector, which is exactly the dim(kernel of $T$).

Pf:

Let $T$ be represented by an $m \times n$ matrix $A$, and assume $\text{rank}(A) = r$.

(1) rank($T$) = dim(range of $T$) = dim(column space of $A$) = rank($A$) $= r$

(2) nullity($T$) = dim(kernel of $T$) = dim(null space of $A$) $= n - r$, according to Thm. 4.17, where rank($A$) + nullity($A$) $= n$

$$\Rightarrow\; \text{rank}(T) + \text{nullity}(T) = r + (n - r) = n$$

Here we only consider that $T$ is represented by an $m \times n$ matrix $A$. In the next section, we will prove that any linear transformation from an $n$-dimensional space to an $m$-dimensional space can be represented by an $m \times n$ matrix.

Ex 8: Finding the rank and nullity of a linear transformation

Find the rank and nullity of the linear transformation $T: R^3 \to R^3$ defined by

$$A = \begin{bmatrix} 1 & 0 & -2 \\ 0 & 1 & 1 \\ 0 & 0 & 0 \end{bmatrix}$$

Sol:

$$\text{rank}(T) = \text{rank}(A) = 2$$

$$\text{nullity}(T) = \dim(\text{domain of } T) - \text{rank}(T) = 3 - 2 = 1$$

The rank is determined by the number of leading 1's, and the nullity by the number of free variables (columns without leading 1's).

Ex 9: Finding the rank and nullity of a linear transformation

Let $T: R^5 \to R^7$ be a linear transformation.

(a) Find the dimension of the kernel of $T$ if the dimension of the range of $T$ is 2

(b) Find the rank of $T$ if the nullity of $T$ is 4

(c) Find the rank of $T$ if $\ker(T) = \{\mathbf{0}\}$

Sol:

(a) dim(domain of $T$) $= n = 5$

dim(kernel of $T$) $= n -$ dim(range of $T$) $= 5 - 2 = 3$

(b) rank($T$) $= n -$ nullity($T$) $= 5 - 4 = 1$

(c) rank($T$) $= n -$ nullity($T$) $= 5 - 0 = 5$

One-to-one:

A function $T: V \to W$ is called one-to-one if the preimage of every $\mathbf{w}$ in the range consists of a single vector. This is equivalent to saying that $T$ is one-to-one iff for all $\mathbf{u}$ and $\mathbf{v}$ in $V$, $T(\mathbf{u}) = T(\mathbf{v})$ implies that $\mathbf{u} = \mathbf{v}$.

(Figure: two mapping diagrams, labeled "one-to-one" and "not one-to-one")

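Theorem 6.6: One-to-one linear transformation

Let $T: V \to W$ be a linear transformation. Then $T$ is one-to-one iff $\ker(T) = \{\mathbf{0}\}$.

Pf:

($\Rightarrow$) Suppose $T$ is one-to-one.

Then $T(\mathbf{v}) = \mathbf{0}$ can have only one solution: $\mathbf{v} = \mathbf{0}$ (due to the fact that $T(\mathbf{0}) = \mathbf{0}$ in Thm. 6.1), i.e. $\ker(T) = \{\mathbf{0}\}$.

($\Leftarrow$) Suppose $\ker(T) = \{\mathbf{0}\}$ and $T(\mathbf{u}) = T(\mathbf{v})$.

$$T(\mathbf{u} - \mathbf{v}) = T(\mathbf{u}) - T(\mathbf{v}) = \mathbf{0}$$

($T$ is a linear transformation, see Property 3 in Thm. 6.1)

$$\Rightarrow\; \mathbf{u} - \mathbf{v} \in \ker(T) \;\Rightarrow\; \mathbf{u} - \mathbf{v} = \mathbf{0} \;\Rightarrow\; \mathbf{u} = \mathbf{v}$$

$\Rightarrow$ $T$ is one-to-one (because $T(\mathbf{u}) = T(\mathbf{v})$ implies that $\mathbf{u} = \mathbf{v}$)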

Ex 10: One-to-one and not one-to-one linear transformation

(a) The linear transformation $T: M_{m \times n} \to M_{n \times m}$ given by $T(A) = A^T$ is one-to-one because its kernel consists of only the $m \times n$ zero matrix.

(b) The zero transformation $T: R^3 \to R^3$ is not one-to-one because its kernel is all of $R^3$.

Onto:

A function $T: V \to W$ is said to be onto if every element in $W$ has a preimage in $V$.

($T$ is onto $W$ when $W$ is equal to the range of $T$)

Theorem 6.7: Onto linear transformations

Let $T: V \to W$ be a linear transformation, where $W$ is finite dimensional. Then $T$ is onto if and only if the rank of $T$ is equal to the dimension of $W$.

$$\text{rank}(T) = \dim(\text{range of } T) = \dim(W)$$

The first equality is the definition of the rank of a linear transformation; the second follows from the definition of onto linear transformations.

Theorem 6.8: One-to-one and onto linear transformations

Let $T: V \to W$ be a linear transformation with vector spaces $V$ and $W$ both of dimension $n$. Then $T$ is one-to-one if and only if it is onto.

Pf:

($\Rightarrow$) If $T$ is one-to-one, then $\ker(T) = \{\mathbf{0}\}$ and $\dim(\ker(T)) = 0$.

(According to the definition of dimension (on p.227), if a vector space $V$ consists of the zero vector alone, the dimension of $V$ is defined as zero.)

By Thm. 6.5,

$$\dim(\text{range}(T)) = n - \dim(\ker(T)) = n = \dim(W)$$

Consequently, $T$ is onto.

($\Leftarrow$) If $T$ is onto, then dim(range of $T$) $= \dim(W) = n$.

By Thm. 6.5,

$$\dim(\ker(T)) = n - \dim(\text{range of } T) = n - n = 0 \;\Rightarrow\; \ker(T) = \{\mathbf{0}\}$$

Therefore, $T$ is one-to-one.

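Ex 11: The linear transformation $T: R^n \to R^m$ is given by $T(\mathbf{x}) = A\mathbf{x}$. Find the nullity and rank of $T$ and determine whether $T$ is one-to-one, onto, or neither.

$$\text{(a) } A = \begin{bmatrix} 1 & 2 & 0 \\ 0 & 1 & 1 \\ 0 & 0 & 1 \end{bmatrix} \qquad \text{(b) } A = \begin{bmatrix} 1 & 2 \\ 0 & 1 \\ 0 & 0 \end{bmatrix} \qquad \text{(c) } A = \begin{bmatrix} 1 & 2 & 0 \\ 0 & 1 & -1 \end{bmatrix} \qquad \text{(d) } A = \begin{bmatrix} 1 & 2 & 0 \\ 0 & 1 & 1 \\ 0 & 0 & 0 \end{bmatrix}$$

Sol:

| $T: R^n \to R^m$ | dim(domain of $T$) (1) | rank($T$) (2) | nullity($T$) | 1-1 | onto |
|---|---|---|---|---|---|
| (a) $T: R^3 \to R^3$ | 3 | 3 | 0 | Yes | Yes |
| (b) $T: R^2 \to R^3$ | 2 | 2 | 0 | Yes | No |
| (c) $T: R^3 \to R^2$ | 3 | 2 | 1 | No | Yes |
| (d) $T: R^3 \to R^3$ | 3 | 2 | 1 | No | No |

Column (1): dim(domain of $T$) $= \dim(R^n) = n$

Column (2): rank($T$) = dim(range of $T$) = number of leading 1's

nullity($T$) $= \dim(\ker(T)) =$ (1) $-$ (2)

$T$ is one-to-one if nullity($T$) $= \dim(\ker(T)) = 0$

$T$ is onto if rank($T$) $= \dim(R^m) = m$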
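The table in Ex 11 follows mechanically from the rank of each matrix, so it can be reproduced with a short sketch. The helpers `rank` and `classify` are hypothetical names; the entry $-1$ in matrix (c) is reconstructed from the flattened slide text and does not affect the rank.

```python
from fractions import Fraction

def rank(matrix):
    """Number of pivots found by forward elimination with exact arithmetic."""
    rows = [[Fraction(x) for x in row] for row in matrix]
    r = 0
    for c in range(len(rows[0])):
        p = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if p is None:
            continue
        rows[r], rows[p] = rows[p], rows[r]
        for i in range(r + 1, len(rows)):
            factor = rows[i][c] / rows[r][c]
            rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
        if r == len(rows):
            break
    return r

def classify(matrix):
    """Apply Theorems 6.5, 6.6, and 6.7 to T(x) = Ax for an m x n matrix A."""
    m, n = len(matrix), len(matrix[0])
    rk = rank(matrix)
    nullity = n - rk                     # Theorem 6.5
    return {"rank": rk, "nullity": nullity,
            "one_to_one": nullity == 0,  # Theorem 6.6
            "onto": rk == m}             # Theorem 6.7

examples = {
    "a": [[1, 2, 0], [0, 1, 1], [0, 0, 1]],
    "b": [[1, 2], [0, 1], [0, 0]],
    "c": [[1, 2, 0], [0, 1, -1]],
    "d": [[1, 2, 0], [0, 1, 1], [0, 0, 0]],
}
summary = {name: classify(A) for name, A in examples.items()}
for name, info in summary.items():
    print(name, info)
```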

Isomorphism:

A linear transformation $T: V \to W$ that is one-to-one and onto is called an isomorphism. Moreover, if $V$ and $W$ are vector spaces such that there exists an isomorphism from $V$ to $W$, then $V$ and $W$ are said to be isomorphic to each other.

Theorem 6.9: Isomorphic spaces and dimension

Two finite-dimensional vector spaces $V$ and $W$ are isomorphic if and only if they are of the same dimension.

Pf:

($\Rightarrow$) Assume that $V$ is isomorphic to $W$, where $V$ has dimension $n$ ($\dim(V) = n$).

$\Rightarrow$ There exists a L.T. $T: V \to W$ that is one-to-one and onto.

$T$ is one-to-one

$$\Rightarrow\; \dim(\ker(T)) = 0$$

$$\Rightarrow\; \dim(\text{range of } T) = \dim(\text{domain of } T) - \dim(\ker(T)) = n - 0 = n$$

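$T$ is onto

$$\Rightarrow\; \dim(\text{range of } T) = \dim(W) = n$$

Thus $\dim(V) = \dim(W) = n$.

($\Leftarrow$) Assume that $V$ and $W$ both have dimension $n$.

Let $B = \{\mathbf{v}_1, \mathbf{v}_2, \ldots, \mathbf{v}_n\}$ be a basis of $V$ and $B' = \{\mathbf{w}_1, \mathbf{w}_2, \ldots, \mathbf{w}_n\}$ be a basis of $W$.

Then an arbitrary vector in $V$ can be represented as

$$\mathbf{v} = c_1\mathbf{v}_1 + c_2\mathbf{v}_2 + \cdots + c_n\mathbf{v}_n$$

and you can define a L.T. $T: V \to W$ as follows (by defining $T(\mathbf{v}_i) = \mathbf{w}_i$):

$$\mathbf{w} = T(\mathbf{v}) = c_1\mathbf{w}_1 + c_2\mathbf{w}_2 + \cdots + c_n\mathbf{w}_n$$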

Since $B'$ is a basis for $W$, $\{\mathbf{w}_1, \mathbf{w}_2, \ldots, \mathbf{w}_n\}$ is linearly independent, and the only solution for $\mathbf{w} = \mathbf{0}$ is $c_1 = c_2 = \cdots = c_n = 0$.

So with $\mathbf{w} = \mathbf{0}$, the corresponding $\mathbf{v}$ is $\mathbf{0}$, i.e., $\ker(T) = \{\mathbf{0}\}$

$\Rightarrow$ $T$ is one-to-one

By Theorem 6.5, we can derive that dim(range of $T$) = dim(domain of $T$) $-$ dim(ker($T$)) $= n - 0 = n = \dim(W)$

$\Rightarrow$ $T$ is onto

Since this linear transformation is both one-to-one and onto, $V$ and $W$ are isomorphic.

Note: Theorem 6.9 tells us that every vector space with dimension $n$ is isomorphic to $R^n$.

Ex 12: (Isomorphic vector spaces)

The following vector spaces are isomorphic to each other:

(a) $R^4$ = 4-space

(b) $M_{4 \times 1}$ = space of all $4 \times 1$ matrices

(c) $M_{2 \times 2}$ = space of all $2 \times 2$ matrices

(d) $P_3(x)$ = space of all polynomials of degree 3 or less

(e) $V = \{(x_1, x_2, x_3, x_4, 0),\; x_i \text{ are real numbers}\}$ (a subspace of $R^5$)
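As an illustration of Ex 12, one possible isomorphism between $M_{2 \times 2}$ and $R^4$ simply reads the matrix entries row by row. The function names and the row-by-row ordering below are assumptions for this sketch; any fixed ordering of the four entries would serve equally well.

```python
def matrix_to_r4(M):
    """Flatten a 2x2 matrix [[a, b], [c, d]] to the vector (a, b, c, d)."""
    return (M[0][0], M[0][1], M[1][0], M[1][1])

def r4_to_matrix(v):
    """Inverse map: (a, b, c, d) back to [[a, b], [c, d]]."""
    return [[v[0], v[1]], [v[2], v[3]]]

M = [[1, 2], [3, 4]]     # arbitrary sample matrices
N = [[0, -1], [5, 2]]
c = 3                    # arbitrary scalar

# Linearity, checked on one example.
sum_MN = [[M[i][j] + N[i][j] for j in range(2)] for i in range(2)]
additive = matrix_to_r4(sum_MN) == tuple(
    a + b for a, b in zip(matrix_to_r4(M), matrix_to_r4(N)))
homogeneous = matrix_to_r4([[c * x for x in row] for row in M]) == tuple(
    c * x for x in matrix_to_r4(M))

# One-to-one and onto: the two maps undo each other.
round_trip = r4_to_matrix(matrix_to_r4(M)) == M
print(additive, homogeneous, round_trip)
```

The existence of an inverse map is what makes the flattening both one-to-one and onto, which matches the definition of an isomorphism given earlier.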

Keywords in Section 6.2:

kernel of a linear transformation $T$
range of a linear transformation $T$
rank of a linear transformation $T$
nullity of a linear transformation $T$
one-to-one
onto
isomorphism (one-to-one and onto)
isomorphic space