Insights on Data Dredging and Financial Tips

Explore the concept of guilt-free data dredging via privacy and the implications of false discovery, alongside intriguing investment tips shared through email correspondence between "Trustworthy-broker@trustme.com" and "aaroth@cis.upenn.edu" regarding SHAK stock predictions.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

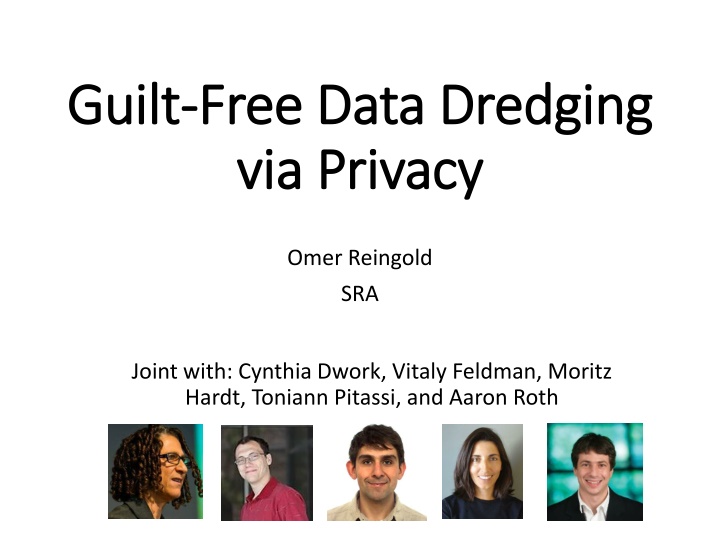

Guilt Guilt- -Free Data Dredging Free Data Dredging via Privacy via Privacy Omer Reingold SRA Joint with: Cynthia Dwork, Vitaly Feldman, Moritz Hardt, Toniann Pitassi, and Aaron Roth

False discovery Just Getting Worse Trouble at the Lab The Economist

From: Trustworthy-broker@trustme.com To: aaroth@cis.upenn.edu Date: 2/27/15 Subject: Gr8 investment tip!!! Hi! You don t know me, but here is a tip! Go long on SHAK it will go up today.

From: Trustworthy-broker@trustme.com To: aaroth@cis.upenn.edu Date: 2/28/15 Subject: Gr8 investment tip!!! Hi again! Go short today. SHAK is going down.

From: Trustworthy-broker@trustme.com To: aaroth@cis.upenn.edu Date: 3/1/15 Subject: Gr8 investment tip!!! Down again.

From: Trustworthy-broker@trustme.com To: aaroth@cis.upenn.edu Date: 3/2/15 Subject: Gr8 investment tip!!! Up!

From: Trustworthy-broker@trustme.com To: aaroth@cis.upenn.edu Date: 3/3/15 Subject: Gr8 investment tip!!! Down.

From: Trustworthy-broker@trustme.com To: aaroth@cis.upenn.edu Date: 3/4/15 Subject: Gr8 investment tip!!! Up!

From: Trustworthy-broker@trustme.com To: aaroth@cis.upenn.edu Date: 3/5/15 Subject: Gr8 investment tip!!! Up!

From: Trustworthy-broker@trustme.com To: aaroth@cis.upenn.edu Date: 3/6/15 Subject: Gr8 investment tip!!! Up!

From: Trustworthy-broker@trustme.com To: aaroth@cis.upenn.edu Date: 3/7/15 Subject: Gr8 investment tip!!! Down.

From: Trustworthy-broker@trustme.com To: aaroth@cis.upenn.edu Date: 3/8/15 Subject: Gr8 investment tip!!! Down.

From: Trustworthy-broker@trustme.com To: aaroth@cis.upenn.edu Date: 3/8/15 Subject: Gr8 investment opportunity!!! Hi there. I m tired of giving out this great advice for free. Let me manage your money, and I ll continue giving you my stock prediction tips in exchange for a small cut! BANK ACCOUNT NUMBER PLS!!

Hmm The chance he was right 10 times in a row if he was just randomly guessing is only 1 210 0.0009. ? < 0.05. I can reject the null hypothesis that these predictions were luck. HERE IS MY MONEY!

What happened 100,000

What happened 50,000

What happened 25,000

After 10 days There remain 100 people who have received perfect predictions. The individual recipient s error was his failure to take into account the size of the email pool.

The Multiple Hypothesis Testing Problem A finding with p-value 0.05 has only a 5% probability of being realized by chance But we expect 5 such findings if we test 100 hypotheses, even if they are all wrong Even worse: Publication Bias

Hack Your Way To Scientific Glory Try to prove a hunch: The U.S. economy is affected by whether Republicans or Democrats are in office. Using real data going back to 1948. 1,800 possible combinations Many possible settings of the learning algorithm P hacking , data dredging , very tempting

Preventing false discovery Decade old subject in Statistics Powerful results such as Benjamini-Hochberg work on controlling False Discovery Rate Lots of tools: Cross-validation, bootstrapping, holdout sets Theory focuses on non-adaptive data analysis

Non-adaptive data analysis Specify exact experimental setup hypotheses to test, Collect data Run experiment Observe outcome Data analyst Can t reuse data after observing outcome.

Adaptive data analysis Specify exact experimental setup hypotheses to test, Collect data Run experiment Observe outcome Revise experiment Data analyst

Why adaptivity is troublesome Exacerbates the multiple hypothesis testing problem exponentially must account for all hypotheses that might have been tested.

An adaptive data analyst is a decision tree ?1 ?4 ?2 ?10 ?5 ?16 ? ?11 ?14 ?13 ?37 ?24

An adaptive data analyst is a decision tree Must account not just for the ? queries actually made, but the (2?) queries that could have been made given all possible outcomes. ?1 ?4 ?2 ?10 ?5 ?16 ? ?11 ?14 ?13 ?37 ?24

Adaptivity arises naturally. Freedman s Paradox: an equation ?=??+? is fitted erroneously with a little bit of adaptivity. Vast literature on addressing this and other special cases. Natural learning procedures (like gradient descent) adaptively query the data. Common practice to first feel/plot/see the data before coming up with hypotheses and setting the right parameters More insidious: studies conducted by researchers who have read papers that used the same data set must be considered adaptive. Will be the norm as large data sets are shared and re- used!

Better Habits? For every hypothesis to be tested gather fresh data? Bad habits are sometimes prevailing for a reason: Easy, Cheap, Addictive Better if we can provide automatic correctness protection

Standard holdout method training data unrestricted access Data holdout good for one validation Data analyst Reusing can cause overfitting: Kaggle s data analysis competition Non-reusable: Can t use information from holdout in training stage adaptively Variant of Freedman s Paradox

Our Reusable holdout training data unrestricted access Data reusable holdout can be used many times adaptively Data analyst essentially as good as using fresh data each time!

Our Reusable holdout Check validity against holdout via a mechanism (differentially private) Goals: Provides useful information about the distribution, Control information leakage sufficiently well to prevent over-fitting reusable holdout Data analyst can be used many times adaptively essentially as good as using fresh data each time!

Differential Privacy [Dwork-McSherry-Nissim-Smith 06] D Xavier Bob Chris Donna Ernie Alice Algorithm ratio bounded Pr [r]

Intuition Differential privacy is a stability guarantee: Changing one data point doesn t affect the outcome much Stability implies generalization Overfitting is not stable Differential privacy composes well (helps deal with adaptivity)

Rich Algorithmic Literature Counts, linear queries, histograms, contingency tables (marginals) Location and spread (eg, median, interquartile range) Dimension reduction (PCA, SVD) Support Vector Machines Sparse regression/LASSO, logistic and linear regression, gradient descent Clustering Boosting, Multiplicative Weights Combinatorial optimzation, mechanism design Privacy Under Continual Observation, Pan-Privacy Kalman filtering Statistical Queries learning model, PAC learning The Algorithmic Foundations of Differential Privacy, Dwork and Roth, August 2014

Example: Thresholdout, an Instantiation of the Reusable Holdout [relies on HR10] Input: Training set St, holdout set Sh, Budgets: m on total number of queries, and B on number of over fitting detected (Sh diff from St), Other Parameters: threshold T, tolerance Given a StatisticalQuery : X [ 1,1], do: 1. If B < 1 or m< 1, output 2. Else sample , Lap( ) (a) If |E_Sh[ ] E_St[ ]| > T + , output E_Sh[ ] + set B B 1. (b) Otherwise, output E_St[ ]. 3. m m 1 Parameters: m can be exponential in |Sh|. B quadratic.

Conclusions and Further Research Reusable holdout: provable correctness protection Utility and privacy can be in sync. Parameters: can we improve? What are the limits? Do we need Differential Privacy (max-information). Experiments: is it practical? Design practical methods for real data analysis problems Limitations for efficient mechanisms [Hardt,Ullman 14] and [Steinke,Ullman 15] Improved parameters and more general settings [Bassily, Nissim, Smith, Steinke, Stemmer, Ullman 15]

An illustrative experiment Data set with 2n = 20,000 rows and d = 10,000 variables. Class labels in {-1,1} Analyst performs stepwise variable selection: 1. Split data into training/holdout of size n 2. Select best k variables on training data 3. Only use variables also good on holdout 4. Build linear predictor out of k variables 5. Find best k = 10,20,30,

No correlation between data and labels data are random gaussians labels are drawn independently at random from {-1,1} Thresholdout correctly detects overfitting!

High correlation 20 attributes are highly correlated with target remaining attributes are uncorrelated Thresholdout correctly detects right model size!