Improving Local Storage Systems Performance Trade-off

Exploring the trade-off between consistency and performance in local storage systems by applying distributed system principles. Discusses the reasons behind the trade-off and strategies to achieve speedup using stale data effectively.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. Download presentation by click this link. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

E N D

Presentation Transcript

Towards Weakly Consistent Local Storage Systems Ji-Yong Shin1,2, Mahesh Balakrishnan2, Tudor Marian3, Jakub Szefer2, Hakim Weatherspoon1 1Cornell University, 2Yale University, 3Google ACM Symposium on Cloud Computing Oct 6, 2016

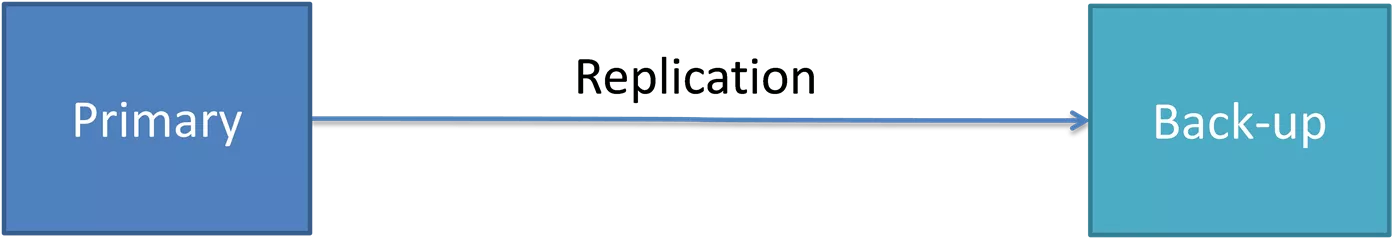

Consistency/Performance Trade-off in Distributed Systems Slower reads to latest version of data Client Client Client Client Clients Faster reads to stale data using weak consistency Replication Primary Back-up ACM Symposium on Cloud Computing Oct 6, 2016 2

Server Comparison Year Model (4U) Dell PowerEdge 6850 Dell PowerEdge R930 CPU [# of cores] [ 8 ] Memory 64GB 2006 2016 4 2 core Xeon 4 24 core Xeon [ 96 ] 6TB 2 1GigE 2 10GigE 12 X 96 X 11 X Network 2 1GigE 4.2 X (175X) 8 SCSI/SAS HDD 24 SAS HDD/SSD Storage 10 x PCIe SSD Modern Server Distributed System ACM Symposium on Cloud Computing Oct 6, 2016 3

Can we apply distributed system principles to local storage systems to improve performance? Consistency and performance trade-off ACM Symposium on Cloud Computing Oct 6, 2016 4

Why Consistency/Performance Trade-off? Distributed Systems Different versions of data exist in different servers due to network delays for replication Modern Servers Different versions of data exist in different storage media due to logging, caching, copy-on- write, deduplication, etc. Older versions are faster to access when they are on faster storage media Older versions are faster to access when the client is closer to the server Reasons for different access speeds RAM, SSD, HDD, hybrid-drives, etc. Disk arm contention SSD under garbage collection Degraded mode in RAID ACM Symposium on Cloud Computing Oct 6, 2016 5

Fine-grained Log and Coarse-grained Cache Multiple logged objects fit in one cache block Fast read (stale) Memory Cache Read A A B D ( 3 ) ( 4 ) ( 5 ) Slow read (latest) Read B Read D A B C A D SSD ( 3 ) ( 4 ) ( 4 ) ( 5 ) ( 5 ) Log of KV pairs Key (Ver) Key (Ver) ACM Symposium on Cloud Computing Oct 6, 2016 6

Goal Speedup local storage systems using stale data (consistency/performance trade-off) How should storage systems access older versions? Which version should be to returned? What should be the interface? What are the target applications? ACM Symposium on Cloud Computing Oct 6, 2016 7

Rest of the Talk StaleStore Yogurt: An Instance of StaleStore Evaluation Conclusion ACM Symposium on Cloud Computing Oct 6, 2016 8

StaleStore A local storage system that can trade-off consistency and performance Can be in any form KV-store, filesystem, block store, DB, etc. Maintains multiple versions of data Should have interface to access older versions Can estimate cost for accessing each version Aware of data locations and storage device conditions Aware of consistency semantics Ordered writes and notion of timestamps and snapshots Distributed weak (client-centric) consistency semantics ACM Symposium on Cloud Computing Oct 6, 2016 9

StaleStore: Consistency Model Distributed (client-centric) consistency semantics Per-client, per-object guarantees for reads Bounded staleness Read-my-writes Monotonic-reads: A client reads an object that is the same or later version than the version that was last read by the same client ACM Symposium on Cloud Computing Oct 6, 2016 10

StaleStore: Target Applications Distributed applications Aware of distributed consistency Can deal with data staleness Server applications Can provide per client guarantees ACM Symposium on Cloud Computing Oct 6, 2016 11

Rest of the Talk StaleStore Yogurt: An Instance of StaleStore Evaluation Conclusion ACM Symposium on Cloud Computing Oct 6, 2016 12

Yogurt: A Block-Level StaleStore An log-structured disk array with cache [Shin et al., FAST 13] (Linux kernel module) Prefer to read from non-logging disks Prefer to read from the most idle disk Read Cache Fast read Fast read Log Read (stale) Read (stale) Slow read (latest) Disk 2 Disk 0 Disk 1 ACM Symposium on Cloud Computing Oct 6, 2016 13

Yogurt: Basic APIs Write (Address, Data, Version #) Versioned (time-stamped) Write Version # constitutes snapshots Read (Address, Version #) Versioned (time-stamped) Read GetCost(Address, Version #) Cost estimation for each version ACM Symposium on Cloud Computing Oct 6, 2016 14

Yogurt Cost Estimation GetCost(Address, Version) returns an integer Disk vs Memory Cache Cache always has lower cost (e.g. cache = -1, disk = positive int) Disk vs disk Number of queued I/Os with weights Queued writes have higher weight than reads ACM Symposium on Cloud Computing Oct 6, 2016 15

Reading blocks from Yogurt Monotonic-reads example Client session Lowest Ver = Read version [Blk 1: Ver 5] 3 1. 2. Read block 1 Checks current timestamp: highest Ver = Issues GetCost() for block 1 between versions 3 and 8 (N queries with uniform distance) Reads the cheapest: e.g. 1 (5): Read(1, 5) Records version for block 1 Global Timestamp 8 3 4 5 6 7 8 3. 4. Cache 3 1 5 3 (3) 1 (4) 2 (4) 1 (5) 3 (5) 1 (6) 2 (6) 3 (7) 2 (8) ACM Symposium on Cloud Computing Oct 6, 2016 16

Data construct on Yogurt High level data constructs span multiple blocks Blocks should be read from a consistent snapshot Later reads depend on prior reads: GetVersionRange() Cornell University 3 in NYC 3 3 3 Cornell University in NYC 2 Cornell University in NYC 2 Timestamp 1 Cornell University 1 in Ithaca 1 Cornell University in Ithaca 1 0 0 0 Ithaca College in Ithaca 0 Ithaca College in Ithaca 0 1 Block Address 2 ACM Symposium on Cloud Computing Oct 6, 2016 17

Rest of the Talk StaleStore Yogurt: An Instance of StaleStore Evaluation Conclusion ACM Symposium on Cloud Computing Oct 6, 2016 18

Evaluation Yogurt: 3 disk setting with memory cache Focus on read latency while using monotonic-reads Clients simultaneously access servers Primary-backup setting Baseline 1: reads latest data in the primary server 100ms delay Client Client Client Client Clients Baseline 2: reads latest data in a local server Stream of Versioned Writes Back-up (Yogurt) Primary Utilize stale data in a local server ACM Symposium on Cloud Computing Oct 6, 2016 19

Evaluation: Block Access Uniform random workload 8 clients access one block at a time X-axis: # of available older versions built up during warm up 200 180 Average Read Latency (ms) 160 Primary 140 Local latest 120 Yogurt MR 100 80 60 40 20 0 1 2 3 4 5 6 7 8 Number of Stale Versions Available at Start Time ACM Symposium on Cloud Computing Oct 6, 2016 20

Evaluation: Key-Value Store YCSB Workload-A (Zipf with 50% read, 50% write) 16 clients access multiple blocks of key-value pairs KV Store greedily searches the cheapest using Yogurt APIs KV pairs can be partially updated 180 Average Read Latency (ms) 160 140 120 Primary 100 Local latest 80 Yogurt MR 60 40 20 0 4KB 8KB 12KB 16KB 20KB Key-Value Pair Size ACM Symposium on Cloud Computing Oct 6, 2016 21

Conclusion Modern servers are similar to distributed systems Local storage systems can trade-off consistency and performance We call them StaleStores Many systems have potentials to use this feature Yogurt, a block level StaleStore Effectively trades-off consistency and performance Supports high level constructs that span multiple blocks ACM Symposium on Cloud Computing Oct 6, 2016 22

Thank you Questions? ACM Symposium on Cloud Computing Oct 6, 2016 23

Extra slides ACM Symposium on Cloud Computing Oct 6, 2016 24

Fine-grained log and coarse-grained cache Multiple logged objects fit in one cache block Fast read (stale) Read A Memory Cache A B C D ( 3 ) ( 1 ) ( 1 ) ( 2 ) Slow read (latest) Read B Read C A B C A C D SSD ( 3 ) ( 1 ) ( 0 ) ( 4 ) ( 1 ) ( 2 ) Log of KV pairs Key (Ver) Key (Ver) ACM Symposium on Cloud Computing Oct 6, 2016 25

Fine-grained log and coarse-grained cache 8 threads reading and writing at 9:1 ratio KV-pairs per cache block from 2 to 16 Allowed staleness from 0 to 15 updates (bounded staleness) 1200 Average Read Latency (us) 1000 Max 60% 800 600 400 200 2 items 4 items 8 items 16 items 0 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 Allowed Staleness (# of updates) ACM Symposium on Cloud Computing Oct 6, 2016 26

Deduplicated system with read cache Systems that cache deduplicated chunks Fast read (stale) Memory Cache Logical block to physical chunk map Read B 1 B 0 C 2 B 1 B 2 Read B2 Slow read (latest) C 2 C 3 Disk ACM Symposium on Cloud Computing Oct 6, 2016 27

Deduplicated system with read cache 8 threads reading and writing at 9:1 ratio Deduplication ratio controlled from 30 to 90% Allowed staleness from 0 to 15 updates (bounded staleness) 25000 30% 50% 70% 90% Average Read Latency (us) 20000 Max 30% 15000 10000 5000 0 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 Allowed Staleness (# of updates) ACM Symposium on Cloud Computing Oct 6, 2016 28

Write cache that is slow for reads Griffin: disk cache over SSD for SSD lifetime Slow read (latest) Read Addr 1 Disk Cache 3 (5) 1 (2) 1 (3) Logged blocks Fast read (stale) Flushes latest 0 (3) 1 (1) 2 (0) 3 (4) SSD Linear block address space Addr (Ver) ACM Symposium on Cloud Computing Oct 6, 2016 29

Write cache that is slow for reads 8 threads reading and writing at 9:1 ratio Data flushed from disk to SSD every 128MB to 1GB writes Allowed staleness from 0 to 7 updates (bounded staleness) 8000 128MB 7000 Average Read Latency (us) 256MB 6000 512MB 5000 Max 95% 1024MB 4000 3000 2000 1000 0 0 1 2 3 4 5 6 7 Allowed Staleness (# of updates) ACM Symposium on Cloud Computing Oct 6, 2016 30