Hands-Off Persistence System (HOPS) Overview

Hands-Off Persistence System (HOPS) is a novel approach to memory hierarchy design, focusing on volatile memory with minimal changes. It enables multiple copies of the same cacheline, handles cross-dependencies conservatively, and optimizes transaction processing epochs efficiently. The system architecture maintains correctness and coherence without frequent flushing, providing improved performance for various workloads.

Uploaded on Oct 05, 2024 | 0 Views

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

Hands Hands- -Off Persistence System Off Persistence System (HOPS) (HOPS) Swapnil Haria1, Sanketh Nalli1, Haris Volos2, Kim Keeton2, Mark D. Hill1, Mike M. Swift1 1 University of Wisconsin-Madison 2 Hewlett Packard Labs 1

WHISPER Analysis WHISPER Analysis HOPS Design HOPS Design 4% accesses to PM, 96% to DRAM Volatile memory hierarchy (almost) unchanged 5-50 epochs/transaction Order epochs without flushing Self-dependencies common Allows multiple copies of same cacheline Cross-dependencies rare Correct, conservative method based on coherence 2

Outline Outline 1.8 CLWB+Fence 1.6 1.4 PM Normalized Speedup 1.2 HEAD 1 0.8 ? 0.6 0.4 C A B 0.2 0 ctree hashmap echo nstore vacation average Evaluation Motivation HOPS Design 3

ACID Transactions (currently) ACID Transactions (currently) Acquire Lock 1 Prepare Log Entry N FLUSH EPOCH Mutate Data Structure1 N FLUSH EPOCH Commit Transaction FLUSH EPOCH Release Lock 4

Base System Base System CPU 0 CPU 1 Private L1 Private L1 Shared LLC DRAM Controller PM Controller Volatile Persistent 5

Base System: Flush Base System: Flush 1. Flush A 1. Flush A 2. Flush B CPU 1 CPU 0 A=1 Writeback A Flush ACK Long Latency PM Write DRAM Controller PM Controller Volatile Persistent 6

Outline Outline 1.8 CLWB+Fence 1.6 1.4 PM Normalized Speedup 1.2 HEAD 1 0.8 ? 0.6 0.4 C A B 0.2 0 ctree hashmap echo nstore vacation average Evaluation Motivation HOPS Design 7

Hands Hands- -off Persistence System (HOPS) off Persistence System (HOPS) Volatile memory hierarchy (almost) unchanged Order epochs without flushing Allows multiple copies of same cacheline Correct, conservative method for handling cross-dependencies 8

Base System + Persist Buffers Base System + Persist Buffers Base System Base System CPU CPU Persist Buffer Front End Persist Buffer Front End Private L1 Private L1 Shared LLC +Stores Loads Loads + Stores Persist Buffer Back End DRAM Controller PM Controller Volatile Persistent 9

Persist Buffers Persist Buffers Volatile buffers Front End (per-thread) Address, Ordering Info Back End (per-MC) Cacheline data Enqueue/Dequeue only Not fully-associative 10

Hands Hands- -off Persistence System (HOPS) off Persistence System (HOPS) Volatile memory hierarchy (almost) unchanged Order epochs without flushing Allows multiple copies of same cacheline Correct, conservative method for handling cross-dependencies 11

OFENCE: Ordering Fence OFENCE: Ordering Fence Orders stores preceding OFENCE before later stores OFENCE Thread 1 Thread 1 Volatile Memory Order ST A=1 ST B=2 Time Persistence Order ST A=1 ST B=2 Happens Before 12

Base System + Persist Buffers Base System + Persist Buffers CPU CPU Persist Buffer Front End Persist Buffer Front End Private L1 Private L1 Shared LLC Stores Loads + Stores Loads Persist Buffer Back End DRAM Controller PM Controller Volatile Persistent 13

Ordering Epochs without Flushing Ordering Epochs without Flushing 1. ST A = 1 2. ST B = 1 3. LD R1 = A 4. OFENCE 5. ST A = 2 CPU 1 Local TS 25 26 A = 1 A = 2 A = 2 26 B = 1 B = 1 25 A = 1 25 Persist Buffer L1 Cache 14

ACID Transactions in HOPS ACID Transactions in HOPS Acquire Lock Volatile Writes 1 Prepare Log Entry N Persistent Writes Mutate Data Structure1 N OFENCE Commit Transaction NOT DURABLE! Release Lock 15

ACID Transactions in HOPS ACID Transactions in HOPS Acquire Lock Volatile Writes 1 Prepare Log Entry N Persistent Writes Mutate Data Structure1 N OFENCE DFENCE Commit Transaction Release Lock 16

DFENCE: Durability Fence DFENCE: Durability Fence Makes the stores preceding DFENCE durable DFENCE Thread 1 Thread 1 Volatile Memory Order ST A=1 ST B=2 Time Happens Before Persistence Order ST A=1 ST B=2 17

Durability Durability is important too! is important too! 1. ST A = 1 2. ST B = 1 3. LD R1 = A 4. OFENCE 5. ST A = 2 CPU 1 N. DFENCE Local TS 26 A = 1 A = 2 B = 1 A = 2 26 Persist Buffer L1 Cache 18

Hands Hands- -off Persistence System (HOPS) off Persistence System (HOPS) Volatile memory hierarchy (almost) unchanged Order epochs without flushing Allows multiple copies of same cacheline Correct, conservative method for handling cross-dependencies 19

Preserving multiple copies of Preserving multiple copies of cachelines cachelines 1. ST A = 1 2. ST B = 1 3. LD R1 = A 4. OFENCE 5. ST A = 2 CPU 1 Local TS 26 A = 1 A = 2 A = 2 26 B = 1 B = 1 25 A = 1 25 Persist Buffer L1 Cache 20

Hands Hands- -off Persistence System (HOPS) off Persistence System (HOPS) Volatile memory hierarchy (almost) unchanged Orders epochs without flushing Allows multiple copies of same cacheline Correct, conservative method for handling cross-dependencies 21

Outline Outline 1.8 CLWB+Fence 1.6 1.4 PM Normalized Speedup 1.2 HEAD 1 0.8 ? 0.6 0.4 C A B 0.2 0 ctree hashmap echo nstore vacation average Evaluation Motivation HOPS Design 22

System Configuration System Configuration Evaluated using gem5 full-system mode with the Ruby memory model Parameter Setting CPU Cores 4 cores, OOO, 2Ghz L1 Caches private, 64 KB, Split I/D L2 Caches private, 2 MB DRAM 4GB, 40 cycles read/write latency PM 4GB, 160 cycles read/write latency Persist Buffers 64 entries 23

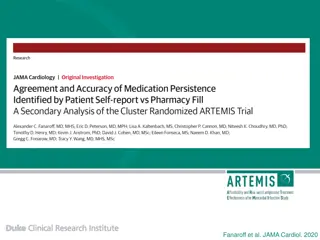

Performance Evaluation Performance Evaluation 1 Baseline, uses HOPS HOPS + Persistent Ideal performance, clwb + sfence Write Queue Baseline + Persistent Write Queue unsafe on crash 0.9 0.8 Normalized Runtime 0.7 (Lower is Better) 0.6 x86-64 (NVM) x86-64 (PWQ) 0.5 HOPS (NVM) HOPS (PWQ) 0.4 IDEAL (NON-CC) 0.3 0.2 0.1 0 echo ycsb redis ctree hashmapvacation average 24

WHISPER Analysis WHISPER Analysis HOPS Design HOPS Design 4% accesses to PM, 96% to DRAM Volatile memory hierarchy (almost) unchanged 5-50 epochs/transaction Order epochs without flushing Self-dependencies common Allows multiple copies of same cacheline Cross-dependencies rare Correct, conservative method based on coherence 25

Questions? Questions? Thanks! 26

BENCHWARMERS BENCHWARMERS 27

Handling Cross Dependencies Handling Cross Dependencies CPU 1 CPU 0 ST A = 4 Local TS 25 24 Local TS 14 0:25 A = 4 A = 1 3 L1 Cache L1 Cache 1 2 Directory A = 4 14 0:25 Persist Buffer 28

Comparison with DPO (Micro 16) Comparison with DPO (Micro 16) Parameter HOPS Ordering, Durability Buffered None DPO Ordering Buffered Primitives Conflicts Effect on Volatile accesses Fully associative PBs snooped on every coherence request Lazy (cumulative) updates of PB drain Works natively Scalable to multiple cores Global Broadcast on every PB drain Scalable to multiple MC Designed for one MC 29

Comparison with Efficient PB (Micro 15) Comparison with Efficient PB (Micro 15) Parameter HOPS Ordering, Durability Buffered Buffered 1 bit Efficient PBs Ordering Primitives Intra-thread Conflict Causes Synchronous Flush Buffered (upto 5) Inter-thread Conflict Cache modifications Proportional to number of cores, inflight epochs supported 30

Comparison with Non Comparison with Non- -Temporal Stores (x86) Temporal Stores (x86) Parameter HOPS Yes Yes, with fast OFENCE Yes, with DFENCE NT Stores Stores cached Cache copy invalidated Yes, with slower FENCEs Ordering Guarantees Durability Guarantees No 31

Linked List Insertion Linked List Insertion - - Naive Naive CACHE HEAD 1. Create Node 2. Update Node Pointer NODE C NODE A NODE B 3. Update Head Pointer CACHE WRITEBACK PM HEAD NODE C NODE A NODE B 32

Linked List Insertion Linked List Insertion - - Naive Naive CACHE HEAD NODE C NODE A NODE B Caches (volatile) wiped clean System Crash Main Memory inconsistent! PM HEAD ? NODE C NODE A NODE B 33

Linked List Insertion Linked List Insertion Crash Consistent Crash Consistent CACHE HEAD 1. Create Node Epoch 1 2. Update Node Pointer NODE C NODE A NODE B 3. FLUSH EPOCH 1 EXPLICIT WRITEBACK CACHE WRITEBACK Epoch 2 4. Update Head Pointer PM HEAD 5. FLUSH EPOCH 2 NODE C NODE A NODE B 34

Performance Evaluation Performance Evaluation 1 Baseline, uses clwb + sfence Baseline + Persistent Write Q HOPS HOPS + Persistent Write Q Ideal performance, incorrect on crash 0.9 0.8 Normalized Runtime 0.7 (Lower is Better) 0.6 x86-64 (NVM) x86-64 (PWQ) 0.5 HOPS (NVM) HOPS (PWQ) 0.4 IDEAL (NON-CC) 0.3 0.2 0.1 0 echo ycsb redis ctree hashmapvacation average 35

Proposed Use Proposed Use- -Cases Cases File Systems Existing FS (ext4) NVM-aware FS (PMFS, BPFS) Persistent Data stores Key-Value stores Relational databases Persistent Heap Various forms of caching Webserver/file/page caching Low-power data storage for IoT devices 36

Intel extensions for PM Intel extensions for PM CLWB - Cache line Write Back Write back cached line (if present in cache hierarchy), may retain clean copy 37

Evaluating Intel extensions Evaluating Intel extensions Crash-Consistency Sufficient to provide crash consistency, if used correctly Programmability Pushes burden of data movement onto programmer Performance Provides ordering and durability mixed in one 38

Evaluating Intel extensions Evaluating Intel extensions Crash-Consistency Sufficient to provide crash consistency, if used correctly Programmability Pushes burden of data movement onto programmer Complex ordering guarantees, expressed in imprecise prose Complex semantics, expressed in imprecise prose Makes the programmer worry about caches 39

Program 1. A = 1 2. B = 1 3. CLWB A 4. CLWB B CPU Coherent Cache Hierarchy Caches -> Memory Controller Queues A=1 B=1 Persistent Write Queue (PM Controller) A=0 A=0 B=0 B=0 Persistent Address Space 40

Intra Intra- -thread Dependency (Epoch) : Track thread Dependency (Epoch) : Track Local timestamp (TS) register maintained at L1 cache CPU 1 Local TS Indicates epoch TS of current (incomplete) epoch Local TS copied as part of PB entry for incoming PM stores Persist Buffer L1 Cache Local TS incremented on encountering persist barrier 43

Intra Intra- -thread Dependency (Epoch) : Track thread Dependency (Epoch) : Track Local timestamp (TS) register maintained at L1 cache CPU 1 Local TS 25 Indicates epoch TS of current (incomplete) epoch Local TS copied as part of PB entry for incoming PM stores Persist Buffer L1 Cache Local TS incremented on encountering persist barrier 44

Intra Intra- -thread Dependency (Epoch) : Track thread Dependency (Epoch) : Track ST A = 1 Local timestamp (TS) register maintained at L1 cache CPU 1 Local TS 25 Indicates epoch TS of current (incomplete) epoch A = 1 Local TS copied as part of PB entry for incoming PM stores A = 1 25 Persist Buffer L1 Cache Local TS incremented on encountering persist barrier 45

Intra Intra- -thread Dependency (Epoch) : Track thread Dependency (Epoch) : Track ST A = 1 Local timestamp (TS) register maintained at L1 cache ST B = 1 CPU 1 Local TS 25 Indicates epoch TS of current (incomplete) epoch A = 1 B = 1 B = 1 25 Local TS copied as part of PB entry for incoming PM stores A = 1 25 Persist Buffer L1 Cache Local TS incremented on encountering persist barrier 46

Intra Intra- -thread Dependency (Epoch) : Track thread Dependency (Epoch) : Track ST A = 1 Local timestamp (TS) register maintained at L1 cache ST B = 1 CPU 1 OFENCE Local TS 26 Indicates epoch TS of current (incomplete) epoch A = 1 B = 1 B = 1 25 Local TS copied as part of PB entry for incoming PM stores A = 1 25 Persist Buffer L1 Cache Local TS incremented on encountering persist barrier 47

Intra Intra- -thread Dependency (Epoch) : Track thread Dependency (Epoch) : Track ST A = 1 Local timestamp (TS) register maintained at L1 cache ST B = 1 CPU 1 OFENCE ST A = 2 Local TS 26 Indicates epoch TS of current (incomplete) epoch A = 2 A = 2 26 B = 1 B = 1 25 Local TS copied as part of PB entry for incoming PM stores A = 1 25 Persist Buffer L1 Cache Local TS incremented on encountering persist barrier 48

Intra Intra- -thread Dependency (Epoch) : Enforce thread Dependency (Epoch) : Enforce EXAMPLE TO BE DROPPED EXAMPLE TO BE DROPPED Persist Buffer Drain requests for all entries in epoch sent concurrently A = 2 26 B = 1 25 A = 1 25 ST B = 1 ST A = 1 Epoch entries drained after all drain ACKs received for previous epoch PM Controller PM Controller PM Region PM Region 49

Intra Intra- -thread Dependency (Epoch) : Enforce thread Dependency (Epoch) : Enforce Persist Buffer Drain requests for all entries in epoch sent concurrently A = 2 26 B = 1 25 A = 1 25 ACK ACK Epoch entries drained after all drain ACKs received for previous epoch PM Controller PM Controller B = 1 A = 1 PM Region PM Region 50