Exploring Word Embeddings and Syntax Encoding

Word embeddings are widely used in natural language processing, but how much do they encode about syntax? In this talk, Jacob Andreas and Dan Klein of UC Berkeley test three hypotheses about how embeddings might help a parser: vocabulary expansion (better handling of out-of-vocabulary words), statistic pooling (better handling of medium-frequency words), and embedding structure (directly useful features such as tense and transitivity). They conclude that embeddings provide no apparent benefit to a state-of-the-art parser on any of the three.

Presentation Transcript

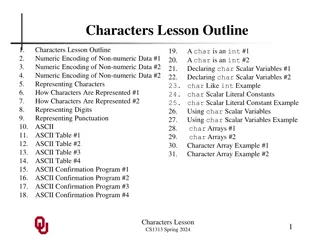

How much do word embeddings encode about syntax? Jacob Andreas and Dan Klein, UC Berkeley

Everybody loves word embeddings (example words shown: few, most, that, the, a, each, every, this) [Collobert 2011, Mikolov 2013, Freitag 2004, Schuetze 1995, Turian 2010]

What might embeddings bring? "Cathleen complained about the magazine's shoddy editorial quality." (annotated words: Mary; average, executive)

Three hypotheses: (1) Vocabulary expansion (good for OOV words), e.g. Cathleen, Mary; (2) Statistic pooling (good for medium-frequency words), e.g. average, editorial, executive; (3) Embedding structure (good for features), e.g. tense, transitivity

Vocabulary expansion hypothesis: Embeddings help with the handling of out-of-vocabulary words (examples: Cathleen, Mary)

Vocabulary expansion (words shown: Cathleen, yellow, Mary, John, enormous, Pierre, hungry)

Vocabulary expansion: "Cathleen complained about the magazine's shoddy editorial quality." (annotation on Cathleen: Mary; words shown: Cathleen, yellow, Mary, John, enormous, Pierre, hungry)
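One plausible reading of the vocabulary expansion slides is that a word the parser never saw in training (Cathleen) is handled as if it were its nearest neighbor in embedding space that the parser did see (Mary). The sketch below illustrates that reading only. The 2-D vectors, the `TRAINING_VOCAB` set, and the `expand` helper are all invented for illustration and are not taken from the talk or its released code.

```python
import math

# Toy 2-D embeddings, invented for illustration only.
EMBEDDINGS = {
    "Mary": (0.9, 0.1),
    "John": (0.8, 0.2),
    "Pierre": (0.7, 0.3),
    "yellow": (0.1, 0.9),
    "enormous": (0.2, 0.8),
    "hungry": (0.3, 0.7),
    "Cathleen": (0.88, 0.12),
}

# Hypothetical set of words seen in the parser's training data.
TRAINING_VOCAB = {"Mary", "John", "Pierre", "yellow", "enormous", "hungry"}


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def expand(word):
    """Return the word itself if known, else its nearest known neighbor.

    Words without an embedding are returned unchanged, so the parser's
    ordinary unknown-word handling would still apply to them.
    """
    if word in TRAINING_VOCAB or word not in EMBEDDINGS:
        return word
    target = EMBEDDINGS[word]
    return max(TRAINING_VOCAB, key=lambda w: cosine(EMBEDDINGS[w], target))
```

With these toy vectors, `expand("Cathleen")` resolves to `"Mary"`, while a known word such as `"yellow"` is left alone.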

Vocab. expansion results: +OOV 91.22, Baseline 91.13

Vocab. expansion results (300 sentences): +OOV 72.20, Baseline 71.88

Statistic pooling hypothesis: Embeddings help with the handling of medium-frequency words (examples: average, editorial, executive)

Statistic pooling (diagram: the words editorial, executive, giant, kind, and average, each labeled with an observed tag set: {NN}, {JJ}, or {NN, JJ})

Statistic pooling (diagram after pooling: editorial, executive, giant, kind, and average are each labeled with both tags, NN and JJ)

Statistic pooling (same diagram with the example token editorial tagged NN; tag sets shown: {NN}, {JJ}, {NN, JJ}; words shown: editorial, executive, giant, kind, average)
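The pooling slides suggest that a word with sparse tag statistics can borrow evidence from its embedding neighbors, so a word seen only as NN could also pick up some mass for JJ. A minimal sketch of that idea follows. The counts, the neighbor list, the `weight` parameter, and the `pooled_counts` helper are hypothetical and chosen only to make the mechanism concrete. The talk does not specify how the pooling was actually done.

```python
from collections import Counter

# Hypothetical tag counts from a small treebank.
TAG_COUNTS = {
    "editorial": Counter({"NN": 3}),
    "executive": Counter({"NN": 5, "JJ": 4}),
    "average": Counter({"NN": 2, "JJ": 6}),
}

# Hypothetical embedding neighbors (in practice these would come from
# a nearest-neighbor search in embedding space).
NEIGHBORS = {"editorial": ["executive", "average"]}


def pooled_counts(word, weight=0.5):
    """Add down-weighted tag counts from a word's neighbors to its own."""
    pooled = Counter()
    for tag, n in TAG_COUNTS.get(word, Counter()).items():
        pooled[tag] += n
    for neighbor in NEIGHBORS.get(word, []):
        for tag, n in TAG_COUNTS.get(neighbor, Counter()).items():
            pooled[tag] += weight * n
    return pooled
```

Here `pooled_counts("editorial")` contains both NN and JJ even though the raw counts for editorial contain only NN. The `weight` controls how much the neighbors are trusted relative to the word's own statistics.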

Statistic pooling results: +Pooling 91.11, Baseline 91.13

Statistic pooling results (300 sentences): +Pooling 72.21, Baseline 71.88

Embedding structure hypothesis: The organization of the embedding space directly encodes useful features (examples: tense, transitivity)

Embedding structure (diagram: vanishing, dining, dined, vanished, devoured, devouring, assassinated, and assassinating arranged along two axes labeled tense and transitivity; example token: dined tagged VBD) [Huang 2011]
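If directions in embedding space really do line up with properties such as tense and transitivity, one simple way to expose them to a lexicon is to discretize each coordinate into an indicator feature. The sketch below shows that idea under strong assumptions: the 2-D vectors are invented, the axis interpretations are hypothetical, and `embedding_features` is an illustrative helper rather than the feature set used in the talk.

```python
# Hypothetical 2-D embeddings. Axis 0 is imagined to track tense and
# axis 1 transitivity; real embeddings are not this clean.
EMBEDDINGS = {
    "dined": (-0.8, -0.6),
    "dining": (0.7, -0.5),
    "devoured": (-0.9, 0.8),
    "devouring": (0.6, 0.7),
}


def embedding_features(word, threshold=0.0):
    """Turn each embedding coordinate into a sign-based indicator feature."""
    vec = EMBEDDINGS[word]
    return [
        f"dim{i}={'+' if x > threshold else '-'}"
        for i, x in enumerate(vec)
    ]
```

With these toy vectors, dined and devoured share their `dim0` feature, and dined and dining share their `dim1` feature, which is the kind of sharing the hypothesis relies on.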

Embedding structure results: +Features 91.08, Baseline 91.13

Embedding structure results (300 sentences): +Features 70.32, Baseline 71.88

To summarize: chart comparing Baseline, +OOV, +Pooling, and +Features, including the 300-sentence setting
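To make the size of the effects concrete, the snippet below computes each variant's difference from the baseline using the scores that appear on the individual results slides. Two assumptions are baked in: the Baseline is taken to be the value repeated across slides (91.13 and 71.88), and the charts without a "(300 sentences)" label are taken to use all of the training data, which the key `"all"` stands for. The transcript does not name the metric, so none is named here.

```python
# Scores read off the results slides. The "all" key is an assumed label
# for the charts that are not marked "(300 sentences)".
BASELINE = {"all": 91.13, "300": 71.88}
VARIANTS = {
    "+OOV": {"all": 91.22, "300": 72.20},
    "+Pooling": {"all": 91.11, "300": 72.21},
    "+Features": {"all": 91.08, "300": 70.32},
}


def deltas():
    """Return each variant's score minus the baseline, per setting."""
    return {
        name: {
            setting: round(score - BASELINE[setting], 2)
            for setting, score in scores.items()
        }
        for name, scores in VARIANTS.items()
    }


if __name__ == "__main__":
    for name, diff in deltas().items():
        print(name, diff)
```

Every difference is a fraction of a point except +Features with 300 sentences, which falls about a point and a half below the baseline.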

Combined results: +OOV +Pooling vs. Baseline (values shown: 90.70 and 90.11)

Combined results (300 sentences): +OOV +Pooling 72.21, Baseline 71.88

What about: domain adaptation? (no significant gain); French? (no significant gain); other kinds of embeddings? (no significant gain)

Why didn't it work? Context clues often provide enough information to reason around words with incomplete or incorrect statistics. The parser already has robust OOV and small-count models. Sometimes help from embeddings is worse than nothing (words shown: bifurcate, homered, tuning, Soap, Paschi, unrecognized).

What about other parsers? Dependency parsers (continuous repr. as syntactic abstraction); neural networks (continuous repr. as structural requirement) [Henderson 2004, Socher 2013, Koo 2008, Bansal 2014]

Conclusion: Embeddings provide no apparent benefit to a state-of-the-art parser for OOV handling, parameter pooling, or lexicon features. Code online at http://cs.berkeley.edu/~jda