Enhancing Memory Bandwidth with Transparent Memory Compression

This research focuses on enabling transparent memory compression for commodity memory systems to address the growing demand for memory bandwidth. By implementing hardware compression without relying on operating system support, the goal is to optimize memory capacity and bandwidth efficiently. The approach aims to leverage memory compression to free up space and enhance overall system performance without requiring significant changes in the operating system. The ultimate objective is to achieve practical transparent memory compression that maximizes bandwidth benefits with minimal overhead, targeting robust performance with commodity memory.

Uploaded on Nov 20, 2024 | 1 Views


Presentation Transcript

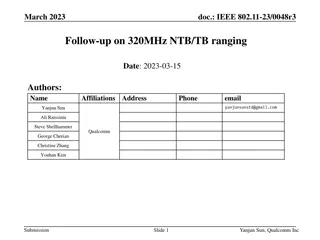

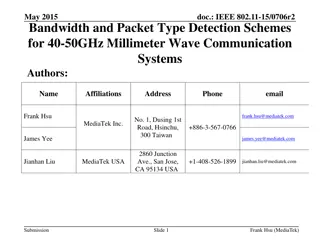

Enabling Transparent Memory-Compression for Commodity Memory Systems. HPCA 2019. Vinson Young*, Sanjay Kariyappa*, Moinuddin Qureshi. (*These authors contributed equally to this work.) 1

MOORE'S LAW HITS BANDWIDTH WALL. [Figure labels: Channel, On-Chip, Bandwidth demand.] Need practical solutions for scaling memory bandwidth. 2

MEM COMPRESSION FOR CAPACITY AND BANDWIDTH. [Figure labels: Operating System, Data A, Data B, OS Mem Capacity, Physical Capacity.] Compression frees up space to store more data, which improves capacity. But the memory capacity fluctuates, so OS support is needed to utilize it. Capacity benefits require OS support (multi-vendor), which hinders adoption. Instead, we focus solely on improving memory bandwidth with Transparent Memory Compression: HW compression for bandwidth, without the OS. 3

TRANSPARENT MEMORY COMPRESSION. TMC: enable bandwidth benefits without OS support (e.g., Memzip*). [Figure labels: Data A; Line A (top); Line A (bottom); Line B (compress); metadata (M); Channel. Checklist: Bandwidth Benefits, OS Transparent, Commodity Memory, Negligible Metadata Overhead.] Compressed lines can be read with half the bandwidth. However, current TMC proposals require non-commodity memory and significant metadata overhead. *Ali Shafiee, Meysam Taassori, Rajeev Balasubramonian, and Al Davis. Memzip, HPCA 2014. 4

GOAL: PRACTICAL TRANSPARENT MEM COMPRESSION. Checklist: Bandwidth Benefits, OS Transparent, Commodity Memory, Negligible Metadata Overhead, Robust Performance. Our goal is to enable Practical TMC to obtain bandwidth benefits without the costs of compression. It should be OS transparent, use commodity memory, have minimal metadata access, and be robust. 5

OVERVIEW. Background; Proposal; Address Mapping; In-line Metadata; Location Prediction; Results; Dynamic Policy. (Useful for Commodity Memory) 6

PROBLEM OF TMC ON COMMODITY MEMORIES. Interface: conventional systems transfer 64B on each access. [Figure labels: Compressed Commodity Memory, Line A, Line B, Data A, Channel.] There are no partial-line transfers in commodity memory, so TMC on commodity memory does not improve memory bandwidth. 7

ENABLING TMC ON COMMODITY MEMORIES. Approach: relocate compressed lines together into one location. [Figure labels: Compressed Commodity Memory, Line A, Line B, Data A, Channel.] Retrieve two lines per access and store both into the L3. If both lines are useful, effective bandwidth is 2x. The access length is unmodified. Pair-wise remapping compression enables 2x effective bandwidth and works on commodity DRAM. 8
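
The pair-wise remapping on this slide can be sketched as a small placement rule. This is an illustrative model only: the function names, the slot numbering, and the use of a plain 64B budget (no marker space yet) are assumptions, not details taken from the talk.

```python
LINE_BYTES = 64  # one access always moves a full 64B line


def place_pair(size_a: int, size_b: int) -> dict:
    """Return the slot (0 or 1) each line of a pair occupies after a write.

    size_a and size_b are compressed sizes in bytes (hypothetical inputs;
    the compressor itself is out of scope for this sketch).
    """
    if size_a + size_b <= LINE_BYTES:
        # Both lines fit in one 64B location, so they are stored together.
        return {"A": 0, "B": 0}
    # Otherwise each line stays in its own location.
    return {"A": 0, "B": 1}


def lines_per_access(placement: dict) -> int:
    """Lines delivered by a single 64B access to the slot that holds A."""
    return 2 if placement["A"] == placement["B"] else 1


packed = place_pair(30, 34)
unpacked = place_pair(40, 30)
print(lines_per_access(packed), lines_per_access(unpacked))
```

When both lines share a slot, one unmodified 64B access returns two lines, which is where the 2x effective bandwidth comes from if both lines turn out to be useful.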

OVERVIEW. Background; Proposal; Address Mapping; In-line Metadata; Location Prediction; Results; Dynamic Policy. (Targeting Metadata Overhead) 9

UNDERSTANDING METADATA LOOKUP. The location of Line B changes depending on compressibility. Read request for Line B, uncompressed case (M=0): read the metadata, which indicates the uncompressed mapping, then read location 2. Double access to read one line. Compressed case (M=1): read the metadata, which indicates the compressed mapping, then read location 1, which holds Line A and Line B. Double access to read two lines. Prior approaches rely on reading metadata first to learn the mapping, which costs bandwidth and latency. 10

TMC WITH METADATA (+ METADATA CACHE). Metadata lookup limits performance (41% slowdown). [Figure: speedup w.r.t. uncompressed memory, y-axis 0.4 to 1.8, for TMC (metadata) and Ideal on GAP (Graph), MIX, and SPEC; annotated "Slowdown on average".] TMC suffers from constantly accessing metadata. Evaluated on an 8-core system with 2 channels of DRAM. 11

INSIGHT: CAN WE STORE METADATA IN LINE? Read request for Line B, compressed case. Instead of reading the metadata (which indicates the compressed mapping) and then reading the line, read the line and its metadata together: single access, avoiding the metadata lookup. Storing metadata with the line enables single-access memory reads. 12

IN-LINE COMPRESSION-STATUS MARKER. Two lines are compressible together if their combined size is <64B. Often, compressed lines don't use all the space, so 4B can be spared cheaply. [Figure: % of compressible lines, y-axis 0 to 100, for Double 64B vs. Double 60B.] Insight: we can repurpose space inside the compressed line to store a small 4-byte marker (e.g., 0xdeadbeef) that denotes compressibility. [Figure labels: 4-byte marker 0xdeadbeef, Line A, Line B, Invalid marker.] On reading a line with the marker, we know it is a compressed line. The marker indicates compressibility without a metadata lookup. 13
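
A minimal sketch of the in-line marker idea follows. The 4-byte marker value 0xdeadbeef and the 60B payload budget come from the slide; placing the marker in the last four bytes, the zero padding, and the function names are assumptions made for illustration.

```python
LINE_BYTES = 64
MARKER = bytes.fromhex("deadbeef")          # marker value shown on the slide
PAYLOAD_BUDGET = LINE_BYTES - len(MARKER)   # 60B left for the two compressed lines


def pack_pair(comp_a: bytes, comp_b: bytes):
    """Pack two already-compressed lines plus the marker into one 64B line.

    Returns None when the pair does not fit in the 60B budget.
    Assumption: the marker sits in the last 4 bytes of the line.
    """
    if len(comp_a) + len(comp_b) > PAYLOAD_BUDGET:
        return None
    body = (comp_a + comp_b).ljust(PAYLOAD_BUDGET, b"\x00")
    return body + MARKER


def has_marker(line: bytes) -> bool:
    """True when a 64B line read from memory carries the compression marker."""
    return line[-len(MARKER):] == MARKER


packed = pack_pair(b"a" * 30, b"b" * 30)
too_big = pack_pair(b"a" * 31, b"b" * 30)
```

Here `has_marker(packed)` is true and `too_big` is `None`; how the two payloads are split again on a read depends on the compression encoding and is not modeled.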

MARKER COLLISION. But an uncompressed line could coincidentally store the marker value (we call this a marker collision): a 1 in 4 billion chance. Solution: track lines that coincidentally store the marker value in a small SRAM structure, the Marker Collision Table (a 16-entry table of colliding addresses). A small collision table (64B) is sufficient: the average time to collision-table overflow is 10 million years. See paper for more detailed collision analysis. 14
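
The collision table can be modeled as a small bounded set of addresses. The 16-entry capacity is from the slide; the class name, the method names, the marker position (last four bytes), and raising an exception on overflow are assumptions for this sketch.

```python
MARKER = bytes.fromhex("deadbeef")


class CollisionTable:
    """Tracks addresses of uncompressed lines that happen to end in the marker."""

    def __init__(self, capacity: int = 16):
        self.capacity = capacity
        self.addresses = set()

    def on_uncompressed_write(self, addr: int, line: bytes) -> None:
        """Record or clear a collision when an uncompressed line is written."""
        if line[-4:] == MARKER:
            if addr not in self.addresses and len(self.addresses) >= self.capacity:
                # Hypothetical handling; real hardware would need a recovery path.
                raise OverflowError("collision table full")
            self.addresses.add(addr)
        else:
            self.addresses.discard(addr)

    def is_compressed(self, addr: int, line: bytes) -> bool:
        """A line is treated as compressed only if it has the marker and is not a known collision."""
        return line[-4:] == MARKER and addr not in self.addresses


table = CollisionTable()
unlucky = bytes(60) + MARKER          # uncompressed data that ends in the marker
table.on_uncompressed_write(0x40, unlucky)
```

With this state, `table.is_compressed(0x40, unlucky)` is false, while the same bytes at an untracked address would be interpreted as a compressed line.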

METADATA WITH LINE, HOW TO LOCATE? But the marker is stored with the line, so we don't know where to access. To find Line B: if uncompressed, Line B is at location 2 (single access); if compressed, Line B is at location 1 together with Line A and the marker 0xdeadbeef, and location 2 holds an invalid marker (single access). Reading all possible locations would waste bandwidth. Solution: predict compressibility and location, to enable reading and interpreting the line in one access. 15

OVERVIEW. Background; Proposal; Address Mapping; In-line Metadata; Location Prediction; Results; Dynamic Policy. (Targeting Metadata Overhead) 16

PAGE-BASED LINE LOCATION PREDICTOR. Generally, lines within a page have similar compressibility. The page address is hashed to index a table that stores the last-seen compressibility (M): M = 1 predicts compressed indexing, M = 0 predicts base indexing. The page-based predictor achieves 98% location-prediction accuracy by exploiting spatial locality in compressibility. 17
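
A last-compressibility predictor indexed by a hash of the page address might look like the sketch below. The table size (1024 one-bit entries), the 4KB page size, and the XOR-fold hash are assumptions chosen for illustration; the slide only states that the page address is hashed and the last-seen compressibility is stored.

```python
class PageLocationPredictor:
    """Predicts whether a line is stored at its compressed or base location."""

    def __init__(self, entries: int = 1024, page_bytes: int = 4096):
        self.entries = entries
        self.page_bytes = page_bytes
        self.last_seen = [0] * entries   # one bit per entry: 1 = compressed

    def _index(self, addr: int) -> int:
        page = addr // self.page_bytes
        # Hypothetical hash: XOR-fold the page number into the table range.
        return (page ^ (page >> 10)) % self.entries

    def predict_compressed(self, addr: int) -> bool:
        return self.last_seen[self._index(addr)] == 1

    def update(self, addr: int, was_compressed: bool) -> None:
        """Store the compressibility observed on the most recent access to this page."""
        self.last_seen[self._index(addr)] = 1 if was_compressed else 0


predictor = PageLocationPredictor()
predictor.update(0x1000, was_compressed=True)
same_page = predictor.predict_compressed(0x1FC0)   # another line in page 1
other_page = predictor.predict_compressed(0x2000)  # a line in page 2
```

After one compressed line is observed in a page, other lines in that page are predicted compressed (`same_page` is true), while an untouched page keeps the base prediction (`other_page` is false).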

VERIFYING PREDICTIONS WITH MARKER. Correct prediction: on an access to Line B, the location predictor says compressed; location 1 holds the marker 0xdeadbeef with Line A and Line B, so Line B is found. Single access, avoiding the metadata lookup. Incorrect prediction: Line B is not found at the predicted location, so a second access is needed. Double access, with some bandwidth overhead. Fortunately, mispredictions are rare. With in-line metadata and location prediction, Practical TMC avoids most of the metadata overhead. 18
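
The verify-after-predict read path can be sketched as follows. This is a simplified model under several assumptions: memory is a dict from slot number to 64B line, Line B's home slot is the pair slot plus one, the invalid-marker value `0x0badf00d` is made up (the slide does not give it), markers sit in the last four bytes, and marker collisions are ignored.

```python
MARKER = bytes.fromhex("deadbeef")    # compressed-line marker from the slide
INVALID = bytes.fromhex("0badf00d")   # hypothetical value for the invalid marker


def read_line_b(mem: dict, pair_slot: int, predict_compressed: bool):
    """Return (slot holding Line B, number of DRAM accesses used)."""
    home_slot = pair_slot + 1
    first = pair_slot if predict_compressed else home_slot
    tail = mem[first][-4:]            # first access
    if first == pair_slot:
        if tail == MARKER:
            return pair_slot, 1       # correct prediction: A and B arrive together
        return home_slot, 2           # mispredicted: B is still at its home slot
    if tail == INVALID:
        return pair_slot, 2           # mispredicted: B was relocated next to A
    return home_slot, 1               # correct prediction: B is uncompressed


compressed_mem = {0: bytes(60) + MARKER, 1: bytes(60) + INVALID}
plain_mem = {0: bytes(64), 1: bytes(64)}
```

A correct prediction costs one access in either layout, for example `read_line_b(compressed_mem, 0, True)` gives `(0, 1)`, while a wrong one such as `read_line_b(compressed_mem, 0, False)` gives `(0, 2)`.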

OVERVIEW. Background; Proposal; Address Mapping; In-line Metadata; Location Prediction; Results; Dynamic Policy. 19

METHODOLOGY. CPU: 3.2GHz, 4-wide out-of-order cores; 8 cores; 8MB shared last-level cache. Compression: FPC + BDI. Also supports 4-to-1 compression (see paper). 20

METHODOLOGY. Commodity DRAM: capacity 16GB; bus DDR 1.6GHz, 64-bit; 2 channels; bandwidth 25 GBps; latency 35ns. Other sensitivities in paper. 21

TMC AND PRACTICAL TMC PERFORMANCE. [Figure: speedup w.r.t. uncompressed memory, y-axis 0.4 to 1.8, for TMC (metadata) and PTMC on GAP (Graph), MIX, and SPEC.] Metadata lookup limits TMC performance. In-line metadata plus location prediction eliminates the metadata lookup. Significant location mispredictions waste bandwidth and latency. PTMC eliminates most metadata lookups to enable speedup. Evaluated on an 8-core system with 2 channels of DRAM. See paper for more workloads. 22

OVERVIEW. Background; Proposal; Address Mapping; In-line Metadata; Location Prediction; Results; Dynamic Policy. (Enabling Robust Performance) 23

BANDWIDTH BENEFITS/COSTS OF COMPRESSION. Benefits: (1) useful prefetch: 2 useful lines with 1 access. Costs: (2) location misprediction: 1 useful line with 2 accesses; (3) relocating lines when compressibility changes. Compression should be disabled when costs outweigh benefits. 24

DYNAMIC PTMC IMPLEMENTATION. [Figure: a sampled set (Set 0) always compresses; benefits increment a utility counter and costs decrement it, with the counter drawn between 0 and 4096; the policy (enable compression or disable compaction) is enforced on the other sets.] The utility counter observes whether the cost of compression is greater than the benefit. If the cost is greater, Dynamic-PTMC disables compression. Can extend to multi-core with per-thread counters. Note: disabling compaction for metadata-based approaches does not reduce metadata lookups. 25
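
The utility counter can be sketched as a saturating counter. The 0 to 4096 range is read off the slide; starting at the midpoint, the unit increments, and using the midpoint as the enable threshold are assumptions, since the slide does not give the exact policy.

```python
class UtilityCounter:
    """Saturating counter fed by events observed on the always-compress sampled sets."""

    def __init__(self, max_value: int = 4096):
        self.max_value = max_value
        self.value = max_value // 2          # assumed starting point

    def benefit(self) -> None:
        """Called when compression helped, e.g. a useful prefetch of the paired line."""
        self.value = min(self.max_value, self.value + 1)

    def cost(self) -> None:
        """Called on a location misprediction or a relocation caused by a compressibility change."""
        self.value = max(0, self.value - 1)

    def compression_enabled(self) -> bool:
        # Assumed threshold: keep compressing while benefits have not fallen behind costs.
        return self.value >= self.max_value // 2


counter = UtilityCounter()
for _ in range(10):
    counter.cost()
disabled_after_costs = not counter.compression_enabled()
for _ in range(20):
    counter.benefit()
enabled_after_benefits = counter.compression_enabled()
```

Because only the sampled sets feed the counter, the remaining sets can stop compressing without losing the ability to detect when compression becomes profitable again.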

DYNAMIC PTMC PERFORMANCE. Dynamic-PTMC disables compression when it is harmful (e.g., low location-prediction accuracy). [Figure: speedup w.r.t. uncompressed memory, y-axis 0.4 to 1.8, for PTMC and Dynamic-PTMC on GAP (Graph), MIX, and SPEC.] Dynamic-PTMC ensures robust performance (no slowdown across all workloads tested). 26

HARDWARE COST OF PROPOSED DYNAMIC-PTMC. Memory controller modifications: compression/decompression logic and additional SRAM storage in the controller. SRAM storage: Markers 72B; Collision Table 64B; Location Predictor 128B; Dynamic-PTMC counter 12B; total storage 276B. Dynamic-PTMC enables robust speedup with minimal cost (276B SRAM, single-point modification). 27

PRACTICAL TRANSPARENT COMPRESSED MEM. Checklist: Bandwidth Benefits, OS Transparent, Commodity Memory, Negligible Metadata Lookup, Robust Performance. Techniques: Modified Mapping, In-line Marker (0xdeadbeef, invalid marker), Location Predictor, Dynamic Solution. Thank you! 28

ADDITIONAL SLIDES 29

SECURITY OF MARKERS. Marker collisions occur when the stored data matches the marker. We use 32-bit markers, which are created per line with a cryptographic collision-resistant hash. There is a 1/(2^32) chance of a line coincidentally storing the same marker value. On average, the memory space has one marker collision. We provision storage for 16 of these exceptions, and overflow is unlikely (> 10 million years per overflow event, assuming all of memory is written each nanosecond). On overflow, we can change the marker and recompress memory. 30
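
One way to picture per-line 32-bit markers is a keyed hash of the line address truncated to four bytes. The slide does not say which hash or which inputs are used, so the choice of BLAKE2b, the secret key, and hashing the line address are all assumptions in this sketch.

```python
import hashlib

SECRET_KEY = b"hypothetical-controller-key"   # assumed per-boot secret


def marker_for_line(line_addr: int, key: bytes = SECRET_KEY) -> bytes:
    """Derive a 4-byte marker for one line from its address and a secret key."""
    addr_bytes = line_addr.to_bytes(8, "little")
    return hashlib.blake2b(addr_bytes, digest_size=4, key=key).digest()


marker = marker_for_line(0x1234)
```

Under this model, changing the key changes the marker expected at every line, which is one way to read the slide's recovery step of changing the marker and recompressing memory after a table overflow.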

DYNAMIC SOLUTION ON PRIOR METADATA METHODS. Prior approach: the major cost is metadata access, which occurs even for incompressible lines. Even if you stop actively compacting lines, you still need to read metadata (until all possibly compressed lines are cleaned from memory). In contrast, Dynamic-PTMC can avoid compression costs by simply choosing not to actively compress data. Lines will be at their uncompressed index, and their location will be easily predicted. 31

LOCATION PREDICTION ACCURACY VS. METADATA CACHE HIT-RATE. 72% metadata-cache hit rate vs. 98% location-prediction accuracy. Prior approaches pay a high bandwidth overhead for metadata. 32
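
A back-of-the-envelope comparison of the two rates, assuming every metadata-cache miss and every location misprediction costs exactly one extra DRAM access (a simplification that is not stated on the slide):

```python
def accesses_per_read(first_try_success_rate: float) -> float:
    """Expected DRAM accesses per demand read when each failure adds one access."""
    return 1.0 + (1.0 - first_try_success_rate)


metadata_cache = accesses_per_read(0.72)   # 72% metadata-cache hit rate
location_pred = accesses_per_read(0.98)    # 98% location-prediction accuracy
print(f"{metadata_cache:.2f} vs {location_pred:.2f}")
```

Under that assumption the metadata-cache design issues about 1.28 accesses per read, against about 1.02 with location prediction.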

ENABLING 4-TO-1 COMPRESSION. On write: reorganize compressed lines together in memory, restricted to 3 possible mappings (4-to-1, 2-to-1, uncompressed). [Figure: possible locations of lines A, B, C, D when uncompressed, 2:1 compressed, and 4:1 compressed; B has two possible locations.] On read: lines have 1-3 possible locations and compression statuses. The page-based last-compressibility line location predictor is still effective. An additional 4-to-1 marker value is needed. 33
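
The candidate locations per line can be enumerated with a small helper. The specific layout is an assumption inferred from the slide's description: in a group of four lines (A=0 through D=3), 2:1 compression packs (A, B) into slot 0 and (C, D) into slot 2, and 4:1 compression packs all four into slot 0.

```python
MODES = ("uncompressed", "2:1", "4:1")


def slot_for(index: int, mode: str) -> int:
    """Slot within a 4-line group where line `index` lives under a given mapping."""
    if mode == "uncompressed":
        return index
    if mode == "2:1":
        return index & ~1      # pairs share the even slot: (0, 1) -> 0, (2, 3) -> 2
    if mode == "4:1":
        return 0               # all four lines share slot 0
    raise ValueError(f"unknown mode: {mode}")


def candidate_slots(index: int) -> list:
    """Distinct slots a read of line `index` may have to consider."""
    return sorted({slot_for(index, mode) for mode in MODES})


candidates = {name: candidate_slots(i) for i, name in enumerate("ABCD")}
print(candidates)
```

With this layout A has one candidate slot, B and C have two, and D has three, which matches the slide's "1-3 possible locations" and "B has two possible locations".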

INVERT LINE ON COLLISION: ONLY COMPRESSED LINES STORE MARKER. [Figure: marker x44444444. A line to install at memory address A is checked for a marker collision. If there is none, it is installed as is. On a collision, the line x0...44444444 is inverted and stored as x1...BBBBBBBB, and the Line Inversion Table (LIT) is updated with A.] Uncompressed lines that store the marker value are kept in inverted form, so only compressed lines have the marker. (This reduces collision-table checks for compressed lines.) 34
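
The invert-on-collision scheme can be sketched as below, assuming that inversion means the bitwise complement of the whole line (the slide's 44444444 to BBBBBBBB tail is consistent with that), that the marker occupies the last four bytes, and that the Line Inversion Table is a plain set of addresses. The function names are illustrative.

```python
MARKER = bytes.fromhex("44444444")   # example marker value from the slide


def invert(line: bytes) -> bytes:
    """Bitwise complement of every byte in the line."""
    return bytes(b ^ 0xFF for b in line)


def install_uncompressed(addr: int, line: bytes, lit: set) -> bytes:
    """Return the bytes to store for an uncompressed line, updating the LIT."""
    if line[-4:] == MARKER:
        lit.add(addr)            # remember that this address holds an inverted line
        return invert(line)
    lit.discard(addr)
    return line                  # no collision: install as is


def load_uncompressed(addr: int, stored: bytes, lit: set) -> bytes:
    """Undo the inversion for addresses recorded in the LIT."""
    return invert(stored) if addr in lit else stored


lit = set()
original = bytes(60) + MARKER
stored = install_uncompressed(7, original, lit)
restored = load_uncompressed(7, stored, lit)
```

After installation the stored line ends in `BBBBBBBB` rather than the marker, so a line read from memory that does carry the marker can be treated as compressed without consulting the table.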