Discovering Parallelizing Approximation Framework

Explore a remarkable framework, PANDORA, by Greg Stitt from the University of Florida, supporting approximate computing to enhance performance and energy efficiency in the face of transistor limitations. Learn about the potential of approximate computing, its open questions, and how PANDORA automates the discovery of parallelizing approximations for improved code execution.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. Download presentation by click this link. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

E N D

Presentation Transcript

PANDORA: A Parallelizing Approximation- Discovery Framework Greg Stitt Assoc. Professor of ECE University of Florida *This material is based upon work supported by the National Science Foundation under Grant Nos. CNS- 1149285, CNS-1718033, and CCF-1909244. UF ECE Big Ideas Seminar Sep 3th2020

Introduction New technologies enabled by faster computers Historically, faster computers result primarily from smaller transistors Scary time for computing Extra transistors providing fewer benefits Moore s Law slowing down, Dennard scaling has ended Increasingly problematic leakage power Increase in dark silicon Reaching physical limits How to improve performance and energy without more and/or faster transistors? Current trend is towards heterogeneous accelerators (GPUs, FPGAs) Helps, but these accelerators suffer from same issues What else can be done? 2

Approximate Computing Crazy idea: what if we eliminate the assumption that application output has to be correct? Instead focus on good-enough output How much can performance/energy be improved? Approximate computing Emerging area Attractive tradeoffs between performance/energy and error Potential solution to transistor bottlenecks 3

Approximate Computing Open questions When is approximation safe? How much approximation can be tolerated? How to create approximations? Mainstream applications already approximate Just not thought of that way Examples: Any application using real numbers Simulation of continuous processes (e.g. scientific computing) Machine learning 4

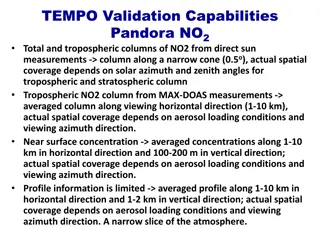

PANDORA Parallelizing sequential code a longtime compiler goal Can approximation unlock more parallelism? Yes, previous work transforms existing code to violate dependencies Provides attractive tradeoffs between error and performance Transforming existing code provides limited improvements Effective approximation can require different algorithms and/or functions Problem: manually creating approximations is difficult PANDORA automatically discovers parallelizing approximations Independent of original code Independent of language Independent of architecture Supports multiple optimization goals and constraints 5

PANDORA Overview Approximations Samples Original Function y=c0*x2 y y=|c0*x| y=sin(x - /2)+1 y y y 1 1 1 1 x x x x - /2 0 /2 - /2 0 /2 - /2 0 /2 - /2 0 /2 2) Discover more efficient functions that nearly coincide with samples 1) Replace function with samples Idea: infinite number of functions that nearly coincide with samples Goal: discover one that is more parallel or efficient than original Primary research challenge: how to discover efficient functions that coincide with samples? 6

Symbolic Regression Definition: search space of all mathematical expressions to discover function that bests fits data i.e. find function that minimizes error PANDORA: perform symbolic regression with additional constraints and optimization goals e.g. maximize performance for error constraint e.g. minimize energy for error constraint e.g. minimize error for performance constraint Genetic programming commonly used for symbolic regression Evolve tree consisting of mathematical primitives See papers for details 7

Results: Recurrence Relations Ideal goal: replace loop-carried dependencies with independent iterations Evaluated with recurrence relations 45 40 35 Parallelism limited by I/O bandwidth 30 Speedup 25 Performance increases considerably with more approximation 20 15 10 Closed-form solution provides single approximation 5 0 1 E-08 1 E-06 1 E-04 1 E-02 1 E+00 Root Mean Squared Error Logistic Map (R=2.5, N=10) Logistic Map (R=2.5, N=20) Logistic Map (R=0.9, N=10) Logistic Map (R=0.9, N=20) Consecutive Sum (1k) Consecutive Sum (10k) Cheby HP Filter Cheby LP Filter Bandpass Filter Notch Filter Single-Pole HP Filter Single-Pole LP Filter 8

Results: Synthetic Loops w/ Dependencies 90 80 70 60 Speedup 50 40 30 20 10 0 0.0000% 0.0001% 0.0010% 0.0100% 0.1000% 1.0000% 10.0000% Mean Absolute Percentage Error Generated synthetic loops Loop-carried dependencies Non-associative operations Range of tradeoffs between speedup and error 2x to 80x speedup 9

Results: FPGA Parallelism 1024 512 256 128 64 Speedup 32 16 8 4 2 1 0.5 0.25 1% 2% 4% 8% 16% 32% 64% 128% Mean Absolute Percentage Error sin sqrt ln exp tan cube_root Speedup between 1x and 10x at 5% error ln had speedup of 757x at 18% error Some errors likely prohibitive But, illustrate tradeoffs between performance and error 10

Conclusions Parallelizing sequential code a longtime compiler goal Parallelism can be significantly increased via approximation Discovering approximations is difficult manually PANDORA automatically discovers parallelizing approximations Language independent Supports different optimization goals and constraints Remaining Challenges: Symbolic regression algorithms are often limited to toy problems Infinite solution space Can t guarantee maximum error Resulting approximations are often hard to understand 11

APPENDIX 12

Approximation Examples Symbolic regression vs. neural nets

Symbolic Regression Given set of points, find mathematical equation that best fits the points Symbolic regression discovers the equation: 5.802(sin(x2)+x) + 4.12

Symbolic Regression Symbolic regression vs. linear regression

Symbolic Regression Classification: symbolic regression vs. neural nets