Computational Physics (Lecture 18)

Computational Physics Lecture 18 covers the basic structure of MPICH and its features. Understand how MPI functions are used and linked with a static library provided by the software package. Explore how P4 offers functionality and supports parallel computer systems. Discover the concept of clusters in

0 views • 38 slides

Overview of Distributed Systems: Characteristics, Classification, Computation, Communication, and Fault Models

Characterizing Distributed Systems: Multiple autonomous computers with CPUs, memory, storage, and I/O paths, interconnected geographically, shared state, global invariants. Classifying Distributed Systems: Based on synchrony, communication medium, fault models like crash and Byzantine failures. Comp

9 views • 126 slides

Enhancing Healthcare Services in Malawi through the Master Patient Index (MPI)

The Master Patient Index (MPI) plays a crucial role in Malawi's healthcare system by providing a national patient identification system to improve healthcare quality and treatment accuracy. Leveraging the MPI aims to dispense unique patient IDs, connect with existing registries, enhance data managem

4 views • 8 slides

Crash Course in Supercomputing: Understanding Parallelism and MPI Concepts

Delve into the world of supercomputing with a crash course covering parallelism, MPI, OpenMP, and hybrid programming. Learn about dividing tasks for efficient execution, exploring parallelization strategies, and the benefits of working smarter, not harder. Discover how everyday activities, such as p

0 views • 157 slides

Understanding Parallel and Distributed Computing Systems

In parallel computing, processing elements collaborate to solve problems, while distributed systems appear as a single coherent system to users, made up of independent computers. Contemporary computing systems like mobile devices, IoT devices, and high-end gaming computers incorporate parallel and d

1 view • 11 slides

Understanding Remote Method Invocation (RMI) in Distributed Systems

A distributed system involves software components on different computers communicating through message passing to achieve common goals. Organized with middleware like RMI, it allows for interactions across heterogeneous networks. RMI facilitates building distributed Java systems by enabling method i

1 view • 47 slides

Proposal for National MPI using SHDS Data in Somalia

The proposal discusses the creation of a National Multidimensional Poverty Index (MPI) for Somalia using data from the Somali Health and Demographic Survey (SHDS). The SHDS, with a sample size of 16,360 households, aims to provide insights into the health and demographic characteristics of the Somal

0 views • 26 slides

Overview of Nepal MPI 2021 and Multidimensional Poverty Peer Network Meeting

The 8th Annual High-Level Meeting of the Multidimensional Poverty Peer Network (MPPN) was hosted by the Government of Chile on 4-5 October, 2021. Dr. Ram Kumar Phuyal from the Government of Nepal National Planning Commission presented at the event. The meeting discussed poverty, its measurement tech

1 view • 20 slides

Distributed DBMS Reliability Concepts and Measures

Distributed DBMS reliability is crucial for ensuring continuous user request processing despite system failures. This chapter delves into fundamental definitions, fault classifications, and types of faults like hard and soft failures in distributed systems. Understanding reliability concepts helps i

0 views • 58 slides

Distributed Algorithms for Leader Election in Anonymous Systems

Distributed algorithms play a crucial role in leader election within anonymous systems where nodes lack unique identifiers. The content discusses the challenges and impossibility results of deterministic leader election in such systems. It explains synchronous and asynchronous distributed algorithms

2 views • 11 slides

A Handbook for Building National MPIs: Practical Guidance for Ending Poverty

This handbook provides detailed practical guidance on creating a technically rigorous permanent national Multidimensional Poverty Index (MPI). Jointly developed with UNDP, it aims to accelerate progress towards the Sustainable Development Goals by offering insights from countries' experiences in des

3 views • 18 slides

Overview of Distributed Systems, RAID, Lustre, MogileFS, and HDFS

Distributed systems encompass a range of technologies aimed at improving storage efficiency and reliability. This includes RAID (Redundant Array of Inexpensive Disks) strategies such as RAID levels, Lustre Linux Cluster for high-performance clusters, MogileFS for fast content delivery, and HDFS (Had

0 views • 23 slides

Open MPI Project: Updated Version Numbering Scheme & Release Planning

Explore the transition from an odd/even version numbering scheme to an A.B.C version triple for Open MPI project, addressing issues with feature adoption and stability. This update aims to deliver new features efficiently and maintain backward compatibility effectively.

0 views • 36 slides

Distributed Software Engineering Overview

Distributed software engineering plays a crucial role in modern enterprise computing systems where large computer-based systems are distributed over multiple computers for improved performance, fault tolerance, and scalability. This involves resource sharing, openness, concurrency, and fault toleran

0 views • 66 slides

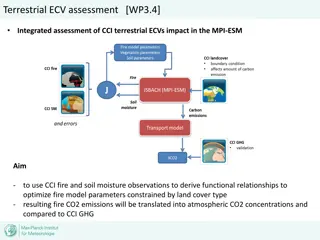

Integrated Assessment of Terrestrial ECV Impact in MPI-ESM

Utilizing CCI fire and soil moisture observations to optimize fire model parameters in MPI-ESM. The study focuses on deriving functional relationships to enhance accuracy in predicting fire CO2 emissions and their impact on atmospheric CO2 concentrations compared to CCI GHG data. JSBACH-SPITFIRE fir

0 views • 7 slides

Understanding Open MPI: A Comprehensive Overview

Open MPI is a high-performance implementation of MPI, widely used in academic, research, and industry settings. This article delves into the architecture, implementation, and usage of Open MPI, providing insights into its features, goals, and practical applications. From a high-level view to detaile

0 views • 33 slides

Introduction to Message Passing Interface (MPI) in IT Center

Message Passing Interface (MPI) is a crucial aspect of Information Technology Center training, focusing on communication and data movement among processes. This training covers MPI features, types of communication, basic MPI calls, and more. With an emphasis on MPI's role in synchronization, data mo

0 views • 29 slides

Challenges in Detecting and Characterizing Failures in Distributed Web Applications

The final examination presented by Fahad A. Arshad at Purdue University in 2014 delves into the complexities of failure characterization and error detection in distributed web applications. The presentation highlights the reasons behind failures, such as limited testing and high developer turnover r

0 views • 53 slides

Developing MPI Programs with Domain Decomposition

Domain decomposition is a parallelization method used for developing MPI programs by partitioning the domain into portions and assigning them to different processes. Three common ways of partitioning are block, cyclic, and block-cyclic, each with its own communication requirements. Considerations fo

0 views • 19 slides

Optimization Strategies for MPI-Interoperable Active Messages

The study delves into optimization strategies for MPI-interoperable active messages, focusing on data-intensive applications like graph algorithms and sequence assembly. It explores message passing models in MPI, past work on MPI-interoperable and generalized active messages, and how MPI-interoperab

0 views • 20 slides

Understanding the CAP Theorem in Distributed Systems

The CAP Theorem, as discussed by Seth Gilbert and Nancy A. Lynch, highlights the tradeoffs between Consistency, Availability, and Partition Tolerance in distributed systems. It explains how a distributed service cannot provide all three aspects simultaneously, leading to practical compromises and re

0 views • 28 slides

Understanding Distributed Hash Table (DHT) in Distributed Systems

In this lecture, Mohammad Hammoud discusses the concept of Distributed Hash Tables (DHT) in distributed systems, focusing on key aspects such as classes of naming, Chord DHT, node entities, key resolution algorithms, and the key resolution process in Chord. The session covers various components of D

0 views • 35 slides

Overview of Peer-to-Peer Systems and Distributed Hash Tables

The lecture discusses Peer-to-Peer (P2P) systems and Distributed Hash Tables, exploring their architecture, benefits, adoption in various areas, and examples such as BitTorrent. It covers the decentralized nature of P2P systems, the challenges they address, and the advantages they offer including hi

0 views • 56 slides

Communication Costs in Distributed Sparse Tensor Factorization on Multi-GPU Systems

This research paper presented an evaluation of communication costs for distributed sparse tensor factorization on multi-GPU systems. It discussed the background of tensors, tensor factorization methods like CP-ALS, and communication requirements in RefacTo. The motivation highlighted the dominance o

0 views • 34 slides

Understanding Collective Communication in MPI Distributed Systems

Explore the importance of collective routines in MPI, learn about different patterns of collective communication like Scatter, Gather, Reduce, Allreduce, and more. Discover how these communication methods facilitate efficient data exchange among processes in a distributed system.

0 views • 6 slides

Dynamic Load Balancing Library Overview

Dynamic Load Balancing Library (DLB) is a tool designed to address imbalances in computational workloads by providing fine-grain load balancing, resource management, and performance measurement modules. With an integrated yet independent structure, DLB offers APIs for user-level interactions, job sc

0 views • 27 slides

Leveraging MPI's One-Sided Communication Interface for Shared Memory Programming

This content discusses the utilization of MPI's one-sided communication interface for shared memory programming, addressing the benefits of using multi- and manycore systems, challenges in programming shared memory efficiently, the differences between MPI and OS tools, MPI-3.0 one-sided memory model

0 views • 20 slides

Distributed Computing Systems Project: Distributed Shell Implementation

Explore the concept of a Distributed Shell in the realm of distributed computing systems, where commands can be executed on remote machines with results returned to users. The project involves building a client-server setup for a Distributed Shell, incorporating functionalities like authentication,

0 views • 14 slides

Challenges and Feedback from 2009 Sonoma MPI Community Sessions

Feedback collected from major commercial MPI implementations in 2009 addressed challenges such as memory registration, inadequate support for fork(), and problematic connection setup scalability. Suggestions were made to improve APIs, enhance memory registration methods, and simplify connection man

0 views • 20 slides

Government Funding Options for Kiwifruit Growers in June 2021

Ministry for Primary Industries (MPI) provides various funding schemes and ongoing projects to support kiwifruit growers, including environmental schemes, Māori landowners' support programs, and Sustainable Food and Fibre Futures. These initiatives offer financial assistance, mediation for financia

0 views • 7 slides

Overview of Ceph Distributed File System

Ceph is a scalable, high-performance distributed file system designed for excellent performance, reliability, and scalability in very large systems. It employs innovative strategies like distributed dynamic metadata management, pseudo-random data distribution, and decoupling data and metadata tasks

0 views • 42 slides

Understanding the Multidimensional Poverty Index (MPI)

The MPI, introduced in 2010 by OPHI and UNDP, offers a comprehensive view of poverty by considering various dimensions beyond just income. Unlike traditional measures, the MPI captures deprivations in fundamental services and human functioning. It addresses the limitations of monetary poverty measur

0 views • 56 slides

Enhancing HPC Performance with Broadcom RoCE MPI Library

This project focuses on optimizing MPI communication operations using Broadcom RoCE technology for high-performance computing applications. It discusses the benefits of RoCE for HPC, the goal of highly optimized MPI for Broadcom RoCEv2, and the overview of the MVAPICH2 Project, a high-performance op

0 views • 27 slides

Distributed Transaction Management in CSCI 5533 Course

Team 5, comprising Dedeepya, Dodla, Ehtheshamuddin, and Hari Kishore, explores transaction concepts and models in distributed systems under the guidance of Dr. Andrew Yang, delving into the intricacies of distributed transaction management in CSCI 5533 Distributed Information Systems.

0 views • 56 slides

Understanding Message Passing Interface (MPI) Standardization

The Message Passing Interface (MPI) standard is a specification guiding the development and use of message passing libraries for parallel programming. It focuses on practicality, portability, efficiency, and flexibility. MPI supports distributed memory, shared memory, and hybrid architectures, offering

0 views • 29 slides

Understanding Master Patient Index (MPI) in Healthcare Systems

Explore the significance of Master Patient Index (MPI) in healthcare settings, its role in patient management, patient identification, and linking electronic health records (EHRs). Learn about the purpose, functions, and benefits of MPI in ensuring accurate patient data and seamless healthcare opera

0 views • 16 slides

Concurrency Control and Coordinator Election in Distributed Systems

This content delves into the key concepts of concurrency control and coordinator election in distributed systems. It covers classical concurrency control mechanisms like Semaphores, Mutexes, and Monitors, and explores the challenges and goals of distributed mutual exclusion. Various approaches such

0 views • 48 slides

PMI: A Scalable Process Management Interface for Extreme-Scale Systems

PMI (Process-Management Interface) is a critical component for high-performance computing, enhancing scalability and performance. It allows independent development of parallel libraries like MPI, ensuring portability across different environments. The PMI system model includes various components suc

0 views • 29 slides

Insights into Pilot National MPI for Botswana

This document outlines the structure, dimensions, and indicators of the Pilot National Multidimensional Poverty Index (MPI) for Botswana. It provides detailed criteria for measuring deprivation in areas such as education, health, social inclusion, living standards, and more. The presentation also in

0 views • 10 slides

Fast Noncontiguous GPU Data Movement in Hybrid MPI+GPU Environments

This research focuses on enabling efficient and fast noncontiguous data movement between GPUs in hybrid MPI+GPU environments. The study explores techniques such as MPI-derived data types to facilitate noncontiguous message passing and improve communication performance in GPU-accelerated systems. By

0 views • 18 slides