Understanding Parallelism in GPU Computing by Martin Kruli

This content covers the types of parallelism in GPU computing, such as task parallelism and data parallelism, discusses problems that are unsuitable for GPUs, and presents solutions such as iterative kernel execution and mapping irregular structures to regular grids. The article also touc

1 view • 39 slides

Understanding Memory Allocation in Operating Systems

Memory allocation in operating systems involves fair distribution of physical memory among running processes. The memory management subsystem ensures each process gets its fair share. Shared virtual memory and the efficient use of resources like dynamic libraries contribute to better memory utilizat

1 view • 233 slides

Understanding Memory Organization in Computers

The memory unit is crucial in any digital computer for storing programs and data. It comprises main memory, auxiliary memory, and cache memory, each serving different roles in data storage and retrieval. Main memory directly communicates with the CPU, while cache memory enhances processing speed by

1 view • 37 slides

Understanding Memory Organization in Computers

Delve into the intricate world of memory organization within computer systems, exploring the vital role of memory units, cache memory, main memory, auxiliary memory, and the memory hierarchy. Learn about the different types of memory, such as sequential access memory and random access memory, and ho

0 views • 45 slides

Understanding AARCH64 Linux Kernel Memory Management

Explore the confidential and proprietary details of AARCH64 Linux kernel memory mapping, virtual memory layout, variable configurations, DDR memory layout, and memory allocation techniques. Get insights into the allocation of physically contiguous memory using the Contiguous Memory Allocator (CMA) integ

0 views • 18 slides

Understanding Cache and Virtual Memory in Computer Systems

A computer's memory system is crucial for ensuring fast and uninterrupted access to data by the processor. This system comprises internal processor memories, primary memory, and secondary memory such as hard drives. The utilization of cache memory helps bridge the speed gap between the CPU and main

2 views • 47 slides

Dynamic Memory Allocation in Computer Systems: An Overview

Dynamic memory allocation in computer systems involves the acquisition of virtual memory at runtime for data structures whose size is only known at runtime. This process is managed by dynamic memory allocators, such as malloc, to handle memory invisible to user code, application kernels, and virtual

0 views • 70 slides

Understanding Coordination and Parallelism in Sentence Structure

This informative content delves into the concepts of coordination and parallelism in sentence structure, highlighting coordinating conjunctions, different types of conjunctions, examples of parallel structure, and the importance of maintaining parallelism in lists, series, comparisons, and contrasti

0 views • 52 slides

Exploring Parallel Computing: Concepts and Applications

Dive into the world of parallel computing with an engaging analogy of picking apples, relating different types of parallelism. Learn about task and data decomposition, software models, hardware architectures, and challenges in utilizing parallelism. Discover the potential of completing multiple part

0 views • 27 slides

Understanding Garbage Collection in Java Programming

Garbage collection in Java automates the process of managing memory allocation and deallocation, ensuring efficient memory usage and preventing memory leaks and out-of-memory errors. By automatically identifying and removing unused objects from the heap memory, the garbage collector frees up memory

14 views • 22 slides

Understanding Memory Management in Operating Systems

Dive into the world of memory management in operating systems, covering topics such as virtual memory, page replacement algorithms, memory allocation, and more. Explore concepts like memory partitions, fixed partitions, memory allocation mechanisms, base and limit registers, and the trade-offs betwe

1 view • 110 slides

Understanding Shared Memory Architectures and Cache Coherence

Shared memory architectures involve multiple CPUs accessing a common memory, leading to challenges like the cache coherence problem. This article delves into different types of shared memory architectures, such as UMA and NUMA, and explores the cache coherence issue and protocols. It also highlights

2 views • 27 slides

Understanding Memory Management and Swapping Techniques

Memory management involves techniques like swapping, memory allocation changes, memory compaction, and memory management with bitmaps. Swapping refers to bringing each process into memory entirely, running it for a while, then putting it back on the disk. Memory allocation can change as processes en

0 views • 17 slides

Enhancing Memory and Concentration Techniques for Academic Success

Explore the fascinating world of memory and concentration through various techniques and processes highlighted in the provided images. Discover how sensory memory, short-term memory, and long-term memory function, along with tips on improving concentration, learning strategies, and the interplay bet

1 view • 34 slides

Understanding Memory Encoding and Retention Processes

Memory is the persistence of learning over time, involving encoding, storage, and retrieval of information. Measures of memory retention include recall, recognition, and relearning. Ebbinghaus' retention curve illustrates the relationship between practice and relearning. Psychologists use memory mod

0 views • 22 slides

Teaching Parallelism in Python-Based CS1 at Small Institution

Explore challenges, technical and non-technical materials, and coverage of CS2013 in teaching parallelism in a Python-based CS1 course at a small institution. Overcome student inexperience with a mix of technical and non-technical content, including coding the multiprocessing module in Python and an

0 views • 7 slides

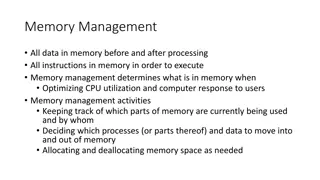

Understanding Memory Management in Computer Systems

Memory management in computer systems involves optimizing CPU utilization, managing data in memory before and after processing, allocating memory space efficiently, and keeping track of memory usage. It determines what is in memory, moves data in and out as needed, and involves caching at various le

1 view • 21 slides

Dynamic Memory Management Overview

Understanding dynamic memory management is crucial in programming to efficiently allocate and deallocate memory during runtime. The memory is divided into the stack and the heap, each serving specific purposes in storing local and dynamic data. Dynamic memory allocators organize the heap for efficie

0 views • 31 slides

Understanding Memory Management in C Programming

The discussion covers various aspects of memory management in C programming, including common memory problems and examples. It delves into memory regions, stack and heap management, and static data. The examples illustrate concepts like static storage, heap allocation, and common pitfalls to avoid.

0 views • 24 slides

Introduction to CSE 332: Data Structures and Parallelism with Richard Anderson

Welcome to CSE 332: Data Structures and Parallelism with Richard Anderson! This course covers fundamental data structures, algorithms, efficiency analysis, and when to use them. Topics include queues, dictionaries, graphs, sorting, parallelism, concurrency, and NP-Completeness. The outline includes

0 views • 29 slides

Understanding Your Memory System: A Guide to Enhancing Recall

Learn about the three components of the memory system - sensory memory, short-term memory, and long-term memory. Discover why we forget and how to improve memory retention through techniques like positive attitude, focus, mnemonic devices, and more. Enhance your memory skills to boost learning effic

0 views • 8 slides

Exploring Hardware SIMD Parallelism Abstraction

Understanding the inherent parallelism in applications can lead to high performance with less effort, but the alignment with how Linux and C++ compilers discover parallelism is crucial. The shift towards making parallel computing more mainstream highlights the importance of SIMD operations and oppor

0 views • 50 slides

Understanding Parallelism in Computer Systems

This content delves into various aspects of parallelism in computer systems, covering topics such as synchronization, deadlock, concurrency vs. parallelism, CPU evolution implications, types of parallelism, Amdahl's Law, and limits of parallelism. It explores the motivations behind parallelism, diff

0 views • 48 slides

Understanding Memory Basics in Digital Systems

Dive into the world of digital memory systems with a focus on Random Access Memory (RAM), memory capacities, SI prefixes, logical models of memory, and example memory symbols. Learn about word sizes, addresses, data transfer, and capacity calculations to gain a comprehensive understanding of memory

1 view • 12 slides

Understanding Different Types of Memory Technologies in Computer Systems

Explore the realm of memory technologies with an overview of ROM, RAM, non-volatile memories, and programmable memory options. Delve into the intricacies of read-only memory, volatile vs. non-volatile memory, and the various types of memory dimensions. Gain insights into the workings of ROM, includi

0 views • 45 slides

Understanding Shared Memory, Distributed Memory, and Hybrid Distributed-Shared Memory

Shared memory systems allow multiple processors to access the same memory resources, with changes made by one processor visible to all others. This concept is categorized into Uniform Memory Access (UMA) and Non-Uniform Memory Access (NUMA) architectures. UMA provides equal access times to memory, w

0 views • 22 slides

Understanding User-Level Memory and Virtual Memory Organization in Operating Systems

Explore the concepts of user-level memory management, virtual memory areas, process address spaces, and /proc/pid/maps in the context of operating systems. Learn about memory organization, address translation, segmentation faults, and the structure of memory areas within processes.

0 views • 24 slides

Understanding Virtual Memory Concepts and Benefits

Virtual memory, as presented by instructor Shmuel Wimer, separates logical memory from physical memory, enabling efficient use of memory resources. With virtual memory, programs can run while only partially loaded in memory, easing the constraints imposed by physical memory limits. This also enhances CPU utilizatio

0 views • 41 slides

Understanding Parallel Software in Advanced Computer Architecture II

Exploring the challenges of parallel software, the lecture delves into identifying and expressing parallelism, utilizing parallel hardware effectively, and debugging parallel algorithms. It discusses functional parallelism, automatic extraction of parallelism, and finding parallelism in various appl

0 views • 86 slides

Understanding Virtual Memory and its Implementation

Virtual memory allows for the separation of user logical memory from physical memory, enabling efficient process creation and effective memory management. It helps overcome memory shortage issues by utilizing demand paging and segmentation techniques. Virtual memory mapping ensures only required par

0 views • 20 slides

Understanding Memory: Challenges and Improvement

Delve into the intricacies of memory with discussions on earliest and favorite memories, a memory challenge, how memory works, stages of memory, and tips to enhance memory recall. Explore the significance of memory and practical exercises for memory improvement.

0 views • 16 slides

Trends in Implicit Parallelism and Microprocessor Architectures

Explore the implications of implicit parallelism in microprocessor architectures, addressing performance bottlenecks in processor, memory system, and datapath components. Prof. Vijay More delves into optimizing resource utilization, diverse architectural executions, and the impact on current compute

0 views • 47 slides

Simplifying Parallelism with Transactional Memory

Concurrency is advancing rapidly, making parallel programming challenging with synchronization complexities. Transactional memory offers a solution by replacing locking with memory transactions, optimizing execution, and simplifying code for enhanced performance. Despite the challenges, transactiona

0 views • 64 slides

User-Level Management of Parallelism: Scheduler Activations

This content delves into the comparison between kernel-level threads and user-level threads in managing parallelism. It discusses the challenges and benefits associated with each threading model, highlighting the trade-offs between system overhead, flexibility, and resource utilization. The concept

0 views • 39 slides

Memory Management Principles in Operating Systems

Memory management in operating systems involves the allocation of memory resources among competing processes to optimize performance with minimal overhead. Techniques such as partitioning, paging, and segmentation are utilized, along with page table management and virtual memory tricks. The concept

0 views • 29 slides

Overview of Nested Data Parallelism in Haskell

The paper by Simon Peyton Jones, Manuel Chakravarty, Gabriele Keller, and Roman Leshchinskiy explores nested data parallelism in Haskell, focusing on harnessing multicore processors. It discusses the challenges of parallel programming, comparing sequential and parallel computational fabrics. The evo

0 views • 55 slides

Understanding Atomics and Parallelism in Programming

Explore the world of atomics, parallelism, memory access optimizations, and sequential consistency in programming. Dive into concepts such as races in multithreading, cache optimizations, and the importance of memory access order before and after compiler optimizations. Witness live demos showcasing

0 views • 46 slides

Enhancing Memory Bandwidth with Transparent Memory Compression

This research focuses on enabling transparent memory compression for commodity memory systems to address the growing demand for memory bandwidth. By implementing hardware compression without relying on operating system support, the goal is to optimize memory capacity and bandwidth efficiently. The a

0 views • 34 slides

Locality-Aware Caching Policies for Hybrid Memories

Different memory technologies present unique strengths, and a hybrid memory system combining DRAM and PCM aims to leverage the best of both worlds. This research explores the challenge of data placement between these diverse memory devices, highlighting the use of row buffer locality as a key criter

0 views • 34 slides

Parallelism and Synchronization in CUDA Programming

This CS 179 lecture focuses on parallelism, synchronization, matrix transpose, profiling, and the use of AWS clusters in CUDA programming. The content delves into ideal cases for parallelism, synchronization examples, atomic instructions, and warp-synchronous programming in GPU computing. It

0 views • 29 slides