Enhancing Data Reception Performance with GPU Acceleration in CCSDS 131.2-B Protocol

Explore the utilization of Graphics Processing Unit (GPU) accelerators for high-performance data reception in a Software Defined Radio (SDR) system following the CCSDS 131.2-B protocol. The research, presented at the EDHPC 2023 Conference, focuses on implementing a state-of-the-art GP-GPU receiver t

0 views • 33 slides

Overview of GPU Architecture and Memory Systems in NVIDIA Tegra X1

Dive into the intricacies of GPU architecture and memory systems with a detailed exploration of the NVIDIA Tegra X1 die photo, instruction fetching mechanisms, SIMT core organization, cache lockup problems, and efficient memory management techniques highlighted in the provided educational materials.

7 views • 62 slides

Understanding Memory Allocation in Operating Systems

Memory allocation in operating systems involves fair distribution of physical memory among running processes. The memory management subsystem ensures each process gets its fair share. Shared virtual memory and the efficient use of resources like dynamic libraries contribute to better memory utilizat

1 view • 233 slides

Understanding Memory Organization in Computers

The memory unit is crucial in any digital computer for storing programs and data. It comprises main memory, auxiliary memory, and cache memory, each serving different roles in data storage and retrieval. Main memory directly communicates with the CPU, while cache memory enhances processing speed by

1 view • 37 slides

Understanding Memory Organization in Computers

Delve into the intricate world of memory organization within computer systems, exploring the vital role of memory units, cache memory, main memory, auxiliary memory, and the memory hierarchy. Learn about the different types of memory, such as sequential access memory and random access memory, and ho

0 views • 45 slides

Understanding Cache and Virtual Memory in Computer Systems

A computer's memory system is crucial for ensuring fast and uninterrupted access to data by the processor. This system comprises internal processor memories, primary memory, and secondary memory such as hard drives. The utilization of cache memory helps bridge the speed gap between the CPU and main

1 view • 47 slides

Dynamic Memory Allocation in Computer Systems: An Overview

Dynamic memory allocation in computer systems involves the acquisition of virtual memory at runtime for data structures whose size is only known at runtime. This process is managed by dynamic memory allocators, such as malloc, to handle memory invisible to user code, application kernels, and virtual

0 views • 70 slides

Parallel Implementation of Multivariate Empirical Mode Decomposition on GPU

Empirical Mode Decomposition (EMD) is a signal processing technique used for separating different oscillation modes in a time series signal. This paper explores the parallel implementation of Multivariate Empirical Mode Decomposition (MEMD) on GPU, discussing numerical steps, implementation details,

1 view • 15 slides

Understanding Memory Management in Operating Systems

Dive into the world of memory management in operating systems, covering topics such as virtual memory, page replacement algorithms, memory allocation, and more. Explore concepts like memory partitions, fixed partitions, memory allocation mechanisms, base and limit registers, and the trade-offs betwe

1 view • 110 slides

GPU Scheduling Strategies: Maximizing Performance with Cache-Conscious Wavefront Scheduling

Explore GPU scheduling strategies including Loose Round Robin (LRR) for maximizing performance by efficiently managing warps, Cache-Conscious Wavefront Scheduling for improved cache utilization, and Greedy-then-oldest (GTO) scheduling to enhance cache locality. Learn how these techniques optimize GP

0 views • 21 slides

Understanding Shared Memory Architectures and Cache Coherence

Shared memory architectures involve multiple CPUs accessing a common memory, leading to challenges like the cache coherence problem. This article delves into different types of shared memory architectures, such as UMA and NUMA, and explores the cache coherence issue and protocols. It also highlights

2 views • 27 slides

Redesigning the GPU Memory Hierarchy for Multi-Application Concurrency

This presentation delves into the innovative reimagining of GPU memory hierarchy to accommodate multiple applications concurrently. It explores the challenges of GPU sharing with address translation, high-latency page walks, and inefficient caching, offering insights into a translation-aware memory

1 view • 15 slides

Understanding Memory Management and Swapping Techniques

Memory management involves techniques like swapping, memory allocation changes, memory compaction, and memory management with bitmaps. Swapping refers to bringing each process into memory entirely, running it for a while, then putting it back on the disk. Memory allocation can change as processes en

0 views • 17 slides

Understanding Memory Encoding and Retention Processes

Memory is the persistence of learning over time, involving encoding, storage, and retrieval of information. Measures of memory retention include recall, recognition, and relearning. Ebbinghaus' retention curve illustrates the relationship between practice and relearning. Psychologists use memory mod

0 views • 22 slides

GPU-Accelerated Delaunay Refinement: Efficient Triangulation Algorithm

This study presents a novel approach for computing Delaunay refinement using GPU acceleration. The algorithm aims to generate a constrained Delaunay triangulation from a planar straight line graph efficiently, with improvements in termination handling and Steiner point management. By leveraging GPU

0 views • 23 slides

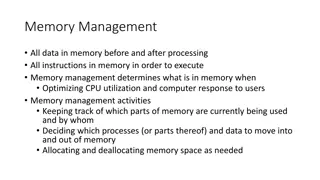

Understanding Memory Management in Computer Systems

Memory management in computer systems involves optimizing CPU utilization, managing data in memory before and after processing, allocating memory space efficiently, and keeping track of memory usage. It determines what is in memory, moves data in and out as needed, and involves caching at various le

1 view • 21 slides

Dynamic Memory Management Overview

Understanding dynamic memory management is crucial in programming to efficiently allocate and deallocate memory during runtime. The memory is divided into the stack and the heap, each serving specific purposes in storing local and dynamic data. Dynamic memory allocators organize the heap for efficie

0 views • 31 slides

Microarchitectural Performance Characterization of Irregular GPU Kernels

GPUs are widely used for high-performance computing, but irregular algorithms pose challenges for parallelization. This study delves into the microarchitectural aspects affecting GPU performance, emphasizing best practices to optimize irregular GPU kernels. The impact of branch divergence, memory co

0 views • 26 slides

Managing DRAM Latency Divergence in Irregular GPGPU Applications

Addressing memory latency challenges in irregular GPGPU applications, this study explores techniques like warp-aware memory scheduling and GPU memory controller optimization to reduce DRAM latency divergence. The research delves into the impact of SIMD lanes, coalescers, and warp-aware scheduling on

0 views • 33 slides

Advanced GPU Performance Modeling Techniques

Explore cutting-edge techniques in GPU performance modeling, including interval analysis, resource contention identification, detailed timing simulation, and balancing accuracy with efficiency. Learn how to leverage both functional simulation and analytical modeling to pinpoint performance bottlenec

0 views • 32 slides

Understanding Parallel Programming and Memory Hierarchy in Triton

Explore the concepts of parallel programming, distributed memory, and memory hierarchy in the context of Triton, focusing on technology trends, processor clock speeds, machine architecture, and memory organization at different levels (chip, node, system).

0 views • 23 slides

Understanding Caches and the Memory Hierarchy in Computer Systems

Delve into the intricacies of memory hierarchy and caches in computer systems, exploring concepts like cache organization, implementation choices, hardware optimizations, and software-managed caches. Discover the significance of memory distance from the CPU, the impact on hardware/software interface

0 views • 84 slides

Understanding Memory Basics in Digital Systems

Dive into the world of digital memory systems with a focus on Random Access Memory (RAM), memory capacities, SI prefixes, logical models of memory, and example memory symbols. Learn about word sizes, addresses, data transfer, and capacity calculations to gain a comprehensive understanding of memory

1 view • 12 slides

Communication Costs in Distributed Sparse Tensor Factorization on Multi-GPU Systems

This research paper presented an evaluation of communication costs for distributed sparse tensor factorization on multi-GPU systems. It discussed the background of tensors, tensor factorization methods like CP-ALS, and communication requirements in RefacTo. The motivation highlighted the dominance o

0 views • 34 slides

GPU Acceleration in ITK v4 Overview

This presentation by Won-Ki Jeong from Harvard University at the ITK v4 winter meeting in 2011 discusses the implementation and advantages of GPU acceleration in ITK v4. Topics covered include the use of GPUs as co-processors for massively parallel processing, memory and process management, new GPU

0 views • 33 slides

Understanding Different Types of Memory Technologies in Computer Systems

Explore the realm of memory technologies with an overview of ROM, RAM, non-volatile memories, and programmable memory options. Delve into the intricacies of read-only memory, volatile vs. non-volatile memory, and the various types of memory dimensions. Gain insights into the workings of ROM, includi

0 views • 45 slides

Understanding Shared Memory, Distributed Memory, and Hybrid Distributed-Shared Memory

Shared memory systems allow multiple processors to access the same memory resources, with changes made by one processor visible to all others. This concept is categorized into Uniform Memory Access (UMA) and Non-Uniform Memory Access (NUMA) architectures. UMA provides equal access times to memory, w

0 views • 22 slides

Understanding Virtual Memory Concepts and Benefits

Virtual memory, as presented by Shmuel Wimer, separates logical memory from physical memory, enabling efficient use of memory resources. With virtual memory, programs can run while only partially loaded in memory, easing the constraints imposed by physical memory limits. This also enhances CPU utilizatio

0 views • 41 slides

GPU Computing and Synchronization Techniques

Synchronization in GPU computing is crucial for managing shared resources and coordinating parallel tasks efficiently. Techniques such as __syncthreads() and atomic instructions help ensure data integrity and avoid race conditions in parallel algorithms. Examples requiring synchronization include Pa

0 views • 22 slides

Understanding Virtual Memory and its Implementation

Virtual memory allows for the separation of user logical memory from physical memory, enabling efficient process creation and effective memory management. It helps overcome memory shortage issues by utilizing demand paging and segmentation techniques. Virtual memory mapping ensures only required par

0 views • 20 slides

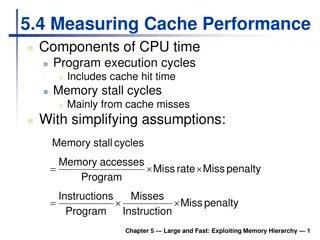

Understanding Cache Performance Components and Memory Hierarchy

Exploring cache performance components, such as hit time and memory stall cycles, is crucial for evaluating system performance. By analyzing factors like miss rates and penalties, one can optimize CPU efficiency and reduce memory stalls. Associative caches offer flexible options for organizing data

0 views • 22 slides

Understanding Memory: Challenges and Improvement

Delve into the intricacies of memory with discussions on earliest and favorite memories, a memory challenge, how memory works, stages of memory, and tips to enhance memory recall. Explore the significance of memory and practical exercises for memory improvement.

0 views • 16 slides

GPU Acceleration in ITK v4: Overview and Implementation

This presentation discusses the implementation of GPU acceleration in ITK v4, focusing on providing a high-level GPU abstraction, transparent resource management, code development status, and GPU core classes. Goals include speeding up certain types of problems and managing memory effectively.

0 views • 32 slides

Understanding Memory Hierarchy and Different Computer Architecture Styles

Delve into the concepts of memory hierarchy, cache optimizations, RISC architecture, and other architecture styles in embedded computer architecture. Learn about Accumulator and Stack architectures, their characteristics, advantages, and example code implementations. Explore the differences between

0 views • 52 slides

Memory Management Principles in Operating Systems

Memory management in operating systems involves the allocation of memory resources among competing processes to optimize performance with minimal overhead. Techniques such as partitioning, paging, and segmentation are utilized, along with page table management and virtual memory tricks. The concept

0 views • 29 slides

Understanding Memory Hierarchy in Parallel Computer Architecture

This content delves into the intricacies of memory hierarchy, caches, and the management of virtual versus physical memory in parallel computer architecture. It discusses topics such as cache compression, the programmer's view of memory, virtual versus physical memory, and the ideal pipeline for ins

0 views • 86 slides

Synchronization and Shared Memory in GPU Computing

Synchronization and shared memory play vital roles in optimizing parallelism in GPU computing. __syncthreads() enables thread synchronization within blocks, while atomic instructions ensure serialized access to shared resources. Examples like Parallel BFS and summing numbers highlight the need for s

0 views • 21 slides

Enhancing Memory Bandwidth with Transparent Memory Compression

This research focuses on enabling transparent memory compression for commodity memory systems to address the growing demand for memory bandwidth. By implementing hardware compression without relying on operating system support, the goal is to optimize memory capacity and bandwidth efficiently. The a

0 views • 34 slides

Locality-Aware Caching Policies for Hybrid Memories

Different memory technologies present unique strengths, and a hybrid memory system combining DRAM and PCM aims to leverage the best of both worlds. This research explores the challenge of data placement between these diverse memory devices, highlighting the use of row buffer locality as a key criter

0 views • 34 slides

Fast Noncontiguous GPU Data Movement in Hybrid MPI+GPU Environments

This research focuses on enabling efficient and fast noncontiguous data movement between GPUs in hybrid MPI+GPU environments. The study explores techniques such as MPI-derived data types to facilitate noncontiguous message passing and improve communication performance in GPU-accelerated systems. By

0 views • 18 slides