Computational Physics (Lecture 18)

Neural networks explained through the contrast between feedforward and recurrent networks. Feedforward networks propagate data in one direction, from input to output, while recurrent models allow loops for cascade effects. Recurrent networks have been less influential than feedforward networks but are closer to how the brain works. Introduction to handwritten digit classification

0 views • 55 slides

Introduction to Deep Learning: Neural Networks and Multilayer Perceptrons

Explore the fundamentals of neural networks, including artificial neurons and activation functions, in the context of deep learning. Learn about multilayer perceptrons and their role in forming decision regions for classification tasks. Understand forward propagation and backpropagation as essential

3 views • 74 slides

Understanding Recurrent Neural Networks (RNN) and Long Short-Term Memory (LSTM)

Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) are powerful tools for sequential data learning, mimicking the persistent nature of human thoughts. These neural networks can be applied to various real-life applications such as time-series data prediction, text sequence processing,

15 views • 34 slides

Understanding Mechanistic Interpretability in Neural Networks

Delve into the realm of mechanistic interpretability in neural networks, exploring how models can learn human-comprehensible algorithms and the importance of deciphering internal features and circuits to predict and align model behavior. Discover the goal of reverse-engineering neural networks akin

6 views • 31 slides

Localised Adaptive Spatial-Temporal Graph Neural Network

This paper introduces the Localised Adaptive Spatial-Temporal Graph Neural Network model, focusing on the importance of spatial-temporal data modeling in graph structures. The challenges of balancing spatial and temporal dependencies for accurate inference are addressed, along with the use of distri

3 views • 19 slides

Graph Neural Networks

Graph Neural Networks (GNNs) are a versatile form of neural networks that encompass various network architectures like NNs, CNNs, and RNNs, as well as unsupervised learning models such as RBMs and DBNs. They find applications in diverse fields such as object detection, machine translation, and drug d

2 views • 48 slides

Recent Advances in RNN and CNN Models: CS886 Lecture Highlights

Explore the fundamentals of recurrent neural networks (RNNs) and convolutional neural networks (CNNs) in the context of downstream applications. Delve into LSTM, GRU, and RNN variants, alongside CNN architectures like ConvNext, ResNet, and more. Understand the mathematical formulations of RNNs and c

1 view • 76 slides

Exploring a Cutting-Edge Convolutional Neural Network for Speech Emotion Recognition

Human speech is a rich source of emotional indicators, making Speech Emotion Recognition (SER) vital for intelligent systems to understand emotions. SER involves extracting emotional states from speech and categorizing them. This process includes feature extraction and classification, utilizing tech

1 view • 15 slides

Understanding Artificial Neural Networks From Scratch

Learn how to build artificial neural networks from scratch, focusing on multilayer feedforward networks such as multilayer perceptrons. Discover how neural networks function, including training large networks in parallel and distributed systems, and grasp concepts such as learning non-linear function

1 view • 33 slides

Understanding Back-Propagation Algorithm in Neural Networks

Artificial Neural Networks aim to mimic brain processing. Back-propagation is a key method to train these networks, optimizing weights to minimize loss. Multi-layer networks enable learning complex patterns by creating internal representations. Historical background traces the development from early

1 view • 24 slides

A Deep Dive into Neural Network Units and Language Models

Explore the fundamentals of neural network units in language models, discussing computation, weights, biases, and activations. Understand the essence of weighted sums in neural networks and the application of non-linear activation functions like sigmoid, tanh, and ReLU. Dive into the heart of neural

0 views • 81 slides

Assistive Speech System for Individuals with Speech Impediments Using Neural Networks

Individuals with speech impediments face challenges with speech-to-text software, and this paper introduces a system leveraging Artificial Neural Networks to assist. The technology showcases state-of-the-art performance in various applications, including speech recognition. The system utilizes featu

1 view • 19 slides

Advancing Physics-Informed Machine Learning for PDE Solving

Explore the need for numerical methods in solving partial differential equations (PDEs), traditional techniques, how neural networks function, and the comparison between standard neural networks and physics-informed neural networks (PINNs). Learn about the advantages and disadvantages of PINNs, and ongoi

0 views • 14 slides

Understanding Convolutional Codes in Digital Communication

Convolutional codes provide an efficient alternative to linear block coding by grouping data into smaller blocks and encoding them into output bits. These codes are defined by parameters (n, k, L) and realized using a convolutional structure. Generators play a key role in determining the connections

0 views • 19 slides

Exploring Neural Quantum States and Symmetries in Quantum Mechanics

This article delves into the intricacies of anti-symmetrized neural quantum states and the application of neural networks in solving for the ground-state wave function of atomic nuclei. It discusses the setup using the Rayleigh-Ritz variational principle, neural quantum states (NQSs), variational pa

0 views • 15 slides

Automated Melanoma Detection Using Convolutional Neural Network

Melanoma, a type of skin cancer, can be life-threatening if not diagnosed early. This study presented at the IEEE EMBC conference focuses on using a convolutional neural network for automated detection of melanoma lesions in clinical images. The importance of early detection is highlighted, as exper

0 views • 34 slides

Learning a Joint Model of Images and Captions with Neural Networks

Modeling the joint density of images and captions using neural networks involves training separate models for images and word-count vectors, then connecting them with a top layer for joint training. Deep Boltzmann Machines are utilized for further joint training to enhance each modality's layers. Th

4 views • 19 slides

Understanding Spiking Neurons and Spiking Neural Networks

Spiking neural networks (SNNs) are a new approach modeled after the brain's operations, aiming for low-power neurons, billions of connections, and high-accuracy training algorithms. Spiking neurons have unique features and are more energy-efficient than traditional artificial neural networks. Explor

5 views • 23 slides

Introduction to Neural Networks in IBM SPSS Modeler 14.2

This presentation provides an introduction to neural networks in IBM SPSS Modeler 14.2. It covers the concepts of directed data mining using neural networks, the structure of neural networks, terms associated with neural networks, and the process of inputs and outputs in neural network models. The d

0 views • 18 slides

Overview of Neural Network Architectures for Machine Learning

This content provides an overview of feed-forward neural networks and recurrent networks, including their structures, functions, and applications in machine learning. It discusses the differences between the two architectures and their practical implications. Additionally, it highlights the challeng

0 views • 32 slides

Understanding U-Net: A Convolutional Network for Image Segmentation

U-Net is a convolutional neural network designed for image segmentation. It consists of a contracting path to capture context and an expanding path for precise localization. By concatenating high-resolution feature maps, U-Net efficiently handles information loss and maintains spatial details. The a

0 views • 8 slides

EEG Conformer: Convolutional Transformer for EEG Decoding and Visualization

This study introduces the EEG Conformer, a Convolutional Transformer model designed for EEG decoding and visualization. The research presents a cutting-edge approach in neural systems and rehabilitation engineering, offering advancements in EEG analysis techniques. By combining convolutional neural

1 view • 6 slides

Detecting Image Steganography Using Neural Networks

This project focuses on utilizing neural networks to detect image steganography, specifically targeting the F5 algorithm. The team aims to develop a model that is capable of detecting and cleaning hidden messages in images without relying on hand-extracted features. They use a dataset from Kaggle co

0 views • 23 slides

Understanding Convolutional Neural Networks: Architectural Characterizations for Accuracy Inference

This presentation by Duc Hoang from Rhodes College explores inferring the accuracy of Convolutional Neural Networks (CNNs) based on their architectural characterizations. The talk covers the MINERvA experiment, deep learning concepts including CNNs, and the significance of predicting CNN accuracy be

0 views • 21 slides

Convolutional Neural Networks for Sentence Classification: A Deep Learning Approach

Deep learning models, originally designed for computer vision, have shown remarkable success in various Natural Language Processing (NLP) tasks. This paper presents a simple Convolutional Neural Network (CNN) architecture for sentence classification, utilizing word vectors from an unsupervised neura

0 views • 15 slides

Understanding Advanced Classifiers and Neural Networks

This content explores the concept of advanced classifiers like Neural Networks which compose complex relationships through combining perceptrons. It delves into the workings of the classic perceptron and how modern neural networks use more complex decision functions. The visuals provided offer a cle

0 views • 26 slides

Neural Networks for Learning Relational Information

Explore how neural networks can be used to learn relational information, such as family trees and connections, through examples and tasks presented by Geoffrey Hinton and the team. The content delves into predicting relationships, capturing knowledge, and representing features within neural networks

0 views • 34 slides

Machine Learning and Artificial Neural Networks for Face Verification: Overview and Applications

In the realm of computer vision, the integration of machine learning and artificial neural networks has enabled significant advancements in face verification tasks. Leveraging the brain's inherent pattern recognition capabilities, AI systems can analyze vast amounts of data to enhance face detection

0 views • 13 slides

Understanding Network Analysis: Whole Networks vs. Ego Networks

Explore the differences between Whole Networks and Ego Networks in social network analysis. Whole Networks provide comprehensive information about all nodes and links, enabling the computation of network-level statistics. On the other hand, Ego Networks focus on a sample of nodes, limiting the abili

0 views • 31 slides

Exploring Efficient Hardware Architectures for Deep Neural Network Processing

Discover new hardware architectures designed for efficient deep neural network processing, including SCNN accelerators for compressed-sparse Convolutional Neural Networks. Learn about convolution operations, memory size versus access energy, dataflow decisions for reuse, and Planar Tiled-Input Stati

0 views • 23 slides

Evolution of Neural Networks through Neuroevolution by Ken Stanley

Ken Stanley, a prominent figure in neuroevolution, has made significant contributions to the field, such as co-inventing NEAT and HyperNEAT. Through neuroevolution, complex artifacts like neural networks evolve, with the most complex known to have 100 trillion connections. The combination of evoluti

0 views • 47 slides

Introduction to Neural Networks in Machine Learning

Explore the fundamentals of neural networks in machine learning, covering topics such as activation functions, architecture, training techniques, and practical applications. Discover how neural networks can approximate continuous functions with hidden units and understand the biological inspiration

0 views • 45 slides

Exploring Variability and Noise in Neural Networks

Understand the variability of spike trains and its sources in neural networks, and examine whether variability is equivalent to noise. Covers the Poisson model, stochastic spike arrival and firing, and biological modeling of neural networks. Examining variability in different brain re

0 views • 71 slides

Exploring Google's Tensor Processing Unit (TPU) and Deep Neural Networks in Parallel Computing

Delve into the world of Google's TPU and deep neural networks as key solutions for speech recognition, search ranking, and more. Learn about domain-specific architectures, the structure of neural networks, and the essence of matrix multiplication in parallel computing.

0 views • 17 slides

Unveiling Convolutional Neural Network Architectures

Delve into the evolution of Convolutional Neural Network (ConvNet) architectures, exploring the concept of "Deeper is better" through challenges, winner accuracies, and the progression from simpler to more complex designs like VGG patterns and residual connections. Discover the significance of layer

0 views • 22 slides

Understanding Neural Network Watermarking Technologies

Neural networks are being deployed in various domains like autonomous systems, but protecting their integrity is crucial due to the costly nature of machine learning. Watermarking provides a solution to ensure traceability, integrity, and functionality of neural networks by allowing imperceptible da

0 views • 15 slides

Understanding Convolutional Neural Networks (CNN) in Depth

A CNN, a type of neural network, comprises convolutional, subsampling, and fully connected layers, achieving state-of-the-art results in tasks like handwritten digit recognition. CNNs are specialized for image input data but can be tricky to train with large-scale datasets due to the complexity of replic

0 views • 22 slides

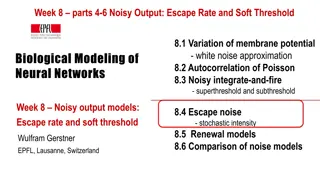

Exploring Noisy Output in Neural Networks: From Escape Rate to Soft Threshold

Delve into the intricacies of noisy output in neural networks through topics such as the variation of membrane potential with white noise approximation, autocorrelation of Poisson processes, and the effects of noise on integrate-and-fire systems, both superthreshold and subthreshold. This exploratio

0 views • 34 slides

New Approaches in Learning Complex-Valued Neural Networks

This study explores innovative methods in training complex-valued neural networks, including a model of complex-valued neurons, network architecture, error analysis, Adam optimizer, gradient calculation, and activation function selection. Simulation results compare real-valued and complex-valued net

0 views • 12 slides

Understanding Deep Generative Bayesian Networks in Machine Learning

Exploring the differences between Neural Networks and Bayesian Neural Networks, the advantages of the latter including robustness and adaptation capabilities, the Bayesian theory behind these networks, and insights into the comparison with regular neural network theory. Dive into the complexities, u

0 views • 22 slides