Overview of Grammar Types and Chomsky Hierarchy

The four types of grammars are General, Context-Sensitive, Context-Free, and Linear grammars, each recognizing a specific set of languages. Chomsky Hierarchy categorizes these grammars into four levels, indicating subsets of languages they can recognize. Context-free grammars have specific production rules, while Linear grammars can be left or right linear. Examples illustrate the structures and languages produced by these grammars, highlighting their relationships to Regular Languages and Context-Free Languages.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. Download presentation by click this link. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

E N D

Presentation Transcript

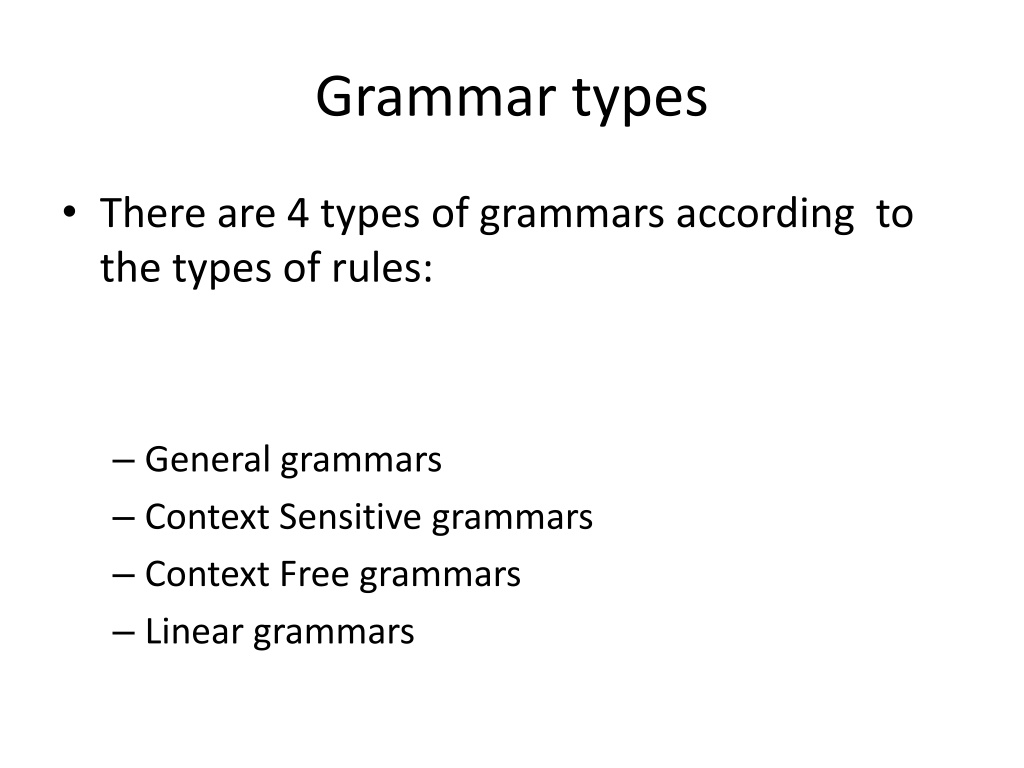

Grammar types There are 4 types of grammars according to the types of rules: General grammars Context Sensitive grammars Context Free grammars Linear grammars

Grammar types There are 4 types of grammars according to the types of rules: Each type recognizes a set of languages. General grammars RE languages Context Sensitive grammars CS languages Context Free grammars CF languages Linear grammars Regular languages

Chomsky Hierarchy There are 4 types of grammars according to the types of rules: Each type recognizes a set of languages. Each language is a subset of the above Recursively Enumerable languages Context Sensitive languages Context Free languages Regular languages

Context free grammars The production rules must be of the form A A is in V is in (V T)*

Linear grammars The Linear Grammars are either left or right: Right Linear Grammars: Rules of the forms A A a A aB Left Linear Grammars: Rules of the forms A A a A Ba A,B: variables and a: terminal A,B: variables and A: terminal

Example S aS | bA A cA |

Example S aS | bA A cA | S aS aaS a aS a abA a abcA a abccA a abc c

Example S aS | bA A cA | This grammar produces the language L(a*bc*) S aS aaS a aS a abA a abcA a abccA a abc c

Regular Languages CF Languages A Linear Grammar is also a CF grammar: Every production rule is of the form A , with A being a variable and being either , a or Ba (a string of variables and terminals). Thus the language produced by a linear grammar is a Context Free languages. We will see that linear grammars actually produce the Regular Languages, which means that all the Regular Languages are also CF. The converse is not true!!! For example LR = {wwR: w in {a, b}* } is not a regular language.

Right Linear Grammars produce exactly the Regular Languages Two directions: 1. Given a Right Linear grammar construct an NFA that recognizes the same language with the Right Linear grammar. 2. Given an NFA construct a Right Linear grammar that describes the same language with the NFA.

1. Right Linear Grammar NFA Suppose that I have a right linear grammar (V, , S, P). I construct an NFA (Q, , , S, {f}). The set of states Q will be the set V U {f}, where f is a new symbol denoting a final state Productions in P have three possible forms: A : add the transition ( , ) = f A a : add the transition (A,a) = f A aB : add the transition ( ,a) = B

Examples 1) Transform the following Right Linear grammar in an equivalent NFA . S aS | bA A cA | Solution: a c b f S

2. NFA Right Linear Grammar Suppose that I have an NFA (Q, , , q0, F,). I construct a right linear grammar (Q, , q0, P). For each transition (qi ,a) = qj, I construct the rule qi aqj in P. Furthermore, for every state qi in F I add the rule qi in P.

Examples 2) Transform the following DFA to a right linear grammar b a q0 q1 b a Solution: q0 aq1 | bq0 q1 aq1 | bq0 |

Left Linear Grammars It can be shown that Left Linear Grammars also produce the Regular Languages but this is not so straightforward. Actually, a Left Linear grammar produces the reverse of the language produced by the Right Linear grammar in which we reversed the rules A Ba to A aB. But the set of the reverse languages of all the Regular Languages is exactly the set of the Regular Languages. So the Left Linear Grammars produce the Regular Languages.

Example C Bc B Ab A a The derivation of abc is: C Bc Abc abc, or abc Abc Bc C So I should start creating the string abc from right to left. But this is equivalent with creating the reverse of cba. C cB cbA cba and then take the reverse.

Example (continued) The Right Linear grammar with the rules A Ba reversed is C cB B bA A a and it produces the reverse language. So, just create the NFA for the language produced by the Right Linear grammar and then compute the reverse (change start with final state and reverse the arrows). This is an NFA for the Left Linear grammar.