Intelligent Information Retrieval: Models, Ranking, and Algorithms

Explore the intricacies of retrieval models, ranking systems, and algorithms in the field of Intelligent Information Retrieval. Learn about the construction of indices, matching and scoring processes, distinguishing between exact-match and best-match retrieval, ranking algorithms like Boolean matching and cosine similarity, and the concept of Boolean retrieval with logic expressions and document features. Dive deep into the world of information retrieval to enhance your understanding of how documents are matched with queries and ranked for optimal retrieval effectiveness.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. Download presentation by click this link. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

E N D

Presentation Transcript

Retrieval Models and Ranking Systems CSC 575 Intelligent Information Retrieval

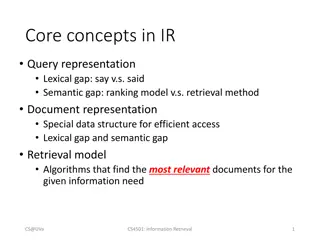

Retrieval Models Model is an idealization or abstraction of an actual process in this case, the process is matching of documents with queries, i.e., retrieval Mathematical models are used to study the properties of the process, draw conclusions, make predictions Conclusions derived from a model depend on whether the model is a good approximation to the actual situation Retrieval models can describe the computational process e.g. how documents are ranked note that inverted file is an implementation not a model Intelligent Information Retrieval 2

Lexical analysis and stop words Information need Collections Pre-process text input Parse Index Query How is the index constructed? Rank How is the matching and scoring done? Result Sets

Retrieval Models Customary to distinguish between exact-match and best-match retrieval Exact-match query specifies precise retrieval criteria every document either matches or fails to match query result is a set of documents Best-match query describes good or best matching document result is ranked list of documents result may include estimate of quality Best-match models: better retrieval effectiveness good documents appear at top of ranking but efficiency is better in exact match (e.g., Boolean) Intelligent Information Retrieval 4

Ranking Algorithms Assign weights to the terms in the query Assign weights to the terms in the documents Compare the weighted query terms to the weighted document terms Boolean matching (exact match) simple (coordinate level) matching (dot product) cosine similarity other normalized similarity measures (Dice, Jaccard, overlap, etc.) probabilistic models Rank order the results pure Boolean has no ordering Intelligent Information Retrieval 5

Boolean Retrieval Boolean retrieval most common exact-match model queries are logic expressions with document features as operands retrieved documents are generally not ranked query formulation difficult for novice users Pure Boolean operators: AND, OR, NOT Some systems have proximity operators Most systems support simple regular expressions as search terms to match spelling variants Intelligent Information Retrieval 6

Boolean Logic AND and OR in a Boolean query represent intersection and union of the corresponding documents sets, respectively NOT represents the complement of the corresponding set = C A = C A = C A B = C DeMorgan' A B B A Law s : = A B A B = A B A B Intelligent Information Retrieval 7

Boolean Queries Boolean queries are Boolean combination of terms Cat Cat OR Dog Cat AND Dog (Cat AND Dog) OR Collar (Cat AND Dog) OR (Collar AND Leash) (Cat OR Dog) AND (Collar OR Leash) (Cat OR Dog) AND (Collar OR Leash) Each of the following combinations works: x x x x x x x x x x x x x x x x Cat Dog Collar Leash x x x x Intelligent Information Retrieval 8

Boolean Matching t1 t2 m1 = t1 t2 t3 D9 D2 D1 m2 = t1 t2 t3 m3 = t1 t2 t3 m5 m3 m6 m4 = t1 t2 t3 m5 = t1 t2 t3 D11 D4 D5 m1 D3 m2 m6 = t1 t2 t3 m7 = t1 t2 t3 D6 m4 D10 m8 = t1 t2 t3 m7 m8 D8 D7 t3 Hit list for the query t1 AND t2 {D1, D3, D5, D9, D10, D11} {D1, D2, D4, D5, D6} = {D1, D5} Intelligent Information Retrieval 9

Psuedo-Boolean Queries A new notation, from web search +cat dog +collar leash Does not mean the same thing! Need a way to group combinations Phrases: stray cat AND frayed collar + stray cat + frayed collar Intelligent Information Retrieval 10

Faceted Boolean Query Strategy: break query into facets conjunction of disjunctions (conjunctive normal form) a1 OR a2 OR a3 b1 OR b2 c1 OR c2 OR c3 OR c4 AND each facet expresses a topic or concept rain forest OR jungle OR amazon medicine OR remedy OR cure research OR development AND Intelligent Information Retrieval 11

Faceted Boolean Query Query still fails if one facet missing Alternative: a form of Coordination level ranking Order results in terms of how many facets (disjuncts) are satisfied Also called Quorum ranking Problem: Facets still undifferentiated Alternative: assign weights to facets Intelligent Information Retrieval 12

Boolean Model Advantages simple queries are easy to understand relatively easy to implement structured queries queries can be automatically translated into CNF or DNF Disadvantages difficult to specify what is wanted too much returned, or too little (acceptable precision generally means unacceptable recall) ordering not well determined query formulation difficult for novice users Dominant language in commercial systems until the WWW Intelligent Information Retrieval 13

Vector Space Model(revisited) Documents are represented as bags of words Represented as vectors when used computationally A vector is an array of floating point (or binary in case of bit maps) Has direction and magnitude Each vector has a place for every term in collection (most are sparse) Document Ids a document vector nova galaxy heat actor film role A 1.0 0.5 0.3 B 0.5 1.0 C 1.0 0.8 0.7 D 0.9 1.0 0.5 E 1.0 F G 0.5 0.7 H 0.6 1.0 0.3 0.2 I 0.7 0.5 1.0 0.7 0.9 0.3 Intelligent Information Retrieval 14

Documents & Query in n-dimensional Space Documents are represented as vectors in term space Queries represented the same as documents Query and Document weights are based on length and direction of their vector A vector distance measure between the query and documents is used to rank retrieved documents Intelligent Information Retrieval 15

The Notion of Similarity in IR The notion of similarity is central to many aspects of information retrieval and filtering: measuring similarity of the query to documents is the primary factor in determining what is returned (and how they are ranked) similarity measures can also be used in clustering documents (i.e., grouping together documents with similar content) the same similarity measures can also be used to group together related terms (based on their occurrence patterns across documents in the collection) Intelligent Information Retrieval 16

Vector-Based Similarity Measures Simple Matching and Cosine Similarity Simple matching = dot product of two vectors = , , , X x x x i 1 2 n = = ( , ) sim X Y X Y x y i i = , , , Y y y y 1 2 n Cosine Similarity = normalized dot product i = 2 X ix the norm of a vector X is: In other words, divide the dot product by the norms of the two vectors the cosine similarity of vectors X and Y is: i ( ) x y i i X Y = = ( , ) sim X Y i i X y 2 2 x y i i Intelligent Information Retrieval 17

Vector-Based Similarity Measures Why divide by the norm? i = 2 X ix = , , , X x x x 1 2 n Example: X = <2, 0, 3, 2, 1, 4> ||X|| = SQRT(4+0+9+4+1+16) = 5.83 X* = X / ||X|| = <0.343, 0, 0.514, 0.343, 0.171, 0.686> Now, note that ||X*|| = 1 So, dividing a vector by its norm, turns it into a unit-length vector Cosine similarity measures the angle between two unit length vectors (i.e., the magnitudes of the vectors are ignored). Intelligent Information Retrieval 18

Computing a similarity score 2D Example = Say we have query vect D or ) 8 . 0 , 4 . 0 ( Q = Also, document ) 7 . 0 , 2 . 0 ( 2 What does their similarity comparison yield? 8 . 0 ( + 4 . 0 ( ) 2 . 0 * ) 7 . 0 * = ( , ) sim Q D 2 ) 8 . 0 ( + ) 7 . 0 ( + 2 2 2 2 ) 4 . 0 [( * ] ) 2 . 0 [( ] . 0 64 = = . 0 98 . 0 42 Intelligent Information Retrieval 19

Computing Similarity Scores = ) 3 . 0 , 8 . 0 ( = D 1 ) 7 . 0 , 2 . 0 ( D 1.0 Q 2 D = ) 8 . 0 , 4 . 0 ( = Q 2 0.8 cos . 0 = 74 1 0.6 2 cos . 0 98 2 0.4 D 1 1 0.2 0.2 0.4 0.6 0.8 1.0 Intelligent Information Retrieval 20

Similarity Measures for Sets Q D T7 T1 T4 Document D and Query Q as sets of terms (i.e. not frequency information) T8 T5 T9 T2 T6 T10 T3 Simple Matching: ? ? ? Dice s ? ? ? ? + ? ? ? ? + ?= ?.?? Coefficient: ? ? ? Jaccard Coefficient: ? + ? ?= ?.? ? + ? ? ? Intelligent Information Retrieval 21

Other Vector Space Similarity Measures Dot Product (Simple Matching): t Cosine Coefficient: Dice s Coefficient: ( ) Jaccard s Coefficient: w w Intelligent Information Retrieval 22 q d j j = = 1 j ( , ) sim Q D t t 2 2 ( ) ( ) w w q d j j = = 1 1 j j

Vector Space Similarity Measures Again consider the following two document and the query vectors: D1 = (0.8, 0.3) D2 = (0.2, 0.7) Q = (0.4, 0.8) Computing similarity using Jaccard s Coefficient: 04 08 + 08 03 + [( . ( . ) ] [( . ) 08 + . ) ( . 08 + . )] 03 = = sim Q D ( , ) 058 . 1 2 2 2 2 [( . ) 04 ( . ) ] 056 . 04 02 + 08 07 + [( . ( . ) ] [( . ) 08 + . ) ( . 02 + . )] 07 = = sim Q D ( , ) 093 . 2 2 2 2 2 [( . ) 04 ( . ) ] 064 . Computing similarity using Dice s Coefficient: sim(Q, D2) = 0.96 sim(Q, D1) = 0.73 Intelligent Information Retrieval 23

Vector Space Similarity Measures Example docs D1 D2 D3 D4 D5 D6 D7 D8 D9 D10 D11 Q t1 2 1 0 4 1 2 0 0 2 0 6 1 t2 0 0 2 0 2 1 3 1 0 4 1 2 t3 3 0 1 0 3 0 1 0 1 2 Q.Di 2 1 4 4 5 4 6 2 2 8 8 5 |Di| 13 1 5 16 14 5 10 1 5 20 37 5 Dice 0.22 0.33 0.80 0.38 0.53 0.80 0.80 0.67 0.40 0.64 0.38 Jaccard Cosine 0.13 0.20 0.67 0.24 0.36 0.67 0.67 0.50 0.25 0.47 0.24 0.25 0.45 0.80 0.45 0.60 0.80 0.85 0.89 0.40 0.80 0.59 0 Intelligent Information Retrieval 24

Vector Space Similarity Measures Example Ranking Using Jaccard Ranking Using Cosine D3 D6 D7 D8 D10 D5 D9 D11 D4 D2 D1 0.67 0.67 0.67 0.50 0.47 0.36 0.25 0.24 0.24 0.20 0.13 D8 D7 D10 D3 D6 D5 D2 D4 D9 D1 D11 0.89 0.85 0.80 0.80 0.80 0.60 0.45 0.45 0.40 0.25 0.59 Intelligent Information Retrieval 25

Probabilistic Models Attempts to be more theoretically sound than the vector space model try to predict the probability of a document s being relevant, given the query there are many variations usually more complicated to compute than v.s. usually many approximations are required Relevance information is required from a random sample of documents and queries (training examples) Works about the same (sometimes better) than vector space approaches Intelligent Information Retrieval 26

Basic Probabilistic Retrieval Retrieval is modeled as a classification process Two classes for each query: the relevant and non-relevant documents (with respect to a given query) Given a particular document D, calculate the probability of belonging to the relevant class retrieve if greater than probability of belonging to non-relevant class i.e. retrieve if P(R|D) > P(NR|D) Equivalently, rank by a discriminant value (also called likelihood ratio) P(R|D) / P(NR|D) Different ways of estimating these probabilities lead to different models Intelligent Information Retrieval 27

Basic Probabilistic Retrieval A given query divides the document collection into two sets: relevant and non-relevant If a document set D has been selected in response to a query, retrieve the document if Relevant Documents P(R|D) dis(D) > 1 where P(NR|D) dis(D) = P(R|D) / P(NR|D) is the discriminant of D Non-Relevant Documents Document This criteria can be modified by weighting the two probabilities Intelligent Information Retrieval 28

Estimating Probabilities Bayes Rule can be used to invert conditional probabilities: ( | ). ( ) P B A P A P B ( ) = P A B ( | ) Applying that to discriminant function: ( | ) ( | ). ( ) ). ( P R D P NR D ( P D R P R P D NR P NR ( | = = ( ) dis D | ) ) Note that P(R) is the probability that a random document is relevant to the query, and P(NR) = 1 - P(R) P(R) = n / N and P(NR) = 1 - P(R) = (N - n) / N where n = number of relevant documents, and N = total number of documents in the collection Intelligent Information Retrieval 29

Estimating Probabilities ( | ) ( | ). ( ) ). ( P R D P NR D ( P D R P R P D NR P NR ( | = = ( ) dis D | ) ) Now we need to estimate P(D|R) and P(D|NR) If we assume that a document is represented by terms t1, . . ., tn, and that these terms are statistically independent, then = ( | ) ( | ) ( | ) P t R P t ( | ) n P t P D R R R 1 2 and similarly we can compute P(D|NR) Note that P(ti|R) is the probability that a term ti occurs in a relevant document, and it can be estimated based on previously available sample (e.g., through relevance feedback) So, based on the probability of the distribution of terms in relevant and non-relevant documents we can estimate whether the document should be retrieved (i.e, if dis(D) > 1) Note that documents that are retrieved can be ranked based on the value of the discriminant Intelligent Information Retrieval 30

Probabilistic Retrieval (cont.) In practice, can t build a model for each query Instead a general model is built based on query-document pairs in the historical (training) data Then for a given query Q, the discriminant is computed only based on the conditional probabilities of the query terms If query term t occurs in D, take P(t|R) and P(t|NR) If query term t does not appear in D, take 1-P(t|R) and 1- P(t|NR) Q = t1, t3, t4 D = t1, t4, t5 ( | 1 NR ).( 1 ( | 3 t )). ( | 4 t ) ( NR ) P t R P R P R P R = ( , ) dis D Q ( | 1 t ).( 1 ( | 3 t )). ( | 4 t ) ( ) P P NR P NR P Intelligent Information Retrieval 31

Probabilistic Retrieval - Example t1 1 0 1 1 0 0 0 1 0 1 1 t2 1 1 0 1 1 0 1 1 0 0 0 t3 0 1 1 1 0 0 0 0 1 1 0 t4 1 0 0 1 1 1 0 1 1 0 1 t5 0 0 1 0 0 1 0 0 1 1 1 Relevance R R NR NR NR R NR NR R NR Term t1 t2 t3 t4 t5 P(t|R) 1/4 2/4 2/4 3/4 2/4 P(t|NR) 4/6 4/6 3/6 3/6 2/6 D1 D2 D3 D4 D5 D6 D7 D8 D9 D10 D Q = t1, t3, t4 D = t1, t4, t5 ( | 1 NR ).( 1 ( | 3 t )). ( | 4 t ) ( NR ) P t R P R P R P R = ( , ) dis D Q ( | 1 t ).( 1 ( | 3 t )). ( | 4 t ) ( ) P P NR P NR P 1 ( ) 5 . 0 . 0 . 0 25 75 4 . 0 = = ( , ) . 0 373 dis D Q 1 ( ) 5 . 0 5 . 0 . 0 67 6 . 0 Since the discriminant is less than one, document D should not be retrieved Intelligent Information Retrieval 32

Probabilistic Retrieval (cont.) In practice, can t build a model for each query Instead a general model is built based on query-document pairs in the historical (training) data Then for a given query Q, the discriminant is computed only based on the conditional probabilities of the query terms If query term t occurs in D, take P(t|R) and P(t|NR) If query term t does not appear in D, take 1-P(t|R) and 1- P(t|NR) Term t1 t2 t3 t4 t5 P(t|R) 1/4 2/4 2/4 3/4 2/4 P(t|NR) 4/6 4/6 3/6 3/6 2/6 Q = t1, t3, t4 D = t1, t4, t5 ( | 1 NR ).( 1 ( | 3 t )). ( | 4 t ) ( NR ) P t R P R P R P R = ( , ) dis D Q ( | 1 t ).( 1 ( | 3 t )). ( | 4 t ) ( ) P P NR P NR P 1 ( ) 5 . 0 . 0 . 0 25 75 4 . 0 = = ( , ) . 0 373 dis D Q 1 ( ) 5 . 0 5 . 0 . 0 67 6 . 0 Intelligent Information Retrieval 33

Probabilistic Models Advantages Disadvantages Strong theoretical basis In principle should supply the best predictions of relevance given available information Can be implemented similarly to Vector Space model Relevance information is required -- or is guestimated as part of training the model Optimally requires on-going collection of relevance information Intelligent Information Retrieval 34

Vector and Probabilistic Models Support natural language queries Treat documents and queries the same Support relevance feedback searching Support ranked retrieval Differ primarily in theoretical basis and in how the ranking is calculated Vector assumes relevance Probabilistic relies on relevance judgments or estimates Intelligent Information Retrieval 35

Retrieval Models and Ranking Systems CSC 575 Intelligent Information Retrieval