Memory Resource Management in VMware ESX Server Overview

This presentation discusses the background of memory resource management in VMware ESX Server, focusing on server consolidation, memory abstractions, and memory reclamation techniques. It covers topics such as page sharing, memory allocation policies, and related work in the field. The use of VMware ESX Server in virtualizing the Intel IA-32 architecture without the need for OS modification is highlighted. Various memory reclamation approaches and the concept of ballooning are also explained in detail.

Presentation Transcript

Memory Resource Management in VMware ESX Server, by Carl A. Waldspurger. Presented by Clyde Byrd III (some slides adapted from C. Waldspurger). EECS 582 W16

Overview: Background; Memory Reclamation; Page Sharing; Memory Allocation Policies; Related Work; Conclusion

Background: Server consolidation is needed. Many physical servers are underutilized, so multiple workloads are consolidated per machine using virtual machines. VMware ESX Server is a thin software layer designed to multiplex hardware resources efficiently among virtual machines. It virtualizes the Intel IA-32 architecture and runs existing operating systems without modification.

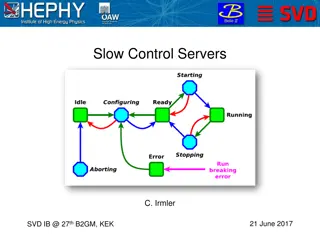

ESX Server

Memory Abstractions in ESX: Terminology. Machine address: actual hardware memory. Physical address: a software abstraction used to provide the illusion of hardware memory to a virtual machine. Pmap: a per-VM table that translates physical page numbers (PPNs) to machine page numbers (MPNs). Shadow page tables: contain virtual-to-machine page mappings.
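To make the three address spaces concrete, here is a minimal Python sketch, assuming plain dictionaries stand in for the guest page table, the per-VM pmap, and the shadow page table. The class `VMMemory` and its methods are hypothetical illustrations, not ESX code. The point is that a shadow entry (virtual page to MPN) is the composition of the guest's virtual-to-physical mapping with the pmap, so the VMM can change the machine page behind a PPN without the guest noticing.

```python
from typing import Dict, Optional


class VMMemory:
    """Hypothetical per-VM state; dicts stand in for hardware page tables."""

    def __init__(self) -> None:
        self.guest_page_table: Dict[int, int] = {}  # guest-managed: virtual page -> PPN
        self.pmap: Dict[int, int] = {}              # VMM-managed: PPN -> MPN
        self.shadow: Dict[int, int] = {}            # used by the MMU: virtual page -> MPN

    def translate(self, vpn: int) -> Optional[int]:
        """Return the MPN backing a virtual page, filling the shadow entry on a miss."""
        if vpn in self.shadow:
            return self.shadow[vpn]
        ppn = self.guest_page_table.get(vpn)
        if ppn is None:
            return None  # genuine guest page fault: the guest OS handles it
        mpn = self.pmap.get(ppn)
        if mpn is None:
            return None  # PPN not currently backed: the VMM must supply a machine page
        self.shadow[vpn] = mpn
        return mpn

    def remap(self, ppn: int, new_mpn: int) -> None:
        """Back a PPN with a different machine page, invisibly to the guest."""
        self.pmap[ppn] = new_mpn
        # Drop shadow entries derived from the old PPN -> MPN mapping.
        for vpn, mapped_ppn in self.guest_page_table.items():
            if mapped_ppn == ppn:
                self.shadow.pop(vpn, None)


vm = VMMemory()
vm.guest_page_table[0x10] = 5   # guest maps virtual page 0x10 to PPN 5
vm.pmap[5] = 900                # VMM backs PPN 5 with MPN 900
print(vm.translate(0x10))       # 900
vm.remap(5, 42)
print(vm.translate(0x10))       # 42
```

Because the guest only ever sees PPNs, this extra level of indirection is what lets the VMM share or relocate machine pages behind a VM's back.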

Memory Abstractions in ESX (cont.)

Memory Reclamation: Traditional approach: introduce another level of paging, moving some VM physical pages to a swap area on disk. Disadvantages: requires a meta-level page replacement policy; introduces performance anomalies; suffers from the double paging problem.

Memory Reclamation (cont.): The hip approach: implicit cooperation. Coax the guest into doing the page replacement, avoiding meta-level policy decisions.

Ballooning

Ballooning (cont.): Throughput of a Linux VM running dbench with 40 clients. The black bars plot the performance when the VM is configured with main memory sizes ranging from 128 MB to 256 MB. The gray bars plot the performance of the same VM configured with 256 MB, ballooned down to the specified size.

Ballooning (cont.): Ballooning is not always available, for example during OS boot or when the driver is explicitly disabled. Ballooning may not respond fast enough in certain situations. The guest OS may impose limitations that place an upper bound on balloon size.
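The ballooning mechanism can be sketched as follows, under heavy simplifying assumptions: the guest is a pool of free PPNs, the balloon driver pins pages by allocating them from that pool, and the VMM reclaims the machine pages that backed them. `Guest`, `BalloonDriver`, and `max_balloon` are hypothetical names; `max_balloon` models the guest-imposed upper bound on balloon size, and a real guest would page out or shrink caches under pressure rather than simply fail when its free pool is empty.

```python
from typing import Dict, List, Optional, Set


class Guest:
    """Hypothetical guest OS with a pool of free physical pages (PPNs)."""

    def __init__(self, num_pages: int, max_balloon: int) -> None:
        self.free_pages: Set[int] = set(range(num_pages))
        self.max_balloon = max_balloon  # guest-imposed upper bound on balloon size

    def alloc_pinned_page(self) -> Optional[int]:
        # A real guest would reclaim caches or page out to its own swap here;
        # this sketch simply fails when no free page is left.
        return self.free_pages.pop() if self.free_pages else None


class BalloonDriver:
    """Hypothetical balloon driver running inside the guest."""

    def __init__(self, guest: Guest, pmap: Dict[int, int], machine_free: List[int]) -> None:
        self.guest = guest
        self.pmap = pmap                  # this VM's PPN -> MPN map (held by the VMM)
        self.machine_free = machine_free  # MPNs the VMM can hand to other VMs
        self.balloon: Set[int] = set()    # PPNs pinned inside the balloon

    def set_target(self, target_pages: int) -> int:
        """Inflate or deflate toward target_pages; return the resulting balloon size."""
        target = min(target_pages, self.guest.max_balloon)
        while len(self.balloon) < target:  # inflate
            ppn = self.guest.alloc_pinned_page()
            if ppn is None:
                break
            self.balloon.add(ppn)
            # The guest will not touch this PPN, so the VMM reclaims its machine page.
            self.machine_free.append(self.pmap.pop(ppn))
        while len(self.balloon) > target:  # deflate
            ppn = self.balloon.pop()
            # Back the PPN again before the guest reuses it
            # (assumes the VMM still has a free machine page available).
            self.pmap[ppn] = self.machine_free.pop()
            self.guest.free_pages.add(ppn)
        return len(self.balloon)


guest = Guest(num_pages=8, max_balloon=5)
driver = BalloonDriver(guest, {ppn: 100 + ppn for ppn in range(8)}, [])
print(driver.set_target(6))  # 5: capped by the guest's upper bound
print(driver.set_target(2))  # 2
```

The design choice worth noticing is that the guest, not the VMM, decides which of its pages to give up when the balloon grows, which is how ballooning leverages the guest's own replacement policy.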

Page Sharing: ESX Server exploits redundancy of data across VMs. Multiple instances of the same guest OS can and do share some of the same data and applications, so sharing across VMs can reduce total memory usage. The system tries to share a page before swapping it out.

Page Sharing (cont.): Content-Based Page Sharing. Identify page copies by their contents: pages with identical contents can be shared regardless of when, where, or how those contents were generated. Sharing runs as a background activity that saves memory over time. Advantages: eliminates the need to modify, hook, or even understand guest OS code; able to identify more opportunities for sharing.
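A minimal sketch of content-based page sharing, assuming each VM's pmap is a Python dict and machine pages are byte strings. Candidate pages are hashed, a hash hit is confirmed with a full comparison, and matching PPNs are then mapped to one machine page that is treated as copy-on-write; a later write gives the writer a private copy. `PageSharer` is a hypothetical name, SHA-1 from `hashlib` is an arbitrary choice of hash, and the single `by_hash` table simplifies the paper's scheme, which also keeps "hint" entries for pages that have been hashed but not yet shared.

```python
import hashlib
from typing import Dict

PAGE_SIZE = 4096


class PageSharer:
    """Hypothetical content-based sharing over per-VM pmaps (PPN -> MPN)."""

    def __init__(self) -> None:
        self.machine: Dict[int, bytes] = {}  # MPN -> page contents
        self.refcount: Dict[int, int] = {}   # MPN -> number of PPNs mapping it
        self.by_hash: Dict[bytes, int] = {}  # content hash -> candidate MPN
        self._next_mpn = 0

    def alloc(self, data: bytes) -> int:
        mpn = self._next_mpn
        self._next_mpn += 1
        self.machine[mpn] = data
        self.refcount[mpn] = 1
        return mpn

    def _release(self, mpn: int) -> None:
        self.refcount[mpn] -= 1
        if self.refcount[mpn] == 0:
            del self.machine[mpn], self.refcount[mpn]

    def scan(self, pmap: Dict[int, int], ppn: int) -> bool:
        """Try to share the page behind ppn; return True on a successful match."""
        mpn = pmap[ppn]
        data = self.machine[mpn]
        key = hashlib.sha1(data).digest()
        match = self.by_hash.get(key)
        # A hash hit is only a candidate: confirm with a full comparison, since the
        # candidate may have been freed, modified, or may be a hash collision.
        if match is None or match == mpn or self.machine.get(match) != data:
            self.by_hash[key] = mpn
            return False
        pmap[ppn] = match       # map to the shared copy (treated as copy-on-write)
        self.refcount[match] += 1
        self._release(mpn)      # reclaim the redundant machine page
        return True

    def write(self, pmap: Dict[int, int], ppn: int, data: bytes) -> None:
        """Guest write: a shared page gets a private copy first (copy-on-write)."""
        mpn = pmap[ppn]
        if self.refcount[mpn] > 1:
            self.refcount[mpn] -= 1
            pmap[ppn] = self.alloc(data)
        else:
            self.machine[mpn] = data
```

For example, two VMs that each hold a zero-filled page end up sharing one machine page after both are scanned, and the first write by either VM splits them apart again.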

Page Sharing (cont.): Scan Candidate PPN

Page Sharing (cont.): Successful Match

Page Sharing (cont.)

Memory Allocation Policies: ESX allows proportional memory allocation for VMs while maintaining memory performance and VM isolation. Allocation is admin-configurable per VM through { min, max, shares }.
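As a rough illustration of how { min, max, shares } interact, the sketch below splits a fixed amount of memory in proportion to shares and clamps each VM to its [min, max] range, redistributing any excess from capped VMs. This static water-filling split is an assumption made for illustration only; ESX reaches its targets dynamically by reclaiming memory from the VM with the lowest shares-per-page ratio. `VMConfig` and `proportional_targets` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class VMConfig:
    name: str
    min_mb: float  # guaranteed minimum
    max_mb: float  # upper limit
    shares: int    # relative entitlement (must be > 0)


def proportional_targets(vms: List[VMConfig], total_mb: float) -> Dict[str, float]:
    """Split total_mb in proportion to shares, clamped to each VM's [min, max]."""
    if sum(vm.min_mb for vm in vms) > total_mb:
        raise ValueError("total memory cannot cover every VM's min")
    alloc = {vm.name: float(vm.min_mb) for vm in vms}
    remaining = total_mb - sum(alloc.values())
    active = [vm for vm in vms if vm.max_mb > vm.min_mb]
    while remaining > 0 and active:
        total_shares = sum(vm.shares for vm in active)
        uncapped = []
        granted = 0.0
        for vm in active:
            want = remaining * vm.shares / total_shares
            give = min(want, vm.max_mb - alloc[vm.name])
            alloc[vm.name] += give
            granted += give
            if give == want:
                uncapped.append(vm)  # not limited by max; could absorb more
        remaining -= granted
        if len(uncapped) == len(active):
            break  # nobody hit max, so everything was handed out
        active = uncapped  # redistribute leftovers among VMs still below max


    return alloc


a = VMConfig("A", 0, 400, 2)
b = VMConfig("B", 0, 1000, 1)
print(proportional_targets([a, b], 900))  # {'A': 400.0, 'B': 500.0}
```

Without the 400 MB cap, A would receive 600 MB and B 300 MB; with it, the 200 MB A cannot hold flows to B.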

Proportional Allocation: Resource rights are distributed to clients through tickets. Clients with more tickets get more resources, in proportion to their share of all tickets in the system. In overloaded situations, client allocations degrade gracefully. Pure proportional sharing can be unfair because it ignores whether allocated memory is actually used, so ESX applies an idle memory tax.
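The basic proportional-share rule for memory can be sketched as: whenever a page must be reclaimed, take it from the client with the lowest shares-per-page ratio S / P. The dictionary layout and the `reclaim` helper are hypothetical; `Fraction` is used only to avoid floating-point ties.

```python
from fractions import Fraction
from typing import Dict, List


def reclaim(clients: Dict[str, List[int]], n: int) -> None:
    """Reclaim n pages, one at a time, from the client paying the least per page.

    clients maps a name to [shares, pages]; page counts are updated in place.
    """
    for _ in range(n):
        holders = [c for c in clients if clients[c][1] > 0]
        if not holders:
            break
        # Shares-per-page ratio S / P: the client with the lowest ratio loses a page.
        victim = min(holders, key=lambda c: Fraction(clients[c][0], clients[c][1]))
        clients[victim][1] -= 1


clients = {"A": [2, 300], "B": [1, 300]}
reclaim(clients, 150)
print(clients)  # {'A': [2, 300], 'B': [1, 150]}
```

With A holding twice B's shares, B loses pages until the allocation reaches the 2:1 share ratio. The rule never asks whether A actually uses its 300 pages, which is exactly the unfairness the idle memory tax targets.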

Idle Memory Tax: A tax on idle memory that charges more for an idle page than for an active page, using an idle-adjusted shares-per-page ratio. The tax rate is an explicit administrative parameter: 0% is too unfair, 100% is too aggressive, and 75% is the default. The high default rate reclaims most idle memory while leaving some buffer against rapid working-set increases.
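One way to write the idle-adjusted ratio, following the formulation in the original paper: with S shares, P pages, active fraction f, and tax rate tau, rho = S / (P * (f + k * (1 - f))), where k = 1 / (1 - tau) is the cost of an idle page relative to an active one. At tau = 0 this reduces to plain S / P; at the default 75%, k = 4. The function name below is hypothetical, and tau is restricted to [0, 1) because k is undefined at exactly 100%.

```python
def idle_adjusted_ratio(shares: float, pages: float,
                        active_fraction: float, tax_rate: float) -> float:
    """Idle-adjusted shares-per-page ratio: S / (P * (f + k * (1 - f)))."""
    if not 0.0 <= tax_rate < 1.0:
        raise ValueError("tax_rate must be in [0, 1); k is undefined at 100%")
    k = 1.0 / (1.0 - tax_rate)  # an idle page costs k times an active page
    return shares / (pages * (active_fraction + k * (1.0 - active_fraction)))


# Two VMs with equal shares and pages; VM1 is mostly idle, VM2 mostly active.
for tau in (0.0, 0.75):
    r1 = idle_adjusted_ratio(100, 256, 0.1, tau)
    r2 = idle_adjusted_ratio(100, 256, 0.9, tau)
    print(tau, round(r1, 4), round(r2, 4))
```

With no tax, the two VMs have equal ratios; at 75%, the mostly idle VM1's ratio falls to roughly a third of VM2's, so VM1 is reclaimed from first.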

Idle Memory Tax (cont.): The tax rate specifies the maximum fraction of idle pages that can be reclaimed from a client and reallocated to active clients. When an idle client starts increasing its activity, its pages can be reallocated back up to its full share. ESX statistically samples pages in each VM to estimate active memory usage; by default, it samples 100 pages every 30 seconds.
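Active-memory estimation by sampling can be sketched as below, assuming the set `touched` stands in for the pages the guest accessed during one 30-second period; ESX itself detects accesses by invalidating the mappings of sampled pages and intercepting the next touch. The default of 100 samples matches the slide. The `smooth` helper and the two gains are hypothetical, loosely modeled on the paper's idea of keeping a slow and a fast moving average and using the larger, which reacts quickly when a VM becomes active again.

```python
import random
from typing import Optional, Set


def estimate_active_fraction(num_pages: int, touched: Set[int],
                             sample_size: int = 100,
                             rng: Optional[random.Random] = None) -> float:
    """Estimate the fraction of a VM's pages that are active by random sampling."""
    rng = rng or random.Random()
    sample = rng.sample(range(num_pages), min(sample_size, num_pages))
    hits = sum(1 for ppn in sample if ppn in touched)
    return hits / len(sample)


def smooth(previous: float, sample: float, gain: float) -> float:
    """Exponentially weighted moving average of per-period estimates."""
    return previous + gain * (sample - previous)


rng = random.Random(0)
pages = 65536                     # 256 MB of 4 KB pages
touched = set(range(pages // 4))  # pretend 25% of pages are in use
slow = fast = 0.0
for _ in range(10):               # ten 30-second sampling periods
    f = estimate_active_fraction(pages, touched, rng=rng)
    slow, fast = smooth(slow, f, 0.1), smooth(fast, f, 0.5)
print(max(slow, fast))            # the fast average approaches ~0.25 sooner
```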

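Idle Memory Tax (cont.): Experiment: two VMs, each configured with 256 MB and the same shares. VM1: Windows boot, then idle. VM2: Linux boot, then dbench. Solid lines show memory usage; dotted lines show active memory. The tax rate is changed from 0% to 75%. After the change to a high tax rate, memory is redistributed from VM1 to VM2: VM1 is reduced to its minimum size, and VM2's throughput improves by 30%.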

Dynamic Reallocation: ESX uses free-memory thresholds to dynamically reallocate memory among VMs. There are four levels: high, soft, hard, and low, with default thresholds of 6%, 4%, 2%, and 1% of system memory. When free memory reaches the low level, ESX can block VMs that are above their target allocations. Rapid state fluctuations are prevented by transitioning back to a higher level only after the higher threshold is significantly exceeded.
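The four-level scheme can be sketched as a small state machine. The exact transition rules here are an assumption: a state is entered when free memory falls below its threshold (soft 4%, hard 2%, low 1%), and the system climbs back one level only after free memory reaches the threshold of the level above it, so returning from soft to high requires 6% free. That gap between entry and exit points is the hysteresis that prevents rapid fluctuations. `next_state` is a hypothetical name, and `ACTIONS` paraphrases the per-state behavior described in the paper.

```python
# Default free-memory thresholds, as a percentage of system memory.
THRESHOLDS = {"high": 6.0, "soft": 4.0, "hard": 2.0, "low": 1.0}
ORDER = ["high", "soft", "hard", "low"]  # from most to least free memory

# Reclamation behavior per state (paraphrased; not an API).
ACTIONS = {
    "high": "no reclamation",
    "soft": "reclaim with ballooning; page only if ballooning is not possible",
    "hard": "reclaim by paging",
    "low": "page, and block VMs above their target allocations",
}


def next_state(current: str, free_pct: float) -> str:
    """Return the new state given the current state and the free-memory percentage."""
    i = ORDER.index(current)
    # Move down while free memory is below the next lower level's threshold.
    while i + 1 < len(ORDER) and free_pct < THRESHOLDS[ORDER[i + 1]]:
        i += 1
    # Move up only once free memory reaches the threshold of the level above.
    while i > 0 and free_pct >= THRESHOLDS[ORDER[i - 1]]:
        i -= 1
    return ORDER[i]


state = "high"
for free in (5, 3.5, 5, 6.5, 0.5, 1.5, 3):
    state = next_state(state, free)
    print(free, state, "->", ACTIONS[state])
```

Note how 5% free keeps the system in soft after it has dropped there, even though 5% would not have triggered the soft state from high.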

Dynamic Reallocation

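I/O Page Remapping: IA-32 supports PAE to address up to 64 GB of memory over a 36-bit address space. However, some devices that use DMA, such as network cards with 32-bit PCI interfaces, can only address the lowest 4 GB, so I/O involving high memory must be copied through low-memory buffers. ESX can therefore remap "hot" pages that are involved in repeated I/O from high machine addresses to lower machine addresses.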
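The address-space arithmetic and the remapping idea can be sketched as below: 2^36 bytes is 64 GB, while a device limited to 32-bit DMA reaches only 2^32 bytes (4 GB). `remap_hot_pages` and its `hot_threshold` parameter are hypothetical; the sketch moves the most I/O-heavy PPNs whose machine pages lie above 4 GB onto free low machine pages, and omits the page copy and the freeing of the old MPN that a real remap would perform.

```python
from typing import Dict, List

PAGE_SIZE = 4096
GIB = 2**30
PAE_LIMIT = 2**36    # 36-bit physical addresses
DMA32_LIMIT = 2**32  # highest address a 32-bit DMA device can reach

print(PAE_LIMIT // GIB, DMA32_LIMIT // GIB)  # 64 4


def is_high(mpn: int) -> bool:
    """True if the machine page lies at or above the 4 GB boundary."""
    return mpn * PAGE_SIZE >= DMA32_LIMIT


def remap_hot_pages(pmap: Dict[int, int], io_counts: Dict[int, int],
                    free_low_mpns: List[int], hot_threshold: int = 10) -> List[int]:
    """Move I/O-heavy PPNs from high MPNs to free low MPNs; return the PPNs moved."""
    moved = []
    # Visit the busiest pages first; stop once pages are no longer "hot".
    for ppn, count in sorted(io_counts.items(), key=lambda kv: kv[1], reverse=True):
        if count < hot_threshold or not free_low_mpns:
            break
        if is_high(pmap[ppn]):
            pmap[ppn] = free_low_mpns.pop()
            moved.append(ppn)
    return moved
```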

Related Work: Disco and Cellular Disco: virtualized servers to run multiple instances of IRIX. VMware Workstation: a Type 2 hypervisor; ESX is Type 1. Self-paging in the Nemesis system: similar to ballooning, but requires applications to handle their own virtual memory operations. Transparent page sharing work in Disco. IBM's MXT memory compression technology: a hardware approach, though some of the same memory savings can be achieved through page sharing.

Conclusion: Key features: flexible dynamic partitioning; efficient support for overcommitted workloads. Novel mechanisms: ballooning leverages guest OS algorithms; content-based page sharing. Integrated policies: proportional sharing with an idle memory tax; dynamic reallocation.