Understanding Maximum Likelihood Estimation in Physics

Maximum likelihood estimation (MLE) is a powerful statistical method used in nuclear, particle, and astrophysics to derive estimators for parameters by maximizing the likelihood function. MLE is very general and can be applied to a wide range of problems, although it can be computationally intensive. ML estimators are usually consistent but are sometimes biased; the bias can be calculated and corrected (calibration) to obtain an unbiased estimator. The method is invariant under parameter transformations, and ML estimators are efficient: they saturate the minimum variance bound, at least in the limit of large samples.


Presentation Transcript

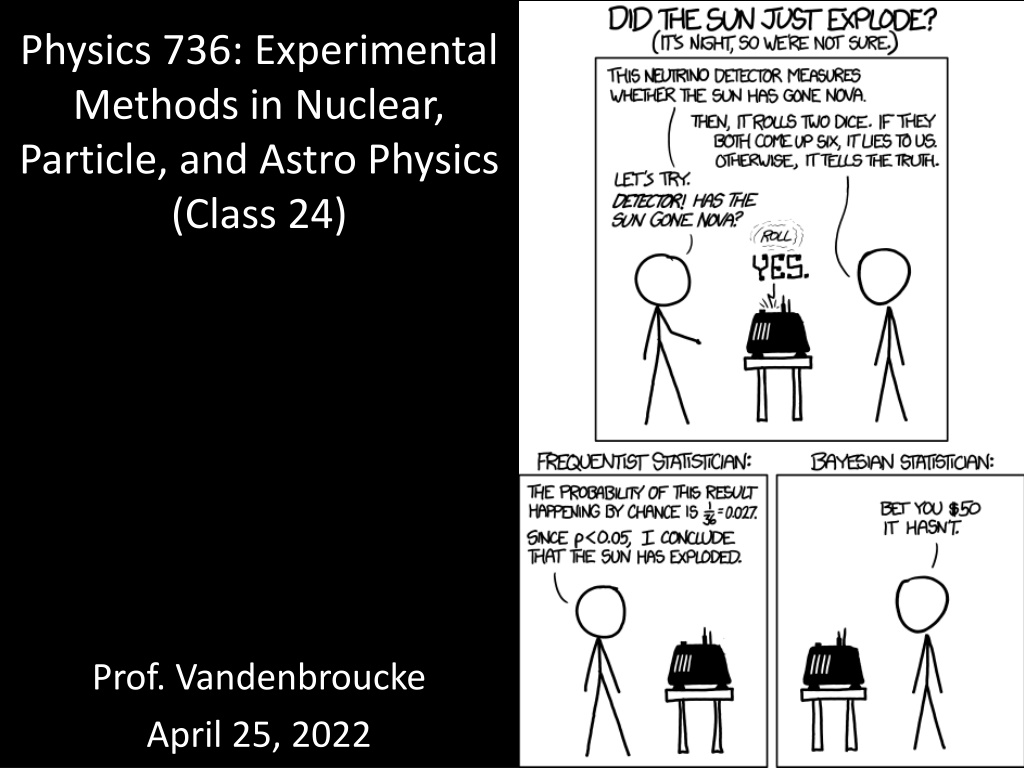

Physics 736: Experimental Methods in Nuclear, Particle, and Astro Physics (Class 24) Prof. Vandenbroucke April 25, 2022

Announcements

Slides from the Meunchmeyer and Bechtol guest classes are posted. No more problem sets! For Wed Apr 27, read Tavernier 8.1-8.4; for Mon May 2, read Tavernier 8.5-8.7; for Wed May 4, read Barlow 8.5-10.4. Project papers are due Mon May 2 at 5:00 pm (1 week from today!). Project oral presentations are on the last day of class, Wed May 4. Office hours: Wed 3:45-4:45. I encourage you to come discuss the final steps of your project.

Maximum likelihood estimation

The likelihood function describes the probability of a certain data set occurring, given a particular model determined by a particular set of parameter values. We can use this to derive estimators for the parameters by maximizing the likelihood. As with least squares estimation, maximum likelihood estimation can sometimes provide an analytical estimator; in general, the maximization can be done numerically. The (maximum) likelihood method is very general and can be applied to a wide range of problems. It can be computationally intensive, because the likelihood includes a term for each data point (the number of terms can be reduced by binning, at the price of losing information). Least squares estimation is actually a special case of maximum likelihood estimation: when the measurement errors are Gaussian, maximizing the likelihood is equivalent to minimizing $\chi^2$, so the least squares estimator can be derived by analytically maximizing the likelihood.
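The last two points can be illustrated with a minimal sketch. It assumes $N$ independent measurements $y_i$ of one constant $\mu$, each with a known Gaussian error $\sigma_i$; the numbers are invented for illustration. For this model $-\ln L = \chi^2/2$ plus a constant, so numerically maximizing the likelihood (here with a hand-written golden-section search, just one of many possible optimizers) should reproduce the analytical least squares estimator, the inverse-variance weighted mean.

```python
import math

# Hypothetical measurements of one constant quantity, with known Gaussian errors
y = [9.8, 10.4, 10.1, 9.7]
sigma = [0.2, 0.4, 0.3, 0.2]


def neg_log_likelihood(mu):
    """-ln L for independent Gaussian measurements, dropping mu-independent terms.

    This is exactly chi^2 / 2, which is why least squares is a special case of ML.
    """
    return sum((yi - mu) ** 2 / (2 * si ** 2) for yi, si in zip(y, sigma))


def golden_section_min(f, a, b, tol=1e-9):
    """Minimize a unimodal function f on [a, b] by golden-section search."""
    invphi = (math.sqrt(5) - 1) / 2  # 1/phi, about 0.618
    c = b - invphi * (b - a)
    d = a + invphi * (b - a)
    while b - a > tol:
        if f(c) < f(d):
            b = d  # minimum lies in [a, d]
        else:
            a = c  # minimum lies in [c, b]
        c = b - invphi * (b - a)
        d = a + invphi * (b - a)
    return (a + b) / 2


# Numerical maximum-likelihood estimate (minimum of -ln L)
mu_ml = golden_section_min(neg_log_likelihood, min(y) - 10, max(y) + 10)

# Analytical least squares estimate: inverse-variance weighted mean
weights = [1 / si ** 2 for si in sigma]
mu_ls = sum(w * yi for w, yi in zip(weights, y)) / sum(weights)

print(f"ML (numerical): {mu_ml:.6f}")
print(f"LS (analytic):  {mu_ls:.6f}")
```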

Maximum likelihood estimation

ML estimators are usually consistent. They are sometimes biased; the bias can be calculated and corrected to determine an unbiased estimator (calibration). ML estimation is invariant under parameter transformation: if $\hat{\theta}$ is the ML estimator of a parameter $\theta$, then $f(\hat{\theta})$ is the ML estimator of $f(\theta)$. This is useful: a transformation can be applied if a different variable is easier to work with (this may introduce bias, which then needs to be corrected). Maximum likelihood estimators are efficient: they saturate the minimum variance bound, at least in the limit of large samples.
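A minimal simulation of bias and calibration, assuming Gaussian data: the ML estimator of the variance, $\hat{\sigma}^2 = \frac{1}{N}\sum_i (x_i - \bar{x})^2$, has expectation $\frac{N-1}{N}\sigma^2$, so multiplying by $N/(N-1)$ removes the bias. The sample size, number of trials, and seed are arbitrary choices. By invariance, $\sqrt{\hat{\sigma}^2}$ is the ML estimator of $\sigma$, and it is itself biased, illustrating that a transformation can introduce bias that would need its own correction.

```python
import numpy as np

rng = np.random.default_rng(seed=1)
true_sigma = 1.0
n_points = 5          # small samples make the bias obvious
n_trials = 200_000    # number of simulated experiments

# Each row is one simulated experiment with n_points Gaussian measurements
data = rng.normal(loc=0.0, scale=true_sigma, size=(n_trials, n_points))

# ML estimator of the variance divides by N (ddof=0)
var_ml = data.var(axis=1, ddof=0)

# Calibrated (bias-corrected) estimator: multiply by N / (N - 1)
var_corrected = var_ml * n_points / (n_points - 1)

# Invariance: the ML estimator of sigma is the square root of the ML variance.
# It is still biased low, so it would need its own correction.
sigma_ml = np.sqrt(var_ml)

print("mean ML variance:       ", var_ml.mean())         # expected (N-1)/N * sigma^2
print("mean corrected variance:", var_corrected.mean())  # expected sigma^2
print("mean ML sigma:          ", sigma_ml.mean())
```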

Back to probability basics

Example 1: Mary draws a card from a deck of 52. What is the probability that it is a spade? 13/52 = 25.0%.
Example 2: After drawing a spade as the first card from a deck of 52, Mary draws a second card from the same deck. What is the probability that it is a spade? 12/51 = 23.5%.
Example 3: Mary draws two cards from a deck of 52. What is the probability that both are spades? (13/52)*(12/51) = P(A)*P(B|A) = 5.9%.
P(A and B) = P(A)*P(B|A). This is conditional probability.
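The three card examples can be checked with exact rational arithmetic. This short sketch uses Python's standard `fractions` module; the variable names are illustrative.

```python
from fractions import Fraction

deck_size = 52
spades = 13

# Example 1: P(first card is a spade)
p_first = Fraction(spades, deck_size)
# Example 2: P(second is a spade | first was a spade): one spade and one card removed
p_second_given_first = Fraction(spades - 1, deck_size - 1)
# Example 3: multiplication rule P(A and B) = P(A) * P(B|A)
p_both = p_first * p_second_given_first

for name, p in [("P(A)", p_first), ("P(B|A)", p_second_given_first), ("P(A and B)", p_both)]:
    print(f"{name:11s} = {p} = {float(p):.1%}")
```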

Conditional probability

Define two events A and B. P(A) is the probability of A occurring; P(B) is the probability of B occurring; P(A|B) is the probability of A occurring given that B occurs. Then P(A and B) = P(B)*P(A|B). In the special case that A and B are independent, P(A|B) = P(A), so P(A and B) = P(B)*P(A): this is the multiplication rule.

Example: Cyprus

The population of Cyprus is 70% Greek and 30% Turkish. 20% of Greeks speak English, and 10% of Turks speak English. What fraction of Cypriots speak English?
P(E) = P(E|G)*P(G) + P(E|T)*P(T) = 0.2*0.7 + 0.1*0.3 = 17%.
This is partitioning, a powerful technique: enumerate all possible contributions (paths) and their probabilities.
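Partitioning translates directly into a sum over mutually exclusive, exhaustive groups. A minimal sketch using the Cyprus numbers above (the dictionary layout is just one convenient choice):

```python
# Partitioning (law of total probability): P(E) = sum over groups of P(E|group) * P(group)
groups = {
    # group: (P(group), P(speaks English | group))
    "Greek": (0.7, 0.2),
    "Turkish": (0.3, 0.1),
}

# The groups must be mutually exclusive and cover the whole population
assert abs(sum(p_group for p_group, _ in groups.values()) - 1.0) < 1e-12

p_english = sum(p_group * p_e_given_group for p_group, p_e_given_group in groups.values())
print(f"P(E) = {p_english:.0%}")
```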

Example: medical testing, false and true positives

0.1% of children are born with spina bifida. There is a fetal protein test for it: 100% of babies with spina bifida test positive (true positive), and 5% of babies without spina bifida test positive (false positive). If a baby tests positive, what is the probability that it has spina bifida?
Notation: S = has spina bifida; N = does not; + = tests positive; - = tests negative.
P(S) = 0.001, P(N) = 0.999, P(+|S) = 1, P(+|N) = 0.05, P(S|+) = ?
P(S and +) = P(S|+)P(+) = P(+|S)P(S), so P(S|+) = P(+|S)P(S) / P(+) = P(+|S)P(S) / [P(+|S)P(S) + P(+|N)P(N)].
P(S|+) = 1*0.001 / [1*0.001 + 0.05*0.999] = 2.0% (!)
The prior probability (base rate) was 0.1%; the posterior probability is 2.0%. The posterior probability is still small, but it is about 20 times the prior probability. This is still valuable, because it can be used to indicate that a more accurate (and more expensive) test should be done. This is known as the base rate fallacy, or false positive paradox.
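The same calculation as a small reusable function: a sketch that assumes a binary partition (has the condition / does not), so that $P(+)$ is expanded exactly as in the denominator above. The function name and its parameters are hypothetical.

```python
def posterior_given_positive(prior, p_pos_given_true, p_pos_given_false):
    """P(condition | positive test) via Bayes's theorem, with P(+) expanded by
    partitioning over 'has the condition' and 'does not have it'."""
    p_positive = p_pos_given_true * prior + p_pos_given_false * (1 - prior)
    return p_pos_given_true * prior / p_positive


p_s_given_pos = posterior_given_positive(prior=0.001, p_pos_given_true=1.0, p_pos_given_false=0.05)
print(f"P(S|+) = {p_s_given_pos:.1%}")
print(f"posterior / prior = {p_s_given_pos / 0.001:.1f}")  # how much the test raised our belief
```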

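Bayes's theorem

Rearranging P(A and B) = P(A|B)P(B) = P(B|A)P(A) gives

$$P(A|B) = \frac{P(B|A)\,P(A)}{P(B)}, \qquad P(B) = \sum_i P(B|A_i)\,P(A_i)$$

where the events $A_i$ are mutually exclusive and exhaustive (e.g. A and not-A). When we do not directly know P(B), which happens often, it is useful to partition P(B) using two or more routes to B, each of which is calculable, as in the second expression. P(A) is the prior probability and P(A|B) is the posterior probability: our degree of belief that A is true changes with the addition of more information (the knowledge that B is true).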

Example: polygraph

The NSA is hiring and gives you a lie detector test. If you are lying, the machine has a 99% probability of beeping. If you are telling the truth, the machine has a 99% probability of being silent. 0.5% of people lie to the NSA during a lie detector test. If the machine beeps, what is the probability that you are lying?
Notation: L = lying; T = telling the truth; B = beeps; S = silent.
P(B|L) = 0.99, P(S|T) = 0.99 (so P(B|T) = 0.01), P(L) = 0.005 (so P(T) = 0.995).
P(L and B) = P(L|B)*P(B) = P(B|L)*P(L), so P(L|B) = P(B|L)*P(L) / P(B).
P(B) = P(B|L)*P(L) + P(B|T)*P(T) = 0.99*0.005 + 0.01*0.995 = 0.0149.
P(L|B) = 0.99*0.005 / (0.99*0.005 + 0.01*0.995) = 0.00495 / 0.0149 = 33.2%.
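As a cross-check of the 33.2% result, this sketch simulates a large hypothetical population (its size and the seed are arbitrary) with the stated lying rate and machine response, then counts what fraction of the people who triggered a beep were actually lying. The Monte Carlo fraction should agree with Bayes's theorem up to statistical fluctuations.

```python
import numpy as np

rng = np.random.default_rng(seed=42)
n_people = 1_000_000

p_lie = 0.005             # P(L)
p_beep_if_lying = 0.99    # P(B|L)
p_silent_if_truth = 0.99  # P(S|T), so P(B|T) = 0.01

# Simulate who lies, then whether the machine beeps for each person
lying = rng.random(n_people) < p_lie
p_beep = np.where(lying, p_beep_if_lying, 1 - p_silent_if_truth)
beeps = rng.random(n_people) < p_beep

# Fraction of beeps that came from liars: Monte Carlo estimate of P(L|B)
p_lying_given_beep = lying[beeps].mean()

# Exact result from Bayes's theorem, with P(B) partitioned over L and T
p_b = p_beep_if_lying * p_lie + (1 - p_silent_if_truth) * (1 - p_lie)
exact = p_beep_if_lying * p_lie / p_b

print(f"simulated P(L|B) = {p_lying_given_beep:.3f}")
print(f"exact     P(L|B) = {exact:.3f}")
```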

Back to the cards example

A card is drawn from a fair deck of 52. What is the probability that it is a spade? P(A) = 13/52 = 1/4 = 25%.
Two cards are drawn from a deck. What is the probability that the second is a spade? If we do not know what the first card was, it is P(B) = 13/52 = 25%. If we know the first card was a spade, it is 12/51 = 23.5%.
What is the probability that the first two cards are both spades? P(A and B) = P(A)*P(B|A) = (13/52)*(12/51) = 5.9%.
Note that the probability we assign to an event depends not only on the event itself but also on any other relevant information we have: external/prior knowledge modifies our degree of belief that the event will occur.
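A quick Monte Carlo sketch (the seed and number of trials are arbitrary) illustrates how the assigned probability depends on what we know: without information about the first card, the second card is a spade about 25% of the time, while conditioning on a spade as the first card lowers this to about 12/51. Cards are encoded as the integers 0 to 51, with 0 to 12 standing for the spades; this labeling is an arbitrary choice.

```python
import random

rng = random.Random(2022)
n_trials = 200_000
deck = range(52)  # cards 0-12 are the spades


def is_spade(card):
    return card < 13


second_spade = 0
first_spade = 0
both_spades = 0
for _ in range(n_trials):
    first, second = rng.sample(deck, 2)  # draw two cards without replacement
    second_spade += is_spade(second)
    if is_spade(first):
        first_spade += 1
        both_spades += is_spade(second)

print(f"P(second spade), first unknown: {second_spade / n_trials:.3f}")     # about 13/52
print(f"P(second spade | first spade):  {both_spades / first_spade:.3f}")  # about 12/51
print(f"P(both spades):                 {both_spades / n_trials:.3f}")     # about 1/17
```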

Bayesian statistics

Bayes's theorem can be used fully within frequentist statistics when A and B are both events. When A or B is a hypothesis rather than an event, the two schools of thought diverge. In Bayesian (subjective) statistics, specifically Bayesian inference, we can assign a probability to a hypothesis and interpret it as our degree of belief that the hypothesis is true. Bayesian inference is a quantitative method for updating our degree of belief in a hypothesis as more information is acquired.

Bayesian parameter estimation

In Bayesian estimation, the parameters have a prior probability distribution, which may be peaked at a particular value. This is a useful way of including constraints on parameters that come from knowledge other than the experiment itself (such as results of other measurements, or well-supported models). The likelihood function is the joint PDF for the data $x$ given one or more model parameters $\theta$:

$$L(x|\theta) = f(x_1, \ldots, x_N|\theta), \quad \text{which for independent measurements is } \prod_{i=1}^{N} f(x_i|\theta)$$

With a measurement, we wish to invert this: given a data set, what are the parameters? We can do this with Bayes's theorem:

$$p(\theta|x) = \frac{L(x|\theta)\,\pi(\theta)}{\int L(x|\theta')\,\pi(\theta')\,d\theta'}$$

Here $\pi(\theta)$ is the prior probability density (or just prior) for $\theta$, quantifying the state of knowledge of $\theta$ before running the experiment, and $p(\theta|x)$ is the posterior probability density for $\theta$ given the experimental results: a PDF giving our estimate of $\theta$ after running the experiment. We can quote the most likely value (mode) of the posterior PDF. If $\pi(\theta)$ is constant, this reduces to maximum likelihood estimation; if $\pi(\theta)$ is not constant, it weights the result toward our prior best estimate.
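A minimal grid-based sketch of Bayesian estimation, assuming independent Gaussian measurements with a known $\sigma$ of a single parameter $\theta$; the data, the grid, and the Gaussian prior (centered at 3.0 with width 0.5) are invented for illustration. With a flat prior, the posterior mode should coincide with the ML estimate (here the sample mean); the Gaussian prior pulls the mode toward its center, to $(N\bar{x}/\sigma^2 + \mu_0/\sigma_0^2)/(N/\sigma^2 + 1/\sigma_0^2)$ for this particular Gaussian-prior, Gaussian-likelihood case.

```python
import numpy as np

# Hypothetical data: Gaussian measurements of one parameter theta, known error sigma
x = np.array([4.8, 5.6, 5.1, 4.3, 5.9])
sigma = 1.0
theta = np.linspace(0.0, 10.0, 100_001)  # grid of parameter values
dtheta = theta[1] - theta[0]

# ln L(x|theta) for independent Gaussian measurements (theta-independent constants dropped)
log_like = -0.5 * (((x[:, None] - theta[None, :]) / sigma) ** 2).sum(axis=0)


def posterior(log_prior):
    """Posterior density p(theta|x) on the grid: likelihood times prior, normalized."""
    log_post = log_like + log_prior
    post = np.exp(log_post - log_post.max())  # subtract the max to avoid underflow
    return post / (post.sum() * dtheta)


# Flat prior: pi(theta) = constant, so ln pi is 0 up to a constant
post_flat = posterior(np.zeros_like(theta))
# Hypothetical Gaussian prior centered on 3.0 with width 0.5 (e.g. from an earlier measurement)
post_gauss = posterior(-0.5 * ((theta - 3.0) / 0.5) ** 2)

mode_flat = theta[np.argmax(post_flat)]
mode_gauss = theta[np.argmax(post_gauss)]

print("ML estimate (sample mean):     ", x.mean())
print("posterior mode, flat prior:    ", mode_flat)
print("posterior mode, Gaussian prior:", mode_gauss)
```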

Notes on Bayesian estimation

A prior PDF that is uniform in a particular parameter is not necessarily uniform under a transformation of that parameter! So it is difficult to choose a prior that universally expresses ignorance: e.g., choosing a uniform prior for $\theta$ means that the prior for $\theta^2$ is not uniform, and there is no clear reason that either one is the natural parameter for the problem. Maximum likelihood estimation is invariant under parameter transformation, but Bayesian estimation is not.
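A short numerical illustration, assuming a prior uniform on $0 \le \theta \le 1$: sampling $\theta$ from it and squaring shows that the implied prior on $\theta^2$ has density $1/(2\sqrt{\theta^2})$ rather than being flat. The sample size and seed are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(seed=7)
theta = rng.uniform(0.0, 1.0, size=1_000_000)  # uniform prior on theta in [0, 1]
theta_sq = theta ** 2                            # the same prior, expressed in theta^2

# If theta^2 were uniform on [0, 1], this fraction would be 0.25.
# Instead P(theta^2 < 0.25) = P(theta < 0.5) = 0.5: the induced prior on theta^2
# has density 1 / (2 sqrt(theta^2)), strongly favoring small values.
print("P(theta   < 0.25) =", np.mean(theta < 0.25))
print("P(theta^2 < 0.25) =", np.mean(theta_sq < 0.25))
```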

Hypothesis testing

We've discussed parameter estimation at length: given a data set and a model, find the best estimate for the parameters of the model. Another class of questions (sometimes even more important) asks for a yes-or-no answer:
Do the data fit the model?
Is there signal present (or only background)?
Are these two samples from the same distribution (e.g., are these two experimental results consistent with one another)?
Does this vaccine perform better than a placebo?
Procedure: state a hypothesis as clearly and simply as possible, then devise a test that will accept or reject the hypothesis based on the data. Due to statistical uncertainty, you cannot always answer the question correctly (with 100% certainty). You can, however, quantify the probability of answering incorrectly (in both possible ways), and you can design your statistical test to choose a tradeoff between these possible incorrect answers.

Hypothesis testing: 4 possible outcomes

A hypothesis is true, and you accept it (correctly).
A hypothesis is false, and you reject it (correctly).
A hypothesis is true, and you reject it (incorrectly).
A hypothesis is false, and you accept it (incorrectly).

Hypothesis testing for determining whether there is signal present in background/noise

It is often not possible to prove that a signal does not exist, only that it is small (e.g., below some value at some confidence level). Define a null hypothesis: "The measured data are consistent with background." The alternative hypothesis is then: "The measured data are inconsistent with background." In a simple experiment, the alternative hypothesis is identical to the hypothesis "signal is present"; often, however, there are multiple alternative hypotheses. To discover a signal, we need to reject the null hypothesis (at some confidence level). We need to quantify:
What is the probability of falsely rejecting the null hypothesis (a false discovery)?
What is the probability of falsely accepting the null hypothesis (a missed discovery)?

Quantifying a decision

[Figure: distributions of a test statistic (some observable metric) assuming either hypothesis is true, with the critical value marked.]

Type I error: claiming a signal when there is only background (false positive). Type II error: missing a signal when it is present (false negative). The probability of a Type I error corresponds to the significance of the test. It is quantified as a p-value: the probability of claiming a signal due to a background fluctuation (blue area). Increasing the critical value makes a Type I error less likely (a more significant test) but decreases the power (sensitivity). The probability of missing a signal is the red area; the power is 1 minus the red area, and it depends on the signal strength. A good test is one with high significance (low Type I error probability) and high power; in realistic experiments, there is a tradeoff between the two.
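A minimal sketch of this tradeoff, assuming the simplest textbook case: the test statistic is Gaussian with mean 0 and width 1 when only background is present, and Gaussian with mean $s = 3$ and the same width when a signal is present (the value of $s$ is hypothetical). The Type I error probability is the background tail above the critical value (the blue area), and the power is the signal probability above it (1 minus the red area). It uses only `statistics.NormalDist` from the Python standard library.

```python
from statistics import NormalDist

background = NormalDist(mu=0.0, sigma=1.0)  # test statistic if only background
signal = NormalDist(mu=3.0, sigma=1.0)      # hypothetical signal strength s = 3


def type_one_error(critical):
    """P(statistic > critical | background only): false-discovery probability."""
    return 1.0 - background.cdf(critical)


def power(critical):
    """P(statistic > critical | signal present) = 1 - P(Type II error)."""
    return 1.0 - signal.cdf(critical)


# Raising the critical value lowers the false-positive rate but also the power
for critical in (1.0, 1.645, 2.0, 3.0):
    print(f"critical={critical:5.3f}  alpha={type_one_error(critical):.4f}  "
          f"power={power(critical):.3f}")
```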

Point estimation and interval estimation

The estimators we have discussed are point estimators: the best single estimate of what the true value is. Often the uncertainty of the estimate (measurement) is as important as the best estimate. Simple symmetric error bars are a common example of interval estimation: within which range does the true value of the quantity I am measuring lie? By necessity, you will not always be correct (that the true value lies within the quoted range). It is necessary to specify a confidence level (probability of being correct) in order to construct and state a confidence interval. Higher-confidence-level intervals cover a larger range (are less constraining) than lower-confidence-level intervals.

Confidence intervals

Example: we measure the mass of a person to be 78 kg, with a one-sigma uncertainty of 0.5 kg. We quote this as 78 ± 0.5 kg. If the error is Gaussian, this means:
The true value has a 68% probability of being between 77.5 and 78.5 kg (within 1 sigma of our best estimate): this is our 68% confidence (central) interval.
The true value has a 95% probability of being between 77 and 79 kg (within 2 sigma of our best estimate): this is our 95% confidence (central) interval. (Strictly, ±2 sigma covers 95.4%; an exact 95% central interval is ±1.96 sigma.)
The true value has a 99.7% probability of being between 76.5 and 79.5 kg (within 3 sigma of our best estimate): this is our 99.7% confidence interval.
We can say, e.g., "at 95% confidence level, the mass is between 77 and 79 kg." We can construct a central interval at whichever confidence level we like, e.g. 90% (1.64 sigma).
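These Gaussian coverage numbers can be reproduced with `statistics.NormalDist` from the Python standard library. This sketch computes the coverage of $\pm k\sigma$ for the mass example and, in the other direction, the half-width in sigma of a central interval at a chosen confidence level.

```python
from statistics import NormalDist

std_normal = NormalDist()  # mean 0, sigma 1


def coverage(n_sigma):
    """Probability that a Gaussian variable lies within +/- n_sigma of its mean."""
    return std_normal.cdf(n_sigma) - std_normal.cdf(-n_sigma)


def n_sigma_for(cl):
    """Half-width (in sigma) of the central interval with confidence level cl."""
    return std_normal.inv_cdf(0.5 + cl / 2)


best, err = 78.0, 0.5  # the mass example, in kg
for k in (1, 2, 3):
    print(f"{k} sigma: [{best - k * err}, {best + k * err}] kg, CL = {coverage(k):.1%}")
for cl in (0.90, 0.95):
    k = n_sigma_for(cl)
    print(f"{cl:.0%} CL: +/- {k:.3f} sigma -> [{best - k * err:.2f}, {best + k * err:.2f}] kg")
```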

Choice of confidence interval

"At 95% CL, x lies between x- and x+" is still not a sufficient statement. For a given confidence level (e.g. 95%), there are many possible ranges to quote, each of which covers 95% of the cases. Three standard choices:
Central (aka equal-tailed) 95% interval: the probability of being above x+ equals the probability of being below x-.
Symmetric 95% CL interval: x- and x+ are equidistant from the mean.
Shortest 95% CL interval: x+ - x- is the minimum possible while still covering 95%.
For symmetric, unimodal PDFs such as the Gaussian, the three are identical, but in general a choice must be made and stated. In general, two choices must be made: the confidence level, and which interval construction method to use at that level. A complete statement: "The 95% confidence level central interval for x is (x-, x+)." The central interval is the most widely used.
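To see that the three constructions really differ for an asymmetric PDF, this sketch evaluates them numerically on a fine grid for a Gamma distribution with shape 5 (mean 5, mode 4), chosen only as a convenient skewed example. The shortest interval is found by adding grid points in order of decreasing density, which yields a single interval only for a unimodal PDF; the symmetric interval adds points in order of distance from the mean.

```python
import math

import numpy as np

# Hypothetical skewed PDF: Gamma distribution with shape 5 and scale 1 (mean 5, mode 4)
x = np.linspace(0.0, 40.0, 400_001)
dx = x[1] - x[0]
pdf = x ** 4 * np.exp(-x) / math.factorial(4)
prob = pdf * dx
prob /= prob.sum()  # probability in each grid cell, normalized to 1
cl = 0.95


def collect(order):
    """Take grid points in the given order until their total probability reaches cl;
    return the (lower, upper) edges of the region they cover."""
    n_keep = np.searchsorted(np.cumsum(prob[order]), cl) + 1
    kept = x[order[:n_keep]]
    return kept.min(), kept.max()


# Central (equal-tailed): (1 - cl)/2 of the probability in each tail
cdf = np.cumsum(prob)
central = (x[np.searchsorted(cdf, (1 - cl) / 2)], x[np.searchsorted(cdf, (1 + cl) / 2)])

# Symmetric about the mean: add points in order of distance from the mean
mean = np.sum(x * prob)
symmetric = collect(np.argsort(np.abs(x - mean)))

# Shortest: add points in order of decreasing density (valid for a unimodal PDF)
shortest = collect(np.argsort(-pdf))

for name, (lo, hi) in [("central", central), ("symmetric", symmetric), ("shortest", shortest)]:
    print(f"{name:9s} 95% interval: ({lo:.2f}, {hi:.2f}), width {hi - lo:.2f}")
```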