Overview of Compiler Technology and Related Terminology

Compiler technology involves software that translates high-level language programs into lower-level languages, such as machine or assembly language. It also covers decompilers, assemblers, interpreters, linkers, loaders, language rewriters, and preprocessing steps used in compilation. Understanding these terms is crucial in the field of programming and software development.

Uploaded on Sep 23, 2024 | 0 Views

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. Download presentation by click this link. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

E N D

Presentation Transcript

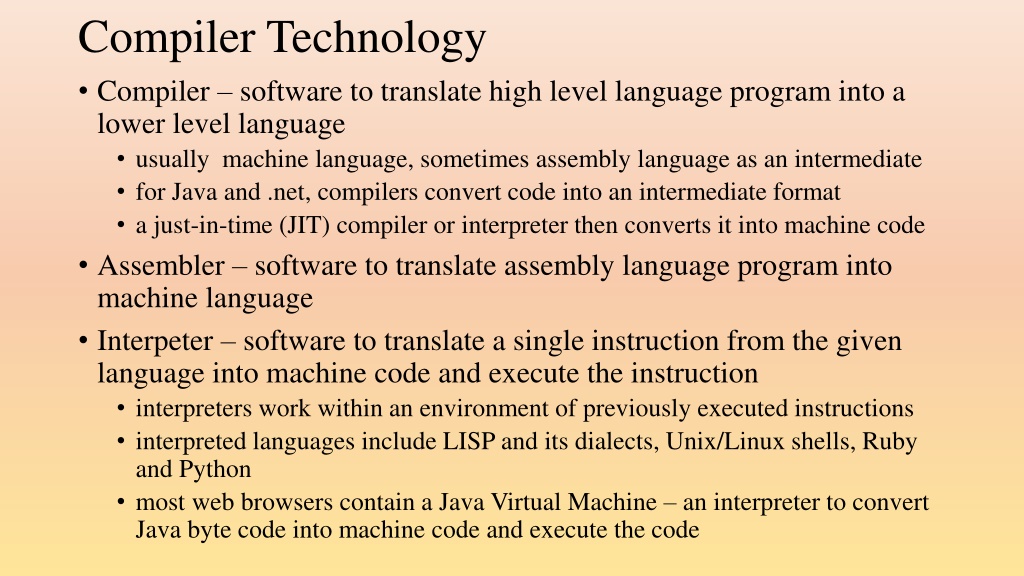

Compiler Technology Compiler software to translate high level language program into a lower level language usually machine language, sometimes assembly language as an intermediate for Java and .net, compilers convert code into an intermediate format a just-in-time (JIT) compiler or interpreter then converts it into machine code Assembler software to translate assembly language program into machine language Interpeter software to translate a single instruction from the given language into machine code and execute the instruction interpreters work within an environment of previously executed instructions interpreted languages include LISP and its dialects, Unix/Linux shells, Ruby and Python most web browsers contain a Java Virtual Machine an interpreter to convert Java byte code into machine code and execute the code

More Terminology Decompiler program that translates from a lower level language (usually assembly) into a higher-level language this process is ambiguous because there are many ways a series of low level instructions can be represented in a high level way Linker program that connects the external references of source code to their definitions in a library linking is often part of the compilation process although compilers allow you to separate this process for the translation process Loader program that loads executable code into memory a linker/loader locates the external definitions and loads them with the linker a dynamic linker/loader loads at run-time only the external definitions needed a static linker/loader will load all definitions found in the program Language rewriter program that translates a program in a language to a new program in the same language aspect-oriented programming relies on language rewriters for instance it could also be used to replace deprecated instructions

Yet More Terminology Preprocessing a step taken by some compilers prior to compilation to handle various simple chores in C for instance, # statements are preprocessor operations like textual substitution for #define Symbol table collection of user-defined entities (variables, subroutines, classes, constants) for each entity, the symbol table records the name, type, size/internal structure this is used by the compiler to bind references in the source code to a memory location and to determine the amount of memory that needs to be allocated to run the given subroutine (note that constants may be stored separately in a literal table ) Tokens the reserved words and syntactic constructs that are defined in the language for instance, ; is the semicolon operator and == is the equality operator

One-Pass Compilers A compiler operates on the source program instruction-by-instruction In a one-pass compiler, the compiler only makes one pass through the program, translating each instruction into machine code this drastically limits the complexity of the source language in that there can be no forward references subroutine calls cannot be to code listed beneath the call within the file variable declarations must be placed in one location so that a symbol table can be generated this would be the case in languages like Pascal and C Java s for loop would not be permissible because the compiler needs to first scan the for loop to determine if it is a counting loop or iterator loop before it can start translating the code Pascal uses a one-pass compiler C may be implemented in a one-pass compiler but gcc and Visual Studio are multi- pass compilers

Multi-pass Compilers The multi-pass compiler makes multiple passes through the source code usually, each pass corresponds to a different process of compilation lexical analysis breaking code into lexical units syntactic parsing identifying the type of instruction and its component parts, generating a parse tree semantic parsing applies semantic rules to the parse tree to ensure that the code meets the semantic requirements of the language code generation generating either the machine code or an intermediate form (e.g., assembly code, byte code) code optimization rearranging instructions to better first the parallel processing/pipelining aspects of the hardware a variation is the two-pass compiler one pass to perform lexical and syntactic analysis and form the symbol table a second pass to perform semantic analysis and translation

Why One-Pass? Such compilers are smaller and execute much faster they are also far easier to write than multi-pass compilers Pascal and C were both written with one-pass compilers in mind thus we have restrictions in these languages that we won t have in C++, Java, etc Most languages today use multi-pass compilers because the language is too complex for a single pass this allows for better language features declarations anywhere characters whose usage depends on context (for instance the period in COBOL used as the end of a sentence or a decimal point) forward referencing instructions (such as subroutines defined lower in the file) in addition, multiple passes allows for errors generated only for the given pass rather than either all found errors or the next error found (in the case of Pascal) also the ability to optimize code

Compiler Passes: Preprocessing LISP offers macros to replace code before substitution this is more an interpreter operation than a compiler preprocessing step PL/I was the first language to offer preprocessing three types %INCLUDE include text into the source file (%XINCLUDE includes text that is not already in the file) %DECLARE declare preprocessing variable (character or fixed only) %assignment assign a variable a value %ACTIVATE/%DEACTIVATE activate/deactivate a variable %DO/%END start and end of a block of several preprocessor statements %PROCEDURE define a preprocessor subroutine %SELECT conditional statement for preprocessing, also %IF, %THEN, %ELSE %REPLACE textual substitution C was the next language to offer this and simplified what PL/I had OCAML (predecessor of F#) has preprocessing known as Camlp4

Compiler Passes: Lexical Analysis for for-operator ( open-paren-operator i identifier = assignment-operator 0 literal-number ; semicolon-operator i identifier < relational-operator n identifier ; semicolon-operator i identifier ++ increment-operator ) close-paren-operator Treat the source file as a stream of characters Break the stream into meaningful units tokens (or language primitives) example: for(i=0;i<n;i++) analysis shown to the right Simple left-to-right parsing compare each grouping with the available tokens apply rules for identifier names, literal numbers, special syntactic structures like i++ As we are recognizing regular expressions, we can use a finite state automata Errors caught in this pass are lexical errors other errors may still exist in the code but won t be caught during this pass

Example Lexical Analysis

Example FSA Let s see how to recognize a legal identifier in C rule: consist of letters, digits, underscores but starts with a letter or underscore The FSA starts in state 1: start state there are two links from state 1 one link is traversed if the input character is a letter or underscore and leads to state 2 the other link is traversed for any other character and leads to an error state state 2 is reached if the first character is legal and has three links one link loops back to state 2 and is traversed if the next input character is a letter, digit or underscore one link leads to the end state (meaning a legal identifier) and taken if we have reached the end of this token one link leads to an error state and is taken under any other situation code from this FSA is given on the next slide

Implementing the Identifier FSA c = getChar(); if(!isAlpha(c)&&c!= _ ) return false; while((c=getChar())!=EOF) if(!isalnum(c)&&c!= _ ) return false; // error return true; // error // reached the end of token, return true We make the assumption that there is a way to determine the end of this token (denoted as EOF) but in actuality, this is more challenging and requires recursion This code works as follows get the first character if not legal, return false if legal, continue while there are more characters if a character is not legal, return false when done, return true

Constructing a Lexical Analyzer Define all of the tokens for the language if a token can vary (e.g., identifier, literal value) further define it as a regular expression build a FSA for each token connect the FSAs together into a single, large FSA The program LEX, built in 1975, is a tool for generating the lexical analyzer given the tokens (and their rules) of the language an example input for LEX is given on the next slide of a language that has numbers (integers only) simple identifiers (consist only of lower case letters) reserved words WHILE, DO, IF, THEN, ELSE, FI, OD and PRINT punctuation marks of :=, ;, =, !=, <=, >=, <, >, =, +, -, *, /, (, )

%{ #include "y.tab.h" extern int yylval; %} %% "=" { return EQ; } "!=" { return NE; } "<" { return LT; } "<=" { return LE; } ">" { return GT; } ">=" { return GE; } "+" { return PLUS; } "-" { return MINUS; } "*" { return MULT; } "/" { return DIVIDE; } ")" { return RPAREN; } "(" { return LPAREN; } ":=" { return ASSIGN; } ";" { return SEMICOLON; } "IF" { return IF; } "THEN" { return THEN; } "ELSE" { return ELSE; } "FI" { return FI; } "WHILE" { return WHILE; } "DO" { return DO; } "OD" { return OD; } "PRINT" { return PRINT; } [0-9]+ { yylval = atoi(yytext); return NUMBER; } [a-z]+ { yylval = sum of all ASCII chars; return NAME; } . { yyerror("illegal token"); } %% #ifndef yywrap yywrap() { return 1; } #endif

Syntactic Analysis Given a sequence of lexical items, do they form a syntactically valid construct e.g., a C for loop will be: for-operator open-paren-operator optionally: identifier assignment-operator expression (or literal number or identifier) semicolon operator optionally: boolean expression (or literal number or identifier) semicolon operator optionally: identifier assignment-operator expression (or identifier with prefix/postfix increment operator) close-paren-operator optionally: open-curly-brace-operator statement(s) optionally: close-curly-brace-operator we need to write a parser (recognizer) piece of code for every construct

Parsers The parser code will all be divided into functions, one per syntactic unit these functions can be called recursively functions will return true (valid parse) or false (error) on error, the parser must identify what rule failed and generate a syntax error message based on that rule these messages are hardcoded into the parser, one per rule functions will have a side effect of building a parse tree for the unit A parser function will contain function calls to retrieve the next token and then test that token against the expected token e.g., in the function to recognize a for loop, the first token must be the for-operator followed by the open-paren-operator, etc the parser s logic is based on the grammar and can be automatically generated from the grammar but then we need to add other rules (such as balancing { })

Recursive Decent Parsing The approach described is called recursive decent parsing because grammar rules can have multiple right hand sides, parsing is O(2^n) for instance, imagine the follow two rules A bB | C B bD if we are currently in a state of A and our next token is b , do we select bB or C and then bD? if we pick the wrong one, we have to backtrack and this is where the complexity becomes exponential in nature in reality, our programming languages do not have the type of ambiguity that we find in human languages (e.g., English) so there are few situations where we see rules like the above and thus the parsers will usually operate at a much better complexity To use a RDP, we need the grammar to be non-left-recursive If the grammar has left-recursive rules, we instead opt for a bottom-up parser, or chart parser as we already examined these in chapter 4, we skip the details here

YACC Yet Another Compiler Compiler this is a tool, often used with LEX, to generate a parser given the grammar of a language YACC generates a shift-reduce parsers that performs 1 look ahead this means that the parser looks ahead by 1 token before deciding which rule to employ although this makes the compiler more complicated, it reduces the amount of backtracking Input into YACC is the lexemes from LEX the root node of the grammar (the start state) all of the grammar rules example input shown on the next several slides

Input into YACC: Lexemes %start ROOT %token ASSIGN %token SEMICOLON %token IF %token THEN %token ELSE %token FI %token WHILE %token DO %token OD %token PRINT %token NUMBER %token NAME %token EQ %token NE %token LT %token LE %token GT %token GE %token PLUS %token MINUS %token MULT %token DIVIDE %token RPAREN %token LPAREN %%

Input into YACC: Grammar ROOT: stmtseq { execute($1); } ; statement: designator ASSIGN expression { $$ = assignment($1, $3); } | PRINT expression { $$ = print($2); } | IF expression THEN stmtseq ELSE stmtseq FI { $$ = ifstmt($2, $4, $6); } | IF expression THEN stmtseq FI { $$ = ifstmt($2, $4, empty()); } | WHILE expression DO stmtseq OD { $$ = whilestmt($2, $4); } ; stmtseq: stmtseq SEMICOLON statement { $$ = seq($1, $3); } | statement { $$ = $1; } ;

expression: expr2 { $$ = $1; } | expr2 EQ expr2 { $$ = eq($1, $3); } | expr2 NE expr2 { $$ = ne($1, $3); } | expr2 LT expr2 { $$ = le($1, $3); } | expr2 LE expr2 { $$ = le($1, $3); } | expr2 GT expr2 { $$ = gt($1, $3); } | expr2 GE expr2 { $$ = gt($1, $3); } ; expr2: expr3 { $$ == $1; } | expr2 PLUS expr3 { $$ = plus($1, $3); } | expr2 MINUS expr3 { $$ = minus($1, $3); } ;

expr3: expr4 { $$ = $1; } | expr3 MULT expr4 { $$ = mult($1, $3); } | expr3 DIVIDE expr4 { $$ = divide ($1, $3); } ; expr4: PLUS expr4 { $$ = $2; } | MINUS expr4 { $$ = neg($2); } | LPAREN expression RPAREN { $$ = $2; } | NUMBER { $$ = number($1); } | designator { $$ = $1; } ; designator: NAME { $$ = name($1); } ;

Parse Tree For the expression b *b 4 * a * c

Parsing void add_to_array(int *x, int y, int n) { int i = 0; for (i = 0; i < n; i++) { x[i] = x[i] + y; } }

Semantic Analysis With a parse available, attributes are added to the stored content in the parse for instance, types are added to identifier names rules are then applied to ensure that the semantics of the language are being upheld, for example proper types are used on the left and right hand side of an assignment statement proper number and type of parameters between the actual and formal parameter list proper operator(s) applied in the given context (+ used for numbers or Strings, ++ used for ordinal values, < and > used on comparable types of values) type coercions, object binding (if possible), initializing local variables (if possible) are handled in this step the compiler must implement the attribute grammar rules for semantic analysis

Code Generation This is a planning step given a parse, there are many ways to convert this into assembly code, for instance which data are preloaded into registers which addressing mode is used when referencing a datum in memory which instruction format will be used (if the platform has multiple instruction formats for a type of instruction) often, compilers use implement a solution to the graph coloring problem to determine variable to register loaded (this is an NP complete problem!)

Example How should a = b * c + d * e; be compiled? Assume the hardware has 8 registers, R0-R7, and ALU operations can reference up to 1 memory location below are two solutions the one on the left is more efficient if b, c, d and e are not referenced again, otherwise preloading all data into variables is more advantageous load R1, b load R2, c mult R3, R1, R2 load R4, d load R5, e mult R6, R4, R5 add R7, R3, R6 store R7, a load R1, b mult R2, R1, c load R3, d mult R4, R3, e add R5, R2, R4 store R5, a

Table of Costs To determine what addressing modes to use, the compiler may utilize a table of costs like the one below Mode Direct Register Indexed Indirect Register Indirect Indirect Indexed (c + (R)) Literal Address M R c + (R) (M) (R) Example Add M Add R1 Add 100(R1) Add (M) Add (R1) Cost 1 0 1 2 1 Add *100(R1) Add #5 2 0 #V

Code Optimization There are many strategies that can reduce the run-time cost of executing code code rearranging fill what would be stalls in the pipeline with neutral instructions algebraic simplification move a result that is used again into a register x = 7 * y + 5 a; // move 7 * y into a register z = 7 * y * c; dead code elimination remove code that cannot be reached function inlining replace the function call with the actual function body to save runtime overhead of using the run-time stack loop optimization rearrange nested loops that process data in non-contiguous order into one that processes data in contiguous order (this might improve cache performance) loop unrolling used to fill branch penalty spots with additional instructions We explore many of these concepts in CSC 462/562