Universal Approximation Theorem in Neural Networks

Explore the Universal Approximation Theorem, which states that a feedforward single hidden layer network can approximate continuous functions under mild assumptions on the activation function. Learn about practical and theoretical uses, including replicating black box functions, visualization tools, and optimization through backpropagation.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

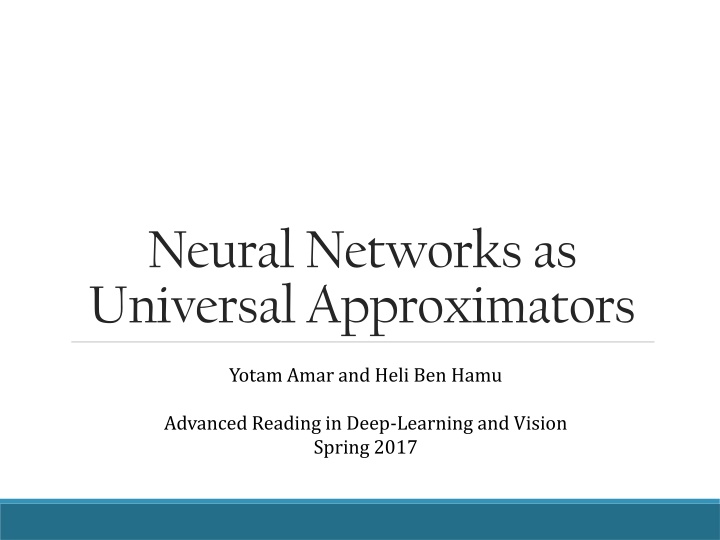

Neural Networks as Universal Approximators Yotam Amar and Heli Ben Hamu Advanced Reading in Deep-Learning and Vision Spring 2017

Motivation Practical uses: Practical uses: Replicating black box functions: decryption functions More effective implementation Visualization tools of neural networks Optimization of functions using backpropagation of the approximation Theoretical uses: Theoretical uses: Understanding NN as hypothesis class What NN can be used for? (except vision)

Universal Approximation Theorem

Universal Approximation Theorem ? ? ? ? ?(?) ? Theorem Theorem. A feedforward single hidden layer network with finite width can approximate continuous functions on compact subsets of n under mild assumptions on the activation function.

Single Hidden Layer NN ?1 ?2 ? = [?1,?2, ??] Output Neuron ?? ? ?? + ??) G X = ?=1 ???(?? Input Layer Hidden Layer ?? + ??) ?? neuron: ?(??

Quick Review: Measure Theory ( , ,?) a measure space Set of all outcomes Measure ? algebra A measure ? takes a set from and returns the measure of the set . Measure of A = ???(?) Measurable function Measurable function a function defined on a measurable set. Borel Borel algebra algebra the smallest ?- -algebra containing all open sets. Borel Borel measure measure a measure defined over a Borel ?- -algebra.

Notations ?? - ?-dimensional unit cube ?(??) space of continuous functions on ?? ?(??) space of finite, signed regular Borel measures on ??

Definitions Discriminatoy Function Discriminatoy Function We say that ? is discriminatory if for a measure ? ? ?? ? ??? + ? ??(?) = 0 ? ? ??? ? ?? Then ? = 0 Sigmoidal Function Sigmoidal Function We say that ? is sigmoidal if ? ? 1 ?? ? + 0 ?? ?

Theorem 1 [Cybenko 89] Let ? be any continuous discriminatory function. Then finite sums of the form: ? ?? + ??) G X = ?=1 ???(?? are dense in ?(??). In other words, given any ? ?(??) and ? > ?, there is a sum, ?(?), of the above form, for which ? ? ? ? < ?

Theorem 1 [Cybenko 89] ? discriminatory ? ? ?(?) ? continuous

Proof of Theorem 1 [Cybenko 89] Let ? ?(??) be the set of functions of the form ?(?). ? is linear. Claim: Claim: The closure of ? is all ?(??) ?(??) ?

Proof of Theorem 1 [Cybenko 89] Let ? ?(??) be the set of functions of the form ?(?). ? is linear. Claim: Claim: The closure of ? is all ?(??) Corollary of Hahn Corollary of Hahn- -Banach Banach Theorem Theorem Let ?, be a normed linear space, ? a subspace of V and ? ?. ? ??(?) bounded linear functional ?:? s.t.: ? ? = 0 ? ? and ? ? 0

Proof of Theorem 1 [Cybenko 89] In our case: V = ? ?? , U=S For some ? ?? assume: bounded linear ?:? ?? ,?( ) 0 s.t ? ? = 0 By Hahn-Banach - ??(?) ?(??) ??(?) ? ??(?) ?

Proof of Theorem 1 [Cybenko 89] Riesz Representation Theorem (Relaxed Version) Riesz Representation Theorem (Relaxed Version) Any bounded linear functional ? on ? ?? can be written as an integration against a measure ? ? ?? ? ? ??(?) , ? ?(??) ? ? = ?? Therefore, ? can be represented as: ? ? = ? ? ??(?) , ? ?(??) ??

Proof of Theorem 1 [Cybenko 89] In particular ? ?,? : ? ??? + ? ? Therefore ?,b ? ??? + ? ??(?) ? ? ??? + ? = 0 = ?? ????? However, by our assumption that ? is discriminatory ? = 0 And hence ? = 0 Contradiction! Contradiction! ? is dense in ? ??.

Theorem 1 [Cybenko 89] ? discriminatory ? ? ?(?) ? continuous

Lemma 1 [Cybenko 89] Any bounded, measurable sigmoidal function, ?, is discriminatory. In particular, any continuous sigmoidal function is discriminatory.

Theorem 2 [Cybenko 89] ? ? ? ?(?) ? continuous sigmoidal

Good Bad

Can We Classify? The previous result shows that we can approximate continuous functions up to a desired precision. However, classification is a task which involves approximating a discontinuous function - a decision function. YES!

What is the catch? No guaranty of layer size The curse of dimensionality: the number of nodes needed is usually dependent on the dimension No optimization guarantees Are there functions with better guarantees? Are there functions with better guarantees? What about multilayer? What about multilayer? New results for Barron function: The approximation does not depend on the dimension 1 function can be approximated with 1 hidden layer A composition of n functions can be approximated by n hidden layers Still no optimization guarantees

Barron Functions ??,? Theorem

Barron functions Let ? ? be a bounded set, function ? is called C C- -Barron Barron on B on B if: ?:? ?, ?: ? , ?|?= ?, ?? ? ? Fourier transform for ?: ? : 1 ? ? ? ? ? ? ?,??? 2?? ? For ?: ? ? the Fourier transform is component-wise. Inverse Fourier transform: 1? ? ? ? ?? ?,??? = 2?? ? ?

Barron functions Let ? ? be a bounded set, function ? is called C C- -Barron Barron on B on B if: ?:? ?, ?: ? , ?|?= ?, ?? ? ?? For ? ? a bounded set and function ?: ? , we will define: ??= sup ?,? ? ? ?? ?? ?(?) ?? ? ??= ?: ? ? ?,? ? = ? 0 + ?? ?,? 1 ? ? ??

Barron functions Let ? ? be a bounded set, function ? is called C C- -Barron Barron on B on B if: ?:? ?, ?: ? , ?|?= ?, ?? ? ? We will mark by ??? the set of C-Barron functions on B. For ?:? we will define ??,? to be its Barron constant: ??,? inf ?? ?|?=?,? ?

Barron functions Some facts: Some facts: If ??,?< then ? is continuously differentiable ??,? is larger the more ?is spread out Calculating ??,? is an open question. It is easy to get upper bounds (by choosing some extension ?) Its hard to get lower bounds, but there are ways

Barron Theorem [Bar93] Let ? ? be a bounded set ?:? that is C-Barron on ? ? a probability measure on ? ?: a sigmoidal function Then there exist ?? ?,?? ,?? with ?=1 ?? 2? we will mark ??(?) = ?=1 Such that: ? ? ?? + ?? ??? ?? 2?2 ? 2??(?) 2 ? ?? ? ? ? ??? ?

Common Activation Functions Name Name Plot Plot Equation Equation Range Range ? ? = 0 ,? < 0 1 ,? 0 Binary Step {0,1} 1 Logistic (0,1) ? ? = 1 + ? ? TanH ( 1,1) ? ? = tanh(?) ? 2,? ? ? = tan 1(?) Arctan 2 ? ? = 0 ,? < 0 ? ,? 0 Relu [0, )

Multilayer Barron ??,? Theorem

Multilayer Barron Theorem [2017] 0 ? ?, ??: ?? 1 ??, ?? , We want to have: ?~?1 ?2 ??= ??:1 g X ~?1~?1 ?2 ~?? 1:1

Multilayer Barron Theorem [2017] Let ?,? > 0 be parameters. for 0 ? ?, we will have: ?? , ??: ?? 1 ??, ?? ?? set ?0 probability distribution on ?0. Suppose that: 1. ???? ?0 ?0 (Support of initial distribution) 2. ?? is 1-Lipschitz 3. ?1 ?0(?0) and for 1 ? ? , ?? ?? 1+???? 1(??) (?? is Barron) 4. ???? 1 ?? (?? takes each set to the next) We will also mark the diameter of ?? by ?. 2?? ?2 4?? Then there exists a neural network ? with ? hidden layers, with nodes on the ?th layer such that: 1 2 ? ??:1 ?2?? ?? 1 + 2?? ??+ ?2 3?2 ?0

Multilayer Barron Theorem [2017] Why is this important? Why is this important? Because there are complex functions that are combination of baron functions ? ,? = ? ? ?? ??,??,?? ????(?) ?? ????(?)

Multilayer Barron Theorem [2017] What What s next? s next? Is there a separation between composition of ? functions and ? + 1 functions? What natural transformations can be represented by composition of baron functions? Can we learn the approximation? how many examples do we need? (optimization)

Matlab Demo

fitnet Vs. parametric curve fitting Curve Fitting Curve Fitting Advantages: if the model of the function is known, can just estimate the parameters. Disadvantages: need to have a model to begin with fitting the function. fitnet fitnet Advantages: does not need a model of the function in order to approximate well. Disadvantages: sometimes, we want to learn from the parameters of the model, however the NN is a black box.

References George Cybenko, 1989, Approximation by superpositions of a sigmoidal function Rudin, Walter (1991).Functional analysis. McGraw-Hill Science/Engineering/Math (Hahn Banach Theorem) Riesz Representation Theorem - Wolfram Holden Lee, Rong Ge, Andrej Risteski, Tengyu Ma, Sanjeev Arora, On the ability of neural nets to express distributions

Theorem 2 [Cybenko 89] Let ? be any continuous sigmoidal form: sigmoidal function. Then finite sums of the ? ???(???? + ??) G X = ?=1 are dense in ?(??). In other words, given any ? ?(??) and ? > ?, there is a sum, ?(?), of the above form, for which ? ? ? ? < ? ? ??

Proof of Theorem 1 [Cybenko 89] Hahn Hahn- -Banach Banach Theorem Suppose 1. ? is a subspace of a real linear space ?. 2. ?:? is sublinear, i.e. ? ? + ? ? ? + ? ? and ? ?? = ?? ? if ?,? ? and ? 0. 3. ?:? is linear and ? ? ?(?) on ?. Theorem Then, a linear functional ?:? such that: ? ? = ? ? ? ? ? ? ? ? ? ? ? ?

Theorem 3 [Cybenko 89] Let ? be a continuous sigmoidal function. Let ? be any decision function of finite measurable partition of ??. For any ? > 0, there is a finite sum of the form: ? ???(???? + ??) G X = ?=1 and a set ? ?? with measure ? ? 1 ? such that: ? ? ? ? < ? ? ? Proof idea: use Lusin s theorem and theorem 2.

Multilayer Barron Theorem [2017] Why is this important? Why is this important? because there are functions that are combination of ????(?)-Barron functions, but they are not ????(?)-Barron themselves: In the article they so an example for function ?: ? that is a composition of two ????(?)-Barron functions, But is not ? ??- Barron for some ? > 1. A network with multiple hidden layers of poly(n) width can A network with multiple hidden layers of poly(n) width can approximate functions that need exponential number of nodes with approximate functions that need exponential number of nodes with 1 1 hidden layer. hidden layer.