Understanding L3 and VXLAN Networking for OpenStack Deployments

Today, many OpenStack deployments rely on L2 networks, but there are limitations with this approach, including scalability issues and wasted capacity. The solution lies in transitioning to L3 networking designs, which offer benefits such as increased availability, simplified feature sets, and better scalability. VXLAN, a network overlay technology, encapsulates L2 frames as UDP packets, providing a solution for maximizing connectivity and segregation of projects in OpenStack environments.


Presentation Transcript

Who We Are
- Nolan Leake, Cofounder and CTO, Cumulus Networks
- Chet Burgess, Vice President of Engineering, Metacloud
OpenStack Summit Spring 2014

Today, most non-SDN controller based OpenStack deployments use L2 networks.

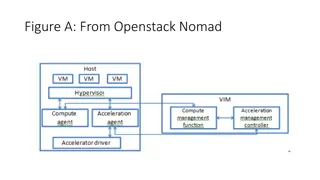

Traditional Enterprise Network Design
[Diagram: three tiers (Core, Aggregation, Access), with ECMP at L3 toward the core, VRRP at the aggregation tier, and STP at L2 toward the access tier.]

What's wrong with L2?
- Aggregation tier must be highly available/redundant
- Aggregate/Core scalability
  - MAC/ARP table limits, VLAN exhaustion, East-West choke points
- Wasted capacity (STP blocking ports)
- Proprietary protocols/extensions
  - MLAG, vPC, etc.

L3: A better design
- IP fabrics are ubiquitous
  - Proven at scale (the Internet, massive datacenter clusters)
- Simple feature set
  - No alphabet soup of L2 protocols
- Scalable
  - L2/L3 boundary
- ECMP (Equal Cost Multi-Path)
  - Each link is active at all times
  - Maximize link utilization
  - Predictable latency
  - Better failure handling

L3: A better design
[Diagram: spine and leaf topology.]

Pure L3 is great for maximizing connectivity, but what about segregation of projects?

VXLAN: Virtual eXtensible LAN
- IETF Draft Standard: http://www.ietf.org/id/draft-mahalingam-dutt-dcops-vxlan-09.txt
- A type of network overlay technology that encapsulates L2 frames as UDP packets

VXLAN: Virtual eXtensible LAN
Packet fields, in order:
- Outer UDP/IP packet: Outer DMAC, Outer SMAC, Outer 802.1Q, Outer DIP, Outer SIP, Outer UDP, VXLAN Header
- Inner Ethernet frame: Inner DMAC, Inner SMAC, Inner 802.1Q, Original Payload

VXLAN: Virtual eXtensible LAN
- VNI (VXLAN Network Identifier)
  - 24-bit number (16M+ unique identifiers)
  - Part of the VXLAN header
  - Similar to a VLAN ID
  - Limits the broadcast domain
- VTEP (VXLAN Tunnel End Point)
  - Originator and/or terminator of the VXLAN tunnel for a specific VNI
  - Its address is the Outer DIP/Outer SIP
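To make the header layout concrete, here is a minimal Python sketch of packing and unpacking the 8-byte VXLAN header that carries the 24-bit VNI. The layout (one flags byte with the VNI-valid bit 0x08, 24 reserved bits, the 24-bit VNI, 8 reserved bits) follows the VXLAN draft referenced above; the function names are hypothetical and not part of the presentation.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # "I" flag: the VNI field carries a valid value
MAX_VNI = 2**24 - 1          # 24-bit identifier, so 16,777,216 possible values


def pack_vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header for the given VNI."""
    if not 0 <= vni <= MAX_VNI:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags (8 bits) followed by 24 reserved bits.
    # Word 2: VNI (24 bits) followed by 8 reserved bits.
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def unpack_vni(packet: bytes) -> int:
    """Read the VNI back out of the first 8 bytes of a VXLAN payload."""
    flags_word, vni_word = struct.unpack("!II", packet[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI-valid flag is not set")
    return vni_word >> 8


def encapsulate(vni: int, inner_frame: bytes) -> bytes:
    """Prefix an inner Ethernet frame with a VXLAN header.

    The result is what would travel as the payload of the outer UDP packet.
    """
    return pack_vxlan_header(vni) + inner_frame
```

The outer Ethernet, IP, and UDP headers are left out on purpose; on a real host the kernel adds them when the VTEP transmits.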

VXLAN: Virtual eXtensible LAN
Sending a packet
- The ARP table is checked for the IP/MAC/interface mapping
- On the source VTEP, the L2 FDB is checked to determine the IP of the destination VTEP for the destination MAC

VXLAN: Virtual eXtensible LAN
Sending a packet
- The packet is encapsulated for the destination VTEP with the configured VNI and sent to the destination
- The destination VTEP un-encapsulates the packet, and the inner packet is then processed by the receiver
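The send path described on these two slides can be sketched as two dictionary lookups followed by encapsulation. This is an illustrative Python model only: the class and field names are hypothetical, the tables are plain dictionaries rather than kernel state, and an unknown destination simply returns None where a real VTEP would fall back to BUM flooding.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class Encapsulated:
    outer_dst_ip: str   # IP of the destination VTEP (Outer DIP)
    vni: int            # VNI configured on the sending VTEP
    inner_dst_mac: str  # destination MAC of the inner frame
    payload: bytes      # original payload


class Vtep:
    def __init__(self, vni: int) -> None:
        self.vni = vni
        self.arp_table: Dict[str, str] = {}  # inner IP -> MAC
        self.fdb: Dict[str, str] = {}        # MAC -> remote VTEP IP

    def send(self, dst_ip: str, payload: bytes) -> Optional[Encapsulated]:
        # Step 1: the ARP table maps the destination IP to a MAC.
        mac = self.arp_table.get(dst_ip)
        if mac is None:
            return None  # a real stack would ARP here (a BUM packet)
        # Step 2: the L2 FDB maps that MAC to the destination VTEP's IP.
        remote_vtep = self.fdb.get(mac)
        if remote_vtep is None:
            return None  # unknown unicast, also handled by flooding
        # Step 3: encapsulate with the configured VNI.
        return Encapsulated(remote_vtep, self.vni, mac, payload)


def receive(packet: Encapsulated, local_vni: int) -> Optional[bytes]:
    """Destination VTEP: strip the encapsulation if the VNI matches."""
    if packet.vni != local_vni:
        return None
    return packet.payload
```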

How do VTEPs handle BUM (Broadcast, Unknown Unicast, Multicast)?

BUM
- All BUM type packets (e.g. ARP, DHCP, multicast) are flooded to all VTEPs associated with the same VNI.
- Flooding can be handled 2 ways:
  - Packets are sent to a multicast address that all VTEPs subscribe to
  - Packets are sent to a central service node that then floods the packets to all VTEPs found in its local DB for the matching VNI

VXLAN: Virtual eXtensible LAN
- Well supported in most modern Linux distros
- Linux kernel 3.10+
  - Linux uses UDP port 8472 instead of the IANA-issued 4789
- iproute2 3.7+
  - Configured using the ip link command
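As a rough illustration of the ip link configuration mentioned above, the Python sketch below only builds the command line for creating a VXLAN interface; it does not run it. The interface name, addresses, and helper name are made up for the example, and the `id`, `local`, `dev`, and `dstport` keywords are assumed to be available in the installed iproute2. Passing `dstport` explicitly is one way to choose between the Linux default port (8472) and the IANA-issued one (4789).

```python
import shlex
from typing import List

LINUX_DEFAULT_VXLAN_PORT = 8472  # default used by the Linux kernel
IANA_VXLAN_PORT = 4789           # port assigned by IANA


def vxlan_link_add_argv(name: str, vni: int, local_ip: str, dev: str,
                        dstport: int = IANA_VXLAN_PORT) -> List[str]:
    """Return the argv for an `ip link add ... type vxlan` command."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    return [
        "ip", "link", "add", name,
        "type", "vxlan",
        "id", str(vni),             # the VNI
        "local", local_ip,          # this VTEP's source address
        "dev", dev,                 # underlay interface
        "dstport", str(dstport),    # UDP destination port
    ]


if __name__ == "__main__":
    # Hypothetical values; print the command instead of executing it.
    print(shlex.join(vxlan_link_add_argv("vxlan42", 42, "192.0.2.10", "eth0")))
```

Returning an argv list keeps the sketch safe to pass to `subprocess.run` later without shell quoting concerns.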

How do we use this with OpenStack?

nova-network
- Clients needed L3+VXLAN for their existing nova-network based big data deployments (Hadoop).
- Neutron already supports VXLAN and should work with L3 as well (we didn't have time to test it).
- Full VXLAN support in nova-network
- Unicast VXLAN service node for BUM flooding

VXLAN Service Node
- Unicast service for BUM flooding
  - Eliminates the need for multicast
- Python based
- 2 components:
  - VXSND: VXLAN Service Node Daemon
  - VXRD: VXLAN Registration Daemon
- Will be open sourced in the near future.

VXSND
- Listens for VXLAN BUM packets from VTEPs
- Learns VTEP and VNI endpoints from BUM packets
- Relays BUM packets to all known VTEPs for the given VNI
- Supports registration/replication from other VXSND daemons or VXRD

VXRD
- Monitors local interfaces on hypervisors
- Sends a VTEP+VNI registration packet to the VXSND node for all local VTEPs.
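The learn-and-relay behaviour described for VXSND, together with the registration that VXRD sends, can be modelled in a few lines of Python. This is not the presenters' implementation, which had not been released at the time of the talk; the class and method names are hypothetical, and the network I/O is replaced by a returned list of (destination VTEP, packet) pairs.

```python
from collections import defaultdict
from typing import DefaultDict, List, Set, Tuple


class ServiceNode:
    """Toy model of a unicast BUM flooding service."""

    def __init__(self) -> None:
        # VNI -> set of VTEP IPs known to participate in that VNI
        self.vteps_by_vni: DefaultDict[int, Set[str]] = defaultdict(set)

    def register(self, vni: int, vtep_ip: str) -> None:
        """Explicit registration, as a registration daemon or peer might send."""
        self.vteps_by_vni[vni].add(vtep_ip)

    def handle_bum(self, vni: int, source_vtep_ip: str,
                   packet: bytes) -> List[Tuple[str, bytes]]:
        """Learn the sender, then relay to every other VTEP in the same VNI."""
        self.register(vni, source_vtep_ip)
        return [
            (vtep_ip, packet)
            for vtep_ip in sorted(self.vteps_by_vni[vni])
            if vtep_ip != source_vtep_ip  # do not echo back to the sender
        ]
```

Keeping the flood list per VNI is what limits a broadcast to the VTEPs of one project network instead of every VTEP in the fabric.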

Software Gateway
- We're still getting in/out of the VXLAN network using a software gateway
  - Lower performance
  - Extra servers
- All nova-net (or Neutron's l3agent) is doing is configuring VXLANs, bridges, and iptables NAT.
- What if we had a hardware switch that could accelerate these standard Linux network features with an ASIC?

Cumulus Linux
- Linux distribution for HW switches (Debian based)
- Hardware accelerated Linux kernel forwarding using ASICs
- Just like a Linux server with 32 40G NICs, but ~100x faster
- Standard Linux tools
  - ifconfig, ip route, iptables, brctl, dnsmasq, etc.

Demo

Next Steps (nova-network VXLAN)
- nova-network
  - Blueprint to add VXLAN support to nova-network (Juno) coming soon.
- VXSND/VXRD
  - Update VXRD to monitor netlink for VTEP add/delete
  - Improve concurrency and scalability of VXSND
  - Support for tiered replication (TOR, spine, etc.)
  - Goal is to open source the product before the Paris summit.

Next Steps (nova-network on Switches)
- Hack: the ASIC can't route in/out of a VXLAN tunnel
  - Next-gen ASICs can
  - Worked around by looping a cable between two ports
  - Packets take a second trip through the switch
- Hack: Cumulus Linux doesn't support NAT
  - I hacked in just enough NAT support for floating IPs =)
- Limitation: the ASIC can only NAT 512 IPs (a /23)
  - Next-gen ASICs will likely have larger tables

Q&A