Understanding Data Munging and Pre-Processing in R

Learn about data munging and pre-processing in R through the insights shared by Louise Francis at the 2021 CAMAR Meeting. Explore the importance of data tasks in actuarial work, time spent on data munging tasks, types of projects involved, data wrangling process, and the overview of data handling tools in R. Dive into CRISP-DM methodology and its six phases for effective data mining.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. Download presentation by click this link. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

E N D

Presentation Transcript

DATA MUNGING AND DATA PRE- PROCESSING IN R SPRING 2021 CAMAR MEETING Louise Francis, FCAS, CSPA, MAAA Francis Analytics and Actuarial Data Mining, Inc Louise_francis@msn.com

POLL: TIME SPENT ON DATA MUNGING TASKS How Much Time do you spend on data as a percentage of your time on your projects? 0 10% 11 - 25% 25 - 50% 50 - 75% > 75%

FOLLOW-UP : WHAT TYPE OF PROJECTS DO YOU DO? What type of work do you do? Traditional Actuarial Predictive Modeling Both Other

DATA COMPONENT OF ACTUARIAL WORK Data Tasks are a key part of Actuarial Work According to a survey about 1/3 of actuarial time is spent on data From Dirty Data on Both Sides of the Pond , CAS 2008 winter e- forum The percentage of time for data scientists is more like 80%

DATA WRANGLING FromWikipedia Data wrangling, sometimes referred to as data munging, is the process of transforming and mapping data from one "raw" data form into another format with the intent of making it more appropriate and valuable for a variety of downstream purposes such as analytics. The goal of data wrangling is to assure quality and useful data. Data analysts typically spend the majority of their time in the process of data wrangling compared to the actual analysis of the data. -https://en.wikipedia.org/wiki/Data_wrangling

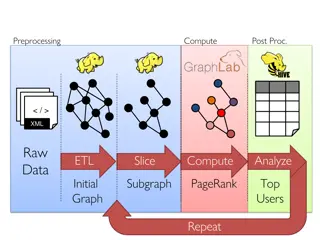

OVERVIEW Where data is in the predictive modeling cycle CRISP-DM What is tidy data? What R tools help tidy data? Introduce the tidyverse packages Introduce Other Packages useful for data wrangling

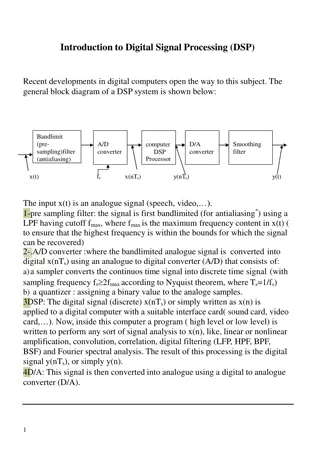

CRISP DM o Describes life cycle for data mining See: https://www.ibm.com/support/knowledgecenter/en/SS3RA7_15.0 .0/com.ibm.spss.crispdm.help/crisp_overview.htm oHas six phases with feedback loop

6 PHASES OF CRISP DM 1. Business understanding 2. Data Understanding 3. Data Preprocessing 4. Modeling 5. Evaluation 6. Deployment

CRISP DM o Describes life cycle for data mining See: https://www.ibm.com/support/knowledgecenter/en/SS3RA7_15.0 .0/com.ibm.spss.crispdm.help/crisp_overview.htm oHas six phases with feedback loop

6 PHASES OF CRISP DM 1. Business understanding 2. Data Understanding 3. Data Preprocessing 4. Modeling 5. Evaluation 6. Deployment

HADLEY WICKHAM Hadley Wickham: http://hadley.nz/ Well known R programmer of R libraries Chief scientist at Rstudio One of his first packages was reshape package for aggregating and organizing data

ANOTHER RESOURCE: R FOR ACTUARIES Recommended: Chapter on Data Wrangling in R for Actuaries by Brian Fannin

HADLEY WICKHAM Developed ggplot2 package Developed dplyr package Books include R for Data Science and ggplot2 Proposed tidy data framework Described in paper Tidy Data in Journal of Statistical Software

HADLEY WICKHAM Hadley Wickham: http://hadley.nz/ Well known R programmer of R libraries Chief scientist at Rstudio One of his first packages was reshape package for aggregating and organizing data

READING DATA READR LIBRARY read.csv read_csv Both read comma delimited files read_csv has some capabilities read.csv does not have Creates tibble Reads larger data sets Faster readr also has read.xls

EXAMPLE: OZONE DATA From EPA Download from https://aqs.epa.gov/aqsweb/airdata/download_files.html Over 5,000,000 records County Code Site Num Parameter Code 10 10 10 10 10 10 POC Latitude 1 30.49748 -87.8803 NAD83 1 30.49748 -87.8803 NAD83 1 30.49748 -87.8803 NAD83 1 30.49748 -87.8803 NAD83 1 30.49748 -87.8803 NAD83 1 30.49748 -87.8803 NAD83 Longitude Datum Parameter Name Ozone Ozone Ozone Ozone Ozone Ozone Date LocalTime LocalDate GMTTime GMTSample Measurement 3/1/2016 15:00 3/1/2016 3/1/2016 16:00 3/1/2016 3/1/2016 17:00 3/1/2016 3/1/2016 18:00 3/2/2016 3/1/2016 19:00 3/2/2016 3/1/2016 20:00 3/2/2016 3 3 3 3 3 3 44201 44201 44201 44201 44201 44201 21:00 22:00 23:00 0:00 1:00 2:00 0.041 0.041 0.042 0.041 0.038 0.038

CODE TO RUN BOTH AND TIME THEM file= "D:/CAS/CAMAR/hourly_44201_2016.csv" # read in the file with read.csv # time the procedure function read.csv read_csv total time start.time<- proc.time() 44.62 23.66 Ozone.data=read.csv(file) read.time=proc.time()-start.time read.time # print time # use read_csv start.time2<- proc.time() Ozone.data=read_csv(file) read.time2=proc.time()-start.time2 read.time2

TIDY DATA PRINCIPLES Provides principles on how to organize data To make data cleaning easier Which led to tools to make the process easier and more efficient

MULTIPLE WAYS TO ORGANIZE DATA Two views of data Database form Spread out form columns names could represent data Both can be tidy depending on the application

TRIANGLE DATA The spread out form Cumulative Paid Losses Accident Year 1974 1975 1976 1977 1978 1979 1980 1981 1982 1983 1984 1985 1986 1987 1988 1989 1990 1991 Months of Development 108 $18,541 $18,937 22,529 22,772 25,819 26,049 29,656 29,839 31,240 31,598 34,185 34,290 35,482 35,607 34,985 35,122 34,227 34,317 37,571 37,681 37,099 12 24 $1,975 2,809 2,744 3,877 4,518 5,763 8,066 9,378 11,256 10,628 11,538 10,939 13,053 18,086 239,383 34,171 33,392 36 $4,587 5,686 7,281 9,612 12,067 16,372 17,518 18,034 20,624 21,015 21,549 21,357 27,869 38,099 52,054 59,232 48 $7,375 9,386 13,287 16,962 21,218 24,105 26,091 26,652 27,857 29,014 29,167 28,488 38,560 51,953 66,203 60 $10,661 14,884 19,773 23,764 27,194 29,091 31,807 31,253 31,360 33,788 34,440 32,982 44,461 58,029 72 $15,232 20,654 23,888 26,712 29,617 32,531 33,883 33,376 33,331 36,329 36,528 35,330 45,988 84 $17,888 22,017 25,174 28,393 30,854 33,878 34,820 34,287 34,061 37,446 36,950 36,059 96 120 $19,130 22,821 26,180 29,944 31,889 34,420 35,937 35,161 34,378 132 $19,189 23,042 26,268 29,997 32,002 34,479 35,957 35,172 144 $19,209 23,060 26,364 29,999 31,947 34,498 35,962 156 $19,234 23,127 26,371 29,999 31,965 34,524 168 $19,234 23,127 26,379 30,049 31,986 180 $19,246 23,127 26,397 30,049 192 $19,246 23,127 26,397 204 $19,246 23,159 216 $19,246 $267 310 370 577 509 630 1,078 1,646 1,754 1,997 2,164 1,922 1,962 2,329 3,343 3,847 6,090 5,451 https://www.casact.org/publications-research/research/research- resources/loss-reserving-data-pulled-naic-schedule-p

LONG DATABASE FORMAT All paid values in one column, one variable Year Development Age (Years) Cumulative paid 1974 1975 1976 1977 1978 1979 1980 1981 1982 1983 1984 1985 1986 1 1 1 1 1 1 1 1 1 1 1 1 1 267 310 370 577 509 630 1,078 1,646 1,754 1,997 2,164 1,922 1,962

TIDYVERSE Set of related libraries that Read data efficiently (readr) Tidy the data through reorganization (tidyr) Perform database management functions (diplyr) Perform string functions stringr() Perform EDA (ggplot2) Use code: >library(tidyverse) Make sure you have installed tidyverse

CLAIM DATA USED FOR ILLUSTRATIONS WC Claim dataset From Crowd Analytics competition Average Weekly Wage Claimant Gender Code Claimant Marital Status Body Part Code Cause Code Claimant Age Claimant Gender Claimant Hire Date Obs_ID Dependent Body Part Cause Struck or Injured By Strain or Injury By Strain or Injury By Obs_1 98679 500 Pelvis Low Back Area Low Back Area Multiple Body Parts Other Facial Soft Tissue 46 1700 21 Female F 4/3/2001 Obs_2 55727 1,037.00 42 1500 Male M 5/15/2001 Obs_3 185833 929 42 1500 63 Male M 5/15/2001 Married Strain or Injury By Obs_4 98615 1,226.00 90 1500 49 Male M Miscellaneo us Causes Obs_5 51396 18 1900 51 Male M

TIDYVERSE Set of related libraries that Read data efficiently (readr) Create tibble data (tibble) Tidy the data through reorganization (tidyr) Perform database management functions (diplyr) Perform string functions stringr() Perform EDA (ggplot2) Use code: >library(tidyverse) Make sure you have installed tidyverse

MAKE.NAMES oThe make.names function can be used to eliminate the spaces oThis makes the variables easier to work with in R myfile="D:/RPM Data/CompClaimsTrain.csv wcdata<-read_csv(myfile) names(wcdata) names(wcdata)=make.names(names(wcdata),unique=TRUE)

BASE R - BASIC DESCRIPTIVE STATISTICS: SUMMARY() oUse summary function osummary(wcdata) Obs_ID Dependent Average.Weekly.Wage Body.Part Length:15407 Min. : 0 Min. : 2 Length:15407 Class :character 1st Qu.: 152 1st Qu.: 500 Class :character Mode :character Median : 446 Median :1000 Mode :character Mean : 10285 Mean :1148 3rd Qu.: 1704 3rd Qu.:1529 Max. :3774290 Max. :9999 NA's :9535

GET METADATA: STR() > str(wcdata) Classes spec_tbl_df , tbl_df , tbl and 'data.frame': 8 variables: $ Obs_ID : chr "Obs_1" "Obs_2" "Obs_3" "Obs_4" ... $ Dependent : num 98679 55727 185833 98615 51396 ... $ Average.Weekly.Wage : num 500 1037 929 1226 NA ... $ Body.Part : chr "Pelvis" "Low Back Area" "Low Back A rea" "Multiple Body Parts" ... $ Body.Part.Code : num 46 42 42 90 18 30 42 42 42 54 ... $ Cause : chr "Struck or Injured By" "Strain or In jury By" "Strain or Injury By" "Strain or Injury By" ... $ Cause.Code : num 1700 1500 1500 1500 1900 1500 1500 1 500 1500 1500 ... $ Claimant.Age : num 21 NA 63 49 51 55 49 36 45 45 ... $ Claimant.Atty.Firm.Name : chr NA NA "TROBINSON & CHUR ATTORNEYS AT LAW" "IBARRY STEVENS;;M;;" ... $ Claimant.Gender : chr "Female" "Male" "Male" "Male" ... $ Claimant.Gender.Code : chr "F" "M" "M" "M" ... $ Claimant.Hire.Date : chr "4/3/2001" "5/15/2001" "5/15/2001" N A ... $ Claimant.Marital.Status : chr NA NA "Married" NA ... $ Claimant.Marital.Status.Code : chr NA NA "M" NA ... $ Claimant.State : chr "California" "California" "Hawaii" " Idaho" ... $ Claimant.State.Code : chr "CA" "CA" "HI" "ID" ... $ Department.Code : logi NA NA NA NA NA NA ... $ Detail.Cause : chr "Struck by Falling/Flying Object" "S train/Injury by Lifting" "Strain/Injury by Carrying" "Strain/Injury by Repeti tive Motion" ... $ Detail.Cause.Code : num 75 56 55 97 90 97 60 97 97 97 ... $ Domestic.vs..Foreign. : chr "Domestic" "Domestic" "Domestic" "Do mestic" ... $ Domestic.vs..Foreign..Code : chr "D" "D" "D" "D" ... $ Dt.Reported.to.Carrier.TPA : Date, format: "2001-04-17" "2001-05-25" . .. $ Dt.Reported.to.Employer : Date, format: "2001-04-13" "2001- 05-24" . 15407 obs. of 4

DPLYR USED FOR DATA MANAGEMENT oOptimized version of plyr package oUse to subset, summarize, filter and join data oR has functions such as subset and operators such as [ ] that can perform data management functions but they can be difficult to use oWe will use dplyr on Schedule P data downloaded from CAS web site oFirst read in the data oNote that read_csv provides some metadata

DPLYR GRAMMAR oselect() selects variables/columns from data frame ofilter() subsets rows of data frame based on logical conditions oarrange() reorders rows of data frame orename() renames variables in data frame omutate() performs variable transformations and adds variables osummarize() summarizes/aggregates data from a data frame o%>% pipe operators used to link multiple verb actions together

DPLYR FUNCTIONS oFirst argument is a data frame oSubsequent arguments describe what is to be done oYou can refer to columns without using dollar operator oReturn result is a new data frame oThe dataframe needs to be tidy

WE DO NOT NEED ALL THE COLUMNS [1] "Obs_ID" "Dependent" [3] "Average Weekly Wage" "Body Part" [5] "Body Part Code" "Cause" [7] "Cause Code" "Claimant Age" [9] "Claimant Atty Firm Name" "Claimant Gender" [11] "Claimant Gender Code" "Claimant Hire Date" [13] "Claimant Marital Status" "Claimant Marital Status Code" [15] "Claimant State" "Claimant State Code" [17] "Department Code" "Detail Cause" [19] "Detail Cause Code" "Domestic vs. Foreign?" [21] "Domestic vs. Foreign? Code" "Dt Reported to Carrier/TPA" [23] "Dt Reported to Employer" "Employment Status" [25] "Employment Status Code" "Date of Injury/Illness" [27] "Handling Office Name" "How Injury Occurred" [29] "Injury/Illness City" "Injury/Illness Postal" [31] "Injury/Illness State" "Injury/Illness State Code" [33] "Jurisdiction" "Jurisdiction Code" [35] "Lost Time or Medical Only?" "Lost Time or Medical Only? Code" [37] "Nature of Injury/Illness" "Nature of Injury/Illness Code" [39] "Number of Dependents" "OSHA Injury Type" [41] "OSHA Injury Type Code" "Severity Index Code" [43] "Severity Index Code Code" "Time of Injury/Illness" [45] "Type of Loss" "Type of Loss Code" [47] "Policy Year" "Reforms_dummy"

SELECT() oWe do not need all the columns oOnly keep those relevant to analysis oUse select() to extract only needed columns oLet s take the first 10 columns wcdata2<-select(wcdata, 1:10) names(wcdata2) > names(wcdata2) [1] "Obs_ID" "Dependent" [3] "Average.Weekly.Wage" "Body.Part" [5] "Body.Part.Code" "Cause" [7] "Cause.Code" "Claimant.Age" [9] "Claimant.Atty.Firm.Name" "Claimant.Gender"

SELECT BY ELIMINATION oLeave out variables we don t want by using a - within select function oIn this example, we eliminate variables that are duplicates of other variables, such as Jurisdiction and Jurisdiction.Code oWe will keep the Code variable wcdata2<-select(wcdata, -Body.Part,-Cause, -Claimant.Hire.Date,-Detail.Cause,-Injury.Illness.State, -Jurisdiction, -Nature.of.Injury.Illness, -OSHA.Injury.Type,-Type.of.Loss)

SELECT() Can select columns based on patterns in name starts_with ends_with contains matches

FILTER() oSelects rows based on filter applied to values in rows oSimilar to subset but faster oCan be used to eliminate records with clearly erroneous values oSuch as selecting either lost time or medical only claims for modeling wcdata3<-filter(wcdata2, Lost.Time.or.Medical.Only..Code=="MO") oCan have multiple conditions (using &, ||)

ARRANGE() Use arrange() for sorting wcdata2<-arrange(subset,desc(Dependent)) wcdata4<-arrange(wcdata3, desc(Dependent)) wcdata4[1:5,1:4] It is easier to use than sort() Use desc(varname) to sort descending Can use .by_group to sort by a group

SORTED DATA wcdata4[1:5,1:4] # A tibble: 5 x 4 Obs_ID Dependent Average.Weekly.Wage Body.Part.Code <chr> <dbl> <dbl> <dbl> 1 Obs_2673 3774290 403 90 2 Obs_14692 1159631 3138 43 3 Obs_14673 1086522 939 51 4 Obs_13477 1083067 2303 12 5 Obs_578 1076883 1050 41

MUTATE() oUsed to perform variable transformations oMost Predictive Modeling projects need a variety of transformations: oFor instance, we may want to log highly skewed variables

MUTATE DEPENDENT VARIABLE wcdata4<-mutate(wcdata4,logDependent=log(Dependent+1)) qplot(logDependent,data=wcdata4)

OTHER TRANSFORMATIONS oIndicator variables to Identify missing's in variables oCompute time lag variables (Report lag to employer, report lag to carrier)

GROUP_BY(), SUMMARIZE() oCan be used to group data by a variable or variables and then summarize oLets compute some state level statistics using claimant state: oGet size in database by counting the number of records for each state oUse result to reduce cardinality by grouping all low count states into one small category byState=group_by(wcdata4,Claimant.State) StateSize=summarize(byState,count=n()) StateSize=mutate(StateSize,rankState=min_rank(desc(count))) head(StateSize) head(StateSize) # A tibble: 6 x 3 Claimant.State count rankState <chr> <int> <int> 1 Alabama 63 24 2 Arizona 65 22 3 California 8912 1 4 Canada - British Columbia 4 44 5 Colorado 65 22 6 Connecticut 71 21

STRING FUNCTIONS Nearly all languages used by actuaries contain string functions Some simple string functions can help with data preprocessing in actuarial analyses Example Identification of multiple occurrence claims Claim_Number 112375-119959-WC-01 112375-119959-WC-02 112375-128321-WC-01 112375-128321-WC-02 112375-128321-WC-03 112375-128321-WC-04 112375-050140-WC-01 112375-050182-WC-01 112375-050218-WC-01

SIMPLE STRING FUNCTIONS LET US: Tabulate how many claims there are for each occurrence Compute the occurrence number, so data can be aggregated to the occurrence level Claim_Number 112375-119959-WC-01 112375-119959-WC-02 112375-128321-WC-01 112375-128321-WC-02 112375-128321-WC-03 112375-128321-WC-04 112375-050140-WC-01 112375-050182-WC-01 Claimant Number Claim_Number 112375-119959-WC-01 112375-119959-WC 112375-119959-WC-02 112375-119959-WC 112375-128321-WC-01 112375-128321-WC 112375-128321-WC-02 112375-128321-WC 112375-128321-WC-03 112375-128321-WC 112375-128321-WC-04 112375-128321-WC 112375-050140-WC-01 112375-050140-WC Occurrence Number =right(A2,2) =left(A2,15) 01 02 01 02 03 04 01 01

REGULAR EXPRESSIONS There are various shorthand characters to denote types of strings including: 0-9 a digit \d for digit \b for border (blank or punctuation before or after a word a-z lowercase letters and A-Z uppercase letters. Also :alpha: for letters \w for an alphanumeric character \n end of line ^ at beginning denotes beginning of string, $ at the end denotes the end of a string + one or more of the previous pattern * zero or more of the pattern

STRINGR LIBRARY stringr is an R library for processing strings Functions include str_locate locates the position of a pattern str_detect used to detect a pattern str_extract extracts string matching a pattern str_count counts number of matches to a pattern str_sub subsets a string using start and end position str_subset subsets a string using a regular expression pattern str_replace replaces a string with another string str_c combines strings

STRINGR LIBRARY stringr is an R library for processing strings Functions include str_locate locates the position of a pattern str_detect used to detect a pattern str_extract extracts string matching a pattern str_count counts number of matches to a pattern str_sub subsets a string using start and end position str_subset subsets a string using a regular expression pattern str_replace replaces a string with another string str_c combines strings

USE REGULAR EXPRESSION TO GET CLAIMANT NUMBER At end of string pattern="([\\d]{2})$ Escape symbol 2 digits Use str_extract to extract last 2 digits ClaimantNo=str_extract(ClaimData$Claim_Number,pattern) Must be character, not factor head(ClaimantNo) [1] "01" "02" "01" "02" "03" "04"

USE REGULAR EXPRESSION TO GET LOB pattern="([:alpha:]{2}) or pattern="([A-Z]{2})" 2 alphas (the first 2) LOB=str_extract(Claim_Number,pattern) head(LOB) [1] "WC" "WC" "WC" "WC" "WC" "WC"

SUBSET CLAIM NUMBER FOR OCCURRENCE NUMBER OccurrenceNo=str_sub(Claim_Number,1,16) Start and end position [1] "112375-119959-WC" "112375-119959-WC" [3] "112375-128321-WC" "112375-128321-WC" [5] "112375-128321-WC" "112375-128321-WC"