Turing Machine Variants and Equivalence Theorems Summary

Explore different variants of Turing machines, such as stay-put TMs and multi-tape TMs, along with key results like the equivalence theorems. Understand the idea behind simulating multi-tape TMs with single-tape TMs and how different models are related. Dive into the proofs and implications of these variants in the context of computational theory.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

Turing Machine Variants Chuck Cusack Based (very heavily) on notes by Edward Okie (Radford University) and Introduction to the Theory of Computation , 3rdedition, Michael Sipser. (Original version at http://www.radford.edu/~nokie/classes/420/)

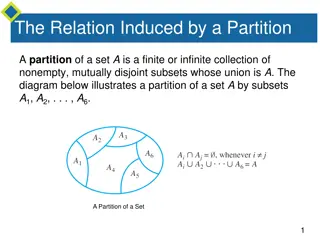

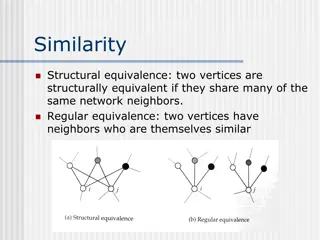

Equivalence of models Two variants of a TM are equivalent if each one can be simulated on the other. Recall that a language is Turing-recognizable if some single-tape Turing machine recognizes it. Thus, if a TM variant is equivalent to a single-tape TM, then a language is Turing-recognizable if and only if some Turing machine of that type recognizes it.

Main Results Theorem 3.13: Every multitape Turing machine has an equivalent single-tape Turing machine. Theorem 3.16: Every nondeterministic Turing machine has an equivalent deterministic Turing machine. Theorem 3.21: A language is Turing-Recognizable iff some enumerator enumerates it. (We won t focus on this one)

Stay-Put TMs One of the simplest variations is a TM that allows the head to stay put as well as more left or right Notice that a stay-put TM can simulate a regular TM In fact, you can view a regular TM as a stay-put TM that just happens to not use the stay-put feature You can simulate a stay-put TM on a TM by replacing every transition that stays with two transitions: Write the symbol as normal and go right Read and write the same symbol and go left Thus, these two variants are equivalent

Multitape Turing Machine Multiple tapes Transition function Reads from all heads Writes to all heads Each head goes left, right or stays We can simulate it with a single-tape TM as illustrated to the right. Let s look a little closer. Image from Sipser s book

Proof Idea for Theorem 3.13 Theorem 3.13: Every multitape Turing machine has an equivalent single-tape Turing machine. Simulate an n-tape machine using a one tape machine: Store information from all tapes on the single tape. Use new symbol (e.g. #) to separate information from each tape Use a dotted symbol to represent the location of each tape head When the tape head moves to a location, replace the symbol there (e.g. symbol x) with the equivalent dotted symbol (i.e. with dotted x) When the tape head moves from a location, write a regular (i.e. non- dotted) symbol at that location Requires adding dotted symbols to tape alphabet Develop new transition function: A step of the n-tape machine will represent steps on all n of the parts of the single tape A right movement onto the # results in shifting all of the symbols that are to the right of the # one symbol to the right

Equivalence of Multi- and single-tape TMs Corollary 3.15: A language is Turing-recognizable if and only if some multitape Turing machine recognizes it. Proof: If L is Turing-recognizable, then some single-tape TM recognizes it. A single-tape TM is a multitape TM (with just one tape), so some multitape Turing machine recognizes it. If some multitape Turing machine recognizes L, Theorem 3.13 implies that some single-tape Turing machine recognizes L. Therefore L is Turing recognizable (by definition).

Nondeterministic Turing Machine Transition function: : Q x P(Q x x {L,R}) As expected, multiple (0r no) transitions allowed Machine N accepts w if some branch accepts w Theorem 3.16: Every nondeterministic Turing machine has an equivalent deterministic Turing machine. Let s sketch a proof

Theorem 3.16: How to Prove? How did we prove DFA and NFA were equivalent? Defined an algorithm for creating a DFA that is equivalent to an given NFA The states of the DFA were power sets of the NFA states The DFA accepted the same strings as the NFA How to prove that a Nondeterministic TM has an equivalent deterministic TM? Create a deterministic TM that executes the possible computations on the nondeterministic TM Basic Approach: Specify possible nondeterministic choices made by the NTM Continue trying more and more choices If the NTM accepts, the DTM will find the accepting computation If the NTM rejects, the DTM continues forever, looking for an accepting computation If the NTM loops, the DTM will continuing trying computations forever

Representing Nondeterministic Choices Let N be our NTM Let b be the largest number of choices from N s transition function. Let b= {1, 2, ..., b} Represent a sequence of n choices with a string from bof length n Example: 534 represents taking choice 5, then choice 3, then choice 4

Simulating a Nondeterministic Machine Simulate N with a 3-tape deterministic machine D as follows: Tape 1 of D is initialized with a copy of the input tape for N and never changes after initialization. Tape 2 of D has a copy of what N's tape would be at that point of the computation that is currently specified by tape 3 Tape 3 of D successively contains a sequence of numbers that represent every possible path through N. Each path represents (part of) a computation in N Each number represents a choice at a nondeterministic branch in the computation Tape 3 successively contains the numbers in lexicographic order (i.e. in alphabetic order, shortest first) Example: if b=4 (i.e. 4 is the maximum number of choices), then tape 3 would hold 1, 2, 3, 4, 11, 12, 13, 14, 21, 22, 23, 24, 31, 32, 33, 34, 41, 42, 43, 44, 111, ..., 114, 121, 122, 123, 124, ..., 443, 444, 1111, ...

Simulating N on D Initialize Tape 1. Initialize Tape 3 with the number for the first possible computation N Loop Copy Tape 1 to Tape 2 Execute N using Tape 2 as input and following the computation choices specified in Tape 3 If sequences of choices represent an accepting computation for N (i.e. N enters an Accept state), then D halts and accepts Else (i.e. a number represents an infeasible or rejecting computation) continue with the next step Initialize Tape 3 with the string that represents the next possible sequence of choices of N Repeat loop

N and D are Equivalent N and D accept the same language That is, N accepts L iff D accepts L because If N accepts w, then D will accept w (eventually) If N rejects or loops on w, then D will loop (as it continues trying more and more choices of the transition function) Can we avoid looping in D? If N always accepts or rejects every string, then D can be modified to accept or reject every string If N loops on some strings, then D will loop on the strings on which N rejects or loops

Simulation order Why is the order we simulate the NTM important? Here we are essentially doing a breadth-first search of the tree of possible choices the NTM can make What if we did a depth-first search instead?