Portable Inter-workgroup Barrier Synchronisation for GPUs

This presentation describes a portable inter-workgroup barrier for GPUs. It reviews the barriers that existing frameworks provide as primitives, the GPU programming model (threads, workgroups, local and global memory), and the two challenges an inter-workgroup barrier faces: scheduling and memory consistency. It then covers occupancy-bound execution, a protocol for discovering occupant workgroups, and experimental results on chips from Nvidia, Intel, AMD and ARM.

Presentation Transcript

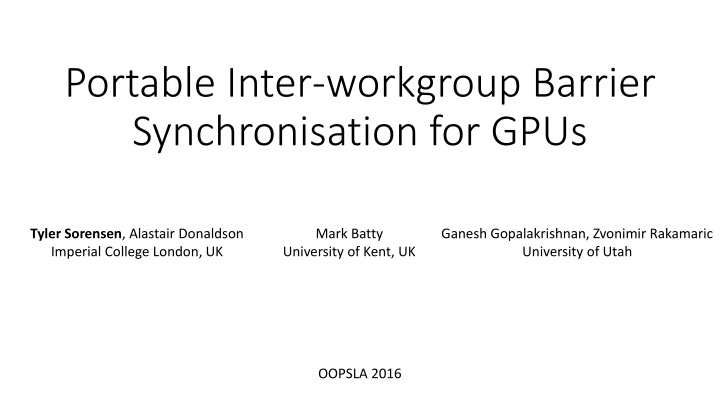

Portable Inter-workgroup Barrier Synchronisation for GPUs. Tyler Sorensen and Alastair Donaldson (Imperial College London, UK); Mark Batty (University of Kent, UK); Ganesh Gopalakrishnan and Zvonimir Rakamaric (University of Utah). OOPSLA 2016.

Barriers: provided as primitives in MPI, OpenMP, Pthreads and on GPUs (intra-workgroup). Diagram: threads T0, T1, T2 and T3 meeting at a barrier.

GPU programming: threads access global memory.

GPU programming: threads are grouped into workgroups (Workgroup 0, Workgroup 1, ..., Workgroup n). Each workgroup has its own local memory (local memory for WG0, WG1, ..., WGn), and all workgroups share global memory.

Inter-workgroup barrier: not provided as a primitive. Building blocks provided: inter-workgroup shared memory.

Experimental results

| Chip | Vendor | Compute Units | OpenCL Version | Type |
|---|---|---|---|---|
| GTX 980 | Nvidia | 16 | 1.1 | Discrete |
| Quadro K500 | Nvidia | 12 | 1.1 | Discrete |
| Iris 6100 | Intel | 47 | 2.0 | Integrated |
| HD 5500 | Intel | 24 | 2.0 | Integrated |
| Radeon R9 | AMD | 28 | 2.0 | Discrete |
| Radeon R7 | AMD | 8 | 2.0 | Integrated |
| T628-4 | ARM | 4 | 1.2 | Integrated |
| T628-2 | ARM | 2 | 1.2 | Integrated |

Challenges: scheduling and memory consistency.

Occupancy bound execution: a program with 5 workgroups runs on a GPU with 3 compute units (CUs). All workgroups start in the workgroup queue. Three are scheduled onto the CUs while the other two wait. When a workgroup finishes, it frees its CU and a queued workgroup takes its place. This repeats until every workgroup has moved to the finished workgroups. Finished!

Occupancy bound execution: the same program with 5 workgroups on a GPU with 3 compute units. Three workgroups occupy the CUs and two remain in the workgroup queue. The occupant workgroups cannot synchronise with workgroups in the queue, so a barrier gives deadlock! A barrier is possible if we know the occupancy.
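The deadlock described above can be reproduced with a small scheduler model. This is a minimal sketch in Python, not GPU code: the function name `run_with_barrier` and the assumption that every workgroup reaches exactly one global barrier before finishing are illustrative choices, not taken from the slides.

```python
from collections import deque


def run_with_barrier(num_workgroups, occupancy):
    """Model occupancy-bound execution of a kernel whose workgroups all
    wait at one global barrier before finishing.

    Returns "finished" or "deadlock".
    """
    queue = deque(range(num_workgroups))  # workgroups not yet scheduled
    resident = set()                      # workgroups currently holding a slot
    arrived = set()                       # workgroups waiting at the barrier

    while queue or resident:
        # Fill free slots from the queue. A workgroup keeps its slot
        # until it finishes (no pre-emption in this model).
        while queue and len(resident) < occupancy:
            resident.add(queue.popleft())

        # Every resident workgroup runs until it reaches the barrier.
        arrived |= resident

        if len(arrived) == num_workgroups:
            resident.clear()              # barrier opens, everyone finishes
        else:
            # Residents spin forever and never free a slot, so the
            # queued workgroups can never arrive.
            return "deadlock"
    return "finished"
```

With 5 workgroups and room for 3, the model reports a deadlock; launching only as many workgroups as can be resident at once lets the barrier complete.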

Occupancy bound execution: launch as many workgroups as compute units? Program with 5 workgroups, GPU with 3 compute units. Depending on resources, multiple workgroups can execute on one CU, so the number of compute units alone does not determine the occupancy.

Recall of occupancy discovery

| Chip | Compute Units | Occupancy Bound |
|---|---|---|
| GTX 980 | 16 | 32 |
| Quadro K500 | 12 | 12 |
| Iris 6100 | 47 | 6 |
| HD 5500 | 24 | 3 |
| Radeon R9 | 28 | 48 |
| Radeon R7 | 8 | 16 |
| T628-4 | 4 | 4 |
| T628-2 | 2 | 2 |
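The figures above show why the compute-unit count is a poor stand-in for the occupancy bound. The sketch below, in Python, compares the two columns per chip. The numbers are copied from the table; the labels returned by the hypothetical `classify` helper are an illustrative reading of what would happen if exactly one workgroup per compute unit were launched with a global barrier.

```python
# (compute units, occupancy bound) per chip, copied from the table above.
CHIPS = {
    "GTX 980": (16, 32),
    "Quadro K500": (12, 12),
    "Iris 6100": (47, 6),
    "HD 5500": (24, 3),
    "Radeon R9": (28, 48),
    "Radeon R7": (8, 16),
    "T628-4": (4, 4),
    "T628-2": (2, 2),
}


def classify(compute_units, occupancy_bound):
    """Outcome of launching exactly `compute_units` workgroups with a barrier."""
    if compute_units > occupancy_bound:
        return "deadlock risk"   # more workgroups than can be co-resident
    if compute_units < occupancy_bound:
        return "under-utilised"  # safe, but leaves occupancy unused
    return "exact"


def summarise(chips=CHIPS):
    """Map each chip name to its classification."""
    return {name: classify(cu, bound) for name, (cu, bound) in chips.items()}
```

On these numbers the two Intel chips fall in the "deadlock risk" group, the GTX 980 and both Radeon chips in "under-utilised", and the rest are exact matches.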

Our approach (scheduling): the program's workgroups (w0 to wa) start in the workgroup queue of a GPU with 3 compute units. Some workgroups are scheduled onto the CUs, several per CU, while the rest stay in the queue. Dynamically estimate occupancy.

Finding occupant workgroups: executed by 1 thread per workgroup, in two phases (polling, then closing), using three global variables: a mutex m, a Boolean poll flag poll_open, and an integer counter count.

Polling phase:

    lock(m);
    if (poll_open) {
      count++;
      unlock(m);
    } else {
      unlock(m);
      return false;
    }

Closing phase:

    lock(m);
    if (poll_open) {
      poll_open = false;
    }
    unlock(m);
    return true;
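The two phases can be tried out on a CPU with ordinary threads. The following is a minimal sketch in Python that keeps the slide's variable names (`m`, `poll_open`, `count`). The `Discovery` class, `discover` and `run_discovery` are hypothetical names, and CPU threads are all eventually scheduled, so this only illustrates the protocol's logic, not GPU scheduling behaviour.

```python
import threading


class Discovery:
    """Shared state: the three global variables named on the slide."""

    def __init__(self):
        self.m = threading.Lock()  # mutex m
        self.poll_open = True      # poll flag
        self.count = 0             # workgroups that joined while the poll was open


def discover(state):
    """Run by one representative thread per workgroup.

    Returns True if this workgroup takes part in the barrier.
    """
    # Polling phase: join only while the poll is still open.
    with state.m:
        if not state.poll_open:
            return False
        state.count += 1

    # Closing phase: the first participant to get here closes the poll.
    with state.m:
        if state.poll_open:
            state.poll_open = False
    return True


def run_discovery(num_workgroups):
    """Start one thread per simulated workgroup and collect the outcome."""
    state = Discovery()
    results = [None] * num_workgroups

    def worker(i):
        results[i] = discover(state)

    threads = [threading.Thread(target=worker, args=(i,))
               for i in range(num_workgroups)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return state.count, results
```

Whatever the interleaving, the number of workgroups that return true equals `count`, and at least one workgroup always participates. How many join before the poll closes depends on the order in which the lock is granted.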

Recall of occupancy discovery

| Chip | Compute Units | Occupancy Bound | Spin Lock | Ticket Lock |
|---|---|---|---|---|
| GTX 980 | 16 | 32 | 3.1 | 32.0 |
| Quadro K500 | 12 | 12 | 2.3 | 12.0 |
| Iris 6100 | 47 | 6 | 3.5 | 6.0 |
| HD 5500 | 24 | 3 | 2.7 | 3.0 |
| Radeon R9 | 28 | 48 | 7.7 | 48.0 |
| Radeon R7 | 8 | 16 | 4.6 | 16.0 |
| T628-4 | 4 | 4 | 3.1 | 4.0 |
| T628-2 | 2 | 2 | 2.0 | 2.0 |
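The table suggests that the choice of mutex matters: with the ticket lock, discovery reaches the occupancy bound on every chip. A ticket lock serves waiters in arrival order, which is one plausible reason for the difference. The sketch below shows the general ticket-lock technique in Python. It is not the authors' implementation: the `TicketLock` and `hammer` names are hypothetical, and a small internal `threading.Lock` stands in for the atomic fetch-and-add that a GPU version would use.

```python
import threading
import time


class TicketLock:
    """FIFO lock: waiters are served in the order they took a ticket."""

    def __init__(self):
        self._guard = threading.Lock()  # stands in for an atomic fetch-and-add
        self._next_ticket = 0
        self.now_serving = 0

    def acquire(self):
        with self._guard:
            ticket = self._next_ticket
            self._next_ticket += 1
        while self.now_serving != ticket:  # spin until our number comes up
            time.sleep(0)                  # yield to other Python threads
        return ticket

    def release(self):
        self.now_serving += 1              # only the current holder writes this


def hammer(lock, num_threads, iterations):
    """Increment a shared counter under the lock from several threads."""
    total = [0]

    def worker():
        for _ in range(iterations):
            lock.acquire()
            total[0] += 1
            lock.release()

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total[0]
```

Because each waiter spins on its own ticket number, a thread that releases the lock and immediately asks for it again goes to the back of the line instead of overtaking threads that are already waiting.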

Challenges: scheduling and memory consistency.

Our approach (memory consistency): threads T0, T1 and T2 meet at a barrier. A memory access m0 before the barrier must happen before (HB) an access m1 after the barrier. The barrier implementation creates an HB web between the threads, using OpenCL 2.0 atomic operations.

Barrier implementation: start with an implementation from Xiao and Feng (2010), written in CUDA (ported to OpenCL), with no formal memory consistency properties.

Master Workgroup, Slave Workgroup 0 and Slave Workgroup 1, each with threads T0 and T1, synchronise through the flags x0 and x1 and intra-workgroup barriers.

Master Workgroup:

    spin(x0 != 1)    spin(x1 != 1)
    barrier
    x0 = 0           x1 = 0

Slave Workgroup 0:

    barrier
    x0 = 1
    spin(x0 != 0)
    barrier

Slave Workgroup 1:

    barrier
    x1 = 1
    spin(x1 != 0)
    barrier
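The flag handshake above can be exercised on a CPU as a rough illustration. This is a minimal sketch in Python under several simplifying assumptions: each workgroup is reduced to a single thread, so the intra-workgroup barriers are omitted; the master checks the flags in a loop rather than with one thread per flag; and Python's interpreter hides the memory-ordering concerns that the OpenCL 2.0 atomics address. All function names are hypothetical.

```python
import threading
import time


def spin_until(predicate):
    """Busy-wait, yielding to other Python threads, until predicate() holds."""
    while not predicate():
        time.sleep(0)


def slave_barrier(flags, i):
    flags[i] = 1                          # x_i = 1: announce arrival
    spin_until(lambda: flags[i] == 0)     # wait for the master to release us


def master_barrier(flags):
    for i in range(len(flags)):
        spin_until(lambda: flags[i] == 1)  # wait for every slave to arrive
    for i in range(len(flags)):
        flags[i] = 0                       # x_i = 0: release every slave


def run_demo(num_slaves=2):
    """Each participant writes one value, crosses the barrier, then sums all."""
    flags = [0] * num_slaves
    data = [0] * (num_slaves + 1)
    seen = [None] * (num_slaves + 1)

    def slave(i):
        data[i] = i + 1                   # write before the barrier
        slave_barrier(flags, i)
        seen[i] = sum(data)               # read after the barrier

    def master():
        data[num_slaves] = num_slaves + 1
        master_barrier(flags)
        seen[num_slaves] = sum(data)

    threads = [threading.Thread(target=slave, args=(i,))
               for i in range(num_slaves)]
    threads.append(threading.Thread(target=master))
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return seen
```

Every participant sees the same total after the barrier, because no slave is released until the master has observed all arrival flags and written its own value.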