Performance Characterization of a 10-Gigabit Ethernet TOE

Researchers from the Network Based Computing Laboratory at Ohio State University, Los Alamos National Lab, and U. C. Riverside studied the performance of a 10-Gigabit Ethernet TCP Offload Engine (TOE), the Chelsio T110. The presentation places TOEs among current 10-Gigabit Ethernet adapter technologies, which aim for higher performance at low cost, and compares the TOE against a host-based TCP/IP stack. It covers an overview of TOEs, an experimental evaluation at the sockets, MPI, and application (Apache web-server) levels, and conclusions and future work.

Presentation Transcript

NETWORK BASED COMPUTING LABORATORY

Performance Characterization of a 10-Gigabit Ethernet TOE

W. Feng, C. Baron, P. Balaji, L. N. Bhuyan, D. K. Panda

- Advanced Computing Lab, Los Alamos National Lab
- Network Based Computing Lab, Ohio State University
- CARES Group, U. C. Riverside

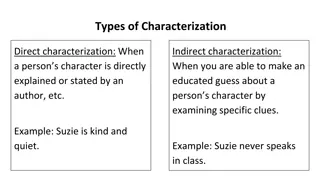

Ethernet Overview

- Ethernet is the most widely used network infrastructure today
- Traditionally Ethernet has been notorious for performance issues
  - Near an order-of-magnitude performance gap compared to IBA, Myrinet, etc.
- Cost conscious architecture
  - Most Ethernet adapters were regular (layer 2) adapters
  - Relied on host-based TCP/IP for network and transport layer support
  - Compatibility with existing infrastructure (switch buffering, MTU)
- Used by 42.4% of the Top500 supercomputers
- Key: Reasonable performance at low cost
  - TCP/IP over Gigabit Ethernet (GigE) can nearly saturate the link for current systems
  - Several local stores give out GigE cards free of cost!
- 10-Gigabit Ethernet (10GigE) recently introduced
  - 10-fold (theoretical) increase in performance while retaining existing features

10GigE: Technology Trends

Broken into three levels of technologies:

- Regular 10GigE adapters
  - Layer-2 adapters
  - Rely on host-based TCP/IP to provide network/transport functionality
  - Could achieve a high performance with optimizations [feng03:hoti, feng03:sc]
- TCP Offload Engines (TOEs) [Evaluation based on the Chelsio T110 TOE adapters]
  - Layer-4 adapters
  - Have the entire TCP/IP stack offloaded on to hardware
  - Sockets layer retained in the host space
- RDDP-aware adapters
  - Layer-4 adapters
  - Entire TCP/IP stack offloaded on to hardware
  - Support more features than TCP Offload Engines
    - No sockets! Richer RDDP interface!
    - E.g., out-of-order placement of data, RDMA semantics

Presentation Overview

- Introduction and Motivation
- TCP Offload Engines Overview
- Experimental Evaluation
- Conclusions and Future Work

What is a TCP Offload Engine (TOE)?

[Figure: two layered stacks side by side, each divided into User, Kernel, and Hardware regions.]

- Traditional TCP/IP stack, top to bottom:
  - Application or Library (user)
  - Sockets Interface
  - TCP, IP, Device Driver (kernel)
  - Network Adapter, e.g., 10GigE (hardware)
- TOE stack, top to bottom:
  - Application or Library (user)
  - Sockets Interface
  - TCP, IP, Device Driver (kernel)
  - Offloaded TCP, Offloaded IP, Network Adapter, e.g., 10GigE (hardware)

Interfacing with the TOE

[Figure: two layered stacks, each running from the Application or Library down through the Device Driver and Network Features (e.g., Offloaded Protocol) to the High Performance Network Adapter, with Control Path and Data Path labels.]

- High Performance Sockets
  - Diagram components: High Performance Sockets, User-level Protocol, Traditional Sockets Interface, TCP/IP
  - No changes required to the core kernel
  - Some of the sockets functionality duplicated
- TCP Stack Override
  - Diagram components: Sockets Layer, toedev, TOM, TCP/IP
  - Kernel needs to be patched
  - Some of the TCP functionality duplicated
  - No duplication in the sockets functionality

What does the TOE (NOT) provide?

[Figure: layered stack, top to bottom: Application or Library; Traditional Sockets Interface; Transport Layer (TCP); Network Layer (IP); Device Driver; Network Adapter (e.g., 10GigE). Region labels: User, Kernel, Kernel or Hardware, Hardware.]

1. Compatibility: network-level compatibility with existing TCP/IP/Ethernet; application-level compatibility with the sockets interface
2. Performance: application performance no longer restricted by the performance of the traditional host-based TCP/IP stack
3. Feature-rich interface: application interface restricted to the sockets interface! [rait05]

[rait05]: Supporting iWARP Compatibility and Features for Regular Network Adapters. P. Balaji, H. W. Jin, K. Vaidyanathan and D. K. Panda. In the RAIT workshop; held in conjunction with Cluster Computing, Aug 26th, 2005.

Experimental Test-bed and the Experiments

- Two test-beds used for the evaluation
  - Two 2.2GHz Opteron machines with 1GB of 400MHz DDR SDRAM
    - Nodes connected back-to-back
  - Four 2.0GHz quad-Opteron machines with 4GB of 333MHz DDR SDRAM
    - Nodes connected with a Fujitsu XG1200 switch (450ns flow-through latency)
- Evaluations in three categories
  - Sockets-level evaluation
    - Single-connection micro-benchmarks
    - Multi-connection micro-benchmarks
  - MPI-level micro-benchmark evaluation
  - Application-level evaluation with the Apache web-server

Latency and Bandwidth Evaluation (MTU 9000)

[Figure: two panels comparing Non-TOE and TOE. Left: Ping-pong Latency (MTU 9000), latency (us) vs. message size (bytes). Right: Uni-directional Bandwidth (MTU 9000), bandwidth (Mbps) vs. message size (bytes).]

- TOE achieves a latency of about 8.6us and a bandwidth of 7.6Gbps at the sockets layer
- Host-based TCP/IP achieves a latency of about 10.5us (25% higher) and a bandwidth of 7.2Gbps (5% lower)
- For Jumbo frames, host-based TCP/IP performs quite close to the TOE
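The slides do not show the benchmark code, so the following is only a minimal sketch of how a sockets-level ping-pong latency test of this kind is commonly written. It runs over the loopback interface rather than a 10GigE link, and the function names (`recv_exact`, `echo_server`, `ping_pong_latency_us`) and the default iteration count are assumptions made for illustration. Because it uses only the standard sockets interface, the same code would in principle run unchanged over either a host-based stack or a TOE.

```python
import socket
import threading
import time


def recv_exact(sock, n):
    """Read exactly n bytes (TCP is a byte stream, so recv may return fewer)."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("peer closed the connection")
        buf.extend(chunk)
    return bytes(buf)


def echo_server(listener, msg_size, iterations):
    """Accept one connection and echo each message back to the sender."""
    conn, _ = listener.accept()
    with conn:
        # Disable Nagle's algorithm so small messages are sent immediately.
        conn.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
        for _ in range(iterations):
            conn.sendall(recv_exact(conn, msg_size))


def ping_pong_latency_us(msg_size=1, iterations=1000):
    """Return the mean one-way latency in microseconds (loopback only)."""
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
    listener.listen(1)
    port = listener.getsockname()[1]
    server = threading.Thread(
        target=echo_server, args=(listener, msg_size, iterations)
    )
    server.start()

    payload = b"x" * msg_size
    with socket.create_connection(("127.0.0.1", port)) as client:
        client.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
        start = time.perf_counter()
        for _ in range(iterations):
            client.sendall(payload)          # ping
            recv_exact(client, msg_size)     # pong
        elapsed = time.perf_counter() - start

    server.join()
    listener.close()
    # One iteration is a full round trip; one-way latency is half of it.
    return elapsed / iterations / 2 * 1e6
```

Calling `ping_pong_latency_us(msg_size=1)` gives a loopback figure that depends entirely on the local machine; it is not comparable to the numbers reported on the slide.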

Latency and Bandwidth Evaluation (MTU 1500)

[Figure: two panels comparing Non-TOE and TOE. Left: Ping-pong Latency (MTU 1500), latency (us) vs. message size (bytes). Right: Uni-directional Bandwidth (MTU 1500), bandwidth (Mbps) vs. message size (bytes).]

- No difference in latency for either stack
- The bandwidth of host-based TCP/IP drops to 4.9Gbps (more interrupts; higher overhead)
- For standard sized frames, TOE significantly outperforms host-based TCP/IP (segmentation offload is the key)
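A rough back-of-the-envelope calculation helps show why the smaller MTU costs the host stack more. The sketch below assumes every frame carries a full MTU of data and ignores protocol headers and interrupt coalescing, so it gives only an approximate frame rate; the helper name `frames_per_second` is hypothetical.

```python
def frames_per_second(bandwidth_gbps, mtu_bytes):
    """Approximate frames per second needed to sustain a given bandwidth.

    Assumption: each frame carries exactly mtu_bytes of data; headers and
    interrupt coalescing are ignored.
    """
    return bandwidth_gbps * 1e9 / (mtu_bytes * 8)


# Frame rates at the host-based bandwidths quoted on the slides.
standard = frames_per_second(4.9, 1500)  # roughly 408,000 frames/s
jumbo = frames_per_second(7.2, 9000)     # roughly 100,000 frames/s

# At equal bandwidth, 1500-byte frames mean six times as many frames
# for the host to process as 9000-byte frames.
ratio = frames_per_second(1.0, 1500) / frames_per_second(1.0, 9000)
```

Under these assumptions the host stack handles about four times as many frames per second at MTU 1500 as at MTU 9000, even though it delivers less bandwidth.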

Multi-Stream Bandwidth

[Figure: Multi-Stream Bandwidth, aggregate bandwidth (Mbps) vs. number of streams (1 to 12), Non-TOE and TOE.]

- The throughput of the TOE stays between 7.2 and 7.6Gbps

Hot Spot Latency Test (1 byte)

[Figure: Hot-Spot Latency, latency (us) vs. number of client processes (1 to 12), Non-TOE and TOE.]

- Connection scalability tested up to 12 connections; the TOE scales as well as or better than the host-based TCP/IP stack

Fan-in and Fan-out Throughput Tests

[Figure: two panels comparing Non-TOE and TOE. Left: Fan-in Throughput Test. Right: Fan-out Throughput Test. Both plot aggregate throughput (Mbps) vs. number of client processes (1 to 11).]

- Fan-in and Fan-out tests show similar scalability

MPI-level Comparison

[Figure: two panels comparing Non-TOE and TOE. Left: MPI Latency (MTU 1500), latency (us) vs. message size (bytes). Right: MPI Bandwidth (MTU 1500), bandwidth (Mbps) vs. message size (bytes).]

- MPI latency and bandwidth show trends similar to the socket-level latency and bandwidth

Application-level Evaluation: Apache Web-Server

[Figure: an Apache web-server serving several web clients.]

We perform two kinds of evaluations with the Apache web-server:

1. Single file traces
   - All clients always request the same file of a given size
   - Not diluted by other system and workload parameters
2. Zipf-based traces
   - The probability of requesting the Ith most popular document is inversely proportional to I^α
   - α is constant for a given trace; it represents the temporal locality of a trace
   - A high α value represents a high percent of requests for small files
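The Zipf-based request pattern described above can be sketched as follows. This is an illustrative generator, not the trace tool used in the study: the function names, the seeded random generator, and the document count are assumptions, and the mapping from document rank to file size (which the slide does not give) is left out.

```python
import random


def zipf_probabilities(num_docs, alpha):
    """Probability of each rank 1..num_docs, proportional to 1 / rank**alpha."""
    weights = [1.0 / (rank ** alpha) for rank in range(1, num_docs + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def zipf_trace(num_docs, alpha, num_requests, seed=0):
    """Return a list of requested document ranks (1 = most popular)."""
    rng = random.Random(seed)  # seeded so a trace can be regenerated
    ranks = list(range(1, num_docs + 1))
    probs = zipf_probabilities(num_docs, alpha)
    return rng.choices(ranks, weights=probs, k=num_requests)


# Compare how concentrated the requests are for two alpha values.
high = zipf_probabilities(1000, 0.9)
low = zipf_probabilities(1000, 0.1)
trace = zipf_trace(num_docs=1000, alpha=0.9, num_requests=10000)
```

With a higher α the most popular documents receive a larger share of the requests (`high[0]` exceeds `low[0]`), which is the temporal locality the slide refers to.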

Apache Web-server Evaluation

[Figure: two panels comparing Non-TOE and TOE. Left: Single File Trace Performance, transactions per second (TPS) vs. file size (1K to 1M). Right: Zipf Trace Performance, transactions per second vs. Alpha (0.9 to 0.1).]

Conclusions

- For a wide-spread acceptance of 10-GigE in clusters:
  - Compatibility
  - Performance
  - Feature-rich interface
- Network as well as Application-level compatibility is available
  - On-the-wire protocol is still TCP/IP/Ethernet
  - Application interface is still the sockets interface
- Performance Capabilities
  - Significant performance improvements compared to the host-stack
  - Close to 65% improvement in bandwidth for standard sized (1500byte) frames
- Feature-rich interface: Not quite there yet!
  - Extended Sockets Interface
  - iWARP offload

Continuing and Future Work

- Comparing 10GigE TOEs to other interconnects
  - Sockets Interface [cluster05]
  - MPI Interface
  - File and I/O sub-systems
- Extending the sockets interface to support iWARP capabilities [rait05]
- Extending the TOE stack to allow protocol offload for UDP sockets

Web Pointers

- Los Alamos National Lab: http://public.lanl.gov/radiant (feng@lanl.gov)
- Network Based Computing Laboratory (NOWLAB): http://nowlab.cse.ohio-state.edu (balaji@cse.ohio-state.edu)