Non-uniformly Communicating Data: A PETSc Case Study

Developing parallel applications involves discretizing physical equations, representing domains as data points, and abstracting details using numerical libraries like PETSc. PETSc provides tools for solving PDEs, creating vectors and matrices, and utilizing parallel data layout for efficient processing. Handling non-uniform and non-contiguous data communication poses challenges in optimizing MPI operations. This case study explores the impact of PETSc data layout and processing on MPI, discussing enhancements, optimizations, and future implications.

Uploaded on Feb 26, 2025 | 0 Views

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

Non-uniformly Communicating Non-contiguous Data: A Case Study with PETSc and MPI P. Balaji, D. Buntinas, S. Balay, B. Smith, R. Thakur and W. Gropp Mathematics and Computer Science Argonne National Laboratory

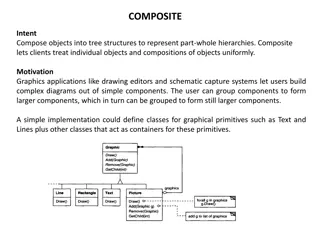

Numerical Libraries in HEC Developing parallel applications is a complex task Discretizing physical equations to numerical forms Representing the domain of interest as data points Libraries allow developers to abstract low-level details E.g., Numerical Analysis, Communication, I/O Numerical libraries (e.g., PETSc, ScaLAPACK, PESSL) Parallel data layout and processing Tools for distributed data layout (matrix, vector) Tools for data processing (SLES, SNES)

Overview of PETSc Portable, Extensible Toolkit for Scientific Computing Software tools for solving PDEs Suite of routines to create vectors, matrices and distributed arrays Sequential/parallel data layout Linear and nonlinear numerical solvers Widely used in Nanosimulations, Molecular dynamics, etc. Uses MPI for communication Application Codes Level of Abstraction PDE Solvers TS (Time Stepping) SNES SLES (Nonlinear Equation Solvers) (Linear Equation Solvers) KSP PC Draw (Krylov subspace Methods) (Preconditioners) Matrices Vectors Index Sets BLAS LAPACK MPI

Handling Parallel Data Layouts in PETSc Grid layout exposed to the application Structured or Unstructured (1D, 2D, 3D) Internally managed as a single vector of data elements Representation often suited to optimize its operations Impact on communication: Data representation and communication pattern might not be ideal for MPI communication operations Non-uniformity and Non-contiguity in communication are the primary culprits

Presentation Layout Introduction Impact of PETSc Data Layout and Processing on MPI MPI Enhancements and Optimizations Experimental Evaluation Concluding Remarks and Future Work

Data Layout and Processing in PETSc Grid layouts: data is divided among processes Ghost data points shared Non-contiguous Data Communication 2nd dimension of the grid Non-uniform communication Structure of the grid Stencil type used Sides larger than corners Process Boundary Local Data Point Ghost Data Point Proc 0 Proc 1 Proc 0 Proc 1 Box-type stencil Star-type stencil

Non-contiguous Communication in MPI MPI Derived Datatypes Application describes noncontiguous data layout to MPI Data is either packed to contiguous buffers and pipelined (sparse layouts) or sent individually (dense layouts) Non-contiguous Data layout Packing Buffer Send Data Save Context Save Context Good for simple algorithms, but very restrictive Lookup upcoming content to predecide algorithm to use Multiple parses on the datatype loses context!

Issues with Lost Datatype Context Rollback of context not possible Datatypes could be recursive Duplication of context not possible Context information might be large When datatype elements are small, context could be larger than the datatype itself Search of context possible, but very expensive Quadratically increasing search time with increasing datatype size Currently used mechanism!

Non-uniform Collective Communication Non-uniform communication algorithms are optimized for uniform communication Case Studies Allgatherv uses a ring algorithm Large Message Small Message Causes idleness if data volumes are very different Alltoallw sends data to nodes in round-robin manner MPI processing is sequential 1 6 2 0 5 3 4

Presentation Layout Introduction Impact of PETSc Data Layout and Processing on MPI MPI Enhancements and Optimizations Experimental Evaluation Concluding Remarks and Future Work

Dual-context Approach for Non-contiguous Communication Previous approaches are in-efficient in complex designs E.g., if a look-ahead is performed to understand the structure of the upcoming data, the saved context is lost Dual-context approach retains the data context Look-aheads are performed using a separate context Completely eliminates the search time Non-contiguous Data layout Packing Buffer Send Data Save Context Save Context Look-ahead

Non-Uniform Communication: AllGatherv Single point of distribution is the primary bottleneck Identify if a small fraction of messages are very large Floyd and Rivest Algorithm Linear time detection of Large Message Small Message outliers Binomial Algorithms Recursive doubling or Dissemination Logarithmic time

Non-uniform Communication: Alltoallw Distributing messages to be sent out as bins (based on message size) allows differential treatment to nodes Send out small messages first Nodes waiting for small messages have to wait lesser Ratio of increase in time for nodes waiting for larger messages is much smaller No skew for zero-byte data with lesser synchronization Most helpful for non-contiguous messages MPI processing (e.g., packing) is sequential for non- contiguous messages

Presentation Layout Introduction Impact of PETSc Data Layout and Processing on MPI MPI Enhancements and Optimizations Experimental Evaluation Concluding Remarks and Future Work

Experimental Testbed 64-node Cluster 32 nodes with dual Intel EM64T 3.6GHz processors 2MB L2 Cache, 2GB DDR2 400MHz SDRAM Intel E7520 (Lindenhurst) Chipset 32 nodes with dual Opteron 2.8GHz processors 1MB L2 Cache, 4GB DDR 400MHz SDRAM NVidia 2200/2050 Chipset RedHat AS4 with kernel.org kernel 2.6.16 InfiniBand DDR (16Gbps) Network: MT25208 adapters connected through a 144-port switch MVAPICH2-0.9.6 MPI implementation

Non-uniform Communication Evaluation Timing Breakup (1024 Grid Size) Non-contiguous Communication 900000 100% MVAPICH2-0.9.6 800000 90% MVAPICH2-New 80% 700000 70% 600000 Percentage Time 60% Latency (us) 500000 50% 400000 40% 300000 30% 20% 200000 10% 100000 0% 0 MVAPICH2-0.9.6 MVAPICH2-New 64 128 256 512 1024 Search Pack Communicate Grid Size Search time can dominate performance if the working context is lost!

AllGatherv Evaluation AllGatherv Latency vs. Message Size AllGatherv Latency vs. System Size 1800 1800 MVAPICH2-0.9.6 MVAPICH2-0.9.6 1600 1600 MVAPICH2-New MVAPICH2-New 1400 1400 1200 1200 Latency (us) Latency (us) 1000 1000 800 800 600 600 400 400 200 200 0 0 1 32K 4K 8 64 512 2 4 Number of Processes 8 16 32 64 Message Size (bytes)

Alltoallw Evaluation 1800 MVAPICH2-0.9.6 MVAPICH2-New 1600 1400 1200 Latency (us) 1000 800 600 400 200 0 2 4 8 16 32 64 128 Number of Processes Our algorithm reduces the skew introduced due to the Alltoallw operations by sending out smaller messages first and allowing the corresponding applications to progress

PETSc Vector Scatter PETSc Vecscatter Performance Relative Improvement 35000000 100 MVAPICH2-0.9.5 30000000 MVAPICH2-New 80 Hand-tuned 25000000 Percentage Improvement 60 Latency (us) 20000000 40 15000000 10000000 MVAPICH2-0.9.5 20 Hand-tuned 5000000 0 0 2 4 8 16 32 64 128 -20 2 4 Number of Processes 8 16 32 64 128 Number of Processes

3-D Laplacian Multigrid Solver Application Performance Performance Improvement 100 90 MVAPICH2-0.9.6 MVAPICH2-0.9.6 MVAPICH2-New Hand-Tuned 80 80 Hand-Tuned 70 Percentage Improvement (%) 60 Execution Time (s) 60 50 40 40 30 20 20 10 0 4 8 16 32 64 128 0 4 8 16 32 64 128 -20 Number of Processors Number of Processors

Presentation Layout Introduction Impact of PETSc Data Layout and Processing on MPI MPI Enhancements and Optimizations Experimental Evaluation Concluding Remarks and Future Work

Concluding Remarks and Future Work Non-uniform and Non-contiguous communication is inherent in several libraries and applications Current algorithms deal with non-uniform communication in a same way as uniform communication Demonstrated that more sophisticated algorithms can give close to 10x improvements in performance Designs are a part of MPICH2-1.0.5 and 1.0.6 To be picked up by MPICH2 derivatives in later releases Future Work: Skew tolerance in non-uniform communication Other libraries and applications

Thank You Group Web-page: http://www.mcs.anl.gov/radix Home-page: http://www.mcs.anl.gov/~balaji Email: balaji@mcs.anl.gov

Noncontiguous Communication in PETSc Data might not always be contiguously laid out in memory E.g., Second dimension of a structured grid Communication is performed by packing data Pipelining copy and communication is important for performance 0 8 16 192 384 vector (count = 8, stride = 8) contiguous (count = 3) contiguous (count = 3) contiguous (count = 3) double | double | double double | double | double double | double | double Copy Buffer

Hand-tuning vs. Automated optimization Nonuniformity and noncontiguity in data communication is inherent in several applications Communicating unequal amounts of data to the different peer processes Communication data from noncontiguous memory locations Previous research has primarily focused on uniform and contiguous data communication Accordingly applications and libraries tried hand-tuning attempts to convert communication formats Manually packing noncontiguous data Re-implementing collective operations in the application

Non-contiguous Communication in MPI MPI Derived Datatypes Common approach for non-contiguous communication Application describes noncontiguous data layout to MPI Data is either packed into contiguous memory (sparse layouts) or sent as independent segments (dense layouts) Pipelining of packing and communication improves performance, but requires context information! Non-contiguous Data layout Packing Buffer Send Data Save Context Save Context

Issues with Non-contiguous Communication Current approach is simple and works as long as there is a single parse on the noncontiguous data More intelligent algorithms might suffer: E.g., lookup upcoming datatype content to predecide algorithm to use Multiple parses on the datatype lose the context ! Searching for the lost context every time requires quadratically increasing time with datatype size PETSc non-contiguous communication suffers with such high search times

MPI-level Evaluation Alltoallw Performance Noncontiguous Communication Performance 2000 1000000 800000 MVAPICH2-0.9.6 MVAPICH2-0.9.6 1500 Time (us) Time (us) MVAPICH2-New 600000 MVAPICH2-New 1000 400000 500 200000 0 0 64 128 256 512 1024 2 4 8 16 32 64 128 Grid Size Number of Processors Allgatherv Performance Allgatherv Performance 2000 2000 MVAPICH2-0.9.6 MVAPICH2-0.9.6 1500 1500 Time (us) MVAPICH2-New Time (us) MVAPICH2-New 1000 1000 500 500 0 0 4 1 64 16 256 1024 4096 16384 2 4 8 16 32 64 Message Size (bytes) Number of Processors

Experimental Results MPI-level Micro-benchmarks Non-contiguous data communication time Non-uniform collective communication Allgatherv Operation Alltoallw Operation PETSc Vector Scatter Benchmark Performs communication only 3-D Laplacian Multigrid Solver Application Partial differential equation solver Utilizes PETSc numerical solver operations