Misleading Conclusions Based on Statistical Significance and Non-significance at the 2020 ECSS Conference

Researcher Will Hopkins challenges the traditional use of statistical significance and non-significance to draw conclusions about effects in sports science research. His analysis of presentations at the 2020 ECSS Conference shows how significance testing can lead to misleading interpretations. By using rejection of substantial and non-substantial hypotheses, and magnitude-based decisions (MBD), he highlights the limitations of relying solely on statistical significance.


Presentation Transcript

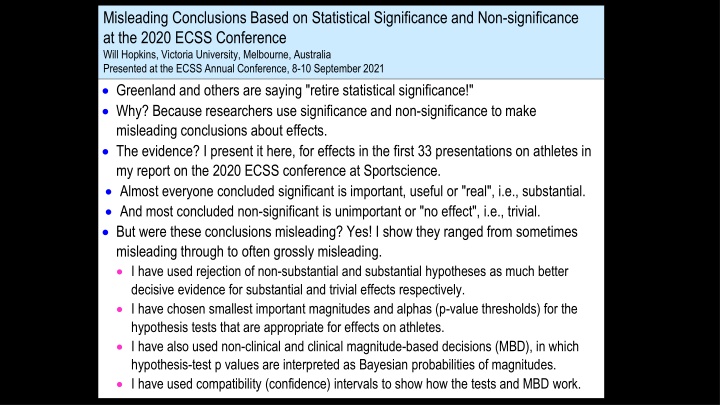

Misleading Conclusions Based on Statistical Significance and Non-significance at the 2020 ECSS Conference
Will Hopkins, Victoria University, Melbourne, Australia
Presented at the ECSS Annual Conference, 8-10 September 2021

Greenland and others are saying "retire statistical significance!" Why? Because researchers use significance and non-significance to draw misleading conclusions about effects.

The evidence? I present it here for effects in the first 33 presentations on athletes in my report on the 2020 ECSS conference at Sportscience. Almost everyone concluded that significant meant important, useful or "real", i.e., substantial. And most concluded that non-significant meant unimportant or "no effect", i.e., trivial.

But were these conclusions misleading? Yes! I show they ranged from sometimes misleading through to often grossly misleading.

I have used rejection of non-substantial and substantial hypotheses as much better decisive evidence for substantial and trivial effects, respectively. I have chosen smallest important magnitudes and alphas (p-value thresholds) for the hypothesis tests that are appropriate for effects on athletes. I have also used non-clinical and clinical magnitude-based decisions (MBD), in which hypothesis-test p values are interpreted as Bayesian probabilities of magnitudes. I have used compatibility (confidence) intervals to show how the tests and MBD work.
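To illustrate how a hypothesis-test p value can be read as a probability of a magnitude, here is a minimal Python sketch. It assumes a normally distributed effect estimate with known standard error `se`, a flat prior, and a smallest important value `swc` that is symmetric about zero; the function names are hypothetical, and the verbal scale (most unlikely <0.5%, very unlikely <5%, unlikely <25%, possibly <75%, likely <95%, very likely <99.5%, most likely otherwise) is assumed to be the one commonly used with MBD.

```python
from statistics import NormalDist

Z = NormalDist()  # standard normal distribution

def magnitude_probabilities(estimate, se, swc):
    """Return P(negative), P(trivial), P(positive) for the true effect.

    Assumes a normal sampling distribution, known se, a flat prior and a
    symmetric smallest important value swc. Under these assumptions
    P(positive) equals the one-sided p value for testing the hypothesis that
    the true effect is substantially positive (>= +swc), and likewise for
    P(negative).
    """
    p_negative = Z.cdf((-swc - estimate) / se)      # P(true < -swc)
    p_positive = 1 - Z.cdf((swc - estimate) / se)   # P(true > +swc)
    return p_negative, 1 - p_negative - p_positive, p_positive

# Assumed qualitative scale for MBD probabilities: (upper bound, term).
SCALE = [(0.005, "most unlikely"), (0.05, "very unlikely"), (0.25, "unlikely"),
         (0.75, "possibly"), (0.95, "likely"), (0.995, "very likely")]

def verbal(p):
    """Qualitative term for probability p on the assumed scale."""
    for upper, term in SCALE:
        if p < upper:
            return term
    return "most likely"
```

For an estimate of 0.5 with standard error 0.1 and `swc = 0.2`, the probability that the effect is substantially positive is about 99.87% ("most likely"). Equivalently, the p value for a test of the non-substantial (non-positive) hypothesis is about 0.001, so that hypothesis is rejected.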

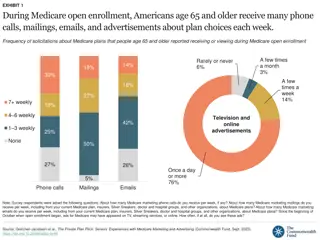

Significant Effects at the ECSS Conference

31 significant effects had enough data to do the hypothesis tests and non-clinical MBD. The figure showed the compatibility intervals corresponding to each pattern of rejected hypotheses:

[Figure: 90% compatibility intervals for the significant effects, plotted against the value of the effect statistic, with negative, trivial and positive regions.]

| Hypothesis rejected | Conclusion via hypothesis testing | Conclusion via non-clinical MBD | Number of effects |
|---|---|---|---|
| Non-positive or non-negative | Positive or negative | Very/most likely positive or negative | 16 (52%) |
| Negative only, or positive only | Not negative, or not positive | Possibly/likely positive &/or trivial, or possibly/likely negative &/or trivial | 12 (39%) |
| Negative and positive | Trivial | Very/most likely trivial | 3 (10%) |

Bars are 90% compatibility intervals. The 95% CIs did not overlap zero (i.e., the effects were statistically significant). Arrows indicate that some CIs were very wide.

Authors concluded that all but one of these effects (97%) were substantial. In terms of the evidence provided by hypothesis testing or non-clinical MBD: that conclusion was justified only half the time (52%); that conclusion was often (39%) misleading; that conclusion was sometimes (10%) grossly misleading. Hence significance was unacceptably misleading for non-clinical assessment of effects.
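The logic linking rejected hypotheses to conclusions can be sketched in Python with 90% intervals. This is an illustration under stated assumptions, not the analysis actually run for the slides: it assumes a normally distributed estimate with known standard error and a smallest important value `swc` symmetric about zero, and `compatibility_interval`, `is_significant` and `nonclinical_conclusion` are hypothetical names. A hypothesis counts as rejected when the 90% interval (one-sided alpha 0.05) lies entirely outside it.

```python
from statistics import NormalDist

def compatibility_interval(estimate, se, level):
    """Two-sided compatibility (confidence) interval, assuming a normal estimate."""
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return estimate - z * se, estimate + z * se

def is_significant(estimate, se):
    """Conventional nil-hypothesis test: the 95% interval excludes zero."""
    lower, upper = compatibility_interval(estimate, se, 0.95)
    return lower > 0 or upper < 0

def nonclinical_conclusion(estimate, se, swc):
    """Return (hypotheses rejected, conclusion) using a 90% interval.

    swc is the smallest important magnitude (assumed symmetric about zero).
    """
    lower, upper = compatibility_interval(estimate, se, 0.90)
    if lower > swc:        # interval lies above +swc: non-positive rejected
        return "non-positive", "positive"
    if upper < -swc:       # interval lies below -swc: non-negative rejected
        return "non-negative", "negative"
    neg_rejected = lower > -swc   # excludes substantially negative values
    pos_rejected = upper < swc    # excludes substantially positive values
    if neg_rejected and pos_rejected:
        return "negative and positive", "trivial"
    if neg_rejected:
        return "negative only", "not negative"
    if pos_rejected:
        return "positive only", "not positive"
    return "none", "unclear"
```

With `swc = 0.2`, an estimate of 0.1 with standard error 0.04 is statistically significant (95% interval about 0.02 to 0.18), yet both substantial hypotheses are rejected, so the effect is decisively trivial: a significant effect for which a conclusion of "substantial" would be grossly misleading.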

23 of these significant effects were clinically relevant. I analyzed them with tests of the harmful and beneficial hypotheses appropriate for clinical MBD:

[Figure: compatibility intervals for the clinically relevant significant effects, plotted against the value of the effect statistic, with harmful, trivial and beneficial regions.]

| Hypothesis rejected | Conclusion via clinical MBD | Number of effects |
|---|---|---|
| Harmful | Very/most likely beneficial | 6 (26%) |
| Harmful | Possibly/likely beneficial | 8 (35%) |
| Beneficial | Likely trivial | 3 (13%) |
| Beneficial | Likely harmful | 1 (4%) |
| Neither | Unclear | 5 (22%) |

Bars are 99% compatibility intervals on the harm side and 50% on the beneficial side. The 95% compatibility intervals did not overlap zero (i.e., the effects were all statistically significant).

Authors concluded that all but one of these effects (96%) were substantial and presumably beneficial or harmful in a clinical or practical setting. In terms of the evidence provided by clinical MBD: that conclusion was often (65%) justified; that conclusion was sometimes (13%) misleading; that conclusion was sometimes (22%) potentially unethical (unacceptable risk of harm). However, these unclear effects became possibly or likely beneficial with odds-ratio clinical MBD, so most conclusions (87%) were justified. Hence significance was somewhat misleading for clinically relevant effects.
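The two clinical tests can be sketched in Python. The sketch assumes higher values are beneficial, a normal estimate with known standard error, a flat prior and a smallest important value `swc` symmetric about zero; `clinical_mbd` and its decision labels are hypothetical. Rejecting harm at one-sided alpha 0.005 corresponds to the lower limit of a 99% interval lying above `-swc`, and rejecting benefit at one-sided alpha 0.25 corresponds to the upper limit of a 50% interval lying below `+swc`.

```python
from statistics import NormalDist

Z = NormalDist()
ALPHA_HARM = 0.005    # one-sided; the 99% interval limit on the harm side
ALPHA_BENEFIT = 0.25  # one-sided; the 50% interval limit on the benefit side

def clinical_mbd(estimate, se, swc):
    """Return (risk of harm, chance of benefit, decision).

    Assumes higher values are beneficial, a normal sampling distribution,
    known se, a flat prior and a symmetric smallest important value swc.
    """
    p_harm = Z.cdf((-swc - estimate) / se)         # P(true < -swc)
    p_benefit = 1 - Z.cdf((swc - estimate) / se)   # P(true > +swc)
    harm_rejected = p_harm < ALPHA_HARM
    benefit_rejected = p_benefit < ALPHA_BENEFIT
    if harm_rejected and benefit_rejected:
        decision = "trivial"
    elif harm_rejected:
        decision = "beneficial"            # chance of benefit is at least 25%
    elif benefit_rejected:
        decision = "trivial or harmful"    # not worth implementing
    else:
        decision = "unclear"               # implementing would risk harm
    return p_harm, p_benefit, decision
```

For example, with `swc = 0.1`, an estimate of 0.4 with standard error 0.2 is statistically significant (95% interval about 0.01 to 0.79), but the risk of harm is about 0.6%, so the harmful hypothesis is not rejected and the effect is clinically unclear.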

Non-significant Effects at the ECSS Conference

20 non-significant effects had enough data to do the hypothesis tests and non-clinical MBD:

[Figure: 90% compatibility intervals for the non-significant effects, plotted against the value of the effect statistic, with negative, trivial and positive regions.]

| Hypothesis rejected | Conclusion via hypothesis testing | Conclusion via non-clinical MBD | Number of effects |
|---|---|---|---|
| Negative and positive | Trivial | Very/most likely trivial | 0 (0%) |
| Negative only, or positive only | Not negative, or not positive | Possibly/likely positive &/or trivial, or possibly/likely negative &/or trivial | 10 (50%) |
| None | None | Unclear | 10 (50%) |

Bars are 90% compatibility intervals. The 95% CIs all overlapped zero (i.e., the effects were statistically non-significant).

Authors concluded that 17 of these effects (85%) were trivial. In terms of hypothesis testing or non-clinical MBD: that conclusion was justified none of the time; that conclusion was often (50%) misleading; that conclusion was often (50%) grossly misleading. Hence non-significance was unacceptably misleading for non-clinical assessment of effects.
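Why non-significance is not evidence of triviality can be sketched by comparing the nil-hypothesis p value with the one-sided p values for the substantial hypotheses. The same assumptions apply (normal estimate, known `se`, symmetric `swc`); the function names and the example numbers are hypothetical.

```python
from statistics import NormalDist

Z = NormalDist()

def nil_p_value(estimate, se):
    """Two-sided p value for the nil hypothesis (true effect = 0)."""
    return 2 * (1 - Z.cdf(abs(estimate) / se))

def substantial_p_values(estimate, se, swc):
    """One-sided p values for the substantially negative (true <= -swc) and
    substantially positive (true >= +swc) hypotheses, assuming a normal
    estimate with known se and a symmetric smallest important value swc."""
    p_negative = Z.cdf((-swc - estimate) / se)
    p_positive = 1 - Z.cdf((swc - estimate) / se)
    return p_negative, p_positive

def describe(estimate, se, swc, alpha=0.05):
    """Return (significant?, substantial hypotheses rejected).

    alpha is two-sided for the nil test (95% interval) and one-sided for the
    substantial tests (90% interval), as for non-clinical effects.
    """
    significant = nil_p_value(estimate, se) < alpha
    p_neg, p_pos = substantial_p_values(estimate, se, swc)
    rejected = [name for name, p in (("negative", p_neg), ("positive", p_pos))
                if p < alpha]
    return significant, rejected
```

With `swc = 0.2`, an estimate of 0.3 with standard error 0.2 has a nil-hypothesis p value of about 0.13 (non-significant), but the negative hypothesis is rejected (p ≈ 0.006) while the positive hypothesis is not (p ≈ 0.69), so the effect is possibly positive rather than trivial. An estimate of 0 with standard error 0.3 rejects neither hypothesis (p ≈ 0.25 for each), so it is unclear.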

16 of these non-significant effects were clinically relevant. I analyzed them with tests of the harmful and beneficial hypotheses appropriate for clinical MBD:

[Figure: compatibility intervals for the clinically relevant non-significant effects, plotted against the value of the effect statistic, with harmful, trivial and beneficial regions.]

| Hypothesis rejected | Conclusion via clinical MBD | Number of effects |
|---|---|---|
| Both | Likely trivial | 0 (0%) |
| Harmful | Possibly beneficial | 3 (19%) |
| Beneficial | Possibly trivial/harmful | 0 (0%) |
| Neither | Unclear | 13 (81%) |

Bars are 99% compatibility intervals on the harm side and 50% on the beneficial side. The 95% CIs all overlapped zero (i.e., the effects were statistically non-significant).

Authors concluded that 13 of these effects (81%) were trivial. In terms of clinical MBD: a conclusion of trivial was justified none of the time; that conclusion was sometimes (19%) misleading, because the effect could be beneficial; that conclusion was often (81%) misleading, because the effect could be anything. A further three (19%) of these effects were potentially beneficial with odds-ratio MBD. So MBD provides reasonable evidence of benefit for 19 to 38% of non-significant effects. Hence non-significance was unacceptably misleading for clinically relevant effects.
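The odds-ratio version of clinical MBD can be sketched as follows. This assumes the rule compares the odds of benefit with the odds of harm and uses as its threshold the odds ratio implied by the two clinical alphas (25% chance of benefit, 0.5% risk of harm), which works out at about 66; the function names, the way the threshold is computed, and the example numbers are assumptions for illustration, not taken from the slides.

```python
from statistics import NormalDist

Z = NormalDist()

# Assumed threshold: odds of a 25% chance of benefit divided by the odds of a
# 0.5% risk of harm (about 66).
THRESHOLD_OR = (0.25 / 0.75) / (0.005 / 0.995)

def odds_ratio_benefit_harm(estimate, se, swc):
    """Odds of benefit divided by odds of harm, assuming higher values are
    beneficial, a normal sampling distribution, known se and a flat prior."""
    p_harm = Z.cdf((-swc - estimate) / se)         # P(true < -swc)
    p_benefit = 1 - Z.cdf((swc - estimate) / se)   # P(true > +swc)
    return (p_benefit / (1 - p_benefit)) / (p_harm / (1 - p_harm))

def odds_ratio_decision(estimate, se, swc):
    """Intended for effects already unclear under the basic clinical rule:
    treat them as possibly beneficial when the odds ratio exceeds the
    assumed threshold."""
    ratio = odds_ratio_benefit_harm(estimate, se, swc)
    return ratio, ("possibly beneficial" if ratio > THRESHOLD_OR else "unclear")
```

With `swc = 0.1`, an estimate of 0.2 with standard error 0.15 is non-significant and unclear by the basic clinical rule (risk of harm about 2.3%, chance of benefit about 75%), but its odds ratio is about 127, so under these assumptions it would be treated as possibly beneficial.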

Summary

I have used hypothesis tests to show that statistical significance and non-significance produced an unacceptably high prevalence of misleading conclusions about effect magnitudes at this conference. In my experience, the prevalence of misleading conclusions in published articles is similar. Conclusions with MBD are consistent with the hypothesis tests, and the probabilistic assessments of magnitude are easier to understand and more realistic. MBD is also more useful for clinically or practically relevant effects, especially when the hypothesis of harm, but not of benefit, is rejected. Researchers should therefore account for sampling variation by replacing the nil-hypothesis test with tests of substantial and non-substantial magnitudes, or equivalently (and preferably) with MBD.

This slideshow is based on a full article in the 2021 issue of Sportscience at https://sportsci.org. See articles in the 2020 issue for evidence that MBD is equivalent to hypothesis tests.