Energy-Efficient Computing: Scaling Strategies and Power Optimization

This presentation examines energy-efficient computing: scaling stateless versus stateful systems, power consumption and waste in data centers, and strategies for minimizing power consumption while meeting response-time guarantees. It also covers the experimental setups used to evaluate the trade-off between power consumption and response time under different server provisioning strategies.

Presentation Transcript

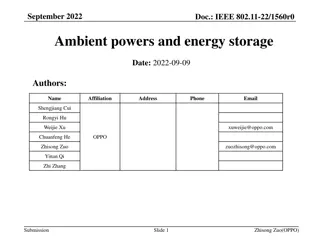

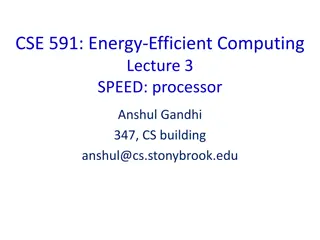

CSE 591: Energy-Efficient Computing, Lecture 5. Scaling: stateless vs. stateful. Anshul Gandhi, 347, CS building, anshul@cs.stonybrook.edu

Data Centers: Example: Facebook data center in Oregon. A collection of thousands of servers that stores data and serves user requests.

Power is expensive: US data centers consume 100 billion kWh annually, which costs $7.4 billion and produces as much CO2 as all of Argentina. Google is investing in power plants. Most power is actually wasted! [energystar.gov, McKinsey & Co., Gartner]

A lot of power is actually wasted: Servers are busy only 30% of the time on average, but they're often left on, wasting power. Server power states (Intel Xeon E5520, dual quad-core, 2.27 GHz): BUSY server: 200 Watts; IDLE server: 140 Watts; OFF server: 0 Watts. Setup cost: 260 s at 200 W (+more). Figure: demand over time, with provisioning for peak.
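To make the waste concrete, the following sketch computes the average draw of an always-on server from the slide's figures (200 W busy, 140 W idle, busy 30% of the time). The function names, and the simplification that a server is either fully busy or fully idle at any instant, are assumptions made for illustration.

```python
BUSY_W = 200.0  # power of a busy server (from the slide)
IDLE_W = 140.0  # power of an idle server (from the slide)


def average_power(busy_fraction, busy_w=BUSY_W, idle_w=IDLE_W):
    """Mean power (W) of a server that is never turned off."""
    if not 0.0 <= busy_fraction <= 1.0:
        raise ValueError("busy_fraction must be between 0 and 1")
    return busy_fraction * busy_w + (1.0 - busy_fraction) * idle_w


def idle_energy_share(busy_fraction, busy_w=BUSY_W, idle_w=IDLE_W):
    """Fraction of the server's total energy that is spent while idle."""
    idle_part = (1.0 - busy_fraction) * idle_w
    return idle_part / average_power(busy_fraction, busy_w, idle_w)


avg_w = average_power(0.30)            # 0.3 * 200 + 0.7 * 140
idle_share = idle_energy_share(0.30)   # 98 / 158
print(f"average power: {avg_w:.0f} W, idle share: {idle_share:.0%}")
```

Under these assumptions, well over half of the energy of a 30%-busy server goes to idling, which is the waste that turning servers off tries to recover.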

Problem statement: Given unpredictable demand, how do we provision capacity to minimize power consumption without violating response time guarantees (95th percentile)? Options for unneeded capacity:

1. Turn servers off: save power
2. Release VMs: save rental cost
3. Repurpose: additional work done

Figure: demand over time.

Experimental setup: 7 servers (key-value store), 1 server (500 GB), 28 servers. Response time: time taken to complete the request. A single request: 120 ms, 3000 KV pairs.

Experimental setup (continued): Goal: provision capacity to minimize power consumption without violating the response time SLA. SLA: T95 < 400-500 ms.
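The SLA is stated on T95, the 95th percentile of response time. A minimal sketch of how such a check could be computed from measured response times follows. The nearest-rank percentile definition, the function names, and the sample values are assumptions; the slides do not say how T95 was measured.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    if not values:
        raise ValueError("need at least one sample")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def meets_sla(response_times_ms, limit_ms=400.0):
    """True if T95 is strictly below the SLA limit."""
    return percentile(response_times_ms, 95) < limit_ms


# Hypothetical measurements: mostly fast requests plus a few slow outliers.
ok_samples = [120.0] * 95 + [900.0] * 5
bad_samples = [120.0] * 94 + [900.0] * 6

print(meets_sla(ok_samples), meets_sla(bad_samples))
```

A percentile target tolerates a small fraction of slow requests, which is why a handful of outliers does not violate the SLA in the first sample set but one more does in the second.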

AlwaysOn: Static provisioning policy. Knows the maximum request rate into the entire data center ($r_{max} = 800$ req/s). What request rate can each server handle? Figure: 95% response time (ms) versus arrival rate (req/s) for 1 server; the curve reaches 400 ms at 60 req/s. Hence $k = \lceil r_{max} / 60 \rceil = \lceil 800 / 60 \rceil = 14$ servers.
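The AlwaysOn sizing is a single division rounded up. A small sketch, using the 800 req/s peak and the 60 req/s per-server rate from the slide (the function name is hypothetical):

```python
import math


def servers_needed(request_rate, per_server_rate=60.0):
    """Smallest number of servers that keeps each server at or below per_server_rate."""
    if request_rate < 0 or per_server_rate <= 0:
        raise ValueError("rates must be non-negative and per_server_rate positive")
    return math.ceil(request_rate / per_server_rate)


k_always_on = servers_needed(800)  # peak rate from the slide
print(k_always_on)
```

Rounding up matters here: 800 / 60 is about 13.3, and 13 servers would push each server past the rate at which it still meets the 400 ms target.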

AlwaysOn results: T95 = 291 ms, Pavg = 2,323 W.

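Reactive: $k_{reqd}(t) = \lceil r_{current}(t) / 60 \rceil$. Results: T95 = 11,003 ms, Pavg = 1,281 W. With x% extra capacity: $k_{reqd}(t) = \lceil r_{current}(t) / 60 \rceil + x\%$. Results for x = 100%: T95 = 487 ms, Pavg = 2,218 W.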
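A sketch of the Reactive rule follows. It assumes that "x% extra" means the base server count is scaled up by x% and rounded up; the slide does not spell out the rounding, and the function name and example rate are hypothetical.

```python
import math


def reactive_servers(current_rate, per_server_rate=60.0, extra_pct=0.0):
    """Servers required for the current request rate, plus an optional safety margin.

    Assumption: the margin scales the base count by (1 + extra_pct / 100).
    """
    base = math.ceil(current_rate / per_server_rate)
    return math.ceil(base * (1.0 + extra_pct / 100.0))


plain = reactive_servers(300)                    # no margin
padded = reactive_servers(300, extra_pct=100.0)  # x = 100%
print(plain, padded)
```

With x = 100% the policy runs twice the servers it strictly needs, which fits the reported pattern of much lower T95 at a much higher average power.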

Predictive: Use a window of observed request rates to predict the request rate at time (t+260) seconds, and turn servers on/off based on this prediction. Linear Regression: T95 = 2,544 ms, Pavg = 2,161 W. Moving Window Average: T95 = 7,740 ms, Pavg = 1,276 W.
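The two predictors named above can be sketched as follows. This is an illustrative implementation, not the one used in the experiments: the window contents, the sampling interval, and the function names are assumptions. Only the 260 s horizon comes from the slide.

```python
def moving_window_average(rates):
    """Predict the future rate as the mean of the observed window."""
    if not rates:
        raise ValueError("window is empty")
    return sum(rates) / len(rates)


def linear_regression_forecast(times, rates, horizon):
    """Fit a least-squares line to (times, rates) and evaluate it at times[-1] + horizon."""
    n = len(times)
    if n < 2 or n != len(rates):
        raise ValueError("need at least two samples and equal-length inputs")
    mean_t = sum(times) / n
    mean_r = sum(rates) / n
    var_t = sum((t - mean_t) ** 2 for t in times)
    if var_t == 0:
        raise ValueError("all timestamps are identical")
    slope = sum((t - mean_t) * (r - mean_r) for t, r in zip(times, rates)) / var_t
    intercept = mean_r - slope * mean_t
    # A request rate cannot be negative, so clamp the extrapolation at zero.
    return max(0.0, intercept + slope * (times[-1] + horizon))


# Hypothetical window: rate sampled every 10 s, rising by 1 req/s per second.
window_t = [0, 10, 20, 30, 40]
window_r = [100, 110, 120, 130, 140]

mwa = moving_window_average(window_r)
lr = linear_regression_forecast(window_t, window_r, horizon=260)
print(mwa, lr)
```

On a steadily rising load the moving average lags behind the trend while the regression extrapolates it, which is one plausible reading of why the two predictors land at such different power and response-time points.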

AutoScale: Predictive and Reactive are too quick to turn servers off. If the request rate rises again, we have to wait for the full setup time (260 s).

Two new ideas:

1. Heuristic: wait for some time ($t_{wait}$) before turning idle servers off, chosen so that Energy(wait) = Energy(setup): $P_{idle} \cdot t_{wait} = P_{max} \cdot t_{setup}$.
2. Load balancing? Un-balance the load: pack jobs on as few servers as possible without violating SLAs (10 jobs/server). Figure: 95% response time versus jobs at server.

[Gandhi et al., Allerton Conference on Communication, Control, and Computing, 2011] [Gandhi et al., Open Cirrus Summit, 2011]
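Both ideas can be sketched in a few lines. The wait time follows from the energy balance on the slide. Plugging in the 140 W idle power, 200 W setup power, and 260 s setup time quoted earlier in the lecture is an assumption about which values are meant. The packing routine is a simplified illustration of un-balanced routing with hypothetical names, not the authors' implementation.

```python
def wait_time(p_idle_w, p_max_w, t_setup_s):
    """Solve P_idle * t_wait = P_max * t_setup for t_wait (seconds)."""
    if p_idle_w <= 0:
        raise ValueError("idle power must be positive")
    return p_max_w * t_setup_s / p_idle_w


def pack_job(jobs_per_server, limit=10):
    """Route one job to the lowest-indexed server that has room.

    Mutates jobs_per_server and returns the chosen index, or None if all servers are full.
    """
    for index, jobs in enumerate(jobs_per_server):
        if jobs < limit:
            jobs_per_server[index] += 1
            return index
    return None


t_wait = wait_time(p_idle_w=140.0, p_max_w=200.0, t_setup_s=260.0)

servers = [0, 0, 0]
for _ in range(12):
    pack_job(servers)

print(round(t_wait), servers)
```

Packing leaves the highest-indexed servers empty, so they are the ones that sit idle long enough to reach the wait timeout and be turned off.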

Results: Figure panels: Reactive, AutoScale. [Gandhi et al., International Green Computing Conference, 2012] [Gandhi et al., HotPower, 2011]

Application in the Cloud: Diagram: Load Balancer ($\lambda$ req/sec), Application Tier, Caching Tier, Database ($\lambda_{DB}$ req/sec). Why have a caching tier?

1. Reduce database (DB) load ($\lambda_{DB} \ll \lambda$)

Application in the Cloud (continued): Diagram annotation: > 1/3 of the cost [Krioukov'10] [Chen'08]. Why have a caching tier?

1. Reduce database (DB) load ($\lambda_{DB} \ll \lambda$)
2. Reduce latency [Ousterhout'10]

Shrink your cache during low load.

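Will cache misses overwhelm the DB? Diagram: Load Balancer ($\lambda$ req/sec), Application Tier, Caching Tier (hit rate $p$), Database ($\lambda_{DB}$ req/sec), with $\lambda_{DB} = (1-p)\lambda$. Goal: keep $\lambda_{DB} = (1-p)\lambda$ low. If $\lambda$ drops, $(1-p)$ can be higher, so $p$ can be lower, which saves money.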
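The relation between total load, hit rate, and database load can be turned around to ask how low the hit rate may go before the database is overloaded. In this sketch the database capacity and the request rates are made-up numbers, and the function names are hypothetical.

```python
def db_load(total_rate, hit_rate):
    """Requests per second that miss the cache and reach the database."""
    return (1.0 - hit_rate) * total_rate


def min_hit_rate(total_rate, db_capacity):
    """Lowest hit rate that keeps the database load at or below db_capacity."""
    if db_capacity <= 0:
        raise ValueError("db_capacity must be positive")
    if total_rate <= db_capacity:
        return 0.0  # the database could absorb the whole load without a cache
    return 1.0 - db_capacity / total_rate


DB_CAPACITY = 100.0  # hypothetical: the database can sustain 100 req/s

p_high_load = min_hit_rate(1000.0, DB_CAPACITY)
p_low_load = min_hit_rate(500.0, DB_CAPACITY)
print(p_high_load, p_low_load)
```

Halving the request rate in this example lowers the required hit rate, which is the slack that allows the caching tier to shrink during low load.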

Are the savings significant? It depends on the popularity distribution. Figure: hit rate, p, versus caching tier size (% of data cached), with curves for Uniform and Zipf popularity. Annotations: "Small decrease in hit rate", "Large decrease in caching tier size", "Small decrease in % of data cached".
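The dependence on the popularity distribution can be illustrated numerically. The sketch assumes an idealized cache that always holds the most popular items, a Zipf exponent of 1, and a catalog of 10,000 items. None of these values come from the slides.

```python
def zipf_hit_rate(cached_items, total_items, exponent=1.0):
    """Hit rate when the cached_items most popular items are cached under Zipf popularity."""
    if not 0 <= cached_items <= total_items or total_items <= 0:
        raise ValueError("need 0 <= cached_items <= total_items and total_items > 0")
    weights = [1.0 / rank ** exponent for rank in range(1, total_items + 1)]
    return sum(weights[:cached_items]) / sum(weights)


def uniform_hit_rate(cached_items, total_items):
    """Hit rate when every item is equally popular."""
    if not 0 <= cached_items <= total_items or total_items <= 0:
        raise ValueError("need 0 <= cached_items <= total_items and total_items > 0")
    return cached_items / total_items


N = 10_000  # hypothetical catalog size
zipf_50 = zipf_hit_rate(N // 2, N)    # half of the data cached
zipf_10 = zipf_hit_rate(N // 10, N)   # a tenth of the data cached
uni_50 = uniform_hit_rate(N // 2, N)
uni_10 = uniform_hit_rate(N // 10, N)
print(f"Zipf: {zipf_50:.2f} -> {zipf_10:.2f}, Uniform: {uni_50:.2f} -> {uni_10:.2f}")
```

Under these assumptions, shrinking the cache from half of the data to a tenth costs the Zipf workload comparatively little hit rate, while the uniform workload loses hit rate in direct proportion to the data removed.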

Is there a problem? Performance can temporarily suffer if we lose a lot of hot data. Figure: mean response time (ms) versus time (min), annotated with "Shrink the cache" and "Response time stabilizes".

What can we do about the hot data? We need to transfer the hot data before shrinking the cache. Diagram labels: Start state, End state, Caching Tier, Retiring, Primary, Transfer, Option 1, Option 2.