Discussion of Randomized Experiments and Experimental Design Challenges

Randomized experiments often lack statistical power because many important outcomes are rare or highly variable. One remedy is to stratify randomization on baseline values of the outcome and use strata fixed effects to absorb residual variance correlated with the outcome. When strata attrit and treatment effects are heterogeneous, however, estimates with and without fixed effects can target different parameters, which matters for applied economists. The discussion argues for reporting results both with and without fixed effects and defends pairwise randomization as a design choice. It also argues that replication studies should test whether differences between estimates with and without fixed effects are statistically significant.

Presentation Transcript

Discussion of Bai, Hsieh, Liu, and Tabord-Meehan
Tristan Reed, World Bank Development Research Group
Northwestern Interactions Workshop, September 22, 2023

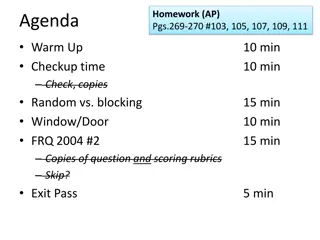

Motivation

- Randomized experiments struggle with statistical power.
  - It is expensive to treat or survey each observational unit, but many important outcomes are rare or have high variance (e.g., superstar firm growth, mortality).
- One solution is to stratify randomization on baseline values of the outcome and use strata fixed effects to control for residual variance that is correlated with the outcome.
  - The limit case is pairwise randomization: N = 2 per stratum.
- But if (i) some strata suffer attrition and (ii) treatment effects are heterogeneous across strata, then fixed effects drop the affected strata, and you estimate the average treatment effect only within the strata that survive attrition.
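To make the attrition point concrete, here is a minimal sketch with hypothetical numbers (not from the paper). It uses four pairs formed on a baseline outcome, with one treated and one control unit per pair. The two high-baseline pairs each lose one unit to attrition. The helper names `diff_in_means` and `pair_fe` are illustrative. The sketch relies on a standard algebraic fact for this one-treated-one-control design: OLS of the outcome on treatment plus pair dummies equals the average within-pair treated-minus-control difference over pairs in which both units are observed. A pair with only one remaining unit is fit exactly by its own dummy and contributes nothing.

```python
from statistics import mean

# Hypothetical toy data, NOT from the paper: (pair_id, treated, outcome, observed).
# Pairs are formed by sorting on a baseline outcome; pairs 2 and 3 have the
# highest baseline values. Outcomes of attrited units are never observed (None).
UNITS = [
    (0, 1, 3.0, True), (0, 0, 1.0, True),
    (1, 1, 5.0, True), (1, 0, 3.0, True),
    (2, 1, 12.0, True), (2, 0, None, False),   # control unit attrits
    (3, 1, None, False), (3, 0, 8.0, True),    # treated unit attrits
]

def diff_in_means(units):
    """Treated-minus-control mean over all non-attrited units (no fixed effects)."""
    obs = [u for u in units if u[3]]
    treated = [y for _, d, y, _ in obs if d == 1]
    control = [y for _, d, y, _ in obs if d == 0]
    return mean(treated) - mean(control)

def pair_fe(units):
    """Pair fixed-effects estimate for a one-treated-one-control design.

    Equals the mean within-pair difference over pairs where both units are
    observed; pairs reduced to a single unit by attrition drop out.
    """
    pairs = {}
    for pid, d, y, seen in units:
        if seen:
            pairs.setdefault(pid, {})[d] = y
    diffs = [p[1] - p[0] for p in pairs.values() if len(p) == 2]
    return mean(diffs)

print(f"without pair FE: {diff_in_means(UNITS):.3f}")  # 2.667
print(f"with pair FE:    {pair_fe(UNITS):.3f}")        # 2.000
```

The pair fixed-effects estimate uses only pairs 0 and 1. The difference in means also uses the surviving units of pairs 2 and 3. The two numbers differ because they average over different sets of units.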

Implications for applied economists

- This is actually a very general issue, which could arise in non-experimental studies any time you use fixed effects or controls, e.g.:
  - you include individual fixed effects, but some individuals attrit; or
  - you include controls, but some observations have missing control variables.
- It is a good recommendation to report results with and without fixed effects/controls if you have attrition or missingness (typically Column 1, Column 2, etc.).
  - The authors prefer the version without fixed effects (?).
- Implications for experimental design are less obvious.
  - Attrition rates and treatment effect heterogeneity are unknown ex ante.
  - Stratification on groups with N > 2 may suffer from less attrition but could provide smaller gains in statistical power, leading to weaker inference (see Bai, AER 2022).
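The power side of this tradeoff can be sketched by simulation. This is a minimal illustration under assumed conditions, not the authors' or Bai's procedure. It uses a hypothetical population of 200 units whose untreated outcome is 2 × baseline plus small noise, and an assumed constant treatment effect of 1.0. The code forms strata by sorting on the baseline value, treats exactly half of each stratum, and measures the spread of the difference-in-means estimate across re-randomizations. Every stratum has the same size and treated share, so the difference in means here coincides with the strata fixed-effects estimate. The sketch ignores attrition, so it shows only the precision gain from smaller strata, not their attrition cost. The function names are hypothetical.

```python
import random
from statistics import pstdev

def make_population(n, noise_sd=0.1, seed=0):
    """Hypothetical finite population: a smooth outcome strongly predicted by baseline."""
    rng = random.Random(seed)
    baseline = [rng.gauss(0, 1) for _ in range(n)]
    y0 = [2 * b + rng.gauss(0, noise_sd) for b in baseline]  # untreated outcome
    y1 = [y + 1.0 for y in y0]  # assumed constant treatment effect of 1.0
    return baseline, y0, y1

def assign(baseline, stratum_size, rng):
    """Sort on baseline, cut into consecutive strata, and treat half of each.

    stratum_size == len(baseline) is complete randomization;
    stratum_size == 2 is pairwise randomization.
    """
    order = sorted(range(len(baseline)), key=baseline.__getitem__)
    treated = [0] * len(baseline)
    for start in range(0, len(order), stratum_size):
        stratum = order[start:start + stratum_size]
        for i in rng.sample(stratum, len(stratum) // 2):
            treated[i] = 1
    return treated

def design_sd(pop, stratum_size, reps=1000, seed=1):
    """Std. dev. of the difference in means across re-randomizations of one design."""
    baseline, y0, y1 = pop
    rng = random.Random(seed)
    estimates = []
    for _ in range(reps):
        d = assign(baseline, stratum_size, rng)
        yt = [y1[i] for i, di in enumerate(d) if di == 1]
        yc = [y0[i] for i, di in enumerate(d) if di == 0]
        estimates.append(sum(yt) / len(yt) - sum(yc) / len(yc))
    return pstdev(estimates)

if __name__ == "__main__":
    pop = make_population(200)
    for k in (200, 10, 2):  # complete randomization, strata of 10, pairs
        print(f"stratum size {k:>3}: sd = {design_sd(pop, k):.4f}")
```

Under these assumptions, the spread should shrink as strata get smaller, with pairs the most precise. With a noisier outcome, or one that bunches so that larger strata are already homogeneous, the gain from pairs over larger strata can be much smaller.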

Reactions

- It is not clear that the ATE conditional on attrition is less (or more) interesting than the ATE from the full sample.
  - The ATE from the full sample need not equal the ATE in the population. The external validity of both the full and the attrited sample is questionable.
  - It is not falsifiable that either is "biased" (see Lant Pritchett).
  - One could prefer the ATE conditional on attrition if it is more precise, as in the meta-analysis literature.
- I disagree with the argument that we should prefer estimates without fixed effects, but agree that we should report them (in Column 1 or an appendix).
- Do not take away that pairwise randomization is worse than randomization with larger strata.
  - It would be bad if referees rejected papers a priori because they use pairwise randomization.
  - In experimental design, pairwise randomization could still maximize power, especially with a smooth outcome that has no obvious bunching to define N > 2 strata.
  - Pairwise randomization is likely more useful with one primary outcome of interest; N > 2 strata could potentially group observations by multiple correlated outcomes.
- Strata design needs to account for (i) the (co)variance of the outcomes of interest and (ii) potential treatment effect heterogeneity. One can get at this by looking at baseline values and reviewing the prior literature.

Replication with and without fixed effects

- The authors claim to find substantial differences between estimates with and without fixed effects in AER/AEJ papers, but don't evaluate whether the differences are statistically significant. This is incomplete and potentially misleading.
  - A tantalizing and mysterious claim (Remark 3.1) is that testing for differences between models is not possible because "specifications with fixed effects may in fact feature an asymptotic bias in general." Standard errors are left for future research.
  - Even so, the authors should report a test of whether the specifications have different coefficients, using the usual robust standard errors.
- Casaburi and Reed (2022, AEJ: Applied) as an example:
  - Randomized demand subsidies to firms, with pairwise stratification on baseline sales volume.
  - Test for price differences between subsidized and unsubsidized firms to reveal differentiation in a structural model. The subsidy is $150: if the price is $150 lower for treated firms, the market has complete differentiation.
  - The authors report that the original coefficient is 97% (!!) larger than the alternative, but one cannot reject that the two are identical given robust standard errors.
  - The qualitative interpretation is also unchanged: no difference in prices between treatment and control implies firms are undifferentiated.
  - Results are not "substantially different" with or without pair fixed effects.

|                    | Original | Alternative |
|--------------------|----------|-------------|
| Dependent variable | Price    | Price       |
| Treatment (=1)     | -32.52   | -0.860      |
|                    | (48.40)  | (39.18)     |
| Pair fixed effects | Yes      | No          |
| Observations       | 1,079    | 1,079       |
| R-squared          | 0.139    | 0.000       |

Note: Robust standard errors in parentheses.
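The test asked for here can be approximated from the reported Casaburi and Reed numbers alone. The sketch below is a z-test that the two Treatment coefficients are equal. It assumes zero covariance between them, because the covariance is not reported. A full test would estimate that covariance, for example by bootstrapping both specifications on the same resamples. The function name `diff_test` is hypothetical.

```python
from math import sqrt
from statistics import NormalDist

# Treatment coefficients and robust standard errors (Casaburi and Reed 2022).
B_ORIG, SE_ORIG = -32.52, 48.40  # with pair fixed effects
B_ALT, SE_ALT = -0.860, 39.18    # without pair fixed effects

def diff_test(b1, se1, b2, se2, cov=0.0):
    """Two-sided z-test of H0: b1 == b2 from two reported estimates.

    cov=0 is an ASSUMPTION: the covariance is not reported. Both estimates
    use the same 1,079 observations, so the true covariance is likely
    positive; if so, cov=0 overstates the variance of the difference and
    the test is conservative.
    """
    se_diff = sqrt(se1**2 + se2**2 - 2 * cov)
    z = (b1 - b2) / se_diff
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p

# Gap between the estimates as a share of the original coefficient.
rel_gap = (B_ORIG - B_ALT) / B_ORIG
z, p = diff_test(B_ORIG, SE_ORIG, B_ALT, SE_ALT)
print(f"relative gap = {rel_gap:.3f}, z = {z:.2f}, p = {p:.2f}")
# relative gap = 0.974, z = -0.51, p = 0.61
```

The relative gap of about 0.97 appears to correspond to the "97% larger" figure. Even so, the implied z-statistic is only about -0.51, far from conventional rejection thresholds.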

Implications for econometricians

- Econometrics has revolutionized our profession.
  - The most essential faculty member is probably the one who teaches econometrics (RIP Gary Chamberlain).
  - Unlike 25 years ago, we now have a standard toolkit for causal identification (i.e., DID, IV, RCT, RD).
- But publication incentives have caused a credibility counter-revolution: researchers now differentiate themselves by revisiting and reevaluating the toolkit.
  - Example of DID: there are many new and competing approaches, but in practice most give the same qualitative answer.
  - How do we balance novel insight against creating busy work and barriers to publication?
  - If empirics become less credible, economists could become less relevant in policy debates.
- Give applied people the benefit of the doubt. If you replicate a study using an alternative approach:
  - Replicate many papers using the same approach, not just one (as this paper does).
  - Show not whether a coefficient is different, but whether the main message of a paper changes.
  - Give clear implications for ex ante design, not just ex post analysis (as Bai, AER 2022, does).