Data Warehousing: Key Concepts and Characteristics

A data warehouse is a specialized database designed to support decision-making by storing integrated, time-variant, and nonvolatile data. It is subject-oriented and allows for the integration of data from various sources. Data marts are simplified versions of data warehouses focused on specific subjects. The presentation compares data warehouses and data marts, highlighting their differences in size, implementation time, and flexibility. The ETL process, involving extraction, transformation, and loading, is crucial for populating data warehouses.


Presentation Transcript

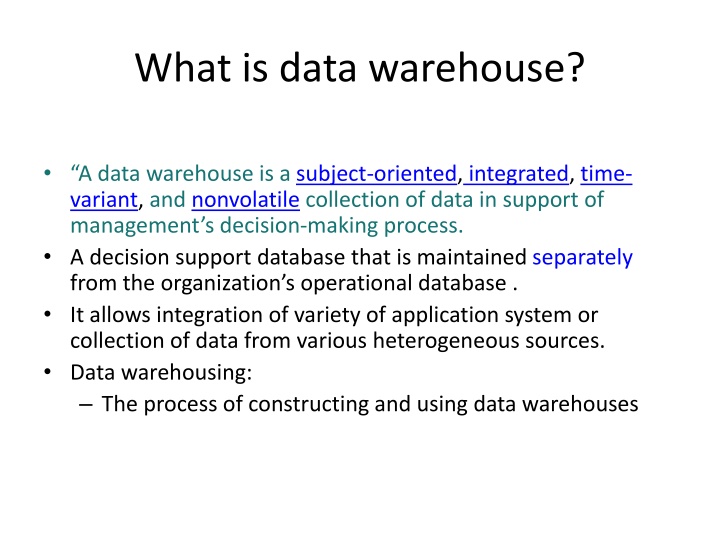

What is a data warehouse?

A data warehouse is a subject-oriented, integrated, time-variant, and nonvolatile collection of data in support of management's decision-making process. It is a decision-support database that is maintained separately from the organization's operational database. It allows the integration of a variety of application systems and the collection of data from various heterogeneous sources. Data warehousing is the process of constructing and using data warehouses.

Characteristics

Subject-oriented: The data gives information about a particular subject, such as customer, product, or sales, instead of about a company's ongoing operations.
Integrated: The data is gathered into the data warehouse from a variety of sources and merged into a coherent whole.
Time-variant: All data in the data warehouse is identified with a particular time period.
Nonvolatile: Data is stable in a data warehouse. More data is added, but data is never removed. This enables management to gain a consistent picture of the business.

Data Mart

A data mart is a simple form of a data warehouse that is focused on a single subject (or functional area), such as Sales, Finance, or Marketing. Data marts are often built and controlled by a single department within an organization. Data marts usually draw data from only a few sources. The sources could be internal operational systems, a central data warehouse, or external data.

Difference

| Data Warehouse | Data Mart |
| --- | --- |
| Holds multiple subject areas | Often holds only one subject area, for example Finance or Sales |
| Holds very detailed information | May hold more summarised data (although many hold full detail) |
| Works to integrate all data sources | Concentrates on integrating information from a given subject area or set of source systems |
| Does not necessarily use a dimensional model | Is built around a dimensional model using a star schema |
| Size is 100 GB to TB+ | Size is < 100 GB |
| Implementation time is months to years | Implementation time is months |
| Highly flexible | Restrictive |

Types Dependent

ETL Process

ETL stands for extraction, transformation, and loading. Its purpose is to get data out of the source and load it into the data warehouse; at its simplest, it is a process of copying data from one database to another. Data is extracted from an OLTP database, transformed to match the data warehouse schema, and loaded into the data warehouse database. ETL is the industry-standard term for data movement and transportation. It is an essential component for loading data into a DWH (data warehouse), ODS (operational data store), and DM (data mart). Many data warehouses also incorporate data from non-OLTP systems such as text files, legacy systems, and spreadsheets; such data also requires extraction, transformation, and loading. When defining ETL for a data warehouse, it is important to think of ETL as a process, not a physical implementation.

Extracting the data is the process of reading data from a database.

Transforming the data may involve the following tasks: applying business rules (so-called derivations, e.g., calculating new measures and dimensions); cleaning (e.g., mapping NULL to 0, or "Male" to "M" and "Female" to "F"); filtering (e.g., selecting only certain columns to load); splitting a column into multiple columns and vice versa; joining together data from multiple sources (e.g., lookup, merge); transposing rows and columns; and applying any kind of simple or complex data validation (e.g., if the first 3 columns in a row are empty, reject the row from processing).

Loading the data is the process of writing it into a data warehouse, a data repository, or other reporting applications.
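The three stages can be sketched in a few lines of Python. This is a minimal illustration, not a production pipeline: the in-memory `SOURCE_ROWS`, the column names, the `is_large` derivation, and the list used as the "warehouse" are all hypothetical stand-ins for a real source database and target.

```python
from typing import Iterable, Iterator, Optional

# Hypothetical source rows; a real job would read them from a database.
SOURCE_ROWS = [
    {"id": 1, "name": "Ann", "gender": "Female", "amount": "120.50", "notes": "x"},
    {"id": 2, "name": "Bob", "gender": "Male", "amount": None, "notes": "y"},
    {"id": None, "name": None, "gender": None, "amount": "10", "notes": "z"},
]

GENDER_CODES = {"Male": "M", "Female": "F"}


def extract(rows: Iterable[dict]) -> Iterator[dict]:
    """Extraction: read rows from the source one at a time."""
    yield from rows


def transform(row: dict) -> Optional[dict]:
    """Transformation: validate, clean, derive, and filter one row."""
    # Validation: reject the row if its first three columns are empty.
    if row["id"] is None and row["name"] is None and row["gender"] is None:
        return None
    # Cleaning: map NULL to 0 and long gender labels to one-letter codes.
    amount = float(row["amount"]) if row["amount"] is not None else 0.0
    gender = GENDER_CODES.get(row["gender"], row["gender"])
    # Filtering: "notes" is deliberately not copied to the output.
    # Derivation: "is_large" is a made-up business rule for illustration.
    return {
        "id": row["id"],
        "name": row["name"],
        "gender": gender,
        "amount": amount,
        "is_large": amount > 100,
    }


def load(rows: Iterable[dict], target: list) -> None:
    """Loading: append the transformed rows to the target store."""
    target.extend(rows)


def run_etl(source: Iterable[dict], target: list) -> list:
    transformed = (transform(row) for row in extract(source))
    load((row for row in transformed if row is not None), target)
    return target


warehouse = run_etl(SOURCE_ROWS, [])
```

Keeping each stage in its own function mirrors the idea that ETL is a process rather than a particular physical implementation: any stage can be swapped out without touching the others.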

Goals and Benefits

ETL facilitates data movement and transportation. It performs three basic operations on data: extraction, transformation, and loading. ETL processes are reusable, so they can be scheduled to perform data movement on a regular basis. ETL tools can use MPP (massively parallel processing) to handle large data volumes.

Strategy

ETL processes are usually grouped and executed as batch jobs. ETL tools such as Informatica PowerCenter are used to implement the ETL process. ETL processes are designed to be efficient, scalable, and maintainable.

When to Use

Use ETL for data movement across or within systems that involves large data volumes and complex business rules. It is required to load data into a DWH (data warehouse), ODS (operational data store), and DM (data mart).

Functions of Load Manager

Extract the data from the source system (gateways). Fast-load the extracted data into a temporary data store. Perform simple transformations into a structure similar to the one in the data warehouse.

Functions of Warehouse Manager

The warehouse manager analyzes the data to perform consistency and referential integrity checks. It creates indexes, business views, and partition views against the base data. It generates new aggregations and updates existing aggregations. It generates normalizations. It transforms and merges the source data into the published data warehouse. It backs up the data in the data warehouse. It archives the data that has reached the end of its captured life.

Query Manager

The query manager is responsible for directing queries to the suitable tables. By directing queries to the appropriate tables, it can increase the speed of querying and response generation.

Data Warehouse Models

Enterprise warehouse, virtual warehouse, and data mart.

Difference

| Operational Database (OLTP) | Data Warehouse (OLAP) |
| --- | --- |
| Customer oriented | Market oriented |
| Contains very detailed data | Holds large amounts of historical data and provides summarization and aggregation |
| ER data model and application-oriented database design | Star or snowflake database design |
| Focuses on current data | Spans multiple versions of a database schema |
| Access pattern is short, atomic transactions that need concurrency control and recovery mechanisms | Access pattern is read-only operations |
| Day-to-day operations | Long-term decision support |
| Users: thousands (clerks, DBAs, database professionals) | Users: hundreds (managers, executives, analysts) |
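The two access patterns can be illustrated with Python's built-in `sqlite3` module. This is only a sketch: the `sales` table and its columns are hypothetical, and a single in-memory table stands in for both systems, whereas a real deployment keeps the operational database and the warehouse separate.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE sales ("
    "id INTEGER PRIMARY KEY, region TEXT, year INTEGER, amount REAL)"
)

# OLTP-style access: a short, atomic transaction. Used as a context
# manager, the connection commits on success and rolls back on error.
with conn:
    conn.executemany(
        "INSERT INTO sales (region, year, amount) VALUES (?, ?, ?)",
        [("North", 2023, 100.0), ("North", 2023, 50.0), ("South", 2023, 70.0)],
    )

# OLAP-style access: a read-only query that summarizes many rows.
summary = conn.execute(
    "SELECT region, year, SUM(amount) FROM sales "
    "GROUP BY region, year ORDER BY region, year"
).fetchall()

conn.close()
```

The insert touches a few rows and must be atomic; the aggregate reads every row but changes nothing, which is why warehouses can be tuned for scans and summaries rather than for concurrent updates.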

Data Mining

Data mining is the task of discovering interesting patterns from large amounts of data, where the data can be stored in databases, data warehouses, or other information repositories. It is often defined as finding hidden information in a database. Data mining means mining knowledge from data; it is also referred to as knowledge discovery in databases (KDD).

Need for Data Mining

Data mining turns a large collection of data into knowledge. It processes data through algorithms. It helps an organization understand its business better and improve performance. It brings together methods from several domains, such as machine learning, statistics, and pattern recognition. Data mining is used in market analysis and management and in risk analysis and mitigation.

Classification

Classification maps data into predefined groups or classes. It is often referred to as supervised learning because the classes are determined before examining the data. Classification algorithms require that the classes be defined based on data attribute values. Pattern recognition is a type of classification where an input pattern is classified into one of several classes based on its similarity to these predefined classes.

Steps for Classification

Step 1: A classifier is built describing a predetermined set of data classes or concepts.
Step 2: The model is used for classification. First, the predictive accuracy of the classifier is estimated.

Classification Methods

Decision tree induction and Bayesian classification (the naïve Bayes classifier).

Decision Tree Induction

A decision tree is a flowchart-like tree structure, where each internal node (nonleaf node) denotes a test on an attribute, each branch represents an outcome of the test, and each leaf node (or terminal node) holds a class label. The topmost node in a tree is the root node.

Algorithm: Generate_decision_tree

Input: Data partition D, which is a set of training tuples and their associated class labels; attribute_list, the set of candidate attributes; Attribute_selection_method, a procedure to determine the splitting criterion that best partitions the data tuples into individual classes. This criterion consists of a splitting_attribute and, possibly, either a split point or a splitting subset.

Output: A decision tree.

Method:
    create a node N;
    if tuples in D are all of the same class C then
        return N as a leaf node labeled with the class C;
    if attribute_list is empty then
        return N as a leaf node labeled with the majority class in D; // majority voting
    apply Attribute_selection_method(D, attribute_list) to find the best splitting_criterion;
    label node N with splitting_criterion;
    if splitting_attribute is discrete-valued and multiway splits allowed then // not restricted to binary trees
        attribute_list ← attribute_list − splitting_attribute; // remove splitting_attribute
    for each outcome j of splitting_criterion // partition the tuples and grow subtrees for each partition
        let Dj be the set of data tuples in D satisfying outcome j; // a partition
        if Dj is empty then
            attach a leaf labeled with the majority class in D to node N;
        else attach the node returned by Generate_decision_tree(Dj, attribute_list) to node N;
    endfor
    return N;
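The method above can be sketched in Python as follows. The transcript does not say which attribute selection method is used, so this sketch assumes information gain. It also assumes that every attribute is discrete-valued and that multiway splits are allowed, so the splitting attribute is always removed. The toy weather data and the names `domains` and `classify` are illustrative only.

```python
import math
from collections import Counter


def majority_class(rows):
    """Most common class label among (features, label) pairs."""
    return Counter(label for _, label in rows).most_common(1)[0][0]


def entropy(rows):
    counts = Counter(label for _, label in rows)
    total = len(rows)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def information_gain(rows, attribute):
    """Assumed attribute selection measure (not named in the source)."""
    partitions = {}
    for features, label in rows:
        partitions.setdefault(features[attribute], []).append((features, label))
    remainder = sum(len(p) / len(rows) * entropy(p) for p in partitions.values())
    return entropy(rows) - remainder


def generate_decision_tree(rows, attributes, domains):
    labels = {label for _, label in rows}
    if len(labels) == 1:                 # all tuples share one class
        return labels.pop()
    if not attributes:                   # no attributes left: majority voting
        return majority_class(rows)
    best = max(attributes, key=lambda a: information_gain(rows, a))
    remaining = [a for a in attributes if a != best]  # remove splitting attribute
    node = {"attribute": best, "branches": {}}
    for value in domains[best]:          # one branch per outcome
        subset = [(f, l) for f, l in rows if f[best] == value]
        if not subset:                   # empty partition: majority class of D
            node["branches"][value] = majority_class(rows)
        else:
            node["branches"][value] = generate_decision_tree(
                subset, remaining, domains)
    return node


def classify(tree, features):
    """Follow branches from the root until a leaf (a class label) is reached."""
    while isinstance(tree, dict):
        tree = tree["branches"][features[tree["attribute"]]]
    return tree


training = [
    ({"outlook": "sunny", "windy": "no"}, "play"),
    ({"outlook": "sunny", "windy": "yes"}, "play"),
    ({"outlook": "sunny", "windy": "yes"}, "play"),
    ({"outlook": "rainy", "windy": "no"}, "play"),
    ({"outlook": "rainy", "windy": "yes"}, "stay"),
    ({"outlook": "rainy", "windy": "yes"}, "stay"),
]
domains = {"outlook": ["sunny", "rainy", "overcast"], "windy": ["no", "yes"]}
tree = generate_decision_tree(training, ["outlook", "windy"], domains)
```

Leaves are plain class labels and internal nodes are dictionaries, so `classify(tree, {"outlook": "rainy", "windy": "yes"})` walks from the root test to a leaf. The `overcast` value has no training tuples, which exercises the empty-partition step.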

Bayesian Classification

Bayesian classification uses Bayes' theorem, which is used for finding conditional probabilities. Bayes' theorem gives the posterior probability of a hypothesis H conditioned on the observed data X: P(H|X) = P(X|H) P(H) / P(X).
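As a worked example, Bayes' theorem, P(H|X) = P(X|H) P(H) / P(X), can be evaluated directly once P(X) is expanded with the law of total probability. The function name and the probabilities below are made up for illustration.

```python
def posterior(prior_h, likelihood_given_h, likelihood_given_not_h):
    """Return P(H|X) from P(H), P(X|H), and P(X|not H)."""
    # Law of total probability: P(X) = P(X|H)P(H) + P(X|not H)P(not H)
    evidence = (likelihood_given_h * prior_h
                + likelihood_given_not_h * (1 - prior_h))
    return likelihood_given_h * prior_h / evidence


# Illustrative numbers: P(H) = 0.3, P(X|H) = 0.8, P(X|not H) = 0.2
p_h_given_x = posterior(0.3, 0.8, 0.2)
```

Here P(X) = 0.8 × 0.3 + 0.2 × 0.7 = 0.38, so P(H|X) = 0.24 / 0.38, or roughly 0.63: observing X raises the probability of H from 0.3 to about 0.63.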

Prediction

Prediction can be viewed as a type of classification. It predicts a future state, rather than a current state, based on current and past data. The term refers to a type of application rather than to a type of data mining approach. Example:

Regression

Regression represents reality by using a system of equations. It is used to map a data item to a real-valued prediction variable. Regression assumes that the target data fit some known type of function. Some type of error analysis is used to determine which function is the best. Regression is also used for data smoothing.

There are two types of regression: linear regression and multiple regression.

In linear regression, y = wx + b, where y and x are numeric database attributes and w and b are the regression coefficients. The coefficients can also be expressed as weights: y = w0 + w1 x.
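The coefficients w and b are commonly estimated with the method of least squares. The transcript does not name an estimation method, so treat that choice, the function name, and the sample data below as assumptions made for illustration.

```python
def fit_linear_regression(xs, ys):
    """Least-squares estimates of w and b in y = w*x + b."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical; slope is undefined")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    w = sxy / sxx              # slope
    b = mean_y - w * mean_x    # intercept
    return w, b


# Sample points that lie exactly on y = 2x + 1.
w, b = fit_linear_regression([1, 2, 3, 4], [3, 5, 7, 9])
```

For these points the fit recovers w = 2 and b = 1. With noisy data the same formulas give the line that minimizes the sum of squared errors.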

Multiple Regression

Multiple linear regression is an extension of linear regression. It allows the response variable y to be modeled as a linear function of two or more predictor variables.
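A small pure-Python sketch of multiple linear regression follows. It assumes a least-squares fit obtained by solving the normal equations with Gauss-Jordan elimination; the function name and the sample data are illustrative, and a real project would normally rely on a numerical library instead.

```python
def fit_multiple_regression(xs, ys):
    """Least-squares weights [w0, w1, ..., wn] for y = w0 + w1*x1 + ... + wn*xn."""
    rows = [[1.0] + list(x) for x in xs]      # leading 1.0 models the intercept w0
    n = len(rows[0])
    # Augmented matrix for the normal equations (X^T X) w = X^T y.
    a = [
        [sum(r[i] * r[j] for r in rows) for j in range(n)]
        + [sum(r[i] * y for r, y in zip(rows, ys))]
        for i in range(n)
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        if abs(a[col][col]) < 1e-12:
            raise ValueError("predictors are linearly dependent")
        for r in range(n):
            if r != col:
                factor = a[r][col] / a[col][col]
                a[r] = [v - factor * p for v, p in zip(a[r], a[col])]
    return [a[i][n] / a[i][i] for i in range(n)]


# Sample data generated from y = 1 + 2*x1 + 3*x2.
predictors = [(1, 1), (2, 1), (1, 2), (3, 2), (2, 3)]
responses = [6, 8, 9, 13, 14]
weights = fit_multiple_regression(predictors, responses)
```

With two predictors the result has three entries: the intercept followed by one weight per predictor, here approximately 1, 2, and 3.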

Clustering

Clustering is the process of grouping data into classes or clusters. It is alternatively referred to as unsupervised learning or segmentation. Clustering partitions the data into subsets. A special type of clustering is called segmentation.

Requirements of Clustering in Data Mining

Scalability; ability to deal with different types of attributes; discovery of clusters with arbitrary shape; minimal requirements for domain knowledge to determine input parameters; ability to deal with noisy data; incremental clustering and insensitivity to the order of input records; high dimensionality; constraint-based clustering; interpretability and usability.

Applications

Market research, pattern recognition, data analysis, image processing, biology, geography, and outlier detection.

Clustering Methods

Partitioning methods, hierarchical methods, density-based methods, and grid-based methods.
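As an illustration of a partitioning method, here is a minimal k-means sketch. The transcript does not name a specific algorithm, so k-means is an assumed example. The initial centroids are passed in explicitly to keep the run deterministic, and the sample points are made up.

```python
import math


def k_means(points, centroids, iterations=10):
    """Assign points to the nearest centroid, then recompute the centroids.

    Assumes iterations >= 1. Returns the final centroids and clusters.
    """
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)),
                          key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # New centroid = mean of the cluster; keep the old one if it is empty.
        new_centroids = [
            tuple(sum(c) / len(cluster) for c in zip(*cluster))
            if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
        if new_centroids == centroids:   # assignments have stabilized
            break
        centroids = new_centroids
    return centroids, clusters


points = [(1, 1), (1, 2), (2, 1), (8, 8), (9, 8), (8, 9)]
centroids, clusters = k_means(points, [(1, 1), (8, 8)])
```

The six sample points form two well-separated groups, so the loop settles after two passes with three points in each cluster. In practice the result depends on the initial centroids, which are usually chosen at random and tried several times.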