Challenges and Solutions in Scaling GWAS for Bioinformaticians

This session explores the challenges of scaling genome-wide association studies (GWAS) to millions of samples, covering FastPCA, TeraStructure, and imputation with Eagle. It also covers working with summary statistics, illustrated by a journal discussion of PrediXcan, and outlines concepts and examples for tackling computational bottlenecks in large-scale analysis.

Presentation Transcript

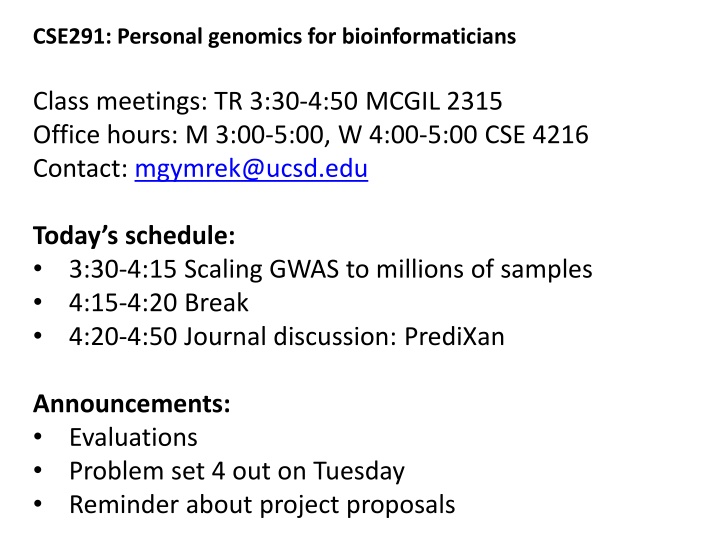

CSE291: Personal genomics for bioinformaticians
- Class meetings: TR 3:30-4:50, MCGIL 2315
- Office hours: M 3:00-5:00, W 4:00-5:00, CSE 4216
- Contact: mgymrek@ucsd.edu

Today's schedule:
- 3:30-4:15 Scaling GWAS to millions of samples
- 4:15-4:20 Break
- 4:20-4:50 Journal discussion: PrediXcan

Announcements:
- Evaluations
- Problem set 4 out on Tuesday
- Reminder about project proposals

Scaling GWAS to millions of samples CSE291: Personal Genomics for Bioinformaticians 02/09/17

Outline
- Challenges in scaling GWAS
- Example: FastPCA
- Example: TeraStructure
- Example: Imputation with Eagle
- Working with summary statistics
- PrediXcan (journal discussion)

Pipeline overview Reed et al. 2015

Big data comes with new challenges

Logistical challenges
- Raw genotype datasets are in the gigabyte to terabyte range
- Often not all the data is stored in the same place
- Often there is no legal permission to share raw data across multiple analysis centers

Computational bottlenecks
- Imputation
- Principal components analysis: $O(MN^2 + N^3)$ run time
- Linear mixed model analysis: $O(MN^2 + N^3)$ run time, $O(N^2)$ memory
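To make the $O(N^2)$ memory figure concrete, the following back-of-the-envelope sketch estimates the size of a dense N x N matrix over samples. It assumes 8-byte floating-point entries and no compression; the function name is illustrative only.

```python
def dense_matrix_bytes(n_samples: int, bytes_per_entry: int = 8) -> int:
    """Bytes needed to hold a dense n_samples x n_samples matrix."""
    return n_samples * n_samples * bytes_per_entry


for n in (10_000, 100_000, 1_000_000):
    terabytes = dense_matrix_bytes(n) / 1e12  # decimal terabytes
    print(f"N = {n:>9,}: {terabytes:.4f} TB")
```

Under these assumptions, a million samples already require about 8 TB for the matrix alone, which is why methods that avoid forming it are attractive.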

Concepts for scaling GWAS

Randomization/Approximation
- Randomly sample data to get an approximate answer
- e.g. use randomized algorithms to approximate the top PCs

Heuristics/Approximation
- Devise rules that are mostly right and speed up your algorithm
- e.g. during imputation, only consider the most relevant reference haplotypes

Using summary statistics
- Work with summary information, like p-values and effect sizes, rather than raw genotype data
- e.g. imputation, fine-mapping, meta-analyses

Traditional PCA is slow
- $G = \mathrm{Cov}(X^T) = X^T X / n$
- Eigendecomposition of the GRM
- Run time: $O(MN^2 + N^3)$ (forming the GRM costs $O(MN^2)$, its eigendecomposition $O(N^3)$)
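The two costly steps can be seen in a minimal NumPy sketch. It assumes the genotype matrix is stored as M SNPs by N samples, standardizes each SNP, and divides by the number of SNPs; that scaling is a common convention assumed here and does not change the eigenvectors. The function name and toy data are illustrative.

```python
import numpy as np


def traditional_pca(genotypes: np.ndarray, n_pcs: int = 2) -> np.ndarray:
    """Top sample principal components via the GRM.

    genotypes: M x N array of 0/1/2 allele counts (M SNPs, N samples).
    Returns an N x n_pcs array of eigenvectors, largest eigenvalue first.
    """
    # Drop monomorphic SNPs, then center and scale each SNP (row).
    keep = genotypes.std(axis=1) > 0
    x = genotypes[keep].astype(float)
    x = (x - x.mean(axis=1, keepdims=True)) / x.std(axis=1, keepdims=True)

    grm = x.T @ x / x.shape[0]               # N x N matrix, O(M N^2)
    eigvals, eigvecs = np.linalg.eigh(grm)   # O(N^3), ascending eigenvalues
    order = np.argsort(eigvals)[::-1][:n_pcs]
    return eigvecs[:, order]


rng = np.random.default_rng(0)
toy = rng.integers(0, 3, size=(500, 40))     # 500 SNPs, 40 samples
pcs = traditional_pca(toy, n_pcs=2)
print(pcs.shape)
```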

FastPCA computational tricks

Power iteration
- If you multiply a random vector by a square matrix, each component of the vector along one of the matrix's eigenvectors is scaled by the corresponding eigenvalue
- After repeated multiplications, the projection along the first eigenvector grows fastest

blanczos method
- Estimate (approximately) more PCs than desired using the power iteration method
- Project genotypes onto this set of eigenvectors to reduce dimensionality while preserving the top PCs
- Do traditional PCA on the reduced matrix

Galinsky et al. 2016
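The two ideas above can be sketched in NumPy. This is a simplified randomized subspace iteration in the spirit of those steps, not the exact blanczos procedure and not FastPCA's implementation; the function names, the number of extra vectors, and the iteration counts are assumptions chosen for illustration.

```python
import numpy as np


def power_iteration(matrix: np.ndarray, n_iter: int = 200, seed: int = 0) -> np.ndarray:
    """Approximate the leading eigenvector of a symmetric matrix."""
    rng = np.random.default_rng(seed)
    v = rng.standard_normal(matrix.shape[0])
    for _ in range(n_iter):
        v = matrix @ v              # each eigen-component scales by its eigenvalue
        v /= np.linalg.norm(v)      # renormalize to avoid overflow
    return v


def randomized_top_pcs(x: np.ndarray, n_pcs: int, extra: int = 10,
                       n_iter: int = 10, seed: int = 0) -> np.ndarray:
    """Approximate top sample PCs of a normalized M x N matrix x."""
    rng = np.random.default_rng(seed)
    # Start from more random vectors than the number of PCs wanted.
    q = rng.standard_normal((x.shape[1], n_pcs + extra))
    for _ in range(n_iter):
        # Block power step with X^T X, without ever forming the N x N matrix.
        q, _ = np.linalg.qr(x.T @ (x @ q))
    # Project onto the small subspace and do exact PCA there.
    small = x @ q                                   # M x (n_pcs + extra)
    _, _, vt = np.linalg.svd(small, full_matrices=False)
    return (q @ vt.T)[:, :n_pcs]                    # N x n_pcs


rng = np.random.default_rng(1)
toy = rng.standard_normal((300, 50))                # stand-in for normalized genotypes
print(randomized_top_pcs(toy, n_pcs=3).shape)
```

Each block step costs on the order of M x N per vector, which is where the linear scaling in the number of samples comes from.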

Performance comparison of PCA methods
- Traditional PCA: $O(MN^2 + N^3)$
- flashPCA: $O(MN^2)$
- FastPCA: $O(MN)$

Galinsky et al. 2016

Imputation bottlenecks
- Imputation run time grows with the number of samples and the number of markers
- More samples means more states in our HMM, so run time explodes
- The number of possible haplotypes can grow even superlinearly with the number of samples

Some solutions:
- Prephase individuals before imputation
- Cluster haplotypes into a reduced state space
- Parallelize over small regions of the genome

Galinsky et al. 2016

Long-range phasing using shared IBD tracts

Long-range phasing (LRP): look for long IBD tracts shared among related individuals. Phase inference in these regions is straightforward.

Advantages:
- Highly accurate phasing and imputation, even for rare variants

Disadvantages:
- Requires a cohort consisting of a large fraction of the population (e.g. Iceland pedigrees)
- Requires phasing first to find IBD (slow)
- Poor performance in more general settings

Eagle: LRP + conventional phasing

The Eagle approach combines:
1. Long-range phasing (LRP): make initial phase calls using long (>4 cM) tracts of IBD sharing in related individuals (back to ~12 generations)
   - Uses a new fast IBD scanning approach: first look for long stretches with agreement at homozygous sites, then score regions for consistency with expectation under IBD
2. Hidden Markov model: use an approximate HMM to refine phase calls
   - Aggressively prune the state space to use up to 80 local reference haplotypes

Loh et al. 2016
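The first stage of the IBD scan (long stretches with agreement at homozygous sites) can be illustrated with a toy function. This is a simplified sketch, not Eagle's implementation: it measures length in number of sites rather than in cM, skips the subsequent scoring step, and uses a hypothetical function name and threshold.

```python
from typing import List, Tuple


def candidate_ibd_segments(g1: List[int], g2: List[int],
                           min_sites: int) -> List[Tuple[int, int]]:
    """Return (start, end) index pairs, end exclusive, of runs in which two
    0/1/2 genotype vectors never carry opposite homozygous genotypes."""
    if len(g1) != len(g2):
        raise ValueError("genotype vectors must have the same length")
    segments = []
    start = 0
    for i, (a, b) in enumerate(zip(g1, g2)):
        if {a, b} == {0, 2}:            # opposite homozygotes share no allele
            if i - start >= min_sites:
                segments.append((start, i))
            start = i + 1               # restart the run after the conflict
    if len(g1) - start >= min_sites:
        segments.append((start, len(g1)))
    return segments


person_a = [0, 1, 2, 0, 0, 2, 1]
person_b = [0, 1, 2, 2, 0, 2, 1]
print(candidate_ibd_segments(person_a, person_b, min_sites=3))
```

Because the check only needs unphased genotypes, it can run before any phasing has been done, which is what makes it usable as a fast first pass.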

Eagle algorithm Loh et al. 2016

Eagle performance Loh et al. 2016

Eagle summary

Advantages:
- Far faster than existing tools on large (e.g. >100,000) cohorts
- More memory efficient than other methods
- Comparable switch error rates

Disadvantages:
- Requires cohorts consisting of a significant portion of the entire population for accurate IBD mapping
- Pure HMM-based methods may be more accurate in local regions

Recommendation: prephase with Eagle, then switch to SHAPEIT2 in regions of the genome lacking IBD chunks

Loh et al. 2016

Eagle 2
- For smaller cohorts (e.g. <100,000), the statistical phasing approach of Eagle is limited by the amount of data available
- Eagle 2 uses reference-based phasing, relying on an external reference haplotype panel

Key improvements:
- A new data structure based on the positional Burrows-Wheeler transform to store reference haplotypes
- A rapid search algorithm exploring only the most relevant paths through the HMM

Loh et al. 2016
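For readers unfamiliar with the positional Burrows-Wheeler transform, the sketch below shows only its core idea: keeping haplotypes sorted by their reversed prefixes with one stable 0/1 partition per site, so that haplotypes matching over a recent stretch end up adjacent. It assumes biallelic 0/1 haplotypes and does not represent Eagle 2's actual data structure; the function name is illustrative.

```python
from typing import List, Sequence


def pbwt_order(haplotypes: Sequence[Sequence[int]]) -> List[int]:
    """Haplotype indices sorted by reversed prefix after the last site."""
    order = list(range(len(haplotypes)))
    n_sites = len(haplotypes[0]) if haplotypes else 0
    for k in range(n_sites):
        # Stable partition: carriers of allele 0 at site k come first.
        zeros = [i for i in order if haplotypes[i][k] == 0]
        ones = [i for i in order if haplotypes[i][k] == 1]
        order = zeros + ones
    return order


panel = [
    [0, 1, 0],
    [1, 1, 0],
    [0, 0, 1],
    [1, 1, 0],
    [0, 1, 0],
]
print(pbwt_order(panel))
```

Each site is processed in time linear in the number of haplotypes, and identical haplotypes (here 0 and 4, and 1 and 3) land next to each other in the final order.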

Eagle 2 Loh et al. 2016

Eagle 2 Loh et al. 2016

Working with summary statistics

What are summary statistics?

Individual-level data: a matrix of 0/1/2 genotype values, for example 0 1 0 0 1 0 0 0 1 0 0 0 1 0 2

Summary statistics, per SNP:
- Effect size ($\beta$)
- Std. error $SE(\beta)$
- P-value ($p$)

Summary statistics:
- Greatly reduce data size
- Alleviate privacy concerns
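As an illustration of how individual-level data collapse into the three numbers above, here is a minimal single-SNP sketch. It assumes a quantitative trait, simple linear regression without covariates, and a normal approximation for the p-value; the toy genotypes and phenotypes are made up.

```python
import math
from typing import Sequence, Tuple


def snp_summary_stats(genotypes: Sequence[float],
                      phenotypes: Sequence[float]) -> Tuple[float, float, float]:
    """Regress phenotype on genotype for one SNP.

    Returns (effect size beta, standard error, two-sided p-value).
    """
    n = len(genotypes)
    mean_g = sum(genotypes) / n
    mean_y = sum(phenotypes) / n
    sxx = sum((g - mean_g) ** 2 for g in genotypes)
    sxy = sum((g - mean_g) * (y - mean_y) for g, y in zip(genotypes, phenotypes))
    beta = sxy / sxx
    intercept = mean_y - beta * mean_g
    rss = sum((y - intercept - beta * g) ** 2 for g, y in zip(genotypes, phenotypes))
    se = math.sqrt(rss / (n - 2) / sxx)
    z = beta / se
    p = math.erfc(abs(z) / math.sqrt(2))    # normal approximation to the t-test
    return beta, se, p


toy_genotypes = [0, 1, 2, 0, 1, 2, 0, 1]
toy_phenotypes = [0.3, 0.9, 2.4, -0.2, 1.3, 1.8, 0.1, 0.7]
beta, se, p = snp_summary_stats(toy_genotypes, toy_phenotypes)
print(f"beta={beta:.3f} se={se:.3f} p={p:.2e}")
```

However many samples go in, only three numbers per SNP come out, which is what makes the results small and easier to share.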

Meta-analysis: combining GWAS studies

Three diabetes GWAS: #1 UCSD, #2 Broad Institute, #3 Sanger. Cohort sizes shown: 5,000 cases and 7,000 controls; 20,000 cases and 40,000 controls; 2,000 cases and 4,000 controls.

- Mega-analysis: re-analyze the pooled individual-level data (genotype matrices of 0/1/2 values from every study); 27,000 cases, 51,000 controls
- Meta-analysis: jointly analyze summary statistics, i.e. $\beta_{j,s}$, $SE(\beta_{j,s})$, $p_{j,s}$ for each SNP $j$ and each study $s$ (UCSD, Broad, Sanger); 27,000 cases, 51,000 controls

Meta-analysis: combining GWAS studies

Inputs: $\beta_{j,s}$, $SE(\beta_{j,s})$, $p_{j,s}$ for SNPs $j = 1, \dots, n$ and studies $s$ (UCSD, Broad, Sanger).

Fisher's method
- Null hypothesis: all effects are 0
- Alternative hypothesis: at least one effect != 0
- Based on the sum of log(p) across studies: $X^2 = -2 \sum_i \ln p_i$, which is chi-squared with $2k$ degrees of freedom under the null for $k$ independent studies
- Doesn't take into account direction of effect

Z-score based methods
- $Z_i = \beta_i / SE(\beta_i)$
- Take a (weighted) average of Z-scores
- Takes into account direction of effect

Fixed-effects meta-analysis
- Assume the same effect size in each study
- Inverse-variance weighting: each study is weighted by the inverse of its squared standard error
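The three approaches can be compared side by side in a short sketch for a single SNP. It assumes independent studies; the choice of weights for the weighted Z-score (for example the square root of each study's sample size) is left to the caller, and the numbers in the example are invented.

```python
import math
from typing import Sequence, Tuple


def fisher_combined_p(p_values: Sequence[float]) -> float:
    """Fisher's method: X = -2 * sum(ln p) is chi-squared with 2k d.f."""
    k = len(p_values)
    half_x = -sum(math.log(p) for p in p_values)    # equals X / 2
    # Closed-form chi-squared survival function for even degrees of freedom.
    return math.exp(-half_x) * sum(half_x ** i / math.factorial(i) for i in range(k))


def weighted_z(z_scores: Sequence[float], weights: Sequence[float]) -> float:
    """Weighted Z-score, rescaled to unit variance under the null."""
    numerator = sum(w * z for w, z in zip(weights, z_scores))
    return numerator / math.sqrt(sum(w * w for w in weights))


def fixed_effects_ivw(betas: Sequence[float],
                      ses: Sequence[float]) -> Tuple[float, float, float]:
    """Inverse-variance-weighted effect, its standard error, and Z-score."""
    weights = [1.0 / se ** 2 for se in ses]
    beta = sum(w * b for w, b in zip(weights, betas)) / sum(weights)
    se = math.sqrt(1.0 / sum(weights))
    return beta, se, beta / se


# Invented per-study results for one SNP.
betas, ses = [0.10, 0.12, 0.08], [0.05, 0.03, 0.08]
print(fixed_effects_ivw(betas, ses))
print(weighted_z([b / s for b, s in zip(betas, ses)], weights=[1.0, 1.0, 1.0]))
print(fisher_combined_p([0.04, 0.0001, 0.3]))
```

Note that Fisher's method would give the same answer if one study's effect had the opposite sign, whereas the two Z-based combinations would shrink toward zero.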

Imputation from summary statistics

Traditional imputation requires individual-level data and is computationally expensive.

Reference haplotype panel (e.g. 1000 Genomes):
- AACACAAGCTAGCTACCTACGTAGCTACAT
- AACACAAGCTAGCTACCTACGTAGGTACAT
- AACACTAGCTAGCTACCTATGTAGGTACAT
- AACACTAGCAAGCTACCTACGTAGGTACAT
- AACACTAGCAAGCTACCTACGTAGGTACGT

Your data genotyped on a SNP chip (? marks untyped positions):
- AACACTAGC?AGCTACCTA?GTAGGTAC?T
- AACACAAGC?AGCTACCTA?GTAGGTAC?T
- AACACTAGC?AGCTACCTA?GTAGGTAC?T
- AACACTAGC?AGCTACCTA?GTAGGTAC?T
- AACACAAGC?AGCTACCTA?GTAGCTAC?T

Imputation from summary statistics
- Linkage disequilibrium induces correlation in the effect sizes of nearby SNPs
- Model z-scores as multivariate normal, with covariance defined by LD (the pairwise correlation $r$ between SNPs, estimated from a reference panel)
- Captures ~80% of the information compared to using individual-level data
- Which reference panel to use?
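Under the multivariate normal model, an untyped SNP's z-score can be predicted as the conditional mean given the typed z-scores. The sketch below shows that calculation only. The LD matrices are assumed to come from a reference panel, the ridge term is an assumed regularization to keep the solve stable, and the function name and numbers are illustrative.

```python
import numpy as np


def impute_z(z_typed: np.ndarray, ld_typed: np.ndarray,
             ld_untyped_typed: np.ndarray, ridge: float = 0.1) -> np.ndarray:
    """Conditional mean of untyped z-scores given typed z-scores.

    z_typed:          length-T vector of observed z-scores.
    ld_typed:         T x T LD correlation matrix among typed SNPs.
    ld_untyped_typed: U x T LD correlations between untyped and typed SNPs.
    """
    regularized = ld_typed + ridge * np.eye(ld_typed.shape[0])
    return ld_untyped_typed @ np.linalg.solve(regularized, z_typed)


z_typed = np.array([4.0, 3.5])
ld_typed = np.array([[1.0, 0.6],
                     [0.6, 1.0]])
ld_untyped_typed = np.array([[0.8, 0.7]])   # one untyped SNP
print(impute_z(z_typed, ld_typed, ld_untyped_typed))
```

No genotypes from the study itself are needed: only the observed z-scores and LD estimated elsewhere, which is why the choice of reference panel matters.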

Rare variant association tests
- Association tests for individual rare variants are likely to be underpowered
- On the other hand, mutations under strong negative selection are likely to be rare

Burden test: aggregate rare variants by some category, e.g. genes
- Assumes each SNP has the same direction of effect
- $T_{\text{burden}} = w^T Z$, where $w$ gives the weight of each SNP and $Z$ gives the z-score of each SNP
- Meta-analysis of burden tests using summary statistics via inverse-variance weighting (i.e. upweight statistics with the lowest variance)

Over-dispersion test: assumes rare variants in the same candidate gene can affect a complex trait in either direction
- $T_{\text{overdispersion}} = Z^T W Z$, where $W$ is a matrix of SNP weights
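A small numerical example shows why the two statistics behave differently when effects point in opposite directions. It only evaluates the two formulas; the z-scores are invented, and unit weights (a vector of ones and an identity matrix) are an assumption made for simplicity.

```python
import numpy as np


def burden_statistic(z: np.ndarray, w: np.ndarray) -> float:
    """T = w^T Z: weighted sum of z-scores, so opposite signs cancel."""
    return float(w @ z)


def overdispersion_statistic(z: np.ndarray, w_matrix: np.ndarray) -> float:
    """T = Z^T W Z: quadratic form, so the sign of each z-score is ignored."""
    return float(z @ w_matrix @ z)


z_scores = np.array([2.0, -2.0, 1.0])       # invented rare-variant z-scores
print(burden_statistic(z_scores, np.ones(3)))
print(overdispersion_statistic(z_scores, np.eye(3)))
```

With these values the burden statistic is 1.0 because the first two variants cancel, while the quadratic form is 9.0 because every squared z-score contributes.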

Fine-mapping using summary statistics

Fine-mapping aims to identify the causal variant(s) driving a GWAS signal.
- Method 1: Prioritize variants based on p-value
- Method 2: Compute the posterior probability of causality, based on the likelihood of the observed data conditioned on a given SNP being causal
- Method 3: Use method 2, but weight SNPs based on functional data (e.g. chromatin, expression, other annotations)
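One common way to turn summary statistics into posterior probabilities, sketched here under explicit assumptions, is to compute an approximate Bayes factor per SNP and normalize across the region. The sketch assumes exactly one causal variant in the region, uses Wakefield's approximate Bayes factor, and picks an arbitrary prior effect-size standard deviation of 0.2. The optional `priors` argument stands in for annotation-based weights as in method 3. None of this is claimed to be the procedure of any specific tool.

```python
import math
from typing import List, Optional, Sequence


def posterior_causal_probabilities(z_scores: Sequence[float],
                                   ses: Sequence[float],
                                   priors: Optional[Sequence[float]] = None,
                                   prior_sd: float = 0.2) -> List[float]:
    """Per-SNP posterior probability of being the single causal variant."""
    if priors is None:
        priors = [1.0] * len(z_scores)      # method 2: equal prior weights
    log_terms = []
    for z, se, prior in zip(z_scores, ses, priors):
        shrink = prior_sd ** 2 / (se ** 2 + prior_sd ** 2)
        # log of the approximate Bayes factor: sqrt(1 - shrink) * exp(z^2 * shrink / 2)
        log_bf = 0.5 * math.log(1.0 - shrink) + 0.5 * z * z * shrink
        log_terms.append(log_bf + math.log(prior))
    top = max(log_terms)                    # subtract the max for stability
    weights = [math.exp(t - top) for t in log_terms]
    total = sum(weights)
    return [w / total for w in weights]


z = [5.2, 4.9, 2.0]
se = [0.05, 0.05, 0.05]
print(posterior_causal_probabilities(z, se))
print(posterior_causal_probabilities(z, se, priors=[1.0, 5.0, 1.0]))
```

The second call shows how a functional prior can move the top-ranked SNP even when its p-value is not the smallest.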

PAINTOR: Fine-mapping using summary statistics Kichaev et al. 2014

Partitioning heritability using LD-score regression
- See Bulik-Sullivan et al., Nature Genetics 2015, for more on LD-score regression
- Much faster than LMM-based variance partitioning, and doesn't require individual-level data!

Finucane et al. 2015

Cross-trait analyses
- See Bulik-Sullivan et al., Nature Genetics 2015, for more on LD-score regression

Bulik-Sullivan et al. 2015

Summary
- Using a reference transcriptome panel (e.g. GTEx), build a model to predict the genetically regulated component of gene expression (GReX)
- Using the learned effect sizes, impute gene expression values into the GWAS cohort, for which expression data isn't usually available
- Test for association between gene expression and disease
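The three steps above can be sketched end to end on simulated data. This is a toy illustration, not the published method: ridge regression is swapped in as a simple stand-in for the prediction model, the trait is quantitative, and all names, sizes, and effect values are invented.

```python
import numpy as np


def fit_expression_weights(ref_genotypes: np.ndarray, ref_expression: np.ndarray,
                           ridge: float = 1.0) -> np.ndarray:
    """Step 1: learn SNP weights predicting expression (samples x SNPs input)."""
    x = ref_genotypes - ref_genotypes.mean(axis=0)
    y = ref_expression - ref_expression.mean()
    return np.linalg.solve(x.T @ x + ridge * np.eye(x.shape[1]), x.T @ y)


def impute_expression(genotypes: np.ndarray, weights: np.ndarray) -> np.ndarray:
    """Step 2: predict the genetically regulated expression in a new cohort."""
    return (genotypes - genotypes.mean(axis=0)) @ weights


def association_t(predicted_expression: np.ndarray, phenotype: np.ndarray) -> float:
    """Step 3: t-statistic for correlation between predicted expression and trait."""
    r = np.corrcoef(predicted_expression, phenotype)[0, 1]
    n = len(phenotype)
    return float(r * np.sqrt(n - 2) / np.sqrt(1 - r ** 2))


rng = np.random.default_rng(0)
true_weights = np.array([0.5, 0.0, -0.3, 0.0, 0.2])          # invented effects

# Reference panel: genotypes and measured expression.
ref_g = rng.integers(0, 3, size=(300, 5)).astype(float)
ref_expr = ref_g @ true_weights + rng.normal(0, 0.1, size=300)
weights = fit_expression_weights(ref_g, ref_expr)

# GWAS cohort: genotypes and phenotype only, no expression data.
gwas_g = rng.integers(0, 3, size=(1000, 5)).astype(float)
phenotype = gwas_g @ true_weights + rng.normal(0, 1.0, size=1000)
print(association_t(impute_expression(gwas_g, weights), phenotype))
```

The test is run once per gene rather than once per SNP, and the sign of the statistic indicates whether higher or lower predicted expression goes with the trait.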

Why this paper?
- Example of the utility of summary statistic data
- Example of successfully integrating data from multiple studies
- Example of a way to gain biological insight from GWAS without necessarily having to collect a lot more data

Advantages over vanilla GWAS
- Directly tests functional units (genes vs. SNPs)
- Greatly reduces the multiple hypothesis testing burden
- Tissue relevance: can impute expression for different tissue types and measure which one is most correlated with the trait
- Direction of effect: not only which gene is involved, but whether under- or over-expression leads to disease
- Doesn't require transcriptome data from your cohort