Advances in Online Learning with Vector Costs and Bandits with Knapsacks

Explore cutting-edge research on online learning algorithms dealing with vector costs and bandits, including applications in load balancing and bandits with knapsacks. The studies cover topics such as regret minimization, minimizing vector costs, and maximizing rewards while maximizing budget constraints. Researchers delve into optimizing actions with stochastic/adversarial costs to achieve sub-linear regret and effective online decision-making strategies.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

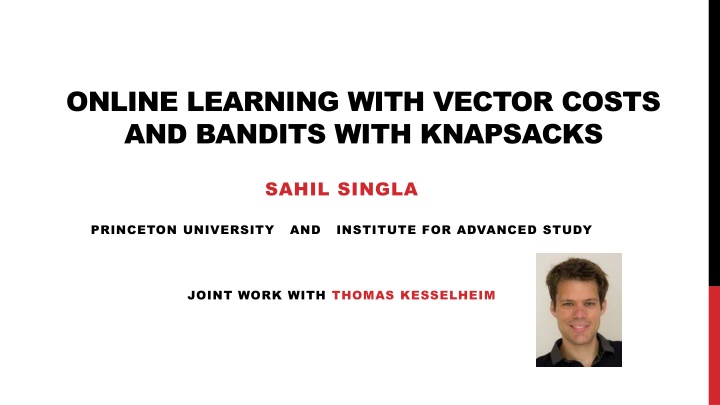

ONLINE LEARNING WITH VECTOR COSTS AND BANDITS WITH KNAPSACKS SAHIL SINGLA PRINCETON UNIVERSITY AND INSTITUTE FOR ADVANCED STUDY JOINT WORK WITH THOMAS KESSELHEIM

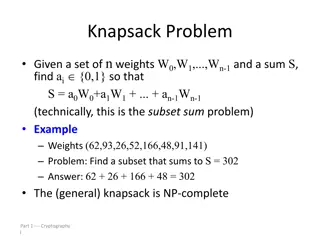

CLASSICAL ONLINE LEARNING ?(t)= t T time steps and n actions ci 1 n Each time t {1, ,T}, algorithm selects ?(t) n t [0,1] for action i {1, ,n} Stochastic/Adversarial costs ci Algorithm incurs (expected) scalar cost ?(t)?(t) We know Regret at most Full-feedback: O( T log n) Hedge Partial-feedback: O( nT log n) Exp3.PAuer et al. 02 Full or Partial Feedback Freund-Schapire 99 Observe ?(t)or just ?(t)?(t) Goal is to minimize t?(t)?(t), in particular What if the costs were vectors in ??? ?????? = t?(t)?(t) min? n t?(t)? ALG OPT 2

ONLINE LEARNING WITH VECTOR COSTS Ttime steps and nactions t ?(t)= Ci Each time t {1, ,T}, algorithm selects ?(t) n t 0,1d for action i {1, ,n} Stochastic/Adversarial costs Ci Algorithm incurs (expected) vector costC(t)?(t) d n Full or Partial Feedback What can be done: Full or Partial feedback? Stochastic or adversarial input? Observe C(t) or just C(t)?(?) Goal is to minimize tC(t)?(t) , in particular Sub-linear regret even possible? ?????? = tC(t)?(t) min? n tC(t)? We also study with ? norm OPT ALG 3

MOTIVATION VIA ONLINE LOAD BALANCING Online Generalized/Vector Load Balancing Awerbuch et al. 95, Chekuri-Khanna 04, Azar et al. 13, Meyerson et al. 13, Im et al. 15, Molinaro 17, Azar et al. 18 Tjobs and nways of scheduling each job Stochastic/adversarial vector costs for job t {1, ,T} Ci Algorithm selects ?(t) n t 0,1d for each action i {1, ,n} ? norm objective also widely studied Want to minimize tC(t)?(t) d machines T jobs Online Algorithm Online Learning 1. Costs revealed before action 1. Costs (partially) revealed after action 2. Compare to optimal policy 2. Compare to fixed distribution over actions 4

MOTIVATION VIA BANDITS WITH KNAPSACKS Badanidiyuru-Kleinberg-Slivkins 13 Immorlica-Sankararaman-Schapire-Slivkins 19 Maximization Variant of the Problem: T steps and n actions. Step t {1, ,T}, algorithm selects ?(t) n t 0,1d for action i {1, ,n} Stochastic/Adversarial costs Ci Algorithm incurs (expected) vector costC(t)?(t) Algorithm receives scalar reward?(t)?(t) for ?(t) 0,1n Several applications: 1. Dynamic pricing with limited inventory 2. Ad placement with bidder budgets Full or Partial Feedback Observe (C(t), ?(t)) or just (C(t)?(?), ?(t)?(t)) Goal is to maximize t?(t)?(t)while satisfying budget tC(t)?(t) B, particularly More generally, ? norm B ?(t)? t?(t)?(t) ?????? = max? n t=1 5 OPT ALG

MAIN RESULTS Stated results assume algorithm knows OPT THM [KESSELHEIM-S]: ONLINE LEARNINGWITH VECTOR COSTS: STOCHASTIC ARRIVAL: Sublinear regret competitive means tC(t)?(t) OPT+o(T) ADVERSARIAL ARRIVAL: ?(???{?,??? ?}) competitive Remark: Stochastic results also follow from Agrawal-Devanur 14 THM [KESSELHEIM-S]: BANDITSWITH KNAPSACKS: competitive means t?(t)?(t) OPT o(T) STOCHASTIC ARRIVAL: Sublinear regret ADVERSARIAL ARRIVAL: ?(???{?,??? ?}) competitive Remark: Stochastic results also in Badanidiyuru-Kleinberg-Slivkins 13 Remark: For adversarial setting, O(d) competitive in Immorlica-Sankararaman-Schapire-Slivkins 19 6

OUTLINE INTRODUCTION: LOAD BALANCING & BANDITSWITH KNAPSACKS OUR APPROACHUSINGA SURROGATE SCALAR PROBLEM ONLINE LEARNINGWITH VECTOR COSTS BANDITSWITH KNAPSACKS 7

WHY SIMPLE GREEDY ALGORITHMS FAIL? Recall Setting ?????? = tC(t)?(t) min? n tC(t)? ALG OPT Suppose greedy w.r.t. change in . Consider this example Only two actions: Action (1) costs (1 ) in every dimension for 0 Action (2) costs 1 in a randomly chosen dimension Better to select Action (2), but greedy selects Action (1) Competitive ratio (d) How to improve to ??? ?? 8

MAIN IDEAS ? p ( 1 + p 1)Molinaro 17 p Idea 1: Smooth potential ? 1 ln( iexp( i)) Additive approx:For any ? ? 0 d, ? ? ? +ln d Contour Maps Gradient stability:For any ? 0,1d, ? + ? 1 + ? Idea 2: A surrogatescalar problem t ?t 1,Ctei Reduce vector costs to scalar costs:ci Use classical no-regret algorithm: Reduction oblivious to feedback model How to relate surrogate to actual problem? 9

OUTLINE INTRODUCTION: LOAD BALANCING & BANDITSWITH KNAPSACKS OUR APPROACHUSINGA SURROGATE SCALAR PROBLEM ONLINE LEARNINGWITH VECTOR COSTS BANDITSWITH KNAPSACKS 10

WORKING WITH From ? 1 ln( iexp( i)) we have t ?t 1,Ctei T ?T= ?0 ?(T) ?t ?t 1 ci + t=1 ln d T ctx(t) + (1 + ) t=1 by gradient stability We could use no-regret property to bound T T ctx(t) t=1 ctx + Regret t=1 Adaptive Adversary But surrogate cost ctdepends on algorithm s load ?t 1 How to relate our cost to optimal solution? Our trajectory could be very different from that of the optimal solution 11

MAIN LEMMA ln d T ?(T) ctx(t) + 1 + t=1 How to relate surrogate cost to the optimal solution? MAIN LEMMA [KESSELHEIM-S]: 1 STOCHASTIC ( = OPT):? t=1 T T ? Ct? ctx(t) Molinaro 17 t=1 + Regret 1 5 2 tC(t)? T ADVERSARIAL ( = 5 OPT): t=1 ctx(t) Caragiannis 08 + 2 Regret ln d T Stochastic: ? ?(T) ? Ctx + 1 + t=1 + 1 + Regret 2 ln d OPT + OPT + Regret ln d +5 Adversarial: + 2 Regret ?(T) 2 tC(t)? O(ln d OPT) + 2 Regret 12

OUTLINE INTRODUCTION: LOAD BALANCING & BANDITSWITH KNAPSACKS OUR APPROACHUSINGA SURROGATE SCALAR PROBLEM ONLINE LEARNINGWITH VECTOR COSTS BANDITSWITH KNAPSACKS 13

MAIN IDEAS: BANDITS WITH KNAPSACKS Lagrangify constraints to reduce to Classical Online Learning t ?tei ci t t ?t 1 ?within budget Immorlica et al. 19 Ctei i ci Adversarial: ? Compare to scaled-down distribution log d to ensure feasibility Consider two cases: whether algorithm runs out of budget or not Stochastic Add dummy coordinate0 for time budget consumed Badanidiyuru et al. 13 Works for norm but changes problem for ? norm Define for ? ? 0 d+1, new norm ?p, (x0, (x1, ,xd)p) 14

CONCLUSION Online Learning with Vector Costs and Bandits with Knapsacks: Stochastic: Adversarial: Sub-linear regret O min p,log d competitive ratio which is tight Techniques: Use a smooth potential Reduce to a surrogate scalar problem Open Problems: Can we get best-of-both adversarial and stochastic guarantees? THANKS FOR LISTENING! 15

MAIN LEMMA How to relate OPT to the optimal solution of surrogate scalar problem? LEMMA STOCHASTIC: FORI.I.D. COSTSC(t) [0,1]d n, ? ?t 1 Ctx ?[Ctx ] T T ADVERSARIAL: t=1 t 1 exp OPT 1 ( 1 + t=1 Ctx(t) t=1 ?t 1 T Ctx Ctx(t)+1 ?t 1 ) Wrapping Up: ln d T T Stochastic: ? ?(T) t 1 Ctxt] 1 + t=1 ?[Ctx ] 1 + ?[ t=1 17