OBPMark and OBPMark-ML: Computational Benchmarks for Space Applications

OBPMark and OBPMark-ML are computational benchmarks developed by ESA and BSC/UPC for on-board data processing and machine learning in space applications. These benchmarks aim to standardize performance comparison across different processing devices, identify key parameters, and provide recommendatio

10 views • 20 slides

Crash Course in Supercomputing: Understanding Parallelism and MPI Concepts

Delve into the world of supercomputing with a crash course covering parallelism, MPI, OpenMP, and hybrid programming. Learn about dividing tasks for efficient execution, exploring parallelization strategies, and the benefits of working smarter, not harder. Discover how everyday activities, such as p

0 views • 157 slides

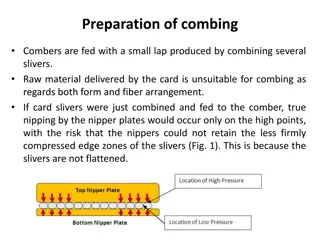

Understanding Material Preparation for Combing Process in Textile Manufacturing

Material preparation plays a crucial role in the combing process in textile manufacturing. It involves feeding combers with a small lap, ensuring fibers are evenly arranged and parallelized for efficient combing operation. Lack of parallelization can lead to fiber loss and poor quality output. Prope

2 views • 18 slides

Factors Affecting Combing Fibre Length & Uniformity in Textile Industry

Play a critical role in deciding the combing performance. Factors include short fibre content, fibre stiffness, moisture content, fiber fineness, and foreign material. Material preparation such as fiber parallelization, sheet thickness, and evenness is crucial. Machine conditions, speeds, operation,

0 views • 11 slides

Understanding MapReduce and Hadoop: Processing Big Data Efficiently

MapReduce is a powerful model for processing massive amounts of data in parallel through distributed systems like Apache Hadoop. This technology, popularized by Google, enables automatic parallelization and fault tolerance, allowing for efficient data processing at scale. Learn about the motivation

2 views • 33 slides

Exploring GPU Parallelization for 2D Convolution Optimization

Our project focuses on enhancing the efficiency of 2D convolutions by implementing parallelization with GPUs. We delve into the significance of convolutions, strategies for parallelization, challenges faced, and the outcomes achieved. Through comparing direct convolution to Fast Fourier Transform (F

0 views • 29 slides

Understanding MapReduce for Large Data Processing

MapReduce is a system designed for distributed processing of large datasets, providing automatic parallelization, fault tolerance, and clean abstraction for programmers. It allows for easy writing of distributed programs with built-in reliability on large clusters. Despite its popularity in the late

0 views • 52 slides

Enhancing Web Search Latency with DDS Prediction

This presentation delves into DDS Prediction, a technique designed to reduce extreme tail latency in web search engines by optimizing query execution times and parallelizing specific queries. It addresses the challenges of improving latency for all users and emphasizes the importance of achieving hi

0 views • 31 slides

Understanding C++ Parallelization and Synchronization

Explore the challenges of race conditions in C++ multithreading, from basic demonstrations to advanced scenarios. Delve into C++11 features like atomic operations, memory ordering, and synchronization primitives to create efficient and thread-safe applications.

0 views • 51 slides

Enhancing Parallelization in Software Transactional Memory Systems

Explore how merge semantics in STMs enable speculative parallelization, addressing irregular parallelism challenges. The research covers connected components, parallel applications, and the utilization of speculation for optimized execution in diverse computing scenarios.

0 views • 43 slides

Understanding Parallel Sorting Algorithms and Amdahl's Law

Exploring the concepts of parallel sorting algorithms, analyzing parallel programs, divide and conquer algorithms, parallel speed-up, estimating running time on multiple processors, and understanding Amdahl's Law in parallel computing. The content covers key measures of run-time, divide and conquer

1 views • 40 slides

Microarchitectural Performance Characterization of Irregular GPU Kernels

GPUs are widely used for high-performance computing, but irregular algorithms pose challenges for parallelization. This study delves into the microarchitectural aspects affecting GPU performance, emphasizing best practices to optimize irregular GPU kernels. The impact of branch divergence, memory co

0 views • 26 slides

Understanding Data Dependencies in Nested Loops

Studying data dependencies in nested loops is crucial for optimizing code performance. The analysis involves assessing dependencies across loop iterations, iteration numbers, iteration vectors, and loop nests. Dependencies in loop nests are determined by iteration vectors, memory accesses, and write

0 views • 15 slides

Understanding C++ Parallelization and Synchronization Techniques

Explore the challenges of race conditions in parallel programming, learn how to handle shared states in separate threads, and discover advanced synchronization methods in C++. Delve into features from C++11 to C++20, including atomic operations, synchronization primitives, and coordination types. Un

0 views • 48 slides

Developing MPI Programs with Domain Decomposition

Domain decomposition is a parallelization method used for developing MPI programs by partitioning the domain into portions and assigning them to different processes. Three common ways of partitioning are block, cyclic, and block-cyclic, each with its own communication requirements. Considerations fo

0 views • 19 slides

Technical Tasks and Contributions in Plasma Physics Research

This collection of reports details the technical tasks undertaken in 2022 by AMU in managing interfaces with ACH for Eiron and IMAS, as well as contributions to EIRENE_unified. It also discusses the parallelization of rate coefficient calculations and the implementation of MPI parallelisation for ef

0 views • 10 slides

Shared-Memory Computing with Open MP

Shared-memory computing with Open MP offers a parallel programming model that is portable and scalable across shared-memory architectures. It allows for incremental parallelization, compiler-based thread program generation, and synchronization. Open MP pragmas help in parallelizing individual comput

0 views • 25 slides

Efficient Parallelization Techniques for GPU Ray Tracing

Dive into the world of real-time ray tracing with part 2 of this series, focusing on parallelizing your ray tracer for optimal performance. Explore the essentials needed before GPU ray tracing, handle materials, textures, and mesh files efficiently, and understand the complexities of rendering trian

0 views • 159 slides

Multi-threaded Active Objects: Issues and Solutions

The document delves into the realm of multi-threaded active objects, exploring their principles, limitations, related works, and solutions. It covers topics such as asynchronous method calls, first-class futures, and the risks associated with active objects. Additionally, it compares various approac

0 views • 29 slides

Understanding Functional Programming with Higher-order Functions

Functional programming emphasizes the use of pure functions and higher-order functions to achieve benefits such as predictability, testability, and parallelization. By focusing on avoiding side effects and emphasizing function purity, developers can create more maintainable and scalable code. Learn

0 views • 37 slides

Development of EIRENE-NGM for Neutral Gas Dynamics in Fusion Reactors

EIRENE-NGM project focuses on enhancing the neutral gas dynamics model for fusion reactor simulations, including efficient HPC utilization, physics basis refinement, database improvement, interface development, and predictive capability validation. Collaborators from various institutes aim to create

0 views • 17 slides