Optimizing Inference Time by Utilizing External Memory on STM32Cube for AI Applications

The user is exploring ways to reduce inference time by storing initial weight and bias tables in external Q-SPI flash memory and transferring them to SDRAM for AI applications on STM32Cube. They have questions regarding the performance differences between internal flash memory and external memory, read latency of internal flash memory, impact of using external SDRAM on inference time, and steps to program and read/write data to external QSPI memory and SDRAM using X-cube-ai.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. Download presentation by click this link. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

E N D

Presentation Transcript

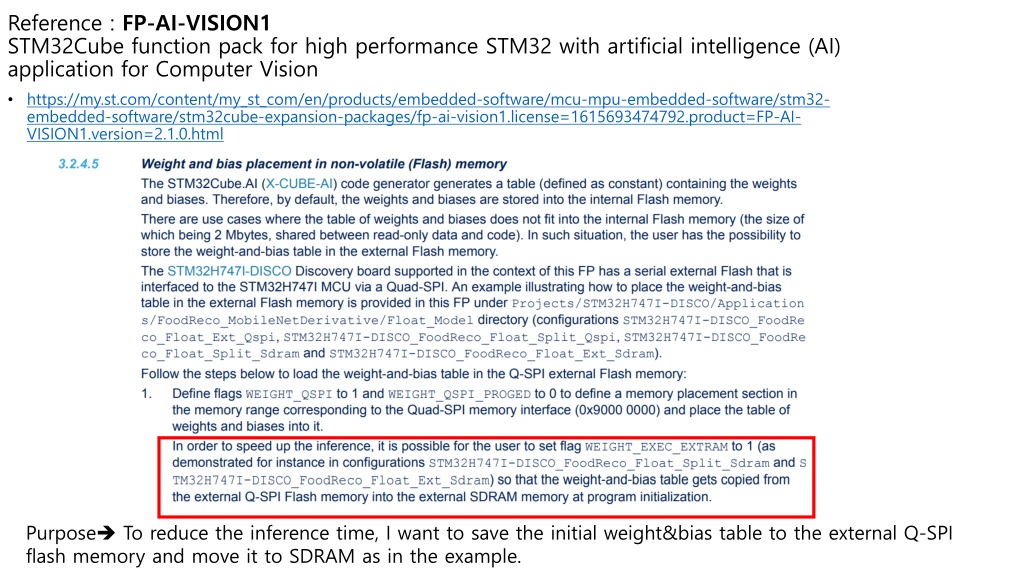

Reference : FP-AI-VISION1 STM32Cube function pack for high performance STM32 with artificial intelligence (AI) application for Computer Vision https://my.st.com/content/my_st_com/en/products/embedded-software/mcu-mpu-embedded-software/stm32- embedded-software/stm32cube-expansion-packages/fp-ai-vision1.license=1615693474792.product=FP-AI- VISION1.version=2.1.0.html Purpose To reduce the inference time, I want to save the initial weight&bias table to the external Q-SPI flash memory and move it to SDRAM as in the example.

Question1 : The inference time is faster when it is placed in the external memory when the data type is float, but in the table12, internal flash memory shows faster results , I don t understand why.

Reference : user manual UM2526 internal flash memory read Q2 : In general, what clock delay is the read time latency of the internal flash memory? Q3 : If I use external SDRAM for read operation, can I expect to decrease inference time? And How much ? Clock cycles / MACC is too slow that I expected.

To summarize the above questions, what I have checked through the current data is that I am using X-cube-ai version 6.0.0 for the 32bit floating point model (the model data type I am currently using), so I want to read data from external SDRAM in order to reduce inference time. I know that it is optimized so that the inference time is faster than reading from the internal flash memory. Currently, the model I trained is Keras 2.3.0, which is not compatible with X-cube-ai 5.0.0. In order to verify the above mentioned steps, what I am currently curious about is how to program and read/write the data required for external QSPI memory and SDRAM. I would like to know a series of steps from setting up pins in cube mx to generating code to the IDE , how to run it in the IDE and verifying that it is properly programmed. Thanks.