Understanding Inductive Bias in Machine Learning

Machine learning models rely on inductive bias, which are the assumptions made by algorithms to generalize from training data to unseen instances. Occam's Razor is a common example of inductive bias, favoring simpler hypotheses over complex ones. This bias helps algorithms make predictions and handle novel cases effectively, highlighting the importance of generalization in machine learning.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. Download presentation by click this link. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

E N D

Presentation Transcript

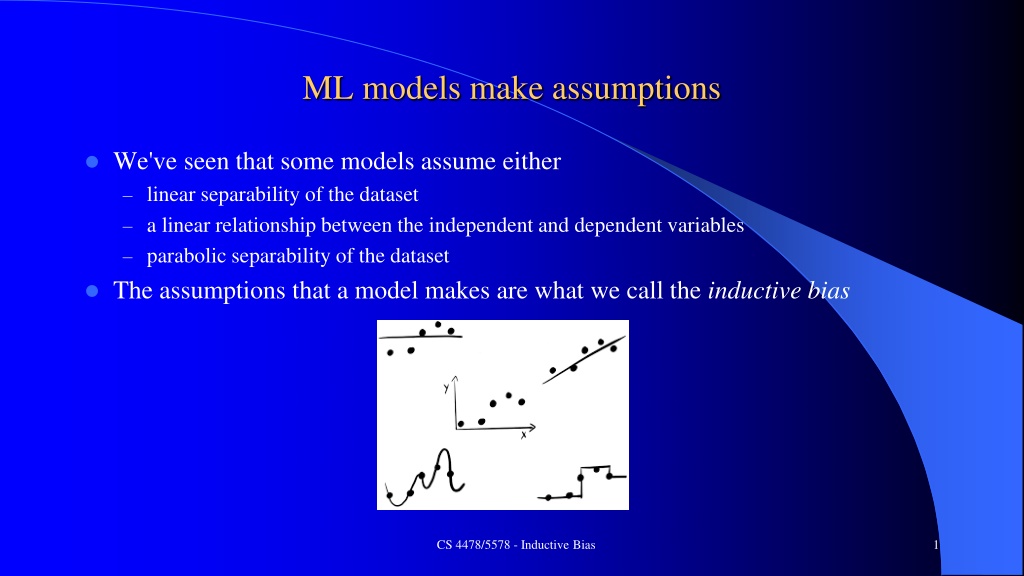

ML models make assumptions We've seen that some models assume either linear separability of the dataset a linear relationship between the independent and dependent variables parabolic separability of the dataset The assumptions that a model makes are what we call the inductive bias CS 4478/5578 - Inductive Bias 1

Wikipedia: Inductive Bias In machine learning, one aims to construct algorithms that are able to learn to predict a certain target output. To achieve this, the learning algorithm is presented some training examples that demonstrate the intended relation of input and output values. Then the learner is supposed to approximate the correct output, even for examples that have not been shown during training. Without any additional assumptions, this problem cannot be solved exactly since unseen situations might have an arbitrary output value. The kind of necessary assumptions about the nature of the target function are subsumed in the phrase inductive bias. A classical example of an inductive bias is Occam's razor, assuming that the simplest consistent hypothesis about the target function is actually the best. Here consistent means that the hypothesis of the learner yields correct outputs for all of the examples that have been given to the algorithm. CS 4478/5578 - Inductive Bias 2

Inductive Bias The approach used to decide how to generalize novel cases One common approach is Occam s Razor The simplest hypothesis which explains/fits the data is usually the best ABC Z AB C Z ABC Z AB C Z A B C Z A BC ? When you get the new input B C. What is your output? Many other rationale biases and variations CS 4478/5578 - Inductive Bias 3

One Definition for Inductive Bias Inductive Bias: Any basis for choosing one generalization over another, other than strict consistency with the observed training instances Sometimes just called the Bias of the algorithm (don't confuse with the bias weight in a neural network) CS 4478/5578 - Inductive Bias 4

Overfitting Noise vs. Exceptions CS 4478/5578 - Inductive Bias 5

Non-Linear Tasks Linear Regression? Inappropriate inductive bias for the dataset below Needs a non-linear surface Could do a feature pre-process as with the quadric machine For example, we could use an arbitrary polynomial in x Thus it is still linear in the coefficients, and can be solved with delta rule, etc. Y = b0+b1X +b2X2+ +bnXn What order polynomial should we use? Overfit issues can occur CS 4478/5578 Inductive Bias 6

Why do we need bias in the first place? Example: Assume we have n Boolean input features Then there are 22n possible Boolean functions (i.e., possible mappings) x1 0 0 0 0 1 1 1 1 x2 0 0 1 1 0 0 1 1 x3 0 1 0 1 0 1 0 1 Class 1 1 1 1 ? Possible Consistent Function Hypotheses CS 4478/5578 - Inductive Bias 7

Why do we need bias in the first place? Example: Assume we have n Boolean input features Then there are 22n possible Boolean functions (i.e., possible mappings) x1 0 0 0 0 1 1 1 1 x2 0 0 1 1 0 0 1 1 x3 0 1 0 1 0 1 0 1 Class 1 1 1 1 ? Possible Consistent Function Hypotheses 1 1 1 1 0 0 0 0 CS 4478/5578 - Inductive Bias 8

Why do we need bias in the first place? Example: Assume we have n Boolean input features Then there are 22n possible Boolean functions (i.e., possible mappings) x1 0 0 0 0 1 1 1 1 x2 0 0 1 1 0 0 1 1 x3 0 1 0 1 0 1 0 1 Class 1 1 1 1 ? Possible Consistent Function Hypotheses 1 1 1 1 1 1 1 1 0 0 0 0 0 0 0 1 CS 4478/5578 - Inductive Bias 9

Why do we need bias in the first place? Example: Assume we have n Boolean input features Then there are 22n possible Boolean functions (i.e., possible mappings) x1 0 0 0 0 1 1 1 1 x2 0 0 1 1 0 0 1 1 x3 0 1 0 1 0 1 0 1 Class 1 1 1 1 ? Possible Consistent Function Hypotheses 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 0 0 0 0 0 0 0 0 1 1 1 1 1 1 1 1 0 0 0 0 1 1 1 1 0 0 0 0 1 1 1 1 0 0 1 1 0 0 1 1 0 0 1 1 0 0 1 1 0 1 0 1 0 1 0 1 0 1 0 1 0 1 0 1 Without an Inductive Bias we have no rationale to choose one hypothesis over another and thus a random guess would be as good as any other option CS 4478/5578 - Inductive Bias 10

Why do we need bias in the first place? Example: Assume we have n Boolean input features Then there are 22n possible Boolean functions (i.e., possible mappings) x1 0 0 0 0 1 1 1 1 x2 0 0 1 1 0 0 1 1 x3 0 1 0 1 0 1 0 1 Class 1 1 1 1 ? Possible Consistent Function Hypotheses 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 0 0 0 0 0 0 0 0 1 1 1 1 1 1 1 1 0 0 0 0 1 1 1 1 0 0 0 0 1 1 1 1 0 0 1 1 0 0 1 1 0 0 1 1 0 0 1 1 0 1 0 1 0 1 0 1 0 1 0 1 0 1 0 1 Class always = 1 Class always = !x1 Inductive Bias guides which hypothesis we should prefer What happens in this case if we use simplicity (Occam s Razor) as our inductive Bias? E.g., Most common class OR smallest set of vars with explanatory correlation CS 4478/5578 - Inductive Bias 11

Regression Regularization Fitness(hypothesis) How to avoid overfit Keep the model simple For regression, keep the function smooth Inductive bias is that f(x) f(x ) no sudden changes with changes in x Regularization approach: F(h) = Error(h) + Complexity(h) Tradeoff accuracy vs complexity Ridge Regression Minimize: F(w) = SSE(w) + ||w||2 = (predictedi actuali)2 + wi2 Gradient of F(w): Dwi=c(t-net)xi-lwi (Weight decay) Useful when: Features are a non-linear transform from the initial features (e.g., polynomials in x) There are more features than examples Lasso regression uses an L1 instead an L2 weight penalty: F(w) = SEE(w) + wi CS 4478/5578 - Regression 12

Hypothesis Space The Hypothesis space H is the set of all the possible models h which can be learned by the current learning algorithm e.g. Set of possible weight settings for a perceptron Using a restricted hypothesis space (e.g., only lower-order polynomials): Can be easier to search May avoid overfit since they are usually simpler (e.g., linear or low order decision surface) Often will underfit Unrestricted Hypothesis Space Can represent any possible function and thus can fit the training set well Mechanisms must be used to avoid overfit CS 4478/5578 - Inductive Bias 13

Weight values are representation of hypothesis space Error Landscape SSE: Sum Squared Error (ti zi)2 0 Weight Values CS 4478/5578 - Perceptrons 14

Regularization = Avoiding Overfit Regularization: any modification we make to a learning algorithm that is intended to reduce its generalization error but not its training error Occam s Razor William of Ockham (c. 1287-1347) Simplest accurate model: accuracy vs. complexity trade-off. Find h H which minimizes an objective function of the form: F(h) = Error(h) + Complexity(h) Complexity could be # of nodes, magnitude of weights, order of decision surface, etc. Augmenting training data for regularization (vs. overtraining on same data) Fake data (can be very effective, jitter, but take care ) Add random noise to inputs during training Adding noise to nodes, weights, outputs, etc. E.g., dropout (discuss with ensembles) Most common regularization approach: Early Stopping Start with simple model (small parameters/weights) and stop training as soon as we attain good generalization accuracy (before parameters get large) Use a validation Set (next slide: requires separate test set) CS 4478/5578 - Inductive Bias 15

Stopping/Model Selection with Validation Set SSE Validation Set Training Set Epochs (new h at each) There is a different model h after each epoch Select a model in the area where the validation set accuracy flattens When no improvement occurs over m epochs The validation set comes out of training set data Still need a separate test set to use after selecting model h to predict future accuracy Simple and unobtrusive, does not change objective function, etc Can be done in parallel on a separate processor Can be used alone or in conjunction with other regularization methods CS 4478/5578 - Inductive Bias 16

No Free Lunch Theorem Any inductive bias chosen will have equal accuracy compared to any other bias over all possible functions/tasks, assuming all functions are equally likely. If a bias is correct on some cases, it must be incorrect on equally many cases. Are all functions equally likely in the real world? CS 4478/5578 - Inductive Bias 20

Which Bias is Best? Studies on Bias have shown there is not one Bias that is best on all problems Over 50 real world problems Over 400 inductive biases mostly variations on critical variable biases vs. similarity biases Different biases were a better fit for different problems Given a data set, which Learning model (Inductive Bias) should be chosen? CS 4478/5578 - Inductive Bias 22

ML Holy Grail: We want all aspects of the learning mechanism automated, including the Inductive Bias Outputs Just a Data Set or just an explanation of the problem Automated Learner Hypothesis Input Features CS 4478/5578 - Inductive Bias 26